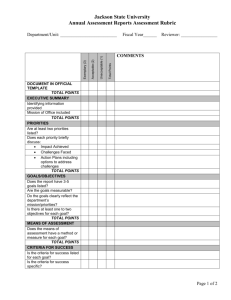



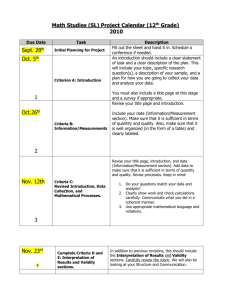

Changes in Student and Teacher Attitudes and Behaviors in an Integrated High School Curriculum
Presented by: Nicolle Gottfried & Catherine Saldutti

Background
- Design of an integrated, standards- and inquiry-based 2-year Biology and Chemistry program
- Use of research-based best practices
- In cooperation with: teachers, students, Teachers College, Columbia, and The Rockefeller University
- Piloted in 2 public schools in New York City
- Teachers of varying levels of experience
- Students representing diverse needs

Background, cont.
Evaluation of the pilot test included many data sources:
- Classroom observations
- Interviews of teachers, administrators and students
- Written student and teacher reflections
- Review of student work and Regents test scores
- Pre- and post-participation questionnaires, including an attitudinal battery

Our Approach
- Multiple sources of data designed to provide a mosaic of understanding
- To be used both formatively and summatively
- All data were collected by EduChange and Gottfried, and reported anonymously

Data Analysis
- Data analyzed in aggregate and by teacher, school and ethnicity
- Qualitative data and observations reported as notable trends, quotes and describable behavioral changes
- Quantitative data (e.g., survey responses and reflections) categorized, analyzed and reported as percentages and means
- These data are compared with reasonably matched non-participant normative data for New York City students, when available

Key Findings
- Student and teacher attitudes toward program participation change over 2 years in a predictable pattern

[Figure: Model of Student and Teacher Attitude Change. Series: Teacher, Student. Vertical axis: 0 to 100. Horizontal axis: Fall 1st Year, Spring 1st Year, Fall 2nd Year, Spring 2nd Year.]

Key Findings, cont.
- Students in the program perform on par with or better than matched counterparts on the Regents Living Environment (biology) exams
- Teachers improve relative to “best practices,” in parallel with student improvements
- Students demonstrate sophisticated conceptual learning, scientific habits of mind, and an understanding of how to facilitate their own learning

“When I felt confused, I tended to just tune everything out. When I’m confused now, I should ask questions in class.”

Additional Questions?
Contact: Nicolle Gottfried (nmgottfried@sbcglobal.net), Catherine Saldutti (catherine@educhange.com)

Assessing Discrete Inquiry Skills Using a Classroom Laboratory Rubric: Six Student Case Studies
Presented by: Catherine Saldutti & Nicolle Gottfried

Six Case Studies
- 6 students who had participated in both years of the pilot program and had most of the requested labs available (rubric-based, teacher-selected)
- 2 high-achieving students in laboratory write-ups
- 2 mid-achieving students
- 2 low-achieving students
- Representing School A and School B

Study Focus
- All lab write-ups assessed using a 4-point rubric designed to address performance levels of inquiry habits
- Same rubric criteria and performance levels over 2 years, with several opportunities to revisit these inquiry habits in different laboratory contexts
- Case studies: evaluation of a portfolio of lab write-ups, Fall 2002 to present
- Focused on 3 of 18 rubric criteria

Criterion #1: Understanding the Purpose of the Experiment

| Criterion | Insufficient Evidence | Approaches Standard | Achieves Standard | Exceeds Standard (Achieves Standard plus…) |
|---|---|---|---|---|
| Understanding the Purpose of the Experiment (Introduction) | 1B. Does not explain the main purpose clearly OR does not use own words | 1B. Explains the main purpose of the experiment clearly in own words | 1B. Explains the main purpose of the experiment clearly in own words, including how we will know if the purpose has been achieved | 1B. Identifies 2 or more additional purposes for conducting the experiment |

Criterion #2: Understanding the Design of the Experiment

| Criterion | Insufficient Evidence | Approaches Standard | Achieves Standard | Exceeds Standard (Achieves Standard plus…) |
|---|---|---|---|---|
| Understanding the Design of the Experiment (Materials and Methods) | 4. Predicts 1 or 2 sources of error but does not provide logical explanations | 4. Predicts one source of error when conducting the experiment, explaining it logically | 4. Logically predicts 2 sources of error when conducting the experiment | 4. Proposes 2 or more ways to ensure that the experiment is conducted safely and accurately |

Criterion #3: Analyzing and Interpreting Data

| Criterion | Insufficient Evidence | Approaches Standard | Achieves Standard | Exceeds Standard (Achieves Standard plus…) |
|---|---|---|---|---|
| Analyzing and Interpreting Data (Analysis and Discussion) | 4. Offers 1 new experimental question or purpose that is not directly related to outcomes of this experience | 4. Offers 1 new experimental question or purpose directly related to outcomes of this experience | 4. Offers 2 new experimental questions or purposes directly related to outcomes of this experience | 4. Takes one of the new experimental questions and outlines a design for a new experiment |

Preliminary Findings
- A longitudinal assessment system that isolates discrete inquiry habits of mind, regardless of the laboratory context, helps students improve over time
- Purpose: more detailed descriptions; tighter connections
- Error: the notion of inherent error is difficult and requires a “cognitive leap”
- Further experimental questions: loosely related to the lab at first; mid- and high-level students move toward connections to results

Additional Questions?
Contact: Catherine Saldutti (catherine@educhange.com), Nicolle Gottfried (nmgottfried@sbcglobal.net)