Test Plan - University of Missouri


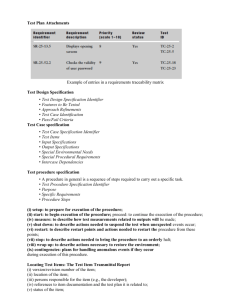

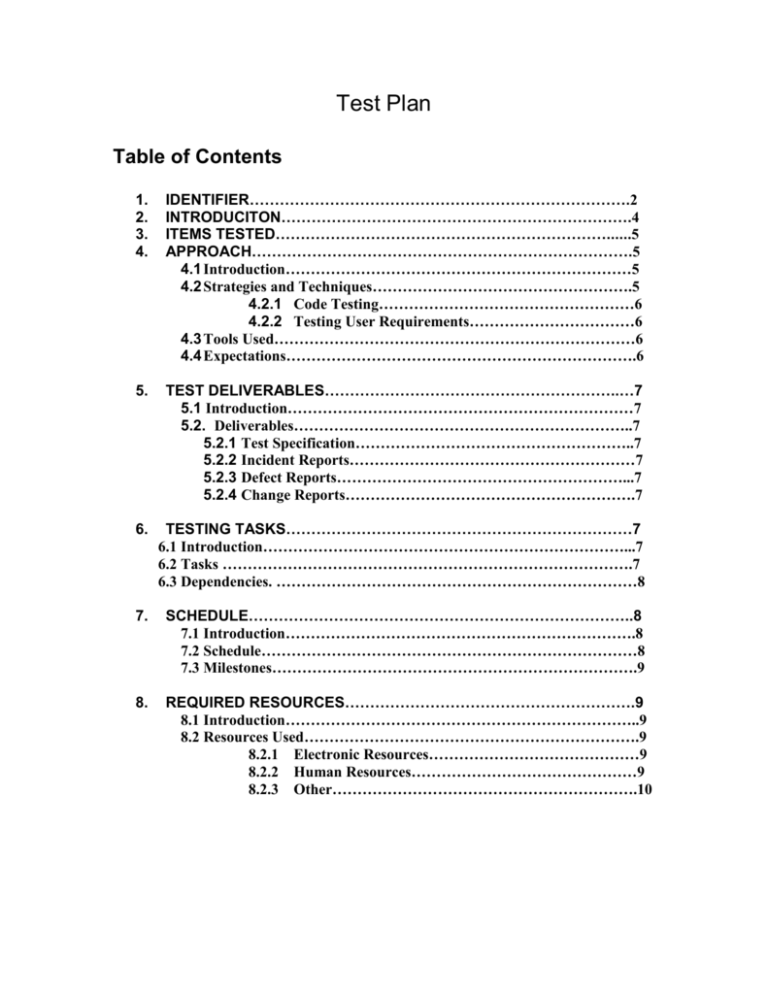

Table of Contents

1. IDENTIFIER
2. INTRODUCTION
3. ITEMS TESTED
4. APPROACH
   4.1 Introduction
   4.2 Strategies and Techniques
      4.2.1 Code Testing
      4.2.2 Testing User Requirements
   4.3 Tools Used
   4.4 Expectations
5. TEST DELIVERABLES
   5.1 Introduction
   5.2 Deliverables
      5.2.1 Test Specification
      5.2.2 Incident Reports
      5.2.3 Defect Reports
      5.2.4 Change Reports
6. TESTING TASKS
   6.1 Introduction
   6.2 Tasks
   6.3 Dependencies
7. SCHEDULE
   7.1 Introduction
   7.2 Schedule
   7.3 Milestones
8. REQUIRED RESOURCES
   8.1 Introduction
   8.2 Resources Used
      8.2.1 Electronic Resources
      8.2.2 Human Resources
      8.2.3 Other

Authors: Ryan Johnson, Sepideh Dehghani, Kam Walia, Jason Eastburn, Michael Nadrowski, Adnan Syed

1. IDENTIFIER

This standard describes a set of basic test documents that are associated with the dynamic aspects of software testing. The standard defines the purpose, outline, and content of each basic document. While the documents described in the standard focus on dynamic testing, several of them may be applicable to other testing activities. This standard is applied to HHN because it covers software that runs on digital computers of any type. Applicability is not restricted by the size, complexity, or criticality of the software.
However, the standard does not specify any class of software to which it must be applied. The standard addresses the documentation of both initial development testing and the testing of subsequent software releases. For the HHN software release, it may be applied to all phases of testing, from module testing through user acceptance. However, since not all of the basic test documents may be useful in each test phase, the particular documents to be used in a phase are not specified. Each organization using the standard will need to specify the classes of software to which it applies and the specific documents required for a particular test phase.

The standard does not call for specific testing methodologies, approaches, techniques, facilities, or tools, and does not specify the documentation of their use. The standard also does not imply or impose specific methodologies for documentation control, configuration management, or quality assurance. Additional documentation (e.g., a quality assurance plan) may be needed depending on the particular methodologies used.

Within each standard document, the content of each section (i.e., the text that covers the designated topics) may be tailored to the particular application and the particular testing phase. In addition to tailoring content, additional documents may be added to the basic set, additional sections may be added to any document, and additional content may be added to any section. It may be useful to organize some of the sections into subsections. Some or all of the contents of a section may be contained in another document, which is then referenced. Each organization using the standard should specify additional content requirements and conventions in order to reflect its own particular methodologies, approaches, facilities, and tools for testing, documentation control, configuration management, and quality assurance.

1.1 References

This standard shall be used in conjunction with the following publication:
IEEE Std 610.12-1990, IEEE Standard Glossary of Software Engineering Terminology.

1.2 Definitions

This clause contains key terms as they are used in this standard.

1.2.1 design level: The design decomposition of the software item (e.g., system, subsystem, program, or module).
1.2.2 pass/fail criteria: Decision rules used to determine whether a software item or a software feature passes or fails a test.
1.2.3 software feature: A distinguishing characteristic of a software item (e.g., performance, portability, or functionality).
1.2.4 software item: Source code, object code, job control code, control data, or a collection of these items.
1.2.5 test: (A) A set of one or more test cases, or (B) A set of one or more test procedures, or (C) A set of one or more test cases and procedures.
1.2.6 test case specification: A document specifying inputs, predicted results, and a set of execution conditions for a test item.
1.2.7 test design specification: A document specifying the details of the test approach for a software feature or combination of software features and identifying the associated tests.
1.2.8 test incident report: A document reporting on any event that occurs during the testing process which requires investigation.
1.2.9 testing: The process of analyzing a software item to detect the differences between existing and required conditions (that is, bugs) and to evaluate the features of the software item.
1.2.10 test item: A software item which is an object of testing.
1.2.11 test item transmittal report: A document identifying test items. It contains current status and location information.
1.2.12 test log: A chronological record of relevant details about the execution of tests.
1.2.13 test plan: A document describing the scope, approach, resources, and schedule of intended testing activities. It identifies test items, the features to be tested, the testing tasks, who will do each task, and any risks requiring contingency planning.
1.2.14 test procedure specification: A document specifying a sequence of actions for the execution of a test.
1.2.15 test summary report: A document summarizing testing activities and results. It also contains an evaluation of the corresponding test items.

2. INTRODUCTION

2.1 Objectives

A system test plan for HHN should support the following objectives:
1. To detail the activities required to prepare for and conduct the system test.
2. To communicate to all responsible parties the tasks that they are to perform and the schedule to be followed in performing the tasks.
3. To define the sources of the information used to prepare the plan.
4. To define the test tools and environment needed to conduct the system test.

2.2 Background

The Healthy Homes Network of Greater Kansas City (HHN) is a community-based, nonprofit organization dedicated to the promotion of safe and healthy homes. Over one hundred individuals, representing more than fifty nonprofit organizations, public agencies, universities, hospitals, and neighborhood groups, are collaborating to help residents of metropolitan Kansas City access information and services that will allow them to improve the quality of their lives. Working in conjunction with the staff at Children’s Mercy Hospital’s Center for Children’s Environmental Health, the HHN will identify children eligible for the grant, assess the environmental health of the home, survey the physical health of the child, and provide extensive, individualized education to the family. Local contractors will then perform low-cost repairs based on the results of the initial assessments and surveys. Six months after the completion of the repair work, assessments and surveys will be repeated and the results will be scientifically evaluated to determine the health impact of low-cost remediation coupled with in-home education.
In addition, the grant will allow the HHN to operate walk-in resource centers, a website, and a search-enabled database, as well as provide extensive outreach and training on a variety of topics. The University of Missouri-Kansas City and the Center for the City will provide planning and technology assistance toward the development of resource centers for residents who are interested in accessing information and services related to creating and maintaining safe and healthy homes.

3. ITEMS TESTED

Items tested are the specific work products to be tested. The specification will be the source for establishing expected results. The versions to be tested will be placed in the appropriate libraries by the configuration administrator. The administrator will also control changes to the versions under test and notify the test group when new versions are available.

The following documents will provide the basis for defining correct operation:
HHN system statement of Requirement Document
HHN system statement of Project Plan
HHN system statement of Architectural Document

3.1 FEATURES TO BE TESTED

The following list describes the features that will be tested:
Database conversion
Requirements Document
Architecture
Source Code
Security
Recovery
Performance

3.2 FEATURES NOT TO BE TESTED

All the features that will not be used when the system is initially installed will be included in this section.

4. APPROACH

4.1 Introduction

This section explains the strategies, techniques, and tools that were used during our tests. It also specifies the time and effort required to complete the tests, as well as our goals and expectations.

4.2 Strategies and Techniques

Our goal is to find defects. Whether we are testing a particular user requirement, performing a simple regression test, or testing a portion of the actual code, it is imperative that we follow a proper testing scenario. Different tests require different testing scenarios.
In this document, however, we will focus on the techniques and tools that we adopted during the tests performed by our tester and developers.

4.2.1 Code Testing

The time and effort put into testing the actual code varied widely and depended largely on the complexity of the code. For example, the ASP code that connects to the Access database was tested within 5 minutes. The “ADD” function, on the other hand, required thorough testing, including unit, integration, and regression testing, and took about as much time as writing the code itself. Our code-testing strategy was based largely on unit tests performed by the programmer, followed by regression tests using the client code.

4.2.2 Testing User Requirements

User requirements were first tested at the beginning of the project. The strategy we adopted for requirements testing was simple yet effective. It was based on informal meetings in which team members expressed their concerns and opinions about particular user requirements. Use cases were also thoroughly analyzed. The meetings usually lasted 30 minutes to 1 hour.

4.3 Tools Used

Code testing was performed using various software tools, including Macromedia Code Inspector and Microsoft Visual Studio .NET.

4.4 Expectations

We expect to find defects or potential defects. We proceed from the presumption that our software contains errors and that it is our job to find them as quickly as possible.

5. TEST DELIVERABLES

5.1 Introduction

This section outlines the main testing deliverables, including the test specification, incident reports, defect reports, and change reports.

5.2 Deliverables

The main deliverable of the Test Plan is this document itself. However, there are other important deliverables that provide valuable information.

5.2.1 Test Specification

Test specifications are included in a separate document titled Test Specification.
The Test Specification document outlines the Unique Identifiers, Items and Features Tested, Inputs, Expected Output, and Test Procedure.

5.2.2 Incident Reports

A test case failure creates an incident. The incident may or may not be caused by a defect. Incidents are detailed in the Test Specification document.

5.2.3 Defect Reports

Defect Reports, along with Incident Reports, are analyzed in the Test Specification document.

5.2.4 Change Reports

Changes to any report, including this one, will be made formally. A copy of the revised document will be distributed to group members, and the online version will be updated.

6. TESTING TASKS

6.1 Introduction

This section outlines the individual tasks required to complete the testing effort, including dependencies between tasks.

6.2 Tasks

Testing Task                                        Tester
Verifying User Requirements                         Requirement Engineer / Group
---Use Cases                                        Requirement Engineer / Group
Verifying Correctness of the Architecture Scheme    Architect
Testing Software Implementation                     Developers/Group
---ADD function                                     Developers/Group
---DELETE function                                  Developers/Group
---MODIFY function                                  Developers/Group
---Hit Counter                                      Developers/Group
---Database Implementation                          Developers/Group

Start date / Completion Date: 09/08/03, 09/11/03, 09/15/03, 09/25/03, 10/16/03, 11/06/03, 09/25/03, 09/25/03, 06/30/03, 09/29/03, 10/20/03, 09/25/03, 06/30/03, 07/09/03, 10/02/03, 10/23/03, 10/23/03, 10/30/03

6.3 Dependencies

There are a few dependencies worth noting. First, the User Requirements had to be verified before anything else. This was done by the Requirements Engineer, with support from the Group, during the first week of the project. The Use Cases were also thoroughly tested. Next, the Architect verified the correctness of the architecture scheme that the Group had pre-selected (a 3-tier architecture). Only then could we proceed to the Implementation phase. Code inspection was done mainly by the Developers and verified by the Group during the regression tests.

7. SCHEDULE

7.1 Introduction

This section outlines the schedule for completing the Test Cases outlined above. It also shows the amount of effort each task took, as well as the major milestones of the Testing Phase.

7.2 Schedule

Testing Tasks:
Verifying User Requirements
---Use Cases
Verifying Correctness of the Architecture Scheme
Testing Software Implementation
---ADD function
---DELETE function
---MODIFY function
---Hit Counter
---Database Implementation

Testing Effort (Prediction / Actual): Intermediate, Intermediate/Hard, Intermediate, Intermediate/Hard, Intermediate, Easy, Intermediate, Hard, Intermediate, N/A, N/A, Intermediate, Easy, Intermediate, N/A, N/A, Intermediate, Intermediate

Start date / Completion Date: 09/08/03, 09/11/03, 09/15/03, 09/25/03, 10/16/03, 11/06/03, 10/25/03, 10/14/03, N/A, N/A, 11/01/03, 11/10/03, 10/30/03, N/A, N/A, 11/10/03, 11/01/03, 11/10/03

7.3 Milestones

Major milestones include verifying the correctness of the User Requirements, verifying the architecture, and validating and verifying the source code.

8. REQUIRED RESOURCES

8.1 Introduction

This section outlines the resources that were used during the testing phase.

8.2 Resources Used

We divide our resources into three categories: Electronic Resources, Human Resources, and Other.

8.2.1 Electronic Resources

The most frequently used resources during the Testing Phase were probably the various software systems. We used Macromedia Code Inspector as well as Microsoft Visual Studio .NET. Both products allowed us to preview results instantly in the web browser of our choice, which made unit testing effortless.

8.2.2 Human Resources

Human resources were crucial when testing the User Requirements. The expertise of the developers, together with good managerial skills, allowed us to quickly pinpoint possible defects or misunderstandings with the client.

8.2.3 Other

We also used other resources during the Testing Phase that do not fall into the above categories.
They include support from online resources that allowed us to more accurately and efficiently track down errors and learn better programming techniques.