a review sheet for the final exam


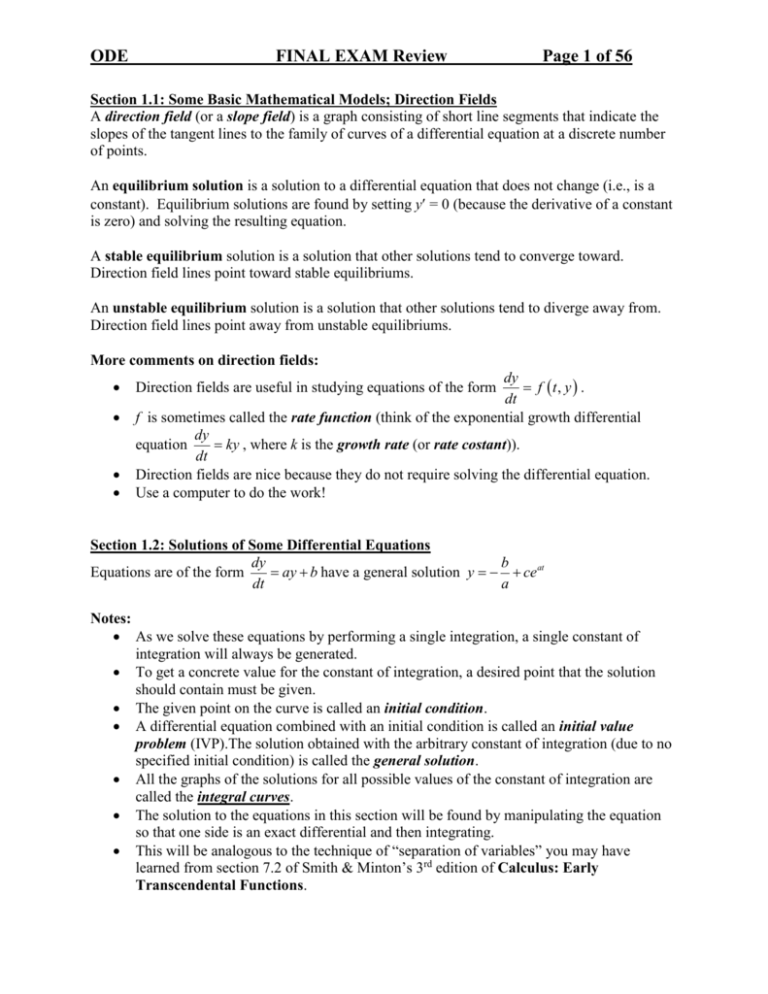

ODE FINAL EXAM Review Page 1 of 56

Section 1.1: Some Basic Mathematical Models; Direction Fields

A direction field (or slope field) is a graph of short line segments that indicate the slopes of the tangent lines to the solution curves of a differential equation at a discrete set of points.

An equilibrium solution is a solution of a differential equation that does not change (i.e., is a constant). Equilibrium solutions are found by setting $y' = 0$ (because the derivative of a constant is zero) and solving the resulting equation.

A stable equilibrium solution is a solution that nearby solutions converge toward. Direction field segments point toward stable equilibria.

An unstable equilibrium solution is a solution that nearby solutions diverge away from. Direction field segments point away from unstable equilibria.

More comments on direction fields:
Direction fields are useful in studying equations of the form $\frac{dy}{dt} = f(t, y)$. The function $f$ is sometimes called the rate function (think of the exponential growth equation $\frac{dy}{dt} = ky$, where $k$ is the growth rate, or rate constant).
Direction fields are nice because they do not require solving the differential equation. Use a computer to do the work!

Section 1.2: Solutions of Some Differential Equations

Equations of the form $\frac{dy}{dt} = ay - b$ have the general solution $y = \frac{b}{a} + ce^{at}$.

Notes:
Because we solve these equations by performing a single integration, a single constant of integration is always generated.
To get a concrete value for the constant of integration, a point that the solution should pass through must be given. The given point on the curve is called an initial condition.
A differential equation combined with an initial condition is called an initial value problem (IVP).
The solution obtained with the arbitrary constant of integration (because no initial condition is specified) is called the general solution.
The graphs of the solutions for all possible values of the constant of integration are called the integral curves.
The solutions to the equations in this section are found by manipulating the equation so that one side is an exact differential and then integrating. This is analogous to the technique of “separation of variables” you may have learned from section 7.2 of Smith & Minton’s 3rd edition of Calculus: Early Transcendental Functions.

Section 1.3: Classification of Differential Equations

Ordinary vs. Partial Differential Equations
Ordinary differential equations: only ordinary derivatives appear in the equation.
Example (RLC series circuit with a drive voltage):
$$L\frac{d^2Q(t)}{dt^2} + R\frac{dQ(t)}{dt} + \frac{1}{C}Q(t) = E(t)$$
Partial differential equations: partial derivatives appear in the equation.
Example (heat conduction equation):
$$\alpha^2\frac{\partial^2 u(x,t)}{\partial x^2} = \frac{\partial u(x,t)}{\partial t}$$

Systems of Differential Equations
…consist of multiple differential equations involving multiple variables. Used when one quantity depends on other quantities…
Example: the Lotka-Volterra (predator-prey) equations:
$$\frac{dx}{dt} = ax - \alpha xy, \qquad \frac{dy}{dt} = -cy + \gamma xy$$

Order of a Differential Equation
…is the order of the highest derivative that appears (analogy: the degree of a polynomial is the largest exponent).
General form for an nth order differential equation: $F\left(t, u(t), u'(t), \ldots, u^{(n)}(t)\right) = 0$ for some function $F$.
We’ll deal with differential equations that can be solved explicitly for the highest derivative: $y^{(n)}(t) = f\left(t, y(t), y'(t), \ldots, y^{(n-1)}(t)\right)$.

Linear vs. Nonlinear Differential Equations
$F\left(t, y(t), y'(t), \ldots, y^{(n)}(t)\right) = 0$ is linear if $F$ is a linear function of $y(t), y'(t), \ldots, y^{(n)}(t)$; note: $F$ does not have to be linear in $t$…
The general form of a linear differential equation of order n is:
$$a_n(t)y^{(n)}(t) + a_{n-1}(t)y^{(n-1)}(t) + \cdots + a_1(t)y'(t) + a_0(t)y(t) = g(t)$$
Nonlinear differential equations are not of the above form…
Many times, nonlinear equations can be approximated as linear over small regions of the solution. This process is known as linearization.

Solutions of Differential Equations
…are functions $\phi$ such that all necessary derivatives exist over an interval of interest of the independent variable and that satisfy $\phi^{(n)}(t) = f\left(t, \phi(t), \phi'(t), \ldots, \phi^{(n-1)}(t)\right)$.
To verify a given solution, substitute it and its derivatives into the given differential equation to show that the equation is satisfied.

Section 2.1: Linear Equations; Method of Integrating Factors

Form of a linear first-order differential equation: $\frac{dy}{dt} + p(t)y = g(t)$

Formula for the solution of a first-order linear differential equation with constant coefficients:
If $\frac{dy}{dt} + ay = g(t)$, then $\mu(t) = e^{at}$, and
$$y(t) = e^{-at}\int_{t_0}^{t} e^{as}g(s)\,ds + ce^{-at}$$

Formula for the solution of a general first-order linear differential equation:
If $\frac{dy}{dt} + p(t)y = g(t)$, then $\mu(t) = \exp\left(\int p(t)\,dt\right)$, and
$$y(t) = \frac{1}{\mu(t)}\left[\int_{t_0}^{t}\mu(s)g(s)\,ds + c\right]$$

Section 2.2: Separable Equations

General form of a first-order differential equation: $\frac{dy}{dx} = f(x, y)$
Separable first-order differential equation: $f(x, y) = -\frac{M(x)}{N(y)}$
Another form for a separable first-order differential equation: $M(x)\,dx + N(y)\,dy = 0$
Solution:
$$\frac{dy}{dx} = -\frac{M(x)}{N(y)} \quad\Rightarrow\quad \int N(y)\,dy = -\int M(x)\,dx$$
Note: equations of the form $\frac{dy}{dx} = f(v)$, where $v = \frac{y}{x}$, can be converted to a separable equation. Recall the homework problem on this substitution.

Section 2.3: Modeling with First Order Equations

Three Key Steps in Mathematical Modeling
Construct the model (use the steps at the end of section 1.1).
Analyze the model by solving it or making appropriate simplifications to solve it.
Compare the results from the model with experiment and observation.
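
Looking back at the constant-coefficient formula from Section 2.1, here is a minimal Python sketch that evaluates it numerically. The function name `solve_linear_const`, the trapezoidal rule, and the sample problem $y' + 2y = 1$, $y(0) = 0$ are illustrative assumptions, not part of the review sheet.

```python
import math

def solve_linear_const(a, g, t0, y0, t, n=2000):
    """Evaluate y(t) for y' + a*y = g(t), y(t0) = y0, using mu(t) = e^{a t}.

    Hypothetical helper: the integral in the Section 2.1 formula is
    approximated with the trapezoidal rule on n subintervals.
    """
    h = (t - t0) / n
    # Trapezoidal approximation of the integral of e^{a s} g(s) from t0 to t.
    total = 0.5 * (math.exp(a * t0) * g(t0) + math.exp(a * t) * g(t))
    for i in range(1, n):
        s = t0 + i * h
        total += math.exp(a * s) * g(s)
    integral = total * h
    # The initial condition fixes the constant: y(t0) = c e^{-a t0} = y0.
    c = y0 * math.exp(a * t0)
    return math.exp(-a * t) * integral + c * math.exp(-a * t)

# Sample problem (an assumption): y' + 2y = 1, y(0) = 0,
# whose exact solution is y = (1 - e^{-2t}) / 2.
approx = solve_linear_const(2.0, lambda s: 1.0, 0.0, 0.0, 1.0)
exact = 0.5 * (1.0 - math.exp(-2.0))
```

Comparing `approx` with `exact` is a quick way to check that the formula and the choice of $c$ were applied correctly.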

Section 2.4: Differences Between Linear and Nonlinear Equations

Linear First-Order Differential Equations
$$\frac{dy}{dt} + p(t)y = g(t)$$
Solution: first, compute $\mu(t) = \exp\left(\int p(t)\,dt\right)$. Then the answer is given explicitly by
$$y(t) = \frac{1}{\mu(t)}\left[\int \mu(t)g(t)\,dt + c\right]$$
Theorem 2.4.1: Existence and Uniqueness of the Solution of a Linear First-Order Differential Equation
If the functions $p$ and $g$ are continuous in an open interval $I$ containing the point $t = t_0$, then there exists a unique function $y = \phi(t)$ in $I$ that satisfies the initial value problem $y' + p(t)y = g(t)$ and $y(t_0) = y_0$.
The proof is rooted in the fact that we can write the solution in terms of operations on continuous functions.
Interval of Definition: …is determined by any discontinuities in $p$ and $g$.
General Solutions: …can always be written using the constant of integration $c$.
Implicit vs. Explicit Solutions: …the solution is stated explicitly as an integral.

Nonlinear First-Order Differential Equations
$$\frac{dy}{dt} = f(t, y)$$
… we have only looked at one kind, which is separable equations: $\frac{dy}{dt} = F(y)G(t)$
Solution: first, separate variables: $\frac{dy}{F(y)} = G(t)\,dt$. Then integrate for an implicit equation:
$$\int\frac{dy}{F(y)} = \int G(t)\,dt$$
Theorem 2.4.2: Existence and Uniqueness of the Solution of a Nonlinear First-Order Differential Equation
If the functions $f$ and $\frac{\partial f}{\partial y}$ are continuous in a rectangle containing the point $(t_0, y_0)$, then, in some interval about $t_0$ contained in that rectangle, there exists a unique solution $y = \phi(t)$ that satisfies the initial value problem $y' = f(t, y)$ and $y(t_0) = y_0$.
The proof is beyond the scope of this class. It should be noted that if the conditions of the hypothesis are not satisfied, then it is possible to get multiple solutions for one initial condition.
Note that if the uniqueness criteria are met, then the graphs of two solutions cannot intersect, because an intersection would give two solutions for one initial condition (contradicting uniqueness).
Interval of Definition: …cannot be determined by looking at $f$; you must look at the solution itself.
General Solutions: …many times cannot be summarized with the constant of integration; example: equilibrium solutions.
Implicit vs. Explicit Solutions: …the solutions obtained are usually implicit.

Section 2.5: Autonomous Equations and Population Dynamics

In this section, we look at a restricted form of a nonlinear separable equation: $\frac{dy}{dt} = f(y)$.
When $f$ is independent of $t$, the equation is called autonomous (i.e., $t$ does not drive the solution; the solution only depends on itself…).

Logistic Growth Model
Accounts for the fact that the growth rate depends on the population: instead of $\frac{dy}{dt} = ry$, use $\frac{dy}{dt} = h(y)\,y$ for some function $h$.
We like $h$ to model the fact that as populations get larger, the growth rate gets smaller due to “using up” the environment.
$h(y) = r - ay$ is a simple model that gets used extensively.
$\frac{dy}{dt} = (r - ay)\,y$ is called the logistic equation, and is usually written in the form
$$\frac{dy}{dt} = r\left(1 - \frac{y}{K}\right)y,$$
where $K = \frac{r}{a}$ is the environmental carrying capacity (or saturation level), and $r$ is the intrinsic growth rate.
When finding equilibrium solutions by solving $\frac{dy}{dt} = 0$, we are also solving $f(y) = 0$; zeros of $f$ are called critical points.
A phase line graph shows the y-axis and how solutions either converge toward or diverge from equilibrium solutions.
For stable equilibrium solutions, $\frac{dy}{dt}$ points toward the equilibrium.
For unstable equilibrium solutions, $\frac{dy}{dt}$ points away from the equilibrium.
For the equation $\frac{dy}{dt} = -r\left(1 - \frac{y}{T}\right)y$, $T$ is called the threshold level for the population.

Section 2.6: Exact Equations and Integrating Factors

In this section, we examine the equation $M(x, y) + N(x, y)\frac{dy}{dx} = 0$.
If once again the solution comes from $\psi(x, y) = c$, then
$$\frac{d}{dx}\psi(x, y) = \frac{d}{dx}c \quad\Rightarrow\quad \frac{\partial\psi}{\partial x}\frac{dx}{dx} + \frac{\partial\psi}{\partial y}\frac{dy}{dx} = 0 \quad\Rightarrow\quad \psi_x(x, y) + \psi_y(x, y)\frac{dy}{dx} = 0$$
Thus:
Theorem 2.6.1: Exact Differential Equations and Their Solution
Let the functions $M$, $N$, $M_y$, and $N_x$ be continuous in some rectangular region $R$.
Then the equation $M(x, y) + N(x, y)\,y' = 0$ is an exact differential equation in the region $R$ if and only if $M_y(x, y) = N_x(x, y)$ at each point of $R$. That is, there exists a function $\psi$ satisfying $\psi_x(x, y) = M(x, y)$ and $\psi_y(x, y) = N(x, y)$ if and only if $M_y(x, y) = N_x(x, y)$.
The method of solving an exact equation is identical to finding the potential for a conservative vector field…

Integrating Factors…
Sometimes a differential equation that is not exact can be converted to an exact equation by multiplying the equation by an integrating factor $\mu(x, y)$:
$$M(x, y) + N(x, y)\frac{dy}{dx} = 0 \quad\Rightarrow\quad \mu(x, y)M(x, y) + \mu(x, y)N(x, y)\frac{dy}{dx} = 0$$
For this equation to be exact, it must be true that $(\mu M)_y = (\mu N)_x$, which leads to the partial differential equation:
$$\mu_y M + \mu M_y = \mu_x N + \mu N_x \quad\Rightarrow\quad M\mu_y - N\mu_x + (M_y - N_x)\mu = 0$$
If $\mu$ can be found, then an exact equation is formed, whose solution can be obtained using the prior technique. Unfortunately, the equation for $\mu$ is usually harder to solve than the original equation (in general). However, we can look for simplifications, like what happens if the original equation is such that $\mu$ depends only on $x$ or only on $y$.

Differential equation where the integrating factor depends on x only (i.e., $\mu = \mu(x)$):
$$(\mu M)_y = (\mu N)_x \quad\Rightarrow\quad \mu M_y = N\frac{d\mu}{dx} + \mu N_x \quad\Rightarrow\quad \frac{d\mu}{dx} = \frac{M_y - N_x}{N}\,\mu$$
Criterion for x only: $\frac{M_y - N_x}{N}$ is a function of x only. Note that the equation for $\mu$ is both linear and separable now.

Differential equation where the integrating factor depends on y only (i.e., $\mu = \mu(y)$):
$$(\mu M)_y = (\mu N)_x \quad\Rightarrow\quad M\frac{d\mu}{dy} + \mu M_y = \mu N_x \quad\Rightarrow\quad \frac{d\mu}{dy} = \frac{N_x - M_y}{M}\,\mu$$
Criterion for y only: $\frac{N_x - M_y}{M}$ is a function of y only. Note that the equation for $\mu$ is both linear and separable now.

Section 2.7: Numerical Approximations: Euler’s Method

$$y' = f(x, y), \qquad \frac{\Delta y}{\Delta x} \approx y' = f(x, y), \qquad \Delta y \approx f(x, y)\,\Delta x$$
$$y_{i+1} - y_i = f(x_i, y_i)\,\Delta x \quad\Rightarrow\quad y_{i+1} = y_i + f(x_i, y_i)\,\Delta x$$
Or, letting $h = \Delta x$: $y_{i+1} = y_i + h\,y_i'$

To perform Euler’s method quickly on your calculator:
Store the initial conditions in variables X and Y.
Enter the update formula for Y, then for X, separated by a colon.
Repeatedly use 2ND ENTER until you are at the final x-value.
If you want to see the intermediate values of Y, you’ll have to type Y every time…
Example: for $y' = x + e^{-y}$, $y(1) = -2$, $h = 0.25$:
    1→X
    -2→Y
    Y+(X+e^(-Y))*0.25→Y:X+0.25→X
    Y
    2ND ENTER
    2ND ENTER
    …

Section 3.1: Homogeneous Equations with Constant Coefficients

Big idea: $ay''(t) + by'(t) + cy(t) = 0$ has solution $y(t) = e^{rt}$ or $y(t) = te^{rt}$ for some values $r$.

Here are fundamental second-order differential equations and their solutions:
$y''(t) = 0$: $y(t) = c_1 t + c_0$
$y''(t) = k^2 y(t)$ OR $y''(t) - k^2 y(t) = 0$: $y(t) = c_1 e^{kt} + c_2 e^{-kt}$, or equivalently $y(t) = k_1\cosh(kt) + k_2\sinh(kt)$
$y''(t) = -\omega^2 y(t)$ OR $y''(t) + \omega^2 y(t) = 0$: $y(t) = c_1 e^{i\omega t} + c_2 e^{-i\omega t}$, or equivalently (with different constants) $y(t) = c_1\cos(\omega t) + c_2\sin(\omega t)$

To solve a second-order differential equation with constant coefficients, $ay''(t) + by'(t) + cy(t) = 0$:
Assume a solution of the form $y(t) = e^{rt}$, and substitute it and its derivatives $y'(t) = re^{rt}$ and $y''(t) = r^2 e^{rt}$ into the equation:
$$ar^2 e^{rt} + bre^{rt} + ce^{rt} = 0 \quad\Rightarrow\quad \left(ar^2 + br + c\right)e^{rt} = 0$$
Then find the values $r_1$ and $r_2$ that satisfy the resulting characteristic equation: $ar^2 + br + c = 0$
The general solution is a linear combination of the two solutions: $y(t) = c_1 e^{r_1 t} + c_2 e^{r_2 t}$

Section 3.2: Solutions of Linear Homogeneous Equations; the Wronskian

Big Idea: Any two solutions we find of a homogeneous linear second-order differential equation are all that is needed if the Wronskian is non-zero on the interval of existence of the solution.

Theorem 3.2.1: Existence and Uniqueness Theorem (for Linear Second-Order Differential Equations)
For the initial value problem $y'' + p(t)y' + q(t)y = g(t)$, $y(t_0) = y_0$, $y'(t_0) = y_0'$, where $p$, $q$, and $g$ are continuous on some open interval $I$ containing the point $t_0$, there is exactly one solution $y = \phi(t)$ of this problem, and the solution exists throughout the interval $I$.

Theorem 3.2.2: Principle of Superposition
If $y_1$ and $y_2$ are solutions of the differential equation $L[y] = y'' + p(t)y' + q(t)y = 0$, then the linear combination $c_1 y_1 + c_2 y_2$ is a solution for any values of the constants $c_1$ and $c_2$.
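
Returning to the Euler's method calculator procedure from Section 2.7, the same update can be sketched in Python. The function name `euler` and the choice of four steps (which reaches x = 2) are illustrative assumptions; the equation, initial condition, and step size come from the calculator example.

```python
import math

def euler(f, x0, y0, h, steps):
    """Apply y_{i+1} = y_i + h*f(x_i, y_i) and return the lists of x and y values."""
    xs, ys = [x0], [y0]
    x, y = x0, y0
    for _ in range(steps):
        # Update y first using the old x, then advance x (same order as Y:X on the calculator).
        y = y + h * f(x, y)
        x = x + h
        xs.append(x)
        ys.append(y)
    return xs, ys

# Calculator example: y' = x + e^{-y}, y(1) = -2, h = 0.25.
f = lambda x, y: x + math.exp(-y)
xs, ys = euler(f, 1.0, -2.0, 0.25, 4)  # four steps is an assumed stopping point
```

Unlike the calculator version, the lists `xs` and `ys` keep every intermediate value, so there is no need to re-type Y after each step.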

The Wronskian:
$$W = \begin{vmatrix} y_1(t_0) & y_2(t_0) \\ y_1'(t_0) & y_2'(t_0) \end{vmatrix} = y_1(t_0)\,y_2'(t_0) - y_1'(t_0)\,y_2(t_0)$$

Theorem 3.2.3:
If $y_1$ and $y_2$ are two solutions of the differential equation $L[y] = y'' + p(t)y' + q(t)y = 0$, and the initial conditions $y(t_0) = y_0$, $y'(t_0) = y_0'$ must be satisfied by $y$, then it is always possible to choose the constants $c_1$ and $c_2$ so that $y = c_1 y_1(t) + c_2 y_2(t)$ satisfies this initial value problem if and only if the Wronskian $W = y_1 y_2' - y_1' y_2$ is not zero at $t_0$.

Theorem 3.2.4:
If $y_1$ and $y_2$ are two solutions of the differential equation $L[y] = y'' + p(t)y' + q(t)y = 0$, then the family of solutions $y = c_1 y_1(t) + c_2 y_2(t)$ with arbitrary constants $c_1$ and $c_2$ includes every solution of the differential equation if and only if there is a point $t_0$ where the Wronskian $W = y_1 y_2' - y_1' y_2$ is not zero.
Notes:
$y = c_1 y_1(t) + c_2 y_2(t)$ is called the general solution of $L[y] = y'' + p(t)y' + q(t)y = 0$.
$y_1$ and $y_2$ are said to form a fundamental set of solutions.
To find the general solution, and thus all solutions, of $L[y] = y'' + p(t)y' + q(t)y = 0$, we need only find two solutions of the given equation whose Wronskian is non-zero.

Theorem 3.2.5:
If the differential equation $L[y] = y'' + p(t)y' + q(t)y = 0$ has coefficients $p$ and $q$ that are continuous on some open interval $I$, and if $t_0 \in I$, and if $y_1$ is a solution that satisfies the initial conditions $y_1(t_0) = 1$, $y_1'(t_0) = 0$, and if $y_2$ is a solution that satisfies the initial conditions $y_2(t_0) = 0$, $y_2'(t_0) = 1$, then $y_1$ and $y_2$ form a fundamental set of solutions of the equation.

Theorem 3.2.6: (Abel’s Theorem)
If $y_1$ and $y_2$ are two solutions of the differential equation $L[y] = y'' + p(t)y' + q(t)y = 0$ with the coefficients $p$ and $q$ continuous on an open interval $I$, then the Wronskian $W(y_1, y_2)(t)$ is given by:
$$W(y_1, y_2)(t) = c\,\exp\left(-\int p(t)\,dt\right)$$
where $c$ is a certain constant that depends on $y_1$ and $y_2$, but not on $t$. Further, $W(y_1, y_2)(t)$ either is zero for all $t \in I$ (if $c = 0$), or else is never zero in $I$ (if $c \neq 0$).
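
As a quick numerical check of Abel's Theorem, the sketch below compares the Wronskian computed from its definition with Abel's formula. The test equation $y'' - 3y' + 2y = 0$ (so $p(t) = -3$, with solutions $e^{t}$ and $e^{2t}$) and the helper names are assumptions chosen for illustration.

```python
import math

def wronskian(y1, dy1, y2, dy2, t):
    """W(y1, y2)(t) = y1*y2' - y1'*y2, from the 2x2 determinant definition."""
    return y1(t) * dy2(t) - dy1(t) * y2(t)

# Assumed example: y'' - 3y' + 2y = 0, so p(t) = -3, y1 = e^t, y2 = e^{2t}.
y1 = lambda t: math.exp(t)
dy1 = lambda t: math.exp(t)
y2 = lambda t: math.exp(2 * t)
dy2 = lambda t: 2 * math.exp(2 * t)

def abel(t, c, p=-3.0):
    """Abel's formula for constant p: W(t) = c * exp(-p*t)."""
    return c * math.exp(-p * t)

# The constant c is fixed by the value of the Wronskian at t = 0.
c = wronskian(y1, dy1, y2, dy2, 0.0)
```

Here `c` is nonzero, so by the theorem the Wronskian is never zero and $e^{t}$, $e^{2t}$ form a fundamental set of solutions.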

Section 3.3: Complex Roots of the Characteristic Equation

Big Idea: When there are complex roots to the characteristic equation, each of the fundamental solutions will have a sinusoidal factor.

Euler’s Formula: $e^{it} = \cos t + i\sin t$

Notes:
When the coefficients are real, the complex solutions from the quadratic formula always come in conjugate pairs.
If those complex roots are $r = \lambda \pm i\mu$, then the general solution $y(t) = c_1 e^{(\lambda + i\mu)t} + c_2 e^{(\lambda - i\mu)t}$ can always be written as $y(t) = d_1 e^{\lambda t}\cos(\mu t) + d_2 e^{\lambda t}\sin(\mu t)$.

Additional topics from homework:
An Euler equation is of the form $t^2 y'' + \alpha t y' + \beta y = 0$. It can be solved by making the substitution $x = \ln t$, which results in the constant coefficient equation
$$\frac{d^2 y}{dx^2} + (\alpha - 1)\frac{dy}{dx} + \beta y = 0$$
A general linear homogeneous equation $y'' + p(t)y' + q(t)y = 0$ can be transformed into a constant coefficient equation (and thus solved) using the substitution $x = u(t) = \int\sqrt{q(t)}\,dt$ if and only if
$$\frac{q'(t) + 2p(t)q(t)}{\left[q(t)\right]^{3/2}} = \text{constant}.$$

Section 3.4: Repeated Roots; Reduction of Order

Big Idea: When there are repeated roots to the characteristic equation, the second of the fundamental solutions is formed by multiplying the first solution by a factor of the independent variable.

Summary of Sections 3.1 – 3.4:
To solve a second-order linear homogeneous differential equation with constant coefficients, $ay'' + by' + cy = 0$:
Assume a solution of the form $y(t) = e^{rt}$, which leads to the corresponding characteristic equation $ar^2 + br + c = 0$.
Solve the characteristic equation to find its two roots $r_1$ and $r_2$:
If the roots are real and unequal, then the general solution is $y(t) = c_1 e^{r_1 t} + c_2 e^{r_2 t}$
If the roots are complex conjugates $\lambda \pm i\mu$, then the general solution is $y(t) = c_1 e^{\lambda t}\cos(\mu t) + c_2 e^{\lambda t}\sin(\mu t)$
If the roots are the same, then the general solution is $y(t) = c_1 e^{r_1 t} + c_2 t e^{r_1 t}$

If we have a more complicated second-order initial value problem like $y'' + p(t)y' + q(t)y = 0$, $y(t_0) = y_0$, $y'(t_0) = y_0'$, and we know that $y_1$ and $y_2$ are solutions of the differential equation, then we can be sure that they form a fundamental set of solutions if the Wronskian is nonzero:
$$W = \begin{vmatrix} y_1(t_0) & y_2(t_0) \\ y_1'(t_0) & y_2'(t_0) \end{vmatrix} \neq 0$$

Reduction of Order (end of 3.4): If we know a solution $y_1$ of the more general second-order differential equation $y'' + p(t)y' + q(t)y = 0$, then we can derive the second solution $y_2$ by assuming $y_2(t) = v(t)\,y_1(t)$ and substituting $y_2$ into the original equation, resulting in $y_1 v'' + \left(2y_1' + p\,y_1\right)v' = 0$, which is a first-order equation for $v'$.

Section 3.5: Nonhomogeneous Equations; Method of Undetermined Coefficients

Big idea: One way to solve $ay'' + by' + cy = g(t)$ is to “guess” a particular solution that has the same form as $g(t)$, then work out the value(s) of the coefficient(s) that make that guess work.

Theorem 3.5.1: The Difference of Nonhomogeneous Solutions Is the Homogeneous Solution
If $Y_1$ and $Y_2$ are two solutions of the nonhomogeneous equation $L[y] = y'' + p(t)y' + q(t)y = g(t)$, then their difference $Y_1 - Y_2$ is a solution of the corresponding homogeneous equation $L[y] = y'' + p(t)y' + q(t)y = 0$. If, in addition, $y_1$ and $y_2$ are a fundamental set of solutions of the homogeneous equation, then $Y_1(t) - Y_2(t) = c_1 y_1(t) + c_2 y_2(t)$ for certain constants $c_1$ and $c_2$.
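
Looking back at the three cases in the Summary of Sections 3.1 – 3.4, here is a minimal Python sketch that classifies the roots of the characteristic equation. The function name `characteristic_roots` and the tuple labels it returns are hypothetical, and the exact comparison of the discriminant with zero is only reliable when the coefficients are exactly representable.

```python
import math

def characteristic_roots(a, b, c):
    """Classify the roots of a*r^2 + b*r + c = 0 for a*y'' + b*y' + c*y = 0.

    Returns one of (labels are illustrative assumptions):
      ("real_distinct", r1, r2) -> y = c1*e^{r1 t} + c2*e^{r2 t}
      ("repeated", r)           -> y = c1*e^{r t} + c2*t*e^{r t}
      ("complex", lam, mu)      -> y = e^{lam t}*(c1*cos(mu t) + c2*sin(mu t))
    """
    disc = b * b - 4 * a * c
    if disc > 0:
        root = math.sqrt(disc)
        return ("real_distinct", (-b + root) / (2 * a), (-b - root) / (2 * a))
    if disc == 0:
        return ("repeated", -b / (2 * a))
    # Complex conjugate roots lam +/- i*mu, with mu taken positive.
    return ("complex", -b / (2 * a), math.sqrt(-disc) / abs(2 * a))

case_distinct = characteristic_roots(1, -3, 2)   # r^2 - 3r + 2 = 0
case_repeated = characteristic_roots(1, 2, 1)    # r^2 + 2r + 1 = 0
case_complex = characteristic_roots(1, 2, 5)     # r^2 + 2r + 5 = 0
```

Returning the roots rather than a formatted string keeps the sketch easy to test and leaves the constants $c_1$, $c_2$ to be fixed by initial conditions.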

Theorem 3.5.2: General Solution of a Nonhomogeneous Equation
The general solution of the nonhomogeneous equation $L[y] = y'' + p(t)y' + q(t)y = g(t)$ can be written in the form
$$y = \phi(t) = c_1 y_1(t) + c_2 y_2(t) + Y(t)$$
where $y_1$ and $y_2$ are a fundamental set of solutions of the corresponding homogeneous equation, $c_1$ and $c_2$ are arbitrary constants, and $Y$ is some specific solution of the nonhomogeneous equation.

Method of Undetermined Coefficients:
When $g(t)$ is an exponential, sinusoid, or polynomial, make a guess for the particular solution that is the same exponential, sinusoids of the same frequency, or a polynomial of the same degree, except with arbitrary coefficients that you determine by substituting your guess into the nonhomogeneous equation.
This technique works because derivatives of these types of functions result in the same type of function.
If the form for the particular solution replicates any terms of the homogeneous solution, then factors of $t$ must be applied until there is no replication.

Forms for the Particular Solution of $ay'' + by' + cy = g(t)$ for Assorted $g(t)$:

$g(t) = P_n(t)$ (i.e., a polynomial of degree n)
  $y_p(t) = R_n(t)$ (i.e., a polynomial of degree n, but with different coefficients than $P_n$)
  Exception #1: $r = 0$ is a root of the homogeneous equation: $y_p(t) = t\,R_n(t)$
  Exception #2: $r = 0$ is a double root of the homogeneous equation: $y_p(t) = t^2 R_n(t)$

$g(t) = A\cos(\omega t) + B\sin(\omega t)$
  $y_p(t) = C_1\cos(\omega t) + C_2\sin(\omega t)$
  Exception: $r = \pm i\omega$ are roots of the homogeneous equation: $y_p(t) = t\left(C_1\cos(\omega t) + C_2\sin(\omega t)\right)$

$g(t) = Ae^{kt}$
  $y_p(t) = C_1 e^{kt}$
  Exception #1: $r = k$ is a root of the homogeneous equation: $y_p(t) = C_1 t e^{kt}$
  Exception #2: $r = k$ is a double root of the homogeneous equation: $y_p(t) = C_1 t^2 e^{kt}$

$g(t) = e^{kt}P_n(t)$
  $y_p(t) = R_n(t)\,e^{kt}$ (i.e., the product of a polynomial of degree n and an exponential)
  Exception #1: $r = k$ is a root of the homogeneous equation: $y_p(t) = t\,R_n(t)\,e^{kt}$
  Exception #2: $r = k$ is a double root of the homogeneous equation: $y_p(t) = t^2 R_n(t)\,e^{kt}$

$g(t) = e^{kt}\left(A\cos(\omega t) + B\sin(\omega t)\right)$
  $y_p(t) = e^{kt}\left(C_1\cos(\omega t) + C_2\sin(\omega t)\right)$
  Exception #1: $r = k \pm i\omega$ are roots of the homogeneous equation: $y_p(t) = t\,e^{kt}\left(C_1\cos(\omega t) + C_2\sin(\omega t)\right)$

$g(t) = P_n(t)\cos(\omega t) + Q_n(t)\sin(\omega t)$
  $y_p(t) = R_n(t)\cos(\omega t) + S_n(t)\sin(\omega t)$ (i.e., the sum of the products of distinct polynomials of degree n with sinusoids)
  Exception: $r = \pm i\omega$ are roots of the homogeneous equation: $y_p(t) = t\left(R_n(t)\cos(\omega t) + S_n(t)\sin(\omega t)\right)$

$g(t) = e^{kt}P_n(t)\cos(\omega t) + e^{kt}Q_n(t)\sin(\omega t)$
  $y_p(t) = e^{kt}R_n(t)\cos(\omega t) + e^{kt}S_n(t)\sin(\omega t)$
  Exception #1: $r = k \pm i\omega$ are roots of the homogeneous equation: $y_p(t) = t\left(e^{kt}R_n(t)\cos(\omega t) + e^{kt}S_n(t)\sin(\omega t)\right)$

Section 3.6: Variation of Parameters

Big Idea: The method of variation of parameters works by assuming a solution that is of the form of a sum of the products of arbitrary functions and the fundamental homogeneous solutions.

The basic idea behind the method of variation of parameters, due to Lagrange, is to find two fundamental solutions $y_1$ and $y_2$ of the corresponding homogeneous equation, then assume that the solution of the nonhomogeneous equation is
$$y(t) = u_1(t)\,y_1(t) + u_2(t)\,y_2(t)$$
with the additional criterion that $u_1'(t)\,y_1(t) + u_2'(t)\,y_2(t) = 0$.

Theorem 3.6.1: Solution of a Nonhomogeneous Second-Order Equation
If the functions $p$, $q$, and $g$ are continuous on an open interval $I$, and if the functions $y_1$ and $y_2$ are a fundamental set of solutions of the homogeneous equation $y'' + p(t)y' + q(t)y = 0$ corresponding to the nonhomogeneous equation $y'' + p(t)y' + q(t)y = g(t)$, then a particular solution is:
$$Y(t) = -y_1(t)\int_{t_0}^{t}\frac{y_2(s)\,g(s)}{W(y_1, y_2)(s)}\,ds + y_2(t)\int_{t_0}^{t}\frac{y_1(s)\,g(s)}{W(y_1, y_2)(s)}\,ds,$$
where $t_0$ is any conveniently chosen point in $I$. The general solution is $y(t) = c_1 y_1(t) + c_2 y_2(t) + Y(t)$.
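
As a worked illustration of Theorem 3.6.1, consider the equation $y'' - y = e^{2t}$, which is an assumed example rather than one taken from the review sheet. Antiderivatives are used in place of the definite integrals from $t_0$; the dropped constants only add multiples of $y_1$ and $y_2$, which are absorbed into the homogeneous part of the general solution.

```latex
% Assumed example: y'' - y = e^{2t}, so p(t) = 0, q(t) = -1, g(t) = e^{2t}.
\begin{align*}
y_1 &= e^{t}, \quad y_2 = e^{-t}, \quad
W(y_1, y_2) = y_1 y_2' - y_1' y_2 = -1 - 1 = -2 \\
Y(t) &= -y_1 \int \frac{y_2\, g}{W}\,dt + y_2 \int \frac{y_1\, g}{W}\,dt \\
     &= -e^{t} \int \frac{e^{-t} e^{2t}}{-2}\,dt + e^{-t} \int \frac{e^{t} e^{2t}}{-2}\,dt \\
     &= -e^{t}\left(-\tfrac{1}{2} e^{t}\right) + e^{-t}\left(-\tfrac{1}{6} e^{3t}\right)
      = \tfrac{1}{2} e^{2t} - \tfrac{1}{6} e^{2t} = \tfrac{1}{3} e^{2t} \\
\text{Check: } & Y'' - Y = \tfrac{4}{3} e^{2t} - \tfrac{1}{3} e^{2t} = e^{2t}
\end{align*}
```

The same particular solution follows from undetermined coefficients with the guess $y_p = C_1 e^{2t}$, which gives $4C_1 - C_1 = 1$.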

Section 3.7: Mechanical and Electrical Vibrations

Big Idea: Second order differential equations with constant coefficients provide very good models for oscillating systems, like a mass hanging from a spring or a series RLC circuit.
$$Mu'' + \gamma u' + ku = 0$$
Recall: $A\cos(\omega t) + B\sin(\omega t) = R\cos(\omega t - \delta)$, where $R = \sqrt{A^2 + B^2}$ and
$$\delta = \tan^{-1}\left(\frac{B}{A}\right) + \begin{cases} 0 & \text{if } A > 0 \\ \pi & \text{if } A < 0 \end{cases}$$
Resonant (natural angular) frequency: $\omega_0 = \sqrt{\frac{k}{M}}$
Period: $T = \frac{2\pi}{\omega_0} = \frac{1}{f}$
Frequency: $f = \frac{\omega_0}{2\pi}$
Quasifrequency of the damped system: $\mu = \omega_0\sqrt{1 - \frac{\gamma^2}{4Mk}}$

Section 3.8: Forced Vibrations

Big Idea: A damped spring-mass (or circuit) system described in section 3.7 that is driven by a sinusoidal force will eventually settle into a steady oscillation with the same frequency as the driving force. The amplitude of this steady state solution increases as the drive frequency gets closer to the natural frequency of the system, and as the damping decreases. An undamped spring-mass system that is driven by a sinusoidal force results in an oscillation at the average of the two frequencies, modulated by a sinusoidal envelope at half the difference of the frequencies.

Forced Vibrations with Damping
In this section, we will restrict our discussion to the case where the forcing function is a sinusoid. Thus, we can make some general statements about the solution:
The equation of motion with damping is: $mu'' + \gamma u' + ku = F_0\cos(\omega t)$
Its solution is of the form:
$$u(t) = \underbrace{c_1 u_1(t) + c_2 u_2(t)}_{\text{homogeneous solution } u_h(t),\ \text{“transient solution”}} + \underbrace{A\cos(\omega t) + B\sin(\omega t)}_{\text{particular solution } u_p(t),\ \text{“steady state solution”}}$$
Notes:
The homogeneous solution $u_h(t) \to 0$ as $t \to \infty$, which is why it is called the “transient solution.”
The constants $c_1$ and $c_2$ of the transient solution are used to satisfy given initial conditions.
The particular solution $u_p(t)$ is all that remains after the transient solution dies away, and is a steady oscillation at the same frequency as the driving function.
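That is why it is called the “steady state solution,” or the “forced response.”
The coefficients $A$ and $B$ must be determined by substitution into the differential equation.
If we replace $u_p(t) = U(t) = A\cos(\omega t) + B\sin(\omega t)$ with $u_p(t) = U(t) = R\cos(\omega t - \delta)$, then
$$R = \frac{F_0}{\Delta}, \quad \cos\delta = \frac{m\left(\omega_0^2 - \omega^2\right)}{\Delta}, \quad \sin\delta = \frac{\gamma\omega}{\Delta}, \quad \Delta = \sqrt{m^2\left(\omega_0^2 - \omega^2\right)^2 + \gamma^2\omega^2}, \quad \omega_0^2 = \frac{k}{m}.$$
(See scanned notes at end for derivation)
Note that as $\omega \to 0$, $\cos\delta \to 1$ and $\sin\delta \to 0$, so $\delta \to 0$.
Note that when $\omega = \omega_0$, $\delta = \frac{\pi}{2}$.
Note that as $\omega \to \infty$, $\delta \to \pi$ (mass is out of phase with drive).

The amplitude of the steady state solution can be written as a function of all the parameters of the system. Using $m\omega_0^2 = k$ and defining $\Gamma = \frac{\gamma^2}{mk}$:
$$R = \frac{F_0}{\Delta} = \frac{F_0}{\sqrt{m^2\left(\omega_0^2 - \omega^2\right)^2 + \gamma^2\omega^2}} = \frac{F_0}{\sqrt{m^2\omega_0^4\left(1 - \frac{\omega^2}{\omega_0^2}\right)^2 + \gamma^2\omega^2}} = \frac{F_0/k}{\sqrt{\left(1 - \frac{\omega^2}{\omega_0^2}\right)^2 + \Gamma\frac{\omega^2}{\omega_0^2}}}$$
$$\frac{Rk}{F_0} = \frac{1}{\sqrt{\left(1 - \frac{\omega^2}{\omega_0^2}\right)^2 + \Gamma\frac{\omega^2}{\omega_0^2}}}$$
Notice that $\frac{Rk}{F_0}$ is dimensionless (but proportional to the amplitude of the motion), since $\frac{F_0}{k}$ is the distance a force of $F_0$ would stretch a spring with spring constant $k$.
Notice that $\Gamma$ is dimensionless: $\frac{\gamma^2}{mk}$ has units $\frac{(\text{mass}/\text{time})^2}{\text{mass}\cdot\text{mass}/\text{time}^2} = 1$.
Note that as $\omega \to 0$, $\frac{Rk}{F_0} \to 1$, so $R \to \frac{F_0}{k}$.
Note that as $\omega \to \infty$, $R \to 0$ (i.e., the drive is so fast that the system cannot respond to it and so it remains stationary).

The frequency that generates the largest amplitude response is found from $\frac{d}{d\omega}\left(\frac{Rk}{F_0}\right) = 0$, which requires the derivative of the expression under the square root to vanish:
$$-2\left(1 - \frac{\omega^2}{\omega_0^2}\right)\frac{2\omega}{\omega_0^2} + \frac{2\Gamma\omega}{\omega_0^2} = 0 \quad\Rightarrow\quad \omega = 0 \ \text{ or } \ 1 - \frac{\omega^2}{\omega_0^2} = \frac{\Gamma}{2}$$
$$\omega_{\max}^2 = \omega_0^2\left(1 - \frac{\Gamma}{2}\right) = \omega_0^2\left(1 - \frac{\gamma^2}{2mk}\right) = \omega_0^2 - \frac{\gamma^2}{2m^2}$$
Plugging this value of the frequency into the amplitude formula gives us:
$$R_{\max} = \frac{F_0}{\gamma\,\omega_0\sqrt{1 - \frac{\gamma^2}{4mk}}}$$
If $\frac{\gamma^2}{2mk} > 1$, then the maximum value of $R$ occurs for $\omega = 0$.
Resonance is the name for the phenomenon when the amplitude grows very large because the damping is relatively small and the drive frequency is close to the undriven frequency of oscillation of the system.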
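
The steady-state amplitude formulas above can be sketched numerically as follows. The function names and the sample parameter values (m = 1, γ = 0.5, k = 4, F0 = 1) are assumptions chosen only to illustrate the formulas.

```python
import math

def amplitude(omega, m, gamma, k, F0):
    """Steady-state amplitude R = F0 / sqrt(m^2 (w0^2 - w^2)^2 + gamma^2 w^2)."""
    w0_sq = k / m
    return F0 / math.sqrt(m**2 * (w0_sq - omega**2) ** 2 + gamma**2 * omega**2)

def omega_max(m, gamma, k):
    """Drive frequency of largest response: w_max^2 = w0^2 - gamma^2 / (2 m^2)."""
    value = k / m - gamma**2 / (2 * m**2)
    # If the damping is too large, the maximum occurs at omega = 0.
    return math.sqrt(value) if value > 0 else 0.0

def r_max(m, gamma, k, F0):
    """Peak amplitude R_max = F0 / (gamma * w0 * sqrt(1 - gamma^2 / (4 m k)))."""
    w0 = math.sqrt(k / m)
    return F0 / (gamma * w0 * math.sqrt(1 - gamma**2 / (4 * m * k)))

# Assumed sample parameters for a lightly damped system.
m, gamma, k, F0 = 1.0, 0.5, 4.0, 1.0
w_peak = omega_max(m, gamma, k)
peak_from_scan = amplitude(w_peak, m, gamma, k, F0)
peak_from_formula = r_max(m, gamma, k, F0)
```

Evaluating `amplitude` at `w_peak` should agree with `r_max`, and `amplitude(0, ...)` should reduce to the static stretch $F_0/k$.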

Forced Vibrations Without Damping
The equation of motion of an undamped forced oscillator is: $mu'' + ku = F_0\cos(\omega t)$
When $\omega \neq \omega_0$ (non-resonant case), the solution is of the form:
$$u(t) = c_1\cos(\omega_0 t) + c_2\sin(\omega_0 t) + \frac{F_0}{m\left(\omega_0^2 - \omega^2\right)}\cos(\omega t), \qquad \omega_0^2 = \frac{k}{m}$$
When $\omega = \omega_0$ (resonant case), the solution is of the form:
$$u(t) = c_1\cos(\omega_0 t) + c_2\sin(\omega_0 t) + \frac{F_0}{2m\omega_0}\,t\sin(\omega_0 t)$$
For the non-resonant case with initial conditions $u(0) = 0$, $u'(0) = 0$, we get $c_1 = -\frac{F_0}{m\left(\omega_0^2 - \omega^2\right)}$, $c_2 = 0$, and
$$u(t) = \frac{F_0}{m\left(\omega_0^2 - \omega^2\right)}\left(\cos(\omega t) - \cos(\omega_0 t)\right),$$
which can be written as
$$u(t) = \left[\frac{2F_0}{m\left(\omega_0^2 - \omega^2\right)}\sin\frac{(\omega_0 - \omega)t}{2}\right]\sin\frac{(\omega_0 + \omega)t}{2}.$$

Section 4.1: General Theory of nth Order Linear Equations

Usual form of an nth order linear differential equation:
$$P_0(t)\frac{d^n y}{dt^n} + P_1(t)\frac{d^{n-1}y}{dt^{n-1}} + \cdots + P_{n-1}(t)\frac{dy}{dt} + P_n(t)\,y = G(t)$$
Assumptions: The functions $P_0(t), \ldots, P_n(t), G(t)$ are continuous and real-valued on some interval $I: \alpha < t < \beta$, and $P_0(t) \neq 0$ for any $t \in I$.
Linear differential operator form:
$$L[y] = \frac{d^n y}{dt^n} + p_1(t)\frac{d^{n-1}y}{dt^{n-1}} + \cdots + p_{n-1}(t)\frac{dy}{dt} + p_n(t)\,y = g(t)$$
Notes:
Solving an nth order equation ostensibly requires n integrations.
This implies n constants of integration.
It also implies n initial conditions to completely specify an IVP: $y(t_0) = y_0,\ y'(t_0) = y_0',\ \ldots,\ y^{(n-1)}(t_0) = y_0^{(n-1)}$

Theorem 4.1.1: Existence and Uniqueness Theorem (for nth-Order Linear Differential Equations)
If the functions $p_1, \ldots, p_n$, and $g$ are continuous on the open interval $I$, then there exists exactly one solution $y = \phi(t)$ of the differential equation
$$\frac{d^n y}{dt^n} + p_1(t)\frac{d^{n-1}y}{dt^{n-1}} + \cdots + p_{n-1}(t)\frac{dy}{dt} + p_n(t)\,y = g(t)$$
that also satisfies the initial conditions $y(t_0) = y_0,\ y'(t_0) = y_0',\ \ldots,\ y^{(n-1)}(t_0) = y_0^{(n-1)}$. This solution exists throughout the interval $I$.

The Homogeneous Equation:
$$L[y] = y^{(n)} + p_1(t)\,y^{(n-1)} + \cdots + p_{n-1}(t)\,y' + p_n(t)\,y = 0$$
Notes:
If the functions $y_1, y_2, \ldots, y_n$ are solutions, then so is a linear combination of them, $y(t) = c_1 y_1(t) + c_2 y_2(t) + \cdots + c_n y_n(t)$.
To satisfy the initial conditions, we get n equations in n unknowns:
$$\begin{aligned} c_1 y_1(t_0) + c_2 y_2(t_0) + \cdots + c_n y_n(t_0) &= y_0 \\ c_1 y_1'(t_0) + c_2 y_2'(t_0) + \cdots + c_n y_n'(t_0) &= y_0' \\ &\ \,\vdots \\ c_1 y_1^{(n-1)}(t_0) + c_2 y_2^{(n-1)}(t_0) + \cdots + c_n y_n^{(n-1)}(t_0) &= y_0^{(n-1)} \end{aligned}$$
This system will have a solution for $c_1, c_2, \ldots, c_n$ provided the determinant of the matrix of coefficients is not zero (i.e., Cramer’s Rule again). In other words, the Wronskian is nonzero, just like for second-order equations:
$$W(y_1, y_2, \ldots, y_n) = \begin{vmatrix} y_1 & y_2 & \cdots & y_n \\ y_1' & y_2' & \cdots & y_n' \\ \vdots & \vdots & & \vdots \\ y_1^{(n-1)} & y_2^{(n-1)} & \cdots & y_n^{(n-1)} \end{vmatrix} \neq 0$$
Note that a slightly modified form of Abel’s Theorem still applies: $W(y_1, y_2, \ldots, y_n)(t) = c\,\exp\left(-\int p_1(t)\,dt\right)$

Theorem 4.1.2:
If the functions $p_1, \ldots, p_n$ are continuous on the open interval $I$, if the functions $y_1, y_2, \ldots, y_n$ are solutions of $y^{(n)} + p_1(t)\,y^{(n-1)} + \cdots + p_{n-1}(t)\,y' + p_n(t)\,y = 0$, and if $W(y_1, y_2, \ldots, y_n)(t) \neq 0$ for at least one point in $I$, then every solution of the differential equation can be written as a linear combination of $y_1, y_2, \ldots, y_n$.
Notes:
$y(t) = c_1 y_1(t) + c_2 y_2(t) + \cdots + c_n y_n(t)$ is called the general solution of $y^{(n)} + p_1(t)\,y^{(n-1)} + \cdots + p_{n-1}(t)\,y' + p_n(t)\,y = 0$.
$y_1, y_2, \ldots, y_n$ are said to form a fundamental set of solutions.

Linear Dependence and Independence:
The functions $f_1, f_2, \ldots, f_n$ are said to be linearly dependent on an interval $I$ if there exist constants $k_1, k_2, \ldots, k_n$, NOT ALL ZERO, such that $k_1 f_1(t) + k_2 f_2(t) + \cdots + k_n f_n(t) = 0$ FOR ALL $t \in I$.
The functions $f_1, f_2, \ldots, f_n$ are said to be linearly independent on $I$ if they are not linearly dependent there.

Theorem 4.1.3:
If $y_1, y_2, \ldots, y_n$ is a fundamental set of solutions to $y^{(n)} + p_1(t)\,y^{(n-1)} + \cdots + p_{n-1}(t)\,y' + p_n(t)\,y = 0$ on an interval $I$, then $y_1, y_2, \ldots, y_n$ are linearly independent on $I$. Conversely, if $y_1, y_2, \ldots, y_n$ are linearly independent solutions of the equation, then they form a fundamental set of solutions on $I$.

The Nonhomogeneous Equation:
$$L[y] = y^{(n)} + p_1(t)\,y^{(n-1)} + \cdots + p_{n-1}(t)\,y' + p_n(t)\,y = g(t)$$
Notes:
If $Y_1(t)$ and $Y_2(t)$ are solutions of the nonhomogeneous equation, then $L[Y_1 - Y_2](t) = L[Y_1](t) - L[Y_2](t) = g(t) - g(t) = 0$. I.e., the difference of any two solutions of the nonhomogeneous equation is a solution of the homogeneous equation.
So, the general solution of the nonhomogeneous equation is $y(t) = c_1 y_1(t) + c_2 y_2(t) + \cdots + c_n y_n(t) + Y(t)$, where $Y(t)$ is a particular solution of the nonhomogeneous equation.
We will see that the methods of undetermined coefficients and reduction of order can be extended from second-order equations to nth-order equations.

Section 4.2: Homogeneous Equations with Constant Coefficients

For equations of the form $L[y] = a_0 y^{(n)} + a_1 y^{(n-1)} + \cdots + a_{n-1}y' + a_n y = 0$, “it is natural to anticipate that” $y = e^{rt}$ is a solution for correct values of $r$. Under this anticipation,
$$L\left[e^{rt}\right] = e^{rt}\left(a_0 r^n + a_1 r^{n-1} + \cdots + a_{n-1}r + a_n\right) = e^{rt}Z(r) = 0,$$
where the polynomial $Z(r) = a_0 r^n + a_1 r^{n-1} + \cdots + a_{n-1}r + a_n$ is called the characteristic polynomial, and $Z(r) = 0$ is called the characteristic equation.
Recall that a polynomial of degree n has n zeros (counting multiplicity), and thus the characteristic polynomial can be written as $Z(r) = a_0(r - r_1)(r - r_2)\cdots(r - r_n)$.

Practice: All roots are real and unequal…
$y(t) = c_1 e^{r_1 t} + c_2 e^{r_2 t} + \cdots + c_n e^{r_n t}$

Practice: Some roots are complex…
Recall that if a polynomial has real coefficients, then it can be factored into linear and irreducible quadratic factors. The irreducible quadratic factors have complex conjugate roots $\lambda \pm i\mu$, which correspond to the solutions $e^{\lambda t}\cos(\mu t)$ and $e^{\lambda t}\sin(\mu t)$.

Practice: Some roots are repeated…
If a root $r_1$ is repeated $s$ times, then that repeated root will generate $s$ solutions: $e^{r_1 t},\ te^{r_1 t},\ t^2 e^{r_1 t},\ \ldots,\ t^{s-1}e^{r_1 t}$
The same applies if the repeated roots are complex.
Section 4.3: The Method of Undetermined Coefficients If the nonhomogeneous term g(t) of the linear nth order differential equation with constant coefficients L y a0 y n a1 y n1 an1 y an y g t is of an “appropriate form” (i.e., a sinusoidal, polynomial, or exponential function), then the method of undetermined coefficients can be used to find the particular solution. Recall that if any term of the proposed particular solution replicates a term of the homogeneous solution, then the entire particular solution must be multiplied by a sufficient number of factors of t to eliminate the replication. Section 4.4: The Method of Variation of Parameters Big Idea: The method of variation of parameters for determining a particular solution of the nonhomogeneous nth order linear differential equation L y y n p1 t y n1 pn1 t y pn t y g t is a direct extension of the method for second-order differential equations in section 3.6. The basic idea is that once the homogeneous solution is determined, yh t c1 y1 t c2 y2 t cn yn t Then the particular solution is of the form y p t u1 t y1 t u2 t y2 t un t yn t with the (n – 1) additional criteria u1 t y1 t u2 t y2 t un t yn t 0, u1 t y1 t u2 t y2 t un t yn t 0, u1 t y1 t u2 t y2 t un t yn t 0, u1 t y1 n 2 t u2 t y2 n 2 t This results in the system of equations: un t yn n 2 t 0. ODE FINAL EXAM Review y1 t u1 t y2 t u2 t Page 25 of 56 yn t un t 0, y1 t u1 t y2 t u2 t yn t un t 0, y1 t u1 t y2 t u2 t yn t un t 0, y1 n 2 t u1 t y2 n 2 t u2 t yn n 2 t un t 0, y1 n 1 t u1 t y2 n 1 t u2 t yn n 1 t un t g t . The solution for any one function um(t) is: g t Wm t um t , where W(t) is the Wronskian W t W y1 , y2 , , yn t , and Wm(t) is the W t determinant obtained from W by replacing the mth column with the column [0, 0, …, 1]. n t m 1 t0 Thus, y p t ym t g s Wm s ds . W s ODE FINAL EXAM Review Page 26 of 56 Section 5.1: Review of Power Series 1. 
Definition of convergence of a power series: A power series $\sum_{n=0}^{\infty} a_n (x - x_0)^n$ is said to converge at a point x if $\lim_{m\to\infty} \sum_{n=0}^{m} a_n (x - x_0)^n$ exists for that x.
2. Definition of absolute convergence of a power series: A power series $\sum_{n=0}^{\infty} a_n (x - x_0)^n$ is said to converge absolutely at a point x if $\sum_{n=0}^{\infty} \left|a_n (x - x_0)^n\right|$ converges.
   a. Absolute convergence implies convergence…
3. Ratio test: If, for a fixed value of x,
   $\lim_{n\to\infty} \left|\dfrac{a_{n+1}(x - x_0)^{n+1}}{a_n (x - x_0)^n}\right| = |x - x_0| \lim_{n\to\infty} \left|\dfrac{a_{n+1}}{a_n}\right| = |x - x_0|\,L$,
   then the power series converges absolutely at that value of x if $|x - x_0|\,L < 1$, and diverges if $|x - x_0|\,L > 1$. If $|x - x_0|\,L = 1$, then the test is inconclusive.
4. If $\sum_{n=0}^{\infty} a_n (x - x_0)^n$ converges at $x = x_1$, it converges absolutely for $|x - x_0| < |x_1 - x_0|$, and if it diverges at $x = x_1$, it diverges for $|x - x_0| > |x_1 - x_0|$.
5. Radius and interval of convergence: The radius of convergence $\rho$ is a nonnegative number such that $\sum_{n=0}^{\infty} a_n (x - x_0)^n$ converges absolutely for $|x - x_0| < \rho$ and diverges for $|x - x_0| > \rho$.
   a. Series that converge only when $x = x_0$ are said to have $\rho = 0$.
   b. Series that converge for all x are said to have $\rho = \infty$.
   c. If $\rho > 0$, then the interval of convergence of the series is $|x - x_0| < \rho$.
Given that $\sum_{n=0}^{\infty} a_n (x - x_0)^n$ and $\sum_{n=0}^{\infty} b_n (x - x_0)^n$ converge for $|x - x_0| < \rho$ …
6. Sum of series: $\sum_{n=0}^{\infty} a_n (x - x_0)^n \pm \sum_{n=0}^{\infty} b_n (x - x_0)^n = \sum_{n=0}^{\infty} (a_n \pm b_n)(x - x_0)^n$
7. Product and Quotient of series:
   $\left[\sum_{n=0}^{\infty} a_n (x - x_0)^n\right]\left[\sum_{n=0}^{\infty} b_n (x - x_0)^n\right]$
   $= \left[a_0 + a_1(x - x_0) + a_2(x - x_0)^2 + \cdots\right]\left[b_0 + b_1(x - x_0) + b_2(x - x_0)^2 + \cdots\right]$
   $= a_0 b_0 + (a_0 b_1 + a_1 b_0)(x - x_0) + (a_0 b_2 + a_1 b_1 + a_2 b_0)(x - x_0)^2 + (a_0 b_3 + a_1 b_2 + a_2 b_1 + a_3 b_0)(x - x_0)^3 + \cdots$
   $= \sum_{n=0}^{\infty} \left(\sum_{k=0}^{n} a_k b_{n-k}\right)(x - x_0)^n$
   To do a quotient, can write as a multiplication and equate terms:
   $\dfrac{\sum_{n=0}^{\infty} a_n (x - x_0)^n}{\sum_{n=0}^{\infty} b_n (x - x_0)^n} = \sum_{n=0}^{\infty} d_n (x - x_0)^n \;\Rightarrow\; \left[\sum_{n=0}^{\infty} d_n (x - x_0)^n\right]\left[\sum_{n=0}^{\infty} b_n (x - x_0)^n\right] = \sum_{n=0}^{\infty} a_n (x - x_0)^n$
   OR can do long division…
8. Derivatives of a series:
   $\dfrac{d}{dx} \sum_{n=0}^{\infty} a_n (x - x_0)^n = \sum_{n=1}^{\infty} n\,a_n (x - x_0)^{n-1}$
   $\dfrac{d^2}{dx^2} \sum_{n=0}^{\infty} a_n (x - x_0)^n = \sum_{n=2}^{\infty} n(n-1)\,a_n (x - x_0)^{n-2}$
9.
Taylor series: $\sum_{n=0}^{\infty} \dfrac{f^{(n)}(x_0)}{n!}(x - x_0)^n$ is the Taylor series for a function f(x) about the point $x = x_0$.
10. Equality of series: every corresponding term is equal…
11. Analytic functions: have a convergent Taylor series with non-zero radius of convergence about some point $x = x_0$.
12. Shift of index of summation…

Section 5.2: Series Solutions Near an Ordinary Point, Part I
Will consider homogeneous equations of the form $P(x)\dfrac{d^2y}{dx^2} + Q(x)\dfrac{dy}{dx} + R(x)\,y = 0$ where the coefficients are polynomials, like:
- The Bessel equation: $x^2 y'' + x y' + (x^2 - \nu^2)\,y = 0$
- The Legendre equation: $(1 - x^2)\,y'' - 2x y' + \alpha(\alpha + 1)\,y = 0$
Ordinary point: a point $x_0$ such that $P(x_0) \neq 0$. Near an ordinary point the equation can be divided by P(x) and written as $y'' + p(x)\,y' + q(x)\,y = 0$.
Singular point: a point $x_0$ such that $P(x_0) = 0$. p(x) or q(x) becomes unbounded (see sections 5.4 - 5.7).
To solve a differential equation near an ordinary point using a power series technique:
- Assume a power series form of the solution $y(x) = \sum_{n=0}^{\infty} a_n (x - x_0)^n$ which converges in the interval $|x - x_0| < \rho$.
- Plug the power series into the equation and equate like terms.
- Obtain a recurrence relation for the coefficients of higher-order terms in terms of earlier coefficients.
- If possible, try to find the general term, which is an explicit function for the coefficients in terms of the index of summation.
- Write the final answer in terms of those coefficients.
- Check the radius of convergence.

Section 5.3: Series Solutions Near an Ordinary Point, Part II
To justify the statement that a solution of $P(x)\,y'' + Q(x)\,y' + R(x)\,y = 0$ (equivalently, $y'' + p(x)\,y' + q(x)\,y = 0$) can be written as $y(x) = \sum_{n=0}^{\infty} a_n (x - x_0)^n$, we must be able to compute $m!\,a_m = y^{(m)}(x_0)$ for any order derivative m based only on the information given by the IVP.
Note: So it seems that p and q need to at least be infinitely differentiable at $x_0$, but in addition they also need to be analytic at $x_0$. In other words, they must have Taylor series expansions that converge in some interval about $x_0$.
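The power-series procedure of Section 5.2 can be sketched numerically. The example below uses $y'' + y = 0$ about $x_0 = 0$ (an equation chosen here for illustration; it is not one of the sheet's examples). Substituting $y = \sum a_n x^n$ and equating like powers gives the recurrence $a_{n+2} = -a_n / [(n+2)(n+1)]$, with $a_0 = y(0)$ and $a_1 = y'(0)$ supplied by the IVP.

```python
import math


def series_coefficients(a0, a1, n_terms):
    """Coefficients a_0 .. a_{n_terms-1} from a_{n+2} = -a_n / ((n+2)(n+1))."""
    a = [a0, a1]
    for n in range(n_terms - 2):
        a.append(-a[n] / ((n + 2) * (n + 1)))
    return a


def evaluate(a, x):
    """Partial sum of the power series sum a_n x^n."""
    return sum(c * x**n for n, c in enumerate(a))


# IVP y(0) = 1, y'(0) = 0, whose exact solution is cos(x)
coeffs = series_coefficients(1.0, 0.0, 30)
print(evaluate(coeffs, 1.0), math.cos(1.0))
```

Because the coefficients of this equation are constants (analytic everywhere), the series converges for all x, so the partial sum can be compared against `math.cos` at any point.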
Theorem 5.3.1 (due to Fuchs)
If $x_0$ is an ordinary point of the differential equation $P(x)\,y'' + Q(x)\,y' + R(x)\,y = 0$ (i.e., $p = Q/P$ and $q = R/P$ are analytic at $x_0$), then its general solution is
$y = \sum_{n=0}^{\infty} a_n (x - x_0)^n = a_0 y_1(x) + a_1 y_2(x)$,
where $a_0$ and $a_1$ are arbitrary, and $y_1$ and $y_2$ are two power series solutions that are analytic at $x_0$. The solutions $y_1$ and $y_2$ form a fundamental set of solutions. Also, the radius of convergence of $y_1$ and $y_2$ is at least as large as the minimum of the radii of convergence of p and q.
Note: From the theory of complex-valued rational expressions, it turns out that the radius of convergence of a power series of a rational expression about a point $x_0$ is the distance from $x_0$ to the nearest zero of the (complex-valued) denominator.

Section 5.4: Euler Equations; Regular Singular Points
$P(x)\,y'' + Q(x)\,y' + R(x)\,y = 0$
Singular points are $x = x_0$ such that $P(x_0) = 0$.
Euler equations have singular points at x = 0: $x^2 y'' + \alpha x y' + \beta y = 0$
To solve Euler equations, assume a solution of the form $y = x^r$; then r must satisfy $r(r - 1) + \alpha r + \beta = 0$.
- When the roots are real and distinct, the two fundamental solutions $x^{r_1}$ and $x^{r_2}$ are obtained directly.
- When the roots are repeated, multiply the first fundamental solution by ln(x) to obtain the second fundamental solution.
- When the roots are complex, $r = \lambda \pm i\mu$, the fundamental solutions are $y_1 = x^{\lambda}\cos(\mu \ln x)$ and $y_2 = x^{\lambda}\sin(\mu \ln x)$.
In summary, the solutions of $x^2 y'' + \alpha x y' + \beta y = 0$ for x > 0 are:
- $y = c_1 x^{r_1} + c_2 x^{r_2}$, given $r_1$ and $r_2$ are distinct real roots of $r(r - 1) + \alpha r + \beta = 0$
- $y = (c_1 + c_2 \ln x)\,x^{r_1}$, given $r_1$ is a real double root of $r(r - 1) + \alpha r + \beta = 0$
- $y = x^{\lambda}\left[c_1 \cos(\mu \ln x) + c_2 \sin(\mu \ln x)\right]$, given $\lambda \pm i\mu$ are complex roots of $r(r - 1) + \alpha r + \beta = 0$
For negative x values, one can make the substitution $x = -\xi$ with $\xi > 0$, and show that, for any $x \neq 0$, the solutions are:
- $y = c_1 |x|^{r_1} + c_2 |x|^{r_2}$
- $y = (c_1 + c_2 \ln|x|)\,|x|^{r_1}$
- $y = |x|^{\lambda}\left[c_1 \cos(\mu \ln|x|) + c_2 \sin(\mu \ln|x|)\right]$
We do not have a general theory of how to handle any possible singularity of $P(x)\,y'' + Q(x)\,y' + R(x)\,y = 0$.
So, for the next few sections, we will restrict the discussion to the power series solution of this type of equation near regular singular points. A regular singular point at $x = x_0$ is a singular point with the additional restrictions that
$\lim_{x\to x_0} (x - x_0)\dfrac{Q(x)}{P(x)}$ is finite, and $\lim_{x\to x_0} (x - x_0)^2\dfrac{R(x)}{P(x)}$ is finite.

Section 5.5: Series Solutions Near a Regular Singular Point, Part I
Big Idea: According to Frobenius, it is valid to assume a series solution of the form $y = (x - x_0)^r \sum_{n=0}^{\infty} a_n (x - x_0)^n$ to a second-order linear differential equation near a regular singular point $x = x_0$.
Recall: If $x = x_0$ is a regular singular point of the second-order linear equation $P(x)\,y'' + Q(x)\,y' + R(x)\,y = 0$, then
$\lim_{x\to x_0} (x - x_0)\dfrac{Q(x)}{P(x)} = \lim_{x\to x_0} (x - x_0)\,p(x)$ is finite and $\lim_{x\to x_0} (x - x_0)^2\dfrac{R(x)}{P(x)} = \lim_{x\to x_0} (x - x_0)^2\,q(x)$ is finite.
This means $(x - x_0)\,p(x) = \sum_{n=0}^{\infty} p_n (x - x_0)^n$ and $(x - x_0)^2\,q(x) = \sum_{n=0}^{\infty} q_n (x - x_0)^n$ are convergent for some radius about $x_0$. Thus, taking $x_0 = 0$, we can write the original equation as:
$x^2 y'' + x\left[x\,p(x)\right] y' + \left[x^2 q(x)\right] y = 0$
If all the coefficients $p_n$ and $q_n$ are zero except for $p_0$ and $q_0$, then the equation reduces to $x^2 y'' + p_0 x y' + q_0 y = 0$, which is an Euler equation. In fact, this is called the corresponding Euler equation even when the other coefficients $p_n$ and $q_n$ are not all zero, and the roots of its characteristic equation play a role in the solution; they are called "the exponents at the singularity."
Since the equation we are trying to solve looks like an equation with "Euler coefficients" times power series, we will look for a solution that is of the form of an "Euler solution" times a power series:
$y = (x - x_0)^r \sum_{n=0}^{\infty} a_n (x - x_0)^n = \sum_{n=0}^{\infty} a_n (x - x_0)^{r+n}$
As part of the solution, we must determine:
- The values of r that make this a valid solution.
- The recurrence relation for the coefficients $a_n$.
- The radius of convergence.
The theory behind a solution of this form is due to Frobenius.
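The exponents at the singularity are the roots of the characteristic equation of the corresponding Euler equation, $r(r - 1) + p_0 r + q_0 = 0$. A minimal sketch of that computation follows; the function name is an illustrative choice, and the sample values $p_0 = 1$, $q_0 = -\nu^2$ are what Bessel's equation $x^2 y'' + x y' + (x^2 - \nu^2) y = 0$ gives at $x = 0$.

```python
import cmath


def exponents_at_singularity(p0, q0):
    """Roots of r(r - 1) + p0*r + q0 = 0, i.e. r^2 + (p0 - 1) r + q0 = 0.

    The root with the larger real part is returned first.
    """
    b = p0 - 1
    disc = cmath.sqrt(b * b - 4 * q0)
    return (-b + disc) / 2, (-b - disc) / 2


# Bessel equation of order one-half: p0 = 1, q0 = -(1/2)^2
r1, r2 = exponents_at_singularity(1.0, -0.25)
print(r1, r2)

# The difference r1 - r2 decides which case of the Frobenius theory applies
print((r1 - r2).real)
```

The roots are returned as complex numbers so that the complex-exponent case is handled by the same formula; for real exponents the imaginary parts are zero.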
To solve a linear second-order equation near a regular singular point using the method of Frobenius:
- Identify singular points and verify they are regular.
- Assume a solution of $y = \sum_{n=0}^{\infty} a_n (x - x_0)^{r+n}$ and its derivatives:
  $y' = \sum_{n=0}^{\infty} a_n (r + n)(x - x_0)^{r+n-1}$, $\quad y'' = \sum_{n=0}^{\infty} a_n (r + n)(r + n - 1)(x - x_0)^{r+n-2}$
- Substitute the assumed solution and its derivatives into the given equation.
  o You may have to re-write coefficients in terms of $(x - x_0)$…
- Shift indices so that all series have $(x - x_0)^{r+n}$ in the general term.
- "Spend" any terms needed so that all series start at the same index value.
  o This should result in a term that looks like the characteristic equation of the corresponding Euler equation.
  o This is called the indicial equation.
  o The roots of the indicial equation are called the exponents of the singularity.
  o They are the same as the roots of the corresponding Euler equation.
- Set the coefficient of $(x - x_0)^{r+n}$ to zero to get the recurrence relation.
- Use the recurrence relation with each exponent to get the general term of the corresponding solution.
  o If the exponents of the singularity are equal or differ by an integer, then it is only valid to get the series solution for the larger root. (What to do for the second solution will be covered in 5.6.)
- Compute the radius of convergence for each solution.

5.6: Series Solutions Near a Regular Singular Point, Part II
To solve a linear second-order equation near a regular singular point using the method of Frobenius REGARDLESS OF THE EXPONENTS OF THE INDICIAL EQUATION:
- Identify singular points and verify they are regular.
- Find the exponents of the singularity by solving the corresponding Euler equation $x^2 y'' + p_0 x y' + q_0 y = 0$, where $p_0 = \lim_{x\to 0} x\dfrac{Q(x)}{P(x)}$ and $q_0 = \lim_{x\to 0} x^2\dfrac{R(x)}{P(x)}$, or by using the indicial equation.
- Assume a solution of $y = (x - x_0)^{r_1} \sum_{n=0}^{\infty} a_n (x - x_0)^n$.
- Substitute the assumed solution and its derivatives into the given equation.
- Shift indices so that all series have $(x - x_0)^{r+n}$ in the general term.
- "Spend" any terms needed so that all series start at the same index value.
  o This should result in a term with a factor that looks like the characteristic equation of the corresponding Euler equation; this is the indicial equation.
- Set the coefficients of $(x - x_0)^{r+n}$ to zero to get the recurrence relation.
- Get the second solution using the theorem below…

Theorem 5.6.1: General Solution of a Second Order Equation with Real Exponents of the Singularity near a Regular Singular Point
Consider the differential equation $x^2 y'' + x\left[x\,p(x)\right] y' + \left[x^2 q(x)\right] y = 0$, where x = 0 is a regular singular point. Then $x\,p(x)$ and $x^2 q(x)$ are analytic at x = 0 with convergent power series expansions $x\,p(x) = \sum_{n=0}^{\infty} p_n x^n$ and $x^2 q(x) = \sum_{n=0}^{\infty} q_n x^n$ for $|x| < \rho$, where $\rho > 0$ is the minimum of the radii of convergence for $x\,p(x)$ and $x^2 q(x)$. Let $r_1$ and $r_2$ be the roots of the indicial equation
$F(r) = r(r - 1) + p_0 r + q_0 = 0$,
with $r_1 \geq r_2$ if $r_1$ and $r_2$ are real. Then in either the interval $-\rho < x < 0$ or $0 < x < \rho$, there exists a solution of the form
$y_1(x) = |x|^{r_1}\left[1 + \sum_{n=1}^{\infty} a_n(r_1)\,x^n\right]$
where the $a_n(r_1)$ are given by the recurrence relation
$F(r + n)\,a_n + \sum_{k=0}^{n-1} a_k\left[(r + k)\,p_{n-k} + q_{n-k}\right] = 0$
with $a_0 = 1$ and $r = r_1$.
There are three cases for the second solution:
- If $r_1 - r_2$ is not zero or a positive integer, then in either the interval $-\rho < x < 0$ or $0 < x < \rho$, there exists a second solution of the form
  $y_2(x) = |x|^{r_2}\left[1 + \sum_{n=1}^{\infty} a_n(r_2)\,x^n\right]$.
  The $a_n(r_2)$ are determined by the same recurrence relation as the $a_n(r_1)$, with $a_0 = 1$ and $r = r_2$. These power series solutions converge for at least $|x| < \rho$.
- If $r_1 = r_2$, then the second solution is of the form
  $y_2(x) = y_1(x)\ln|x| + |x|^{r_1}\sum_{n=1}^{\infty} b_n(r_1)\,x^n$.
- If $r_1 - r_2 = N$, a positive integer, then the second solution is of the form
  $y_2(x) = a\,y_1(x)\ln|x| + |x|^{r_2}\left[1 + \sum_{n=1}^{\infty} c_n(r_2)\,x^n\right]$.
The coefficients $a_n(r_1)$, $b_n(r_1)$, $c_n(r_2)$ and the constant a can be determined by substituting the form of the series solution for $y_2$ into the original differential equation. The constant a may turn out to be zero.
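Each of these series converges at least for $|x| < \rho$ and defines an analytic function near x = 0. In all three cases, the two solutions form a fundamental set of solutions.

Section 5.7: Bessel's Equation
Bessel's Equation: $x^2 y'' + x y' + (x^2 - \nu^2)\,y = 0$
- x = 0 is a regular singular point.
- The roots of the indicial equation are $\pm\nu$.
- The value of $\nu$ is called the "order" of the equation.
- The first solution for a given value of $\nu$ is called the "Bessel function of the first kind of order $\nu$," and is denoted by $J_\nu(x)$.
- The second solution for a given value of $\nu$ is called the "Bessel function of the second kind of order $\nu$," and is denoted by $Y_\nu(x)$.

Bessel Equation of Order Zero (i.e., $\nu$ = 0): $x^2 y'' + x y' + x^2 y = 0$
- The roots of the indicial equation are $r_1 = r_2 = 0$.
- $y_1(x) = a_0\left[1 + \sum_{m=1}^{\infty} \dfrac{(-1)^m x^{2m}}{2^{2m}(m!)^2}\right]$, and $J_0(x) = 1 + \sum_{m=1}^{\infty} \dfrac{(-1)^m x^{2m}}{2^{2m}(m!)^2}$
- $J_0(x) \to 1$ as $x \to 0$.
- $J_0(x) \approx \sqrt{\dfrac{2}{\pi x}}\cos\left(x - \dfrac{\pi}{4}\right)$ as $x \to \infty$.
- $y_2(x) = J_0(x)\ln x + \sum_{m=1}^{\infty} \dfrac{(-1)^{m+1} H_m}{2^{2m}(m!)^2}\,x^{2m}$, x > 0
  o $H_m = 1 + \dfrac{1}{2} + \dfrac{1}{3} + \cdots + \dfrac{1}{m} = \sum_{k=1}^{m} \dfrac{1}{k}$; i.e., a partial sum of the harmonic series up to m.
- The traditional Bessel function of the second kind of order zero is a linear combination of $y_2(x)$ and $J_0(x)$:
  $Y_0(x) = \dfrac{2}{\pi}\left[y_2(x) + (\gamma - \ln 2)\,J_0(x)\right]$
  $Y_0(x) = \dfrac{2}{\pi}\left[\left(\gamma + \ln\dfrac{x}{2}\right) J_0(x) + \sum_{m=1}^{\infty} \dfrac{(-1)^{m+1} H_m}{2^{2m}(m!)^2}\,x^{2m}\right]$
  o $\gamma$ is the "Euler-Mascheroni" constant: $\gamma = \lim_{n\to\infty}(H_n - \ln n) \approx 0.577\,215\,665$
- $Y_0(x) \approx \dfrac{2}{\pi}\ln x$ as $x \to 0$.
- $Y_0(x) \approx \sqrt{\dfrac{2}{\pi x}}\sin\left(x - \dfrac{\pi}{4}\right)$ as $x \to \infty$.

Bessel Equation of Order One-Half (i.e., $\nu$ = ½): $x^2 y'' + x y' + \left(x^2 - \dfrac{1}{4}\right) y = 0$
- The roots of the indicial equation are $r_1 = +\tfrac{1}{2}$, $r_2 = -\tfrac{1}{2}$.
- $y_1(x) = x^{1/2}\left[1 + \sum_{m=1}^{\infty} \dfrac{(-1)^m x^{2m}}{(2m+1)!}\right] = x^{-1/2}\sum_{m=0}^{\infty} \dfrac{(-1)^m x^{2m+1}}{(2m+1)!} = x^{-1/2}\sin x$
- By convention, $J_{1/2}(x) = \sqrt{\dfrac{2}{\pi}}\;y_1(x) = \sqrt{\dfrac{2}{\pi x}}\sin x$, x > 0.
- $y_2(x) = x^{-1/2}\left[a_0\sum_{n=0}^{\infty} \dfrac{(-1)^n x^{2n}}{(2n)!} + a_1\sum_{n=0}^{\infty} \dfrac{(-1)^n x^{2n+1}}{(2n+1)!}\right] = a_0\dfrac{\cos x}{x^{1/2}} + a_1\dfrac{\sin x}{x^{1/2}}$
- The second solution of the Bessel equation of order one-half is conventionally taken to be $J_{-1/2}(x) = \sqrt{\dfrac{2}{\pi x}}\cos x$, x > 0.

Bessel Equation of Order One (i.e., $\nu$ = 1): $x^2 y'' + x y' + (x^2 - 1)\,y = 0$
- The roots of the indicial equation are $r_1 = 1$, $r_2 = -1$.
- $J_1(x) = \dfrac{x}{2}\sum_{m=0}^{\infty} \dfrac{(-1)^m x^{2m}}{2^{2m}(m+1)!\,m!} = \sum_{m=0}^{\infty} \dfrac{(-1)^m}{(m+1)!\,m!}\left(\dfrac{x}{2}\right)^{2m+1}$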
  (Note: $J_1(x) = \tfrac{1}{2}\,y_1(x)$ so that the series can be written as powers of (x/2)…)
- $J_1(x) \to 0$ as $x \to 0$.
- $J_1(x) \approx \sqrt{\dfrac{2}{\pi x}}\cos\left(x - \dfrac{3\pi}{4}\right)$ as $x \to \infty$.
- $y_2(x) = -J_1(x)\ln x + \dfrac{1}{x}\left[1 - \sum_{m=1}^{\infty} \dfrac{(-1)^m (H_m + H_{m-1})}{2^{2m}\,m!\,(m-1)!}\,x^{2m}\right]$, x > 0
- The traditional Bessel function of the second kind of order one is:
  $Y_1(x) = \dfrac{2}{\pi}\left[-y_2(x) + (\gamma - \ln 2)\,J_1(x)\right]$
- $Y_1(x) \approx -\dfrac{2}{\pi x}$ as $x \to 0$.
- $Y_1(x) \approx \sqrt{\dfrac{2}{\pi x}}\sin\left(x - \dfrac{3\pi}{4}\right)$ as $x \to \infty$.

Bessel Equations of Higher Positive Integer Order (i.e., $\nu$ = positive integer, $\nu$ > 1): $x^2 y'' + x y' + (x^2 - \nu^2)\,y = 0$
- The roots of the indicial equation are $r_1 = \nu$, $r_2 = -\nu$.
- $J_\nu(x) = \sum_{m=0}^{\infty} \dfrac{(-1)^m}{m!\,(m+\nu)!}\left(\dfrac{x}{2}\right)^{2m+\nu}$
- $J_\nu(x) \to 0$ as $x \to 0$.
- $J_\nu(x) \approx \sqrt{\dfrac{2}{\pi x}}\cos\left(x - \dfrac{(2\nu+1)\pi}{4}\right)$ as $x \to \infty$.
  Weisstein, Eric W. "Bessel Function of the First Kind." From MathWorld--A Wolfram Web Resource. http://mathworld.wolfram.com/BesselFunctionoftheFirstKind.html
- $Y_\nu(x) = \dfrac{J_\nu(x)\cos(\nu\pi) - J_{-\nu}(x)}{\sin(\nu\pi)}$ (taken as a limit when $\nu$ is an integer)
- $Y_\nu(x) \approx -\dfrac{(\nu-1)!}{\pi}\left(\dfrac{2}{x}\right)^{\nu}$ as $x \to 0$.
- $Y_\nu(x) \approx \sqrt{\dfrac{2}{\pi x}}\sin\left(x - \dfrac{(2\nu+1)\pi}{4}\right)$ as $x \to \infty$.
  Weisstein, Eric W. "Bessel Function of the Second Kind." From MathWorld--A Wolfram Web Resource. http://mathworld.wolfram.com/BesselFunctionoftheSecondKind.html

Section 6.1: Definition of the Laplace Transform
Review of Improper Integrals (Calc 2):
If f is continuous on the interval $[a, \infty)$, then the improper integral $\int_a^{\infty} f(t)\,dt$ is defined as:
$\int_a^{\infty} f(t)\,dt = \lim_{A\to\infty} \int_a^{A} f(t)\,dt$.
If the limit exists as $A \to \infty$, then the integral is said to converge. Otherwise, the integral diverges.
Piecewise Continuous Functions:
A function f is piecewise continuous on an interval $\alpha \leq t \leq \beta$ if the interval can be partitioned by points $\alpha = t_0 < t_1 < \cdots < t_n = \beta$ such that
- f is continuous on each open subinterval $t_{i-1} < t < t_i$, and
- the limits of f as the endpoints are approached from within each subinterval are finite.
Upshot: f is continuous everywhere except at a finite number of jump discontinuities.
To integrate a piecewise continuous function:
$\int_{\alpha}^{\beta} f(t)\,dt = \int_{\alpha}^{t_1} f(t)\,dt + \int_{t_1}^{t_2} f(t)\,dt + \cdots + \int_{t_{n-1}}^{\beta} f(t)\,dt$
It can be difficult to tell if an improper integral of a given piecewise continuous function converges when the antiderivative can't be written in terms of elementary functions…

Theorem 6.1.1: Integral Comparison Test for Convergence and Divergence
If f is piecewise continuous for $t \geq a$, and if $|f(t)| \leq g(t)$ when $t \geq M$ for some positive constant M, and if $\int_M^{\infty} g(t)\,dt$ converges, then $\int_a^{\infty} f(t)\,dt$ also converges. However, if $f(t) \geq g(t) \geq 0$ for $t \geq M$, and if $\int_M^{\infty} g(t)\,dt$ diverges, then $\int_a^{\infty} f(t)\,dt$ also diverges.

Integral Transforms:
An integral transform, in general, is a relation of the form $F(s) = \int_{\alpha}^{\beta} K(s, t)\,f(t)\,dt$, where K is a given function, called the kernel of the transformation. Alpha and beta need not be finite numbers. Note that an integral transform transforms a function f into another function F, called the "transform of f."

The Laplace Transform:
…has a kernel of $e^{-st}$.
…is defined by $\mathcal{L}\{f(t)\} = F(s) = \int_0^{\infty} e^{-st} f(t)\,dt$
…we will use the Laplace transform to:
- Transform an initial value problem for an unknown function f in the t domain to an algebraic problem for an unknown function F in the s domain.
- Solve the resulting algebraic problem to find F.
- Recover f from F by "inverting the transform."

Theorem 6.1.2: Criteria for a Laplace Transform to Exist
Suppose that:
1. f is piecewise continuous on the interval $0 \leq t \leq A$ for any positive A.
2. $|f(t)| \leq K e^{at}$ when $t \geq M$ for some real, positive constants K and M, and a real a.
Then the Laplace transform $\mathcal{L}\{f(t)\} = F(s) = \int_0^{\infty} e^{-st} f(t)\,dt$ exists for $s > a$.
Functions that satisfy the hypotheses of theorem 6.1.2 are described as being "of exponential order" as $t \to \infty$. $f(t) = e^{t^2}$ is not of exponential order.
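The defining integral can be checked numerically for functions of exponential order. The sketch below approximates $\int_0^{\infty} e^{-st} f(t)\,dt$ by truncating at a finite upper limit and applying the composite Simpson rule; the function name, the truncation point, and the step count are assumptions made for this illustration, not part of the review sheet.

```python
import math


def laplace_numeric(f, s, upper=60.0, steps=60000):
    """Approximate the Laplace transform of f at s.

    The improper integral is truncated at `upper`, which is reasonable only
    when exp(-s*t) * f(t) has decayed to a negligible size by then.
    """
    if steps % 2:
        steps += 1  # the composite Simpson rule needs an even number of panels
    h = upper / steps
    total = f(0.0) + math.exp(-s * upper) * f(upper)
    for k in range(1, steps):
        t = k * h
        weight = 4 if k % 2 else 2
        total += weight * math.exp(-s * t) * f(t)
    return total * h / 3


# f(t) = e^{at} with a = -1: the exact transform is 1/(s - a) for s > a
print(laplace_numeric(lambda t: math.exp(-t), 1.0), 1 / (1.0 + 1.0))

# f(t) = sin(2t): the exact transform is 2/(s^2 + 4) for s > 0
print(laplace_numeric(lambda t: math.sin(2 * t), 1.0), 2 / (1.0 + 4.0))
```

For a function such as $e^{t^2}$, which is not of exponential order, the truncated integral keeps growing as `upper` increases, so no value of s makes this approximation settle.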
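Section 6.2: Solution of Initial Value Problems
Theorem 6.2.1: Laplace Transform of the Derivative of a Function
Suppose that f is continuous and that f′ is piecewise continuous on any interval $0 \leq t \leq A$, and that there exist constants K, a, and M such that $|f(t)| \leq K e^{at}$ for $t \geq M$. Then $\mathcal{L}\{f'(t)\}$ exists for s > a and is given by:
$\mathcal{L}\{f'(t)\} = s\,\mathcal{L}\{f(t)\} - f(0)$.
Laplace Transform of the second derivative of a function:
$\mathcal{L}\{f''(t)\} = s^2\,\mathcal{L}\{f(t)\} - s f(0) - f'(0)$
Theorem 6.2.2: Laplace Transform of the nth Derivative of a Function
…. Then $\mathcal{L}\{f^{(n)}(t)\}$ exists for s > a and is given by:
$\mathcal{L}\{f^{(n)}(t)\} = s^n\,\mathcal{L}\{f(t)\} - s^{n-1} f(0) - \cdots - s f^{(n-2)}(0) - f^{(n-1)}(0)$.
To solve an initial value problem with a Laplace transform:
- Transform the differential equation.
- Solve the resulting algebraic equation for $\mathcal{L}\{f(t)\}$.
- Look up each term in a table of Laplace transforms (page 317 or the table below); the actual solution is the sum of the inverse transforms of the terms of $\mathcal{L}\{f(t)\}$.
- Note that some algebra will most likely need to be done to get the transform to look like sums of the transforms in the table below.

Laplace Transform Table, with $f(t) = \mathcal{L}^{-1}\{F(s)\}$ and $F(s) = \mathcal{L}\{f(t)\}$:
- $f(t) = 1$; $F(s) = \dfrac{1}{s}$, s > 0
- $f(t) = e^{at}$; $F(s) = \dfrac{1}{s - a}$, s > a
- $f(t) = t^n$, n = positive integer; $F(s) = \dfrac{n!}{s^{n+1}}$, s > 0
- $f(t) = t^p$, p > -1; $F(s) = \dfrac{\Gamma(p+1)}{s^{p+1}}$, s > 0
- $f(t) = \sin at$; $F(s) = \dfrac{a}{s^2 + a^2}$, s > 0
- $f(t) = \cos at$; $F(s) = \dfrac{s}{s^2 + a^2}$, s > 0
- $f(t) = \sinh at$; $F(s) = \dfrac{a}{s^2 - a^2}$, s > |a|
- $f(t) = \cosh at$; $F(s) = \dfrac{s}{s^2 - a^2}$, s > |a|
- $f(t) = e^{at}\sin bt$; $F(s) = \dfrac{b}{(s - a)^2 + b^2}$, s > a
- $f(t) = e^{at}\cos bt$; $F(s) = \dfrac{s - a}{(s - a)^2 + b^2}$, s > a
- $f(t) = t^n e^{at}$, n = positive integer; $F(s) = \dfrac{n!}{(s - a)^{n+1}}$, s > a
- $f(t) = u_c(t)$; $F(s) = \dfrac{e^{-cs}}{s}$, s > 0
- $u_c(t)\,f(t - c)$; transform $e^{-cs} F(s)$
- $e^{ct} f(t)$; transform $F(s - c)$
- $f(ct)$; transform $\dfrac{1}{c} F\left(\dfrac{s}{c}\right)$, c > 0
- $\int_0^t f(t - \tau)\,g(\tau)\,d\tau$; transform $F(s)\,G(s)$
- $\delta(t - c)$; transform $e^{-cs}$
- $f^{(n)}(t)$; transform $s^n F(s) - s^{n-1} f(0) - \cdots - f^{(n-1)}(0)$
- $(-t)^n f(t)$; transform $F^{(n)}(s)$

Section 6.3: Step Functions
Definition of the unit step function (or Heaviside function):
$u_c(t) = \begin{cases} 0, & t < c, \\ 1, & t \geq c, \end{cases} \qquad c \geq 0$
The Laplace transform of $u_c(t)$ is $\dfrac{e^{-cs}}{s}$, s > 0.
Theorem 6.3.1: Laplace transform of a horizontally translated function.
If $F(s) = \mathcal{L}\{f(t)\}$ exists for $s > a \geq 0$, and if c is a positive constant, then $\mathcal{L}\{u_c(t)\,f(t - c)\} = e^{-cs}\,\mathcal{L}\{f(t)\} = e^{-cs} F(s)$, s > a.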
Conversely, if $f(t) = \mathcal{L}^{-1}\{F(s)\}$, then $u_c(t)\,f(t - c) = \mathcal{L}^{-1}\{e^{-cs} F(s)\}$.
Theorem 6.3.2: Inverse Laplace transform of a horizontally translated transform.
If $F(s) = \mathcal{L}\{f(t)\}$ exists for $s > a \geq 0$, and if c is a constant, then $\mathcal{L}\{e^{ct} f(t)\} = F(s - c)$, s > a + c.
Conversely, if $f(t) = \mathcal{L}^{-1}\{F(s)\}$, then $e^{ct} f(t) = \mathcal{L}^{-1}\{F(s - c)\}$.

Section 6.4: Differential Equations with Discontinuous Forcing Functions
Pertinent relations from section 6.3:
- $\mathcal{L}\{u_c(t)\} = \dfrac{e^{-cs}}{s}$
- $\mathcal{L}\{u_c(t)\,f(t - c)\} = e^{-cs}\,\mathcal{L}\{f(t)\} = e^{-cs} F(s)$
- $u_c(t)\,f(t - c) = \mathcal{L}^{-1}\{e^{-cs} F(s)\}$
- $\mathcal{L}\{e^{ct} f(t)\} = F(s - c)$
- $e^{ct} f(t) = \mathcal{L}^{-1}\{F(s - c)\}$

Section 6.5: Impulse Functions
Recall from Physics and Calc 2 applications that an impulse is the time integral of a force, which results in a change in momentum:
$F = ma = \dfrac{dp}{dt} \;\Rightarrow\; \int_{p_i}^{p_f} dp = \int_{t_i}^{t_f} F(t)\,dt \;\Rightarrow\; \Delta p = p_f - p_i = \int_{t_i}^{t_f} F(t)\,dt$
In this section, we will examine "the" impulse function, which models a force of a very large magnitude that acts over a very short time.
Let $d_\tau(t) = \begin{cases} \dfrac{1}{2\tau}, & -\tau < t < \tau, \\ 0, & t \leq -\tau \text{ or } t \geq \tau. \end{cases}$ Note: $\lim_{\tau\to 0} d_\tau(t) = 0$ for $t \neq 0$.
Then the impulse imparted by this force is:
$I(\tau) = \int_{-\infty}^{\infty} d_\tau(t)\,dt$, and $\lim_{\tau\to 0} I(\tau) = \lim_{\tau\to 0} \int_{-\tau}^{\tau} \dfrac{1}{2\tau}\,dt = \lim_{\tau\to 0} \dfrac{1}{2\tau}(2\tau) = 1$
Define the Dirac delta function (or an idealized unit impulse function) as:
$\delta(t) = 0$ for $t \neq 0$, and $\int_{-\infty}^{\infty} \delta(t)\,dt = 1$
Note: The Dirac delta function can be shifted, which results in a description of an impulse occurring at a later time:
$\delta(t - t_0) = 0$ for $t \neq t_0$, and $\int_{-\infty}^{\infty} \delta(t - t_0)\,dt = 1$
The Laplace transform of the Dirac delta function can be derived by looking once again at the limit of $d_\tau(t)$:
$\mathcal{L}\{\delta(t - t_0)\} = e^{-s t_0}$
Note: $\int_{-\infty}^{\infty} \delta(t - t_0)\,f(t)\,dt = f(t_0)$

Section 6.6: The Convolution Integral
Theorem 6.6.1: The Convolution Theorem
If $F(s) = \mathcal{L}\{f(t)\}$ and $G(s) = \mathcal{L}\{g(t)\}$ both exist for $s > a \geq 0$, then $H(s) = F(s)\,G(s) = \mathcal{L}\{h(t)\}$, s > a, where
$h(t) = \int_0^t f(t - \tau)\,g(\tau)\,d\tau = \int_0^t f(\tau)\,g(t - \tau)\,d\tau$.
The function h is known as the convolution of f and g. The two integrals above are known as convolution integrals.

Section 7.1: Introduction to Systems of First Order Linear Equations
A second-order or higher-order differential equation can be converted to a system of first-order equations by letting $x_1 = u$, $x_2 = u'$, etc.
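To illustrate the conversion to a first-order system, the sketch below takes $u'' + u = 0$ (an example chosen for this illustration), sets $x_1 = u$ and $x_2 = u'$ so that $x_1' = x_2$ and $x_2' = -x_1$, and advances the system with a classical fourth-order Runge-Kutta step. The Runge-Kutta method is used here only as a convenient way to check the system numerically; it is not a method from this review sheet.

```python
import math


def rhs(t, x):
    """First-order system equivalent to u'' + u = 0, with x = [u, u']."""
    x1, x2 = x
    return [x2, -x1]


def rk4_step(f, t, x, h):
    """One classical Runge-Kutta step for the system x' = f(t, x)."""
    k1 = f(t, x)
    k2 = f(t + h / 2, [xi + h / 2 * ki for xi, ki in zip(x, k1)])
    k3 = f(t + h / 2, [xi + h / 2 * ki for xi, ki in zip(x, k2)])
    k4 = f(t + h, [xi + h * ki for xi, ki in zip(x, k3)])
    return [xi + h / 6 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]


def solve(f, x0, t_end, n):
    """Integrate from t = 0 to t_end in n equal steps; return the final state."""
    h = t_end / n
    t, x = 0.0, list(x0)
    for _ in range(n):
        x = rk4_step(f, t, x, h)
        t += h
    return x


# Initial conditions u(0) = 1, u'(0) = 0, so u = cos t and u' = -sin t
u, du = solve(rhs, [1.0, 0.0], 1.0, 100)
print(u, math.cos(1.0))
print(du, -math.sin(1.0))
```

The same `solve` routine works for any system written in the form $x' = F(t, x)$, which is the main practical reason for rewriting higher-order equations this way.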
General Notes:
In this chapter, we will examine systems of first-order differential equations:
$x_1' = F_1(t, x_1, x_2, \ldots, x_n)$
$x_2' = F_2(t, x_1, x_2, \ldots, x_n)$
$\vdots$
$x_n' = F_n(t, x_1, x_2, \ldots, x_n)$
A solution of this system on an interval $I: \alpha < t < \beta$ is a set of n functions
$x_1 = \phi_1(t),\ x_2 = \phi_2(t),\ \ldots,\ x_n = \phi_n(t)$
that are differentiable in I and that satisfy the above system of equations. An IVP is formed when n initial conditions are stated:
$x_1(t_0) = x_1^0,\ x_2(t_0) = x_2^0,\ \ldots,\ x_n(t_0) = x_n^0$

Theorem 7.1.1 Existence and Uniqueness of a Solution to a System of First-Order Equations
Let each of the functions $F_1, \ldots, F_n$ and the partial derivatives $\partial F_1/\partial x_1, \ldots, \partial F_1/\partial x_n, \ldots, \partial F_n/\partial x_1, \ldots, \partial F_n/\partial x_n$ be continuous in an open region R of the $t x_1 x_2 \ldots x_n$ space defined by $\alpha < t < \beta$, $\alpha_1 < x_1 < \beta_1$, $\alpha_2 < x_2 < \beta_2$, …, $\alpha_n < x_n < \beta_n$, and let the point $(t_0, x_1^0, x_2^0, \ldots, x_n^0)$ be in R. Then there is an interval $|t - t_0| < h$ in which there exists a unique solution $x_1 = \phi_1(t), \ldots, x_n = \phi_n(t)$ of the system of differential equations that also satisfies the initial conditions.

In this chapter, the discussion will be restricted to systems where the functions $F_1, \ldots, F_n$ are linear functions of $x_1, \ldots, x_n$. Thus, the most general system we will examine is:
$x_1' = p_{11}(t)\,x_1 + \cdots + p_{1n}(t)\,x_n + g_1(t)$
$x_2' = p_{21}(t)\,x_1 + \cdots + p_{2n}(t)\,x_n + g_2(t)$
$\vdots$
$x_n' = p_{n1}(t)\,x_1 + \cdots + p_{nn}(t)\,x_n + g_n(t)$
If all the $g_1(t), \ldots, g_n(t)$ are zero, then the system is homogeneous. Otherwise, it is nonhomogeneous.

Theorem 7.1.2 Existence and Uniqueness of a Solution to a System of Linear First-Order Equations
If each of the functions $p_{11}, p_{12}, \ldots, p_{nn}, g_1, \ldots, g_n$ is continuous on an open interval $I: \alpha < t < \beta$, then there exists a unique solution $x_1 = \phi_1(t), \ldots, x_n = \phi_n(t)$ of the system that also satisfies the initial conditions, where $t_0$ is any point in I. Moreover, the solution exists throughout I.

Section 7.2: Review of Matrices
A matrix is a rectangular array of numbers (called elements) arranged in m rows and n columns:
$A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix}$
$a_{ij}$ is used to denote the element in row i and column j.
$(a_{ij})$ is used to denote the matrix whose generic element is $a_{ij}$.
The transpose of a matrix is a new matrix that is formed by interchanging the rows and columns of a given matrix. The transpose is denoted with a superscript T:
if $A = \begin{pmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{pmatrix}$, then $A^T = \begin{pmatrix} 1 & 4 & 7 \\ 2 & 5 & 8 \\ 3 & 6 & 9 \end{pmatrix}$; if $A = (a_{ij})$, then $A^T = (a_{ji})$.
The conjugate of a matrix is a new matrix that is formed by taking the conjugate of every element of a given matrix. The conjugate is denoted with a bar over the matrix name:
if $A = \begin{pmatrix} 1+i & 2-3i \\ 4+5i & 6-7i \end{pmatrix}$, then $\bar{A} = \begin{pmatrix} 1-i & 2+3i \\ 4-5i & 6+7i \end{pmatrix}$; if $A = (a_{ij})$, then $\bar{A} = (\bar{a}_{ij})$.
The adjoint of a matrix is the transpose of the conjugate of a given matrix. The adjoint is denoted with a superscript asterisk:
if $A = \begin{pmatrix} 1+i & 2-3i \\ 4+5i & 6-7i \end{pmatrix}$, then $A^* = \begin{pmatrix} 1-i & 4-5i \\ 2+3i & 6+7i \end{pmatrix}$.

Properties of Matrices
1. Equality: Two matrices A and B are equal only if all corresponding elements are equal; i.e., $a_{ij} = b_{ij}$ for all i and j.
2. Zero: The matrix with zero for every element is denoted as 0.
3. Addition: The sum of two m × n matrices A and B is computed by adding corresponding elements:
   $A + B = (a_{ij}) + (b_{ij}) = (a_{ij} + b_{ij})$
   $\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} + \begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix} = \begin{pmatrix} 6 & 8 \\ 10 & 12 \end{pmatrix}$
   a. Matrix addition is commutative and associative: $A + B = B + A$; $A + (B + C) = (A + B) + C$
4. Multiplication by a number: The product of a complex number and a matrix is computed by multiplying every element by the number:
   $\alpha A = \alpha(a_{ij}) = (\alpha a_{ij})$
   $(2 + i)\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} = \begin{pmatrix} 2+i & 4+2i \\ 6+3i & 8+4i \end{pmatrix}$
   a. Multiplication by a constant obeys the distributive laws $\alpha(A + B) = \alpha A + \alpha B$; $(\alpha + \beta)A = \alpha A + \beta A$
   b. The negative of a matrix is negative one times the matrix: $-A = (-1)A$
5. Subtraction: The difference of two m × n matrices A and B is computed by subtracting corresponding elements:
   $A - B = (a_{ij}) - (b_{ij}) = (a_{ij} - b_{ij})$
   $\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} - \begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix} = \begin{pmatrix} -4 & -4 \\ -4 & -4 \end{pmatrix} = -4\begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}$.
   Note: the last step is not required, but it illustrates a simplifying technique that is sometimes used.
6. Multiplication of matrices: Matrix multiplication is only defined when the number of columns in the first factor equals the number of rows in the second factor.
   a.
The product of an m × p and a p × n matrix is an m × n matrix.
   b. If $AB = C$, then element $c_{ij}$ is computed by taking the sum of the products of corresponding elements from row i in matrix A and column j of matrix B: $c_{ij} = \sum_{k=1}^{p} a_{ik} b_{kj}$
   c. Matrix multiplication is associative: $(AB)C = A(BC)$
   d. Matrix multiplication is distributive: $A(B + C) = AB + AC$
   e. Matrix multiplication is usually NOT commutative: $AB \neq BA$ (usually)
7. Multiplication of vectors:
   a. The dot product: $x^T y = \sum_{i=1}^{n} x_i y_i$
   b. Properties of the dot product: $x^T y = y^T x$; $x^T(y + z) = x^T y + x^T z$; $(\alpha x)^T y = \alpha(x^T y) = x^T(\alpha y)$
   c. The scalar (inner) product: $(x, y) = \sum_{i=1}^{n} x_i \bar{y}_i = x^T \bar{y}$
   d. The magnitude, or length, of a vector x can be represented with the inner product: $\|x\| = \sqrt{(x, x)}$.
   e. Note: Two vectors are orthogonal if $(x, y) = 0$; the (orthogonal) unit vectors are $i = \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix}$, $j = \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix}$, and $k = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix}$.
   f. Properties of the scalar product: $(x, y) = \overline{(y, x)}$; $(x, y + z) = (x, y) + (x, z)$; $(\alpha x, y) = \alpha(x, y)$; $(x, \alpha y) = \bar{\alpha}(x, y)$
8. Identity: The multiplicative identity for matrices, denoted by I, is the square matrix with one in every diagonal element and zero elsewhere. The diagonal runs from the top left to bottom right, only.
   $I = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$
   a. Multiplication by the identity matrix is commutative for square matrices: $AI = IA = A$.
9. Inverse: For some square matrices, there exists another unique matrix such that their product is the identity. Such matrices are said to be nonsingular, or invertible. Matrices that are not invertible are called noninvertible or singular. The "other unique matrix" is called the (multiplicative) inverse, and is denoted with a superscript -1: If A is invertible, then $AA^{-1} = A^{-1}A = I$.
   a. The determinant is a quantity that can be computed for any square matrix, and whose value determines if a matrix is singular or not. A zero determinant means the matrix is noninvertible. The determinant of a matrix A is denoted as: det(A) = |A|.
   b.
The determinant of any square matrix can be computed by taking the sum of the products of all the elements and their cofactors from any one row or column of the matrix.
   c. The cofactor $C_{ij}$ of a given element $a_{ij}$ is the product of the minor $M_{ij}$ of the element and an appropriate factor of -1: $C_{ij} = (-1)^{i+j} M_{ij}$.
   d. The minor $M_{ij}$ of an element $a_{ij}$ is the determinant of the matrix obtained by eliminating the ith row and jth column from the original matrix.
   e. Thus, a determinant can be computed using a chain of smaller determinants. It is useful to know the following formula for the last step in the chain:
      $\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$, or in index notation $\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11}a_{22} - a_{12}a_{21}$
   f. If $B = A^{-1}$, then $b_{ij} = \dfrac{C_{ji}}{\det A}$, where $(C_{ji})$ is the transpose of the cofactor matrix of A.
   g. This formula is extremely inefficient for computing the inverse. The preferred method for computing the inverse of a matrix A is to form the augmented matrix A | I, and then perform elementary row operations on the augmented matrix until A is transformed to the identity matrix. That will leave I transformed to $A^{-1}$. This process is called row reduction or Gaussian elimination.
   h. The three elementary row operations are:
      i. Interchange any two rows.
      ii. Multiply any row by a nonzero number.
      iii. Add any multiple of one row to another row.
10. Matrix Functions: Are matrices with functions for the elements. They can be integrated and differentiated element-by-element…

Section 7.3: Linear Algebraic Equations; Linear Independence, Eigenvalues, Eigenvectors
Systems of Linear Algebraic Equations
A system of n linear equations in n variables can be written in matrix form as follows:
$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\ &\ \vdots \\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n \end{aligned} \quad\Longleftrightarrow\quad \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}\begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix} = \begin{pmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{pmatrix} \quad\Longleftrightarrow\quad Ax = B$
If B = 0, then the system is homogeneous; otherwise, it is nonhomogeneous.
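The row-reduction procedure built from the three elementary row operations can be sketched as follows for a square system Ax = B. This is a minimal illustration using exact fractions; the function name and the sample system are chosen for this sketch and are not taken from the review sheet.

```python
from fractions import Fraction


def solve_linear_system(A, b):
    """Solve A x = b by row reduction of the augmented matrix A | b.

    Raises ValueError when A is singular (no pivot can be found).
    """
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # Row operation i: interchange rows to bring a nonzero pivot into place
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Row operation ii: multiply the pivot row by a nonzero number
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        # Row operation iii: add a multiple of the pivot row to every other row
        for r in range(n):
            if r != col:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [row[n] for row in M]


# Illustrative 3 x 3 system with a nonsingular coefficient matrix
A = [[1, 1, 1], [1, 2, -3], [2, -2, 1]]
B = [-2, 12, -9]
print(solve_linear_system(A, B))
```

When no nonzero pivot exists in some column, the coefficient matrix is singular and the routine stops; deciding between "no solution" and "infinitely many solutions" would then require inspecting the remaining rows of the augmented matrix.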
If A is nonsingular (i.e., det(A) ≠ 0), then $A^{-1}$ exists, and the unique solution to the system is: $x = A^{-1}B$
Example 3 × 3 system (three planes meeting at a single point):
$x + y + z = -2$
$x + 2y - 3z = 12$
$2x - 2y + z = -9$
Solution: (-1, 2, -3)
If A is singular, then either there are no solutions, or there are an infinite number of solutions. Example cases for 3 × 3 systems are:
- Three parallel planes: No Solution
  $z = 0$, $z = 2$, $z = 4$
  Note: Inconsistent systems yield false equations (like 0 = 2 or 0 = 4) after trying to solve them.
- Planes intersect in three parallel lines: No Solution
  2 x y 2 x y 2
  Note: Inconsistent systems yield a false equation (like 0 = -6) after trying to solve them.
- All three planes intersect along same line: Infinite number of solutions
  1 x y z 2 x y z x 0.5
  Note: This type of dependent system yields one equation of 0 = 0 after row operations.
- All three planes are the same: Infinite number of solutions
  $x + y + z = 1$
  $2x + 2y + 2z = 2$
  $3x + 3y + 3z = 3$
  Note: This type of dependent system yields two equations of 0 = 0 after row operations.
If A is singular, then the homogeneous system Ax = 0 will have infinitely many solutions (in addition to the trivial solution).
If A is singular, then the nonhomogeneous system Ax = B will have infinitely many solutions when (B, y) = 0 for all vectors y satisfying A*y = 0 (recall A* is the adjoint of A). These solutions will always take the form $x = x^{(0)} + \xi$, where $x^{(0)}$ is a particular solution, and $\xi$ is the general form of the solution of the corresponding homogeneous system.
In practice, linear systems are solved by performing Gaussian elimination on the augmented matrix A | B.

Linear Independence
A set of k vectors $x^{(1)}, \ldots, x^{(k)}$ is said to be linearly dependent if there exist k complex numbers $c_1, \ldots, c_k$, not all zero, such that $c_1 x^{(1)} + \cdots + c_k x^{(k)} = 0$. This term is used because if it is true that not all the constants are zero, then one of the vectors depends on one or more of the other vectors: $c_i x^{(i)} = -\left(c_1 x^{(1)} + \cdots + c_{i-1} x^{(i-1)} + c_{i+1} x^{(i+1)} + \cdots + c_k x^{(k)}\right)$.
On the other hand, if the only values of $c_1, \ldots, c_k$ that make $c_1 x^{(1)} + \cdots + c_k x^{(k)} = 0$ true are $c_1 = c_2 = \cdots = c_k = 0$, then the vectors $x^{(1)}, \ldots, x^{(k)}$ are said to be linearly independent.
The test for linear dependence or independence can be represented with matrix arithmetic: Consider n vectors with n components. Let $x_{ij}$ be the ith component of vector $x^{(j)}$, and let $X = (x_{ij})$. Then:
$Xc = \begin{pmatrix} x_1^{(1)} & \cdots & x_1^{(n)} \\ \vdots & & \vdots \\ x_n^{(1)} & \cdots & x_n^{(n)} \end{pmatrix}\begin{pmatrix} c_1 \\ \vdots \\ c_n \end{pmatrix} = \begin{pmatrix} x_{11}c_1 + \cdots + x_{1n}c_n \\ \vdots \\ x_{n1}c_1 + \cdots + x_{nn}c_n \end{pmatrix} = 0$
If det(X) ≠ 0, then the only solution is c = 0, and thus the vectors are linearly independent.
If det(X) = 0, then there are nonzero values of $c_i$ that satisfy the equation, and thus the vectors are linearly dependent.
Note: Frequently, the columns of a matrix A are thought of as vectors. The columns of A are linearly independent iff det(A) ≠ 0. If C = AB, it happens to be true that det(C) = det(A)det(B). Thus, if the columns of the square matrices A and B are linearly independent, then so are the columns of C.

Eigenvalues and Eigenvectors
The equation Ax = y is a linear transformation that maps a given vector x onto a new vector y. Special vectors that map onto multiples of themselves are very important in many applications, because those vectors tend to correspond to "preferred modes" of behavior of the system that the matrix represents. Such vectors are called eigenvectors (German for "proper" vectors), and the multiple for a given eigenvector is called its eigenvalue.
To find eigenvalues and eigenvectors, we start with the definition:
$Ax = \lambda x$, which can be written as $(A - \lambda I)x = 0$, which has nonzero solutions iff $\det(A - \lambda I) = 0$.
The values of $\lambda$ that satisfy the above determinant equation are the eigenvalues, and those eigenvalues can then be plugged back into the defining equation $Ax = \lambda x$ to find the eigenvectors.
You will see that eigenvectors are only determined up to an arbitrary factor; choosing the factor is called normalizing the vector. The most common factor to choose is the one that results in the eigenvector having a length of 1.
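For a 2 × 2 matrix the whole procedure fits in a few lines: $\det(A - \lambda I) = \lambda^2 - (a + d)\lambda + (ad - bc) = 0$ gives the eigenvalues, and each eigenvector is read off from $(A - \lambda I)x = 0$ and then normalized to length 1. The sketch below is an illustration under those assumptions; the function name and the sample matrix are not from the review sheet.

```python
import cmath
import math


def eigen_2x2(A):
    """Return [(eigenvalue, unit eigenvector), ...] for a 2 x 2 matrix A."""
    (a, b), (c, d) = A
    if b == 0 and c == 0:
        # Diagonal matrix: the standard basis vectors are eigenvectors
        return [(a, [1.0, 0.0]), (d, [0.0, 1.0])]
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    pairs = []
    for lam in ((tr + disc) / 2, (tr - disc) / 2):
        if b != 0:
            v = [b, lam - a]   # satisfies (a - lam) x1 + b x2 = 0
        else:
            v = [lam - d, c]   # satisfies c x1 + (d - lam) x2 = 0
        norm = math.sqrt(sum(abs(x) ** 2 for x in v))
        pairs.append((lam, [x / norm for x in v]))
    return pairs


# Illustrative symmetric matrix with eigenvalues 3 and 1
for lam, v in eigen_2x2([[2.0, 1.0], [1.0, 2.0]]):
    print(lam, v)
```

Eigenvalues are computed with `cmath` so that a negative discriminant produces a complex conjugate pair instead of an error. For a repeated eigenvalue of a non-diagonal matrix the two returned vectors coincide, reflecting that only one independent eigenvector exists in that case.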
Notes: In these examples, you can see that finding the eigenvalues of an $n \times n$ matrix involved solving a polynomial equation of degree n, which means that there are always n eigenvalues for an $n \times n$ matrix (counted with multiplicity). Also in these two examples, all the eigenvalues were distinct. However, that is not always the case. If a given eigenvalue appears m times as a root of the polynomial equation, then that eigenvalue is said to have algebraic multiplicity m. Every eigenvalue will have q linearly independent eigenvectors, where $1 \leq q \leq m$. The number q is called the geometric multiplicity of the eigenvalue. Thus, if each eigenvalue of A is simple (has algebraic multiplicity m = 1), then each eigenvalue also has geometric multiplicity q = 1.

If $\lambda_1$ and $\lambda_2$ are distinct eigenvalues of a matrix A, then their corresponding eigenvectors are linearly independent. So, if all the eigenvalues of an $n \times n$ matrix are simple, then its n eigenvectors (one per eigenvalue) are linearly independent. However, if there are repeated eigenvalues, then there may be fewer than n linearly independent eigenvectors, which will pose complications later on when solving systems of differential equations (which we won’t have time to get to…).

Real symmetric matrices are a subset of Hermitian, or self-adjoint, matrices: $A^* = A$, i.e., $\bar{a}_{ji} = a_{ij}$. Hermitian matrices have the following properties:

All eigenvalues are real.
There are always n linearly independent eigenvectors, regardless of multiplicities.
Eigenvectors corresponding to distinct eigenvalues are orthogonal.
If an eigenvalue has algebraic multiplicity m, then it is always possible to choose m mutually orthogonal eigenvectors.

Section 7.4: Basic Theory of Systems of First Order Linear Equations

Section 7.5: Homogeneous Linear Systems with Constant Coefficients

Assume a solution of the form $x = \xi e^{rt}$ and plug it into the system $x' = Ax$. This gives $r\xi e^{rt} = A\xi e^{rt}$, i.e., $(A - rI)\xi = 0$, so find the eigenvalues of A for r and the corresponding eigenvectors for $\xi$.
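To see the Section 7.5 recipe in action for a system written as x' = Ax, the sketch below builds a solution from eigenpairs and checks numerically that it satisfies the system. The matrix `A`, its hand-computed eigenpairs, and the names `x`, `x_prime`, and `residual` are all assumptions made for this illustration, not something taken from the notes.

```python
import math

# Illustrative system x' = A x (matrix chosen for this sketch).
A = [[1.0, 1.0],
     [4.0, 1.0]]

# Eigenpairs found by hand from det(A - r*I) = (1 - r)^2 - 4 = 0:
#   r = 3 with xi = (1, 2),   r = -1 with xi = (1, -2)
EIGENPAIRS = [(3.0, (1.0, 2.0)), (-1.0, (1.0, -2.0))]

def x(t, coeffs):
    """Solution built as the sum of c * xi * exp(r*t) over the eigenpairs."""
    return [sum(c * xi[i] * math.exp(r * t)
                for c, (r, xi) in zip(coeffs, EIGENPAIRS))
            for i in range(2)]

def x_prime(t, coeffs):
    """Derivative of x: differentiating exp(r*t) multiplies each term by r."""
    return [sum(c * r * xi[i] * math.exp(r * t)
                for c, (r, xi) in zip(coeffs, EIGENPAIRS))
            for i in range(2)]

def residual(t, coeffs):
    """Largest component of |x'(t) - A x(t)|; near zero for a true solution."""
    xt, dxt = x(t, coeffs), x_prime(t, coeffs)
    Ax = [sum(A[i][j] * xt[j] for j in range(2)) for i in range(2)]
    return max(abs(dxt[i] - Ax[i]) for i in range(2))

print(residual(0.7, (2.0, -5.0)))
```

The coefficients passed in play the role of the arbitrary constants in the general solution; an initial condition would fix their values.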
Section 7.6: Complex Eigenvalues

If the eigenvalues come in complex conjugate pairs $r = \lambda \pm i\mu$, then the real-valued solutions break down into an exponential $e^{\lambda t}$ times vectors whose elements are sines and cosines of $\mu t$.
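A small numerical sketch can make this concrete. It takes one complex eigenpair, forms the complex solution, and compares its real and imaginary parts with an exponential times sines and cosines. The system, the hand-computed eigenpair, and the function names are assumptions chosen for this example rather than anything stated in the notes.

```python
import cmath
import math

# Illustrative system x' = A x with A = [[-1/2, 1], [-1, -1/2]],
# whose eigenvalues are -1/2 + i and -1/2 - i (matrix chosen for this sketch).
R = complex(-0.5, 1.0)   # eigenvalue r = -1/2 + i
XI = (1 + 0j, 1j)        # eigenvector (1, i), found by hand from (A - r*I)xi = 0

def real_solutions(t):
    """Real and imaginary parts of xi * exp(r*t); each is a real-valued solution."""
    z = [component * cmath.exp(R * t) for component in XI]
    u = [zk.real for zk in z]
    v = [zk.imag for zk in z]
    return u, v

def closed_form(t):
    """The same two solutions written as an exponential times sines and cosines."""
    decay = math.exp(-0.5 * t)
    u = [decay * math.cos(t), -decay * math.sin(t)]
    v = [decay * math.sin(t), decay * math.cos(t)]
    return u, v

print(real_solutions(1.0))
print(closed_form(1.0))
```

The conjugate eigenvalue gives the same pair of real solutions, so only one member of each conjugate pair needs to be worked through.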