Language ability


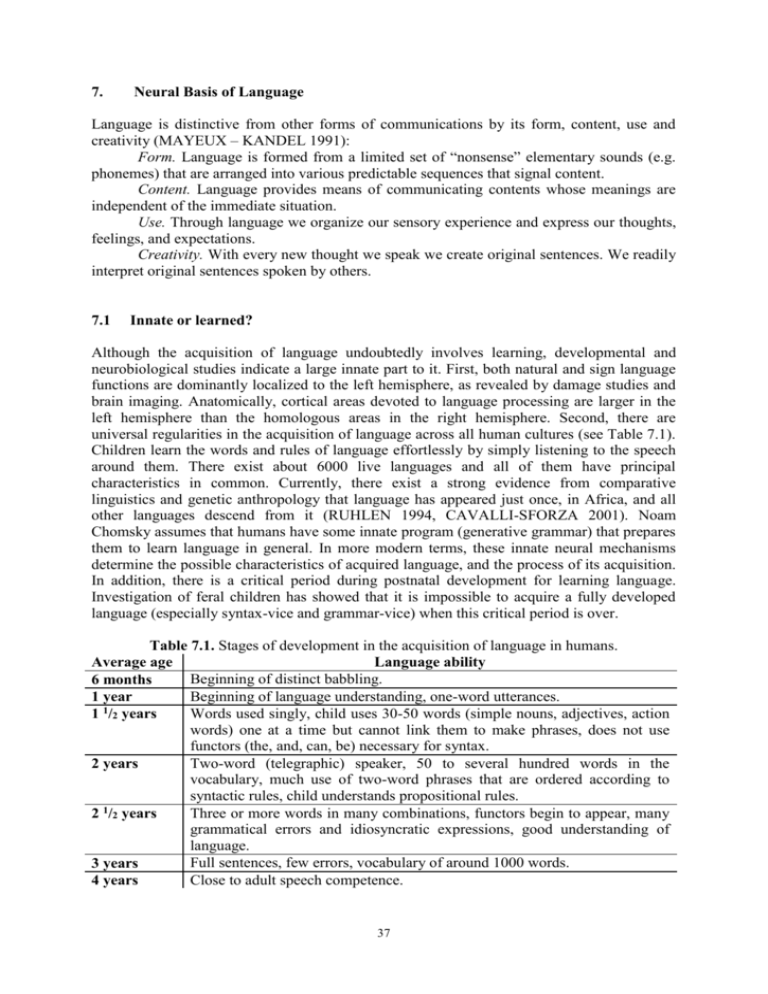

7. Neural Basis of Language

Language is distinguished from other forms of communication by its form, content, use and creativity (MAYEUX – KANDEL 1991):

Form. Language is built from a limited set of “nonsense” elementary sounds (phonemes) that are arranged into various predictable sequences that signal content.

Content. Language provides a means of communicating contents whose meanings are independent of the immediate situation.

Use. Through language we organize our sensory experience and express our thoughts, feelings, and expectations.

Creativity. With every new thought we express, we create original sentences, and we readily interpret original sentences spoken by others.

7.1 Innate or learned?

Although the acquisition of language undoubtedly involves learning, developmental and neurobiological studies indicate that a large part of it is innate. First, both spoken and signed language functions are predominantly localized to the left hemisphere, as revealed by lesion studies and brain imaging. Anatomically, cortical areas devoted to language processing are larger in the left hemisphere than the homologous areas in the right hemisphere. Second, there are universal regularities in the acquisition of language across all human cultures (see Table 7.1). Children learn the words and rules of language effortlessly, simply by listening to the speech around them. There are about 6000 living languages, and all of them share principal characteristics. There is evidence from comparative linguistics and genetic anthropology suggesting that language appeared just once, in Africa, and that all other languages descend from it (RUHLEN 1994, CAVALLI-SFORZA 2001). Noam Chomsky proposed that humans have an innate program (a universal grammar) that prepares them to learn language in general. In more modern terms, these innate neural mechanisms determine the possible characteristics of an acquired language and the process of its acquisition.
In addition, there is a critical period during postnatal development for learning language. Studies of feral children have shown that a fully developed language (especially with respect to syntax and grammar) cannot be acquired once this critical period is over.

Table 7.1. Stages of development in the acquisition of language in humans.

Average age    Language ability
6 months       Beginning of distinct babbling.
1 year         Beginning of language understanding; one-word utterances.
1½ years       Words used singly; the child uses 30-50 words (simple nouns, adjectives, action words) one at a time but cannot link them to make phrases; does not use the functors (the, and, can, be) necessary for syntax.
2 years        Two-word (telegraphic) speaker; 50 to several hundred words in the vocabulary; much use of two-word phrases ordered according to syntactic rules; the child understands propositional rules.
2½ years       Three or more words in many combinations; functors begin to appear; many grammatical errors and idiosyncratic expressions; good understanding of language.
3 years        Full sentences, few errors; vocabulary of around 1000 words.
4 years        Close to adult speech competence.

In summary, psychologists and linguists now believe that the mechanisms underlying the universal aspects of language acquisition are determined by structures in the human brain. Thus, the human brain is innately prepared to learn and use language, while the particular language spoken, together with its dialect and accent, is determined by the social environment. The questions now being debated are which language characteristics derive from neural structures specifically related to language acquisition and which derive from more general cognitive characteristics.

7.2 Language areas in the brain - aphasias

The basic model of language processing during the simple task of repeating a word that has been heard is the Wernicke-Geschwind model (MAYEUX – KANDEL 1991) (Fig. 7.1).
According to this model, this task involves the transfer of information from the inner ear through the auditory nucleus of the thalamus (the medial geniculate nucleus) to the primary auditory cortex (Brodmann’s area 41), then to the higher-order auditory cortex (area 42), before it is relayed to the angular gyrus (area 39). The angular gyrus is a specific region of the parietal-temporal-occipital association cortex, which is thought to be concerned with the association of incoming auditory, visual and tactile information. From here, the information is projected to Wernicke’s area (area 22) and then, by means of the arcuate fasciculus, to Broca’s area (areas 44, 45), where the perception of language is translated into the grammatical structure of a phrase and where the memory for word articulation is stored. This information about the sound pattern of the phrase is then relayed to the facial area of the motor cortex that controls articulation, so that the word can be spoken.

A similar pathway turned out to be involved in naming an object that has been visually recognized. This time, the input proceeds from the retina and the lateral geniculate nucleus (LGN) to the primary visual cortex, then to area 18, before it arrives at the angular gyrus, from where it is relayed by a particular component of the arcuate fasciculus directly to Broca’s area, bypassing Wernicke’s area.

Fig. 7.1. Lateral view of the exterior surface of the left hemisphere. Broca’s area (Brodmann’s areas 44/45) is adjacent to the premotor (area 6) and motor (area 4) cortical regions that control facial expression, articulation and phonation. Wernicke’s area (area 22) lies in the posterior superior temporal lobe, near the primary and higher-order auditory cortices (areas 41/42). Wernicke’s and Broca’s areas are joined by a fiber tract called the arcuate fasciculus (according to MAYEUX – KANDEL 1991).
However, recent cognitive and imaging studies have revealed that language processing involves a larger number of areas and a more complex set of interconnections than a simple serial connection from Wernicke’s area to Broca’s area. A more realistic scheme of the neural processing of language is shown in Fig. 7.2.

[Fig. 7.2 scheme. Inputs: speech, writing. Components: auditory processing (auditory cortex, areas 41/42); visual processing (visual cortex, areas 17, 18, 19); language comprehension (temporo-parietal cortex, areas 39, 22, 37, 40); semantic association (left anterior inferior frontal cortex); motor programming (left premotor area, areas 44, 45, 6); motor output (motor cortex, area 4). Outputs: speech, writing.]

Fig. 7.2. A recent model of the neural processing of language, built upon the original Wernicke-Geschwind model. The simplified scheme shows the relationships between various anatomical structures and functional components of language (according to MAYEUX – KANDEL 1991). Connections are reciprocal.

Aphasias are disturbances of language caused by insult (trauma, tumor, stroke) to specific regions of the brain, especially the cerebral cortex (see note 7.1). Lesions in different parts of the cerebral cortex cause selective language disturbances rather than an overall reduction in language ability. Furthermore, brain damage often also affects other cognitive and intellectual skills to some degree.

Note 7.1. Normal language depends not only on cortico-cortical but also on subcortical structures and connections. Lesions that do not affect the cerebral cortex, typically vascular lesions in the basal ganglia and/or thalamus, can also result in aphasia. The basal ganglia take part in motor output and the thalamus in perception.

Wernicke’s aphasia is characterized by a prominent deficit in language comprehension. The lesion primarily affects area 22 (Wernicke’s area) and often extends superiorly to areas 39 and 40 (the angular and supramarginal gyri of the inferior parietal lobule) and inferiorly to area 37.
Comprehension of both auditory and visual language input is severely impaired, accompanied by severe reading and writing disabilities. Speech is fluent and grammatical but lacks meaning: empty speech, neologisms and logorrhea occur. Patients are generally unaware of these speech failures, probably because their own language comprehension is impaired. Occasionally, a right visual field defect is encountered.

Conduction aphasia results from damage to the arcuate fasciculus. As in Wernicke’s aphasia, speech is fluent but contains paraphasic errors; however, comprehension is relatively preserved, and the most prominent deficit is impaired repetition of heard words. Many patients with conduction aphasia have some degree of impairment of voluntary movement.

Broca’s aphasia is characterized by a prominent deficit in language production. Lesions affect areas 44 and 45 (Broca’s area) and, in severe cases, also other prefrontal regions (areas 8, 9, 10, 46) and premotor regions (area 6). In the most severe cases, patients are completely mute. Usually, speech contains only key words: nouns are expressed in the singular and verbs in the infinitive, while articles, adjectives, adverbs and grammatical structure are missing altogether. Unlike patients with Wernicke’s aphasia, patients with Broca’s aphasia are generally aware of their errors. Reading and writing are also impaired, because they also involve motor components. Some deficits in syntax-related comprehension may be encountered. Right hemiparesis and loss of vision are almost always present in this type of aphasia.

Lesions to prefrontal cortical regions other than Broca’s area, or to parietal-temporal cortical regions other than Wernicke’s area, can result in various language deficits in production or comprehension, respectively. When certain portions of higher-order visual areas are damaged, specific disorders of reading and/or writing follow (dyslexias, alexias and agraphias).

Homologous language areas in the right hemisphere process affective components of language, such as musical intonation (prosody) and emotional gesturing.
Disturbances in the affective components of language associated with damage to the right hemisphere are called aprosodias. The organization of prosody in the right hemisphere seems to mirror the anatomical organization of the cognitive aspects of language in the left hemisphere. Thus, patients with posterior lesions do not comprehend the affective content of other people’s language, whereas lesions to the anterior portion of the right hemisphere lead to a flat tone of voice, whether the patient is happy or sad.

7.3 Evolution of language

In most individuals the left hemisphere is dominant for language, and the cortical speech area of the temporal lobe (the planum temporale) is larger in the left than in the right hemisphere. Since important gyri and sulci often leave impressions on the skull, it is possible to examine human fossils for such impressions. Marjorie LeMay searched fossil skulls for the morphological asymmetries associated with speech and found them not only in modern Homo sapiens but also in Neanderthal man (Homo sapiens neanderthalensis, dating back 30,000 to 50,000 years) and in Peking man (Homo erectus pekinensis, dating back 300,000 to 500,000 years). The left hemisphere is also dominant for the recognition of species-specific cries in Japanese macaque monkeys, and asymmetries similar to those of humans are present in the brains of modern-day great apes.

G. Rizzolatti et al. (1996) found that neurons in the ventral premotor cortex of macaque monkeys are active not only when the monkey performs or prepares (contemplates) its own actions, but also when it watches others, either monkeys or humans, perform a given action. Thus, these mirror neurons follow or "imitate" what others are doing, and they may form a neural basis for learning by imitation. In humans, the ventral premotor area includes Broca’s area (areas 44/45), a cortical area associated with the expressive and syntactic aspects of language.
Thus, the evolution of the ventral premotor area with its mirror neurons may have played an important role in the evolution of the neural basis for language. It is also intriguing that areas responsible for the contemplation of actions and areas processing language are located in the same place in the brain (thinking of thinking). Although the anatomical structures that are prerequisites for language may have arisen early, many linguists believe that language per se emerged rather late in human prehistory (about 100,000 years ago). There is evidence from comparative linguistics and genetic anthropology suggesting that language arose only once, in Africa, and that all other languages descend from a single original language (RUHLEN 1994, CAVALLI-SFORZA 2001). Modern Homo sapiens, which evolved in Africa, began to leave Africa some 100,000-80,000 years ago, taking this first language with them.

To gain insights into the evolution of language and into its neural basis, studies of the communication systems and language abilities of animals are still being carried out (SAVAGE-RUMBAUGH – BRAKKE 1996, HERMAN – MORREL-SAMUELS 1996). Communication systems seem to be most advanced in animals that have been shown to possess high cognitive and social intelligence, such as dolphins, whales, and great apes (bonobos, or pygmy chimpanzees, chimpanzees, gorillas and orangutans). Since these species lack the anatomical apparatus for human-like speech production, researchers have had to design sophisticated means of carrying out symbolic communication with them. For instance, a lexigram board is used for communication with bonobos (SAVAGE-RUMBAUGH – BRAKKE 1996). Each abstract symbol depicted on the plastic keyboard is associated with the spoken English word of the same meaning. The symbols (lexigrams) stand for nouns, adjectives and verbs, covering concrete objects and actions (like banana, chase) as well as abstract concepts (like good, bad, “monster”).
One of the most important findings of Savage-Rumbaugh and her colleagues is that bonobos do not need to be explicitly trained in human language, even though in the wild they have their own communication system (gestures, sounds, etc., which have not yet been deciphered). Simply by observing and listening to the caretakers’ input, as a child observes and listens to those around it, they began to use the symbols spontaneously and appropriately to communicate with humans (among themselves they communicate in "bonobo"). Thus, instead of limited training sessions, humans and bonobos live together: they play, go out for walks, cook, groom, etc. During these extensive playful periods, caretakers associate words in their fluent speech with lexigrams (by pressing them) and with content (determined by the situation). Exposure to lexigrams and spoken language must begin early in development, during the first year after birth; otherwise, language comprehension and production remain very limited. The first bonobos raised in this “human-bonobo” culture, Kanzi and Panbanisha, could understand thousands of English sentences by the age of about 10 years.

These and other studies with apes and dolphins showed that the comprehension abilities of these species are more highly developed than their productive competence (SAVAGE-RUMBAUGH – BRAKKE 1996, HERMAN – MORREL-SAMUELS 1996). Dolphins can understand and execute thousands of 3-4-word commands in which word order changes the meaning. The same is true for great apes, which have been shown to understand a variety of concepts and propositions, e.g. same-different, negation, quantifiers, spatial relationships, if-then relationships and causal relationships. The bonobo lexigram board contains about 250 symbols. Kanzi spontaneously began to combine them creatively into two-word combinations to convey novel meanings; researchers counted 723 such combinations (SAVAGE-RUMBAUGH – BRAKKE 1996).
Moreover, Kanzi’s (and Panbanisha’s) language productions convey messages about their internal states: what they want to do or how they are feeling (sad, happy). In summary, bonobos’ capacity for processing grammar remains limited. With respect to language production, they are at the level of a two-year-old child (see Table 7.1); with respect to language understanding, they can reach the level of a 2.5- to 3-year-old child. Thus, so far it seems that fully developed language is an exclusively human form of communication. However, we cannot be absolutely certain of this until the natural communication of dolphins, whales and perhaps other species (bonobos, chimpanzees, elephants, octopuses) has been completely deciphered and understood.

An important topic in the study of language is the relation of language and its evolution to other cognitive functions and their evolution (GÄRDENFORS 2001). Language allows us to communicate about what is not here and not now. Thus, more general cognitive abilities, such as the ability to create detached representations and to engage in anticipatory planning (planning for future needs, goals, events, etc.), may be cognitive prerequisites for language. Grammar is an enhanced formal means of organizing language. Besides these general cognitive abilities, since language is a means of communication, the development of advanced forms of cooperation may have gone hand in hand with the evolution of language. Without the aid of symbolic communication about detached contents, we would not be able to share visions of the future. We need it in order to convince each other that a future goal is worth striving for (GÄRDENFORS 2001).