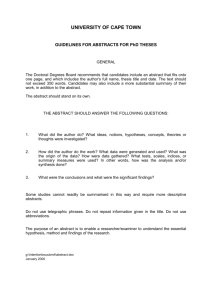

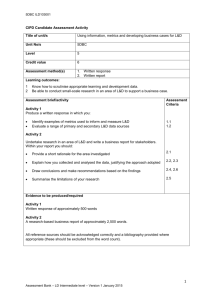

ResearchNotes - Cambridge English

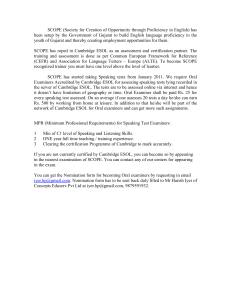
