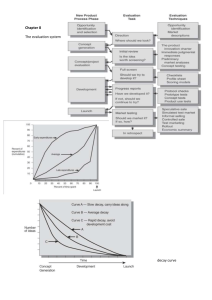

PRODUCTIVE TIME IN EDUCATION A review of the

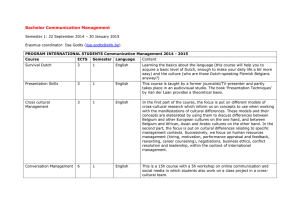
