An attentional system combining top-down and bottom

advertisement

An attentional system combining top-down and bottom-up influences

Babak Rasolzadeh, Mårten Björkman and Jan-Olof Eklundh

NADA/CVAP

S-100 44 Stockholm, Sweden

{babak2,celle,joe}@nada.kth.se

Abstract

Attention plays an important role in human processing

of sensory information as a mean of focusing resources towards the most important inputs at the moment. In vision it

has been argued attentional processes are crucial for dealing with the complexity of real world scenes. The problem

has often been posed in terms of visual search tasks. It has

been shown that both the use of prior task and context information - top-down influences - and favoring information

that stands out clearly in the visual field - bottom-up influences - can make such tasks more efficient. In generic scene

analysis one presumably has a combination of these influences. In this paper we describe a computational model

that performs such a combination in a principled way. The

system learns an optimal representation of the influences of

task and context and thereby constructs a biased saliency

map representing the top-down information. This map is

combined with bottom-up saliency maps in a process progressing over time as a function over the input. The system

is applied to search tasks in single images as well as in real

scenes, in the latter case using an active vision system capable of gaze shifting. The proposed model has the desired

qualities and goes beyond earlier proposed systems.

1

Introduction

When observing a visual environment humans tend to do

a subconscious ranking of the “interestingness” of the different components of that scene. This ranking depends on

the observer as well as the scene. What this means in a

more pragmatic sense is that our goals and desires interact

with the intrinsic properties of the environment so that the

ranking of components in the scene is done with respect to

how they relate to their surroundings (bottom-up) and to our

objectives (top-down) [10, 16]. In humans the attended region is then selected through dynamic modifications of cortical connectivity or through the establishment of specific

temporal patterns of activity, under both top-down (task-

dependent) and bottom-up (scene-dependent) control [2].

Current models of how this is done in the human visual system generally assume a bottom-up, fast and primitive mechanism that biases the observer towards selecting stimuli based on their saliency (most likely encoded in

terms of center-surround mechanisms) and a second slower,

top-down mechanism with variable selection criteria, which

directs the ’spotlight of attention’ under cognitive, volitional control [20]. In computer vision, attentive processing for scene analysis initially largely dealt with salience

based models, following [20] and the influential model of

Koch and Ullman [13]. However, several computational

approaches to selective attentive processing that combines

top-down and bottom-up influences have been presented in

recent years.

Koike and Saiki [14] propose that a stochastic WTA enables the saliency-based search model to cause the variation of the relative saliency to change search efficiency, due

to stochastic shifts of attention. Ramström and Christensen

[18] calculate feature and background statistics to be used in

a game theoretic WTA framework for detection of objects.

Choi et al. [4] suggest learning the desired modulations of

the saliency map (based on the Itti and Koch model [15])

for top-down tuning of attention, with the aid of an ARTnetwork. Navalpakkam and Itti [17] enhance the bottom-up

salience model to yield a simple, yet powerful architecture

to learn target objects from training images containing targets in diverse, complex backgrounds. Earlier versions of

their model did not learn object hierarchies and could not

generalize, but the current model can do that by combining

object classes into a more general super-class.

Lee et al. [12] showed that an Interactive Spiking Neural

Network can be used to bias the bottom-up processing towards a task (in their case in face detection), but their model

was limited to the influence of user provided top-down cues

and could not learn the influence of context. In Frintrop’s

VOCUS-model [7] there are two versions of the saliency

map; a top-down map and a bottom-up one. The bottomup map is similar to that of Itti and Koch’s, while the topdown map is a tuned version of the bottom-up one. The total

saliency map is a linear-combination of the two maps using

a fixed user provided weight. This makes the combination

rigid and non-flexible, which may result in loss of important

bottom-up information. Oliva et al. [1] show that top-down

information from visual context modulates the saliency of

image regions during the task of object detection. Their

model learns the relationship between context features and

the location of the target during past experience in order to

select interesting regions of the image.

In this paper we will define the top-down information as

consisting of two components: 1) task-dependent information which is usually volitional, and 2) contextual scenedependent information. We then propose a simple, but effective, Neural Network that learns the optimal bias of the

top-down saliency map, given these sources of information.

The most novel part of the work is a dynamic combination of the bottom-up and top-down saliency maps. Here

an information-measure (based on entropy measures) indicates the importance of each map and thus how the linearcombination should be altered over time. The combination

will vary over time and be governed by a differential equation that can be solved at least numerically for some special

cases. Together with a mechanism for Inhibition-of-Return,

this dynamic system manages to adjust itself to an balanced

behavior, where neither top-down nor bottom-up information is ever neglected.

by combining top-down and bottom-up influences in a principled way.

Our framework will be based on the notion of salience

maps, SMs. To define a Top-down SM, SMT D (t), t denoting time, we need a preferably simple search-system based

on a learner that is trained to find objects of interest in cluttered scenes. In parallel, we apply an unbiased version of

the same system to provide a Bottom-up SM, SMBU (t). In

the following we will develop a way of computing these two

kinds of maps and show that it is possible to define a dynamic active combination where neither one always wins,

i.e. the system never reaches a static equilibrium, although

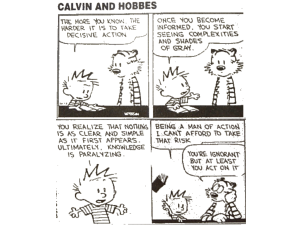

it sometimes reaches dynamic ones. The model (Fig. 1)

consists of four main parts:

• Biased Saliency Map with weights,

• Learning Top-down mechanism by weight association,

• Inhibition-of-Return and stochastic Winner-Take-All,

• Combination of SMBU (t) and SMT D (t), that

evolves over time t.

Our model applies to visual search and e.g. recognition in

general, but especially to cases when new visual information can be acquired. In fact we run on an active vision system capable of fixating on objects in a scene in real-time.

2.1

Figure 1. An attentional model that combines Bottom-up and Top-down saliency,

with Inhibition-of-Return and a stochastic

Winner-Take-All mechanism, with contextand task-dependent top-down weights.

Several computational models of visual attention have

been described in the literature. One of the best known systems is the Neuromorphic Vision Toolkit (NVT), a derivative

of the Koch-Ullman model [13] that was (and is) developed

by the group around Itti et al. [15, 11, 17]. We will use

a slightly modified version of this system for our computations of salience maps. Some limitations of the NVT have

been demonstrated, such as the non-robustness under translations, rotations and reflections, shown by Draper and Lionelle [6]. However, our ultimate aim is to develop a system

running on a real-time active vision system and we therefore

seek to achieve a fast computational model, trading off time

against precision. NVT is suitable in that respect.

2.1.1

2

The Model

It is known in human vision that even if a person is

in pure exploration mode (i.e. bottom-up mode) his/hers

own preferences affect the search scan-paths. On the other

hand, even if the search is highly volitional (top-down), the

bottom-up pop-out effect is not suppressible. This is called

attentional capture [19]. Our aim is to introduce a model

that displays such a behavior, i.e. does attentional capture,

Biased Saliency Maps

Weighting the SM

As mentioned above we base both Top-down and Bottomup salience on the same type of map. However, to obtain the

Top-down version we bias this saliency map by introducing

weights for each feature and conspicuity map. Thus our

approach largely follows Frintrop [7], but weighting is done

in a different way, which has important consequences, as

will be shown later.

Similarly to Itti’s original model, we use color, orientation and intensity features. However, the four broadly

tuned color channels R, G, B and Y, all calculated according to the NVT-model, are further weighted with the individual weights (ωR , ωG , ωB , ωY ). The orientation maps

(O0◦ , O45◦ , O90◦ , O135◦ ) are computed by Gabor-filters

and weighted with similar weights (ω0◦ , ω45◦ , ω90◦ , ω135◦ )

in our model. Following the original version, we then create scale-pyramids for all 9 maps (including the intensity

map I) and form conventional center-surround-differences

by across-scale-subtraction and apply Itti’s normalization

operator. This leads to the final conspicuity

maps for intensity I¯ , color C̄ and orientation Ō . As a final set

of weight parameters we introduce one weight for each of

these maps, (ωI , ωC , ωO ). To summarize the calculations:

RG(c, s) = |(ωR R(c) − ωG G(c))

(ωR R(s) − ωG G(s))|

BY (c, s) = |(ωB B(c) − ωY Y (c))

(ωB B(s) − ωY Y (s))|

Oθ (c, s) = ωθ |Oθ (c)

C̄ =

MM

c

Oθ (s)|

N (RG(c, s)) − N (BY (c, s))

s

Ō =

X

MM

N(

θ

I¯ =

c

MM

c

N (Oθ (c, s)))

s

N (|I(c)

I(s)|)

s

SMT D = ωI I¯ + ωC C̄ + ωO Ō

L

Here denotes the across-scale-subtraction,

the acrossscale-summation. The center-scales are c ∈ {2, 3, 4} and

the surround-scales s = c+δ, where δ ∈ {3, 4} as proposed

by Itti and Koch. We call the final modulated saliency map

the Top-down map, SMT D . The Bottom-up map, SMBU

can be regarded as the same map with all weights being 1.

As pointed out by Frintrop, the number of introduced

weights in some sense represents the degrees of freedom

when choosing the “task” or the object/region to train on.

We have modified Frintorp’s scheme in the way feature

weights are partitioned. In the following section we will

see the benefit of this.

2.2

Weight-optimization and Contextual

vector

A relevant question to pose is: how much “control” do

we have over the Top-down map by changing the weights?

This, of course, depends on what sort of “control” we want

to have over the saliency map, which in turn depends on

what we mean by top-down information. As said before we

divide top-down information in two categories; i) task and

ii) context information. To tune and optimize the weightparameters of the SM for a certain task, we also have to

examine what kind of context-information would be important. For instance, the optimal weight-parameters for the

same task typically differ from one context to the other.

These two issues will be considered in this section.

2.2.1

Optimization method(s) for the ROI

First we need to formalize the optimization problem. For a

given Region Of Interest (ROI) characteristic for a particular object, we define a measure of how the Top-down map

differs from the optimum as:

eROI (ω̄) =

max (SM (ω̄)) − max ( SM (ω̄)|ROI )

max (SM (ω̄))

where ω̄ = (ωI , ωO , ωC , ωR , ωG , ωB , ωY , ω0◦ , ω45◦ , ω90◦ ,

ω135◦ ) is the weight vector. The optimization problem will

then be given by ω̄opt = arg min eROI (ω̄). ω̄opt maximizes peaks within the ROI and minimizes peaks outside

ROI. With this set of weights, we significantly increase

the probability of the winning point being within a desired

region. To summarize; given the task to find a certain (type)

of ROI we are able to find a good set of hypotheses by

calculating the Top-down map SMT D (ω̄opt ).

Let us take a closer look at the optimization problem.

It can be shown [?] that the sets

of

weights

(ωI , ωO , ωC ) , (ωR , ωG , ωB , ωY )

and

(ω0◦ , ω45◦ , ω90◦ , ω135◦ ) are mutually independent during

optimization and thus each set can be optimized separately,

unlike the distribution of weights proposed by Frintrop.

This in turn speeds up the numerical solution considerably.

Two different numerical methods were applied to the optimization problem: constrained non-linear programming

(CNP) and particle-swarm optimization (PSO). Although

PSO converges to the global optimum more often than CNP,

we chose to use CNP since it was much faster, despite that

it found a lot of local optima before finding any global ones.

2.2.2

Defining the Context

The scheme for optimizing the weights is in principle independent of context. However, the system must also include the correlation between the optimal weights and the

environmental top-down information, i.e. we have to know

both types of top-down information (context- and task dependent) in order to derive the set of optimal weights.

There is a large number of different definitions of context.

However, we only need to consider definitions relevant to

our particular choice of weights. A simple example is that

a large weight on the red color channel would be favorable when searching for a red ball on a green lawn, but the

same weighting would not be appropriate when searching

for the same ball in a red room! With this in mind a justified context definition would be in terms of histograms,

one histogram for each conspicuity map. We have, however, chosen a much simpler way of representing context,

namely by the total energy of each feature map, in our case

a 9-dimensional contextual vector, here denoted as ᾱ (one

dimension for intensity, four for colors and four for orientations).

2.3

Learning Context with a Neural Network

Now assume that we have the optimized weight vectors

and contextual vectors for a large set of examples with desired ROI:s. The goal of our system is to automatically correlate the context information with the choice of optimal

weight-parameters (for a certain type of ROI) without any

optimization. This can be achieved by using the given set

of optimized examples (consisting of several pairs {ω̄opt ,ᾱ}

for each type of ROI) as a training set. Of course, this requires that there is some interdependence between the two.

We will show that for each type of ROI/object (10 objects

in our tests) such a coupling can be found, if the training set

obeys certain criteria.

Since the training involves a pattern association task, a

method of choice is neural networks (NN). As always with

NNs the best suited structure of the net depends on the specific dynamics of the training and test domains. Therefore

when one finds the best fitted net-structure for the training

set there is immediately a constraint on the test set, i.e. we

cannot expect the net to perform well in new tasks where it

lacks training [8].

Our NNs use interpolative heteroassociation for learning, which in essence is a pairing of a set of input patterns

and a set of output patterns, where an interpolation is done

in lack of recall. For example, if ᾱ is a key pattern and ω̄

is a memorized pattern, the task is to make the network retrieve a pattern “similar” to ω̄ at the presentation of anything

similar to ω̄. The network was trained with LevenbergMarquardt’s algorithm and as activation function in the NN

we used an anti-symmetric function (hyperbolic tangent) in

order to speed up the training.

2.4

Stochastic WTA-network and IOR

As stated above we will consider the saliency maps as

2D-functions that evolve over time. To select the next point

of interest, we suggest a Winner-Take-All (WTA) network

approach similar to that of Koch & Ullman, but with a slight

modification. Since we want to avoid a purely deterministic behavior of the model, a stochastic feature is added.

We view the final saliency map as a representation of a 2D

probability density function, i.e. the SM-value at a particular pixel represents the log-probability of that point being

chosen as the next point of interest. Thus the additive nature

of the feature integration process corresponds to multiplications when viewed in terms of probabilities.

We use a top-down coarse-to-fine WTA selection

process, similar to that of Culhane & Tsotsos [5], but instead of simply selecting the dominating points in each

level of a saliency pyramid, we use random sampling with

saliency values as log-probabilities. Thus also low saliency

point might be chosen, even if its less likely. Furthermore,

the stochastical nature make the system less prune to getting

stuck at single high saliency points.

To prevent the system from getting stuck in certain regions also in a static environment, we have implemented an

additional Inhibition-of-Return (IOR) mechanism. We let

the SM-value of the winner point (and its neighbors) decay, until another point wins and the gaze is shifted. The

stronger and closer a point pi is to the winner point pwin ,

the faster its SM-value should decay. This leads to the difSM0

IOR

ferential equation: ∂SM

= − kpi −p

· SM |pi ,

∂t

pi

win k+1

that with the boundary condition SM (t = 0) = SM0 has

the solution

SM0 · t

SMIOR (t) = SM0 · exp −

.

kpi − pwin k + 1

Thus we have obtained a non-deterministic and nonstatic saliency calculation that can be biased by changing

the weight-parameters. Next we will show how the Topdown and Bottom-up saliency maps can be combined.

2.5

Top-down ft. Bottom-up

So far we have defined a Bottom-up map SMBU (t)

representing the unexpected feature-based information flow

and a Top-down map SMT D (t) representing the taskdependent contextual information. To obtain a mechanism

for visual attention we need to combine these into a single saliency map that helps us to determine where to “look”

next.

2.5.1

The E-measure

In order to do this we rank the “importance” of the saliency

maps, using a measure that indicates how much gain/value

there is in attending that single map at any particular moment. To do this we define an energy measure (E-measure)

following Hu et al, who introduced the Composite Saliency

Indicator (CSI) for similar purposes [9]. In their case, however, they applied the measure on each individual feature

map. We will use the same measure, but only on the Topdown and Bottom-up saliency maps.

Importance is computed as follows. First the SMs

are thresholded, using a threshold derived through entropy

analysis [21]. This results in a set of salient regions. Next

the spatial compactness, Sizeconvexhull , is computed from

the convex hull polygon {(x1 , y1 ); ...; (xK , yK )} of each

salient region. If there are multiple regions the compactness

of all regions is summed up. Using the saliency density

P

|SM (p)−SM (q)|

P

q∈θn (p)

Dsaliency =

p∈θ

|θn (p)|

|θ|

,

where θ is a the set of all salient points and θn (p) represents

the set of salient neighbors to point p, the E-measure is then

defined as

ECSI (SM ) =

Dsaliency

.

Sizeconvexhull

Accordingly, if a particular map has many salient points

located in a small area, that map might have a higher Evalue than one with even more salient points, but spread

over a larger area. This measure favors SMs that contain a

small number of very salient regions.

2.5.2

Combining SMBU and SMT D

We now have all the components needed to combine the two

saliency maps. We may use a regulator analogy to explain

how. Assume that the attentional system contains several

(parallel) processes and that a constant amount of processing power has to be distributed among these. In our case this

means that we want to divide the attentional power between

SMBU (t) and SMT D (t). Thus the final saliency map will

be a linear combination

SMf inal = k · SMBU + (1 − k) · SMT D .

Here the k-value varies between 0 and 1, depending on the

relative importance of the Top-down and Bottom-up maps,

according to the tempo-differential equation

k>0

1

EBU (t)

dk

k

0≤k≤1

= −c · k(t) + a ·

, k=

dt

ET D (t)

0

k<0

where Ex (t) = ECSI (SM x (t)). The two parameters c

and a, both greater than 0, can be viewed as the amount of

concentration (devotion on search-task) and the alertness

(susceptibility for bottom-up info) of the system. As far as

we know the equation above has no analytical solution and

we therefore solve it numerically between each attentional

shift.

The first term represents an integration of the second

one. This means that a saliency peak needs to be active

for a sufficient number of updates to be selected, making

the system less sensitive to spurious peaks. If the two energy measures are constant, k will finally reach an equilibrium at aEBU /cET D . In the end, SMBU and SMT D will

be weighted by aEBU and max(cET D − aEBU , 0) respectively. Thus the top-down saliency map will come into play,

as long as ET D is sufficiently larger than EBU . Since ET D

is larger than EBU in almost all situations when the object

of interest is visible in the scene, simply weighting SMT D

by ET D leads to a system dominated by the top-down map.

The dynamics of the system comes as a result of integrating the combination of saliencies with the Inhibitionof-Return mechanism from previous section. If a single

salient top-down peak is attended to, saliencies in the corresponding region will be suppressed, resulting in a lowered

ET D value and less emphasis on the top-down flow, making bottom-up information more likely to come into play.

However, the same energy measure will hardly be affected,

if there are many salient top-down peaks of similar strength.

Thus the system tends to visit each top-down candidate before attending to purely bottom-up ones. This, however, depends on the strength of each individual peak. Depending

on alertness, strong enough bottom-up peaks could just as

well be visited first.

3

Experimental Results

As described above the model we use consists of three

main modules:

• The optimization of top-down weights (off-line)

• The Neural Network which associates context and

weight (on-line)

• The dynamical combination of SMBU and SMT D

Correspondingly, the experiments below were divided to

show how these different modules affect the performance of

the model. We will present some results from these experiments and discuss their implications for our understanding

of the model. Also some experimental results on the overall performance are discussed. Fig. 2 shows the 10 objects

used in the experiments. Training of these object, with their

Figure 2. The set of objects used for experiments.

respective ROIs, was done in two different complementary

ways:

1) IMageDataBase-Training (IMDBT): six sets of 12 different images each, with all 10 objects visible in each image. The sets were taken with different backgrounds and

lighting conditions (dark/bright); resulting in 96 images,

each containing all 10 objects and annotated with the coordinates of the ROI for each object in every image.

2) Active Self-Training (AST): a real-time system with

stepping-motor controlled webcam equipped with a path

prediction algorithm (based on the tilt and pan of the camera) that keeps track of selected ROI (object) and can therefore change its view, while keeping the ROI in sight.

In both cases optimization was done for each ROI (object) and image resulting in a large database of {ROI, ω̄opt ,

ᾱ}. These databases were then separately used for training

the NNs.

3.1

Whenever one seeks to optimize, it is important to understand the broader perspective of the optimized system’s

performance. Here this implies that although one may reach

a global minimum (given the error function defined earlier), it does not necessarily mean that our Top-down map

is “perfect” (like it is in Fig. 3). In fact, the Top-down

map may not rank the best hypothesis the highest, in-spite

of eROI (ω̄) being at its global minimum for that specific

image and object. What this means is that for some objects min[eROI (ω̄opt )] 6= 0, or simply that our optimization

method failed to find a proper set of weights the Top-down

map at the desired location, see Fig. 4.

Figure 3. An example of successful optimization; the ROI is marked in the left image. Without optimization (unitary weights)

the saliency map is purely bottom-up (middle), but with an optimization that minimizes

eROI (ω̄) (in this case to 0) the optimal weightvector ω̄opt clearly ranks the ROI as the best

hypothesis of the Top-down map (right).

Figure 4. An example of poor optimization; although the optimization may reach a global

minimum for eROI (ω̄) (in this case >0) the optimal weight-vector ω̄opt doesn’t rank the ROI

as the best hypothesis of the Top-down map

(right).

Another observation worth mentioning is the fact that

there may be several global optima in weight space each

resulting in different Top-down maps. For example, even

if there exists many linear independent weight-vectors ω̄i

for which eROI (ω̄i ) = 0, the Top-down maps SMT D (ω̄i )

will in general be different from one another (with different

ECSI -measure).

3.2

Saccades

BU

N N25% (ᾱ)

N N50% (ᾱ)

N N100% (ᾱ)

N N25% (.)

N N50% (.)

N N100% (.)

Opt.

Optimization results

NN-training

When performing the pattern association (equivalent

with context-learning) on the Neural Network it is impor-

1

7.4%

16.6%

22.3%

43.9%

13.0%

9.9%

6.2%

78.1%

2

11.3%

15.1%

10.3%

11.4%

14.6%

13.4%

11.9%

3.1%

3

18.5%

24.9%

19.8%

13.7%

18.1%

11.2%

14.5%

10.5%

≥4

63.0%

43.4%

47.6%

31.0%

54.3%

65.5%

67.4%

8.3%

Table 1. Percentage of test images as a function of saccades required to find ROI using the different methods in the leftmost

column. The results were averaged over

the entire test set of objects(ROI:s). BU is

purely bottom-up search, N Ni (ᾱ) is top-down

search guided by a Neural Network (trained

on i% of the training data available) choosing

context-dependent weights, and N Ni (.) is the

same without any context information.

tant that the training data is “pure”. This means that only

training data that gives the best desired result should be included. Thus for both modes of training (IMDBT and AST)

only examples {ROI, ω̄opt , ᾱ} where eROI (ω̄opt ) = 0

were used. To examine the importance of our context information we created another set of NNs trained without any

input, i.e. simple pattern learning. For the NN-calculations

this simply leads to an averaging network over the training

set {ROI, ω̄opt }. Quantitative results of these experiments

are shown in Table 1. Results using optimized weights (last

row) in some sense represent the best performance possible, whereas searches using only the Bottom-up map perform the worst. One can also observe the effect of averaging (learning weights without context) over a large set; you

risk to always perform poor, whereas if the set is smaller

you may at least manage to perform well on the test samples that resemble some few training samples. Each NN

had the same structure, based on 13 hidden neurons, and

was trained using the same number of iterations. Since all

weights (11) can be affected by all context-components (9)

and since each weight can be increased, decreased or neither, a minimum number of 12 hidden units is necessary for

good learning.

3.3

SM-combination and interaction

The final set of experiments show the behavior of the

whole system, in particular its behavior as a function of a

and c. Although we did not define any desirable formal

behavior, we want to test the dynamics of control as described in Section 2.5.2. For this reason several sequences

consisting of 30 frames each were created in which the 10

objects were removed one-by-one, with the blue car as the

object being searched for. This scenario generally resulted

in an incremental raising of the E-measure of the two maps

during the sequence. For three different combinations of

alertness and concentration, the gaze shifts were registered

as well as the dynamic parameter values (EBU (t), ET D (t)

and k(t)), see Fig. 8. Figures 5-7 show images of each

attentional shift observed during the experiments.

Figure 7. An high alertness case: Frames from

the resulting sequence with a > c, corresponding to the arrows in the right graph of

Fig. 8.

Figure 5. A balanced case: Frames from the resulting sequence with a ≈ c, corresponding

to the arrows in the left graph of Fig. 8.

Figure 6. An high concentration case: Frames

from the resulting sequence with a < c, corresponding to the arrows in the middle graph

of Fig. 8.

When choosing a > c the system should favor the

Bottom-up map and thus attend to more bottom-up peaks

(BU), than top-down ones (TD). As seen to the right in Fig.

8, this is achieved thanks to a higher average of k(t) during

the sequence. Similarly, a < c favors the Top-down map

by keeping the average k(t) low, which thus results in TDpeaks dominating. In the case where a ≈ c, the k-value is

controlled entirely by the E-measures. The result is a more

balanced “competition” between TD- and BU-peaks.

These results show that the behavior of the system (regarding preference towards bottom-up or top-down information) can be biased by the choice of the alertness (a) and

concentration (c) parameters. However, they also demonstrate the fact that even if the system can be very much biased towards one map, there is still possibility for the other

map to affect the final saliency, due to the stochastic WTA

and the IOR mechanism.

4

Conclusions

The aim of this paper has been to describe a computational model for visual attention capable of balancing

bottom-up influences with information on context and task.

The balance is controlled by a single differential equation

in combination with a mechanism for Inhibition-of-Return

and stochastic Winner-Take-All. Unlike many previous examples of similar models, the balance is not fixed, but can

vary over time. Neural networks were used to learn the influence of context information on search tasks and it was

shown that even a simple context model might considerbly

improve the ablility to find objects of interest.

For future versions we intend to explore other models for

context and task association. We further hope to formalize

the behaviour of the system and feed this information back

into the network. We also intend to integrate the proposed

attentional model with an existing system for recognition

and figure-ground segmentation [3], and perform more experiments on robotic tasks, such as mobile manipulation.

To make the system more responsive to changes in the environment, the model needs to complemented with motion

and stereo cues.

5

Acknowledgements

We would like to thank the staff at CVAP for their time

and cooperation. We appreciate the advice of professor

Örjan Ekeberg at SANS (KTH) concerning the optimization problem and the neural networks used.

parameter values

2.5

BU TD

TD

ETD(t)

2

TD

BU

BU

EBU(t)

BU

TD

k(t)

TD

BU

1.5

1

0.5

0

0

5

10

15

20

25

30

35

time t

parameter values

2.5

ETD(t)

EBU(t)

2

TD

TD

k(t)

TD

BU

BU

1.5

1

0.5

0

0

5

10

15

20

25

30

35

time t

parameter values

3.5

BU

BU

BU

3

TD

ETD(t)

EBU(t)

TD

k(t)

2.5

BU

2

BU BU BU BU

1.5

1

0.5

0

0

5

10

15

20

25

30

35

time t

Figure 8. The dynamics of the combination of

saliency maps; a ≈ c (upper), a < c (middle),

a > c (lower), all three with IOR.

References

[1] M. S. C. A. Oliva, A. Torralba and J. M. Henderson. Topdown control of visual attention in object detection. Proc.

IEEE Int’l Conf. Image Processing, (I):253–256, September

2003.

[2] C. A. B. Olshausen and D. van Essen. A neurobiological

model of visual attention and invariant pattern recognition

based on dynamic routing of information. Journal of Neuroscience, (13):4700–4719, 1993.

[3] M. Björkman and J.-O. Eklundh. Foveated figure-ground

segmentation and its role in recognition. Proc. British Machine Vision Conf., pages 819–828, September 2005.

[4] S. Choi, S. Ban, and M. Lee. Biologically motivated visual attention system using bottom-up saliency map and topdown inhibition. Neural Information Processing-Letters and

Review, (2(1)), 2004.

[5] S. Culhane and J. Tsotsos. A prototype for data-driven visual

attention. Proc. Int’l Conf. on Pattern Recognition, A:36–39,

1992.

[6] B. Draper and A. Lionelle. Evaluation of selective attention under similarity transforms. Proc. Int’l Workshop on

Attention and Performance in Computer Vision, pages 31–

38, April 2003.

[7] S. Frintrop. VOCUS: A Visual Attention System for Object

Detection and Goal-directed Search. PhD thesis, University

of Bonn, July 2005.

[8] S. Haykin. Neural Networks: A Comprehensive Foundation.

Prentice Hall, Upper Saddle River, NJ, USA, 1994.

[9] Y. Hu, X. Xie, W.-Y. Ma, L.-T. Chia, and D. Rajan. Salient

region detection using weighted feature maps based on the

human visual attention model. IEEE Pacific-Rum Conf. on

Multimedia, 2004.

[10] L. Itti. Models of Bottom-Up and Top-Down Visual Attention. PhD thesis, California Institute of Technology, January

2000.

[11] L. Itti and C. Koch. Computational modeling of visual attention. Nature Reviews Neuroscience, (2(3)):194–203, Mars

2001.

[12] H. B. K. Lee and J. Feng. Selective attention for cueguided

search using a spiking neural network. Proc. of the Int’l

Workshop on Attention and Performance in Computer Vision, pages 55–62, 2003.

[13] C. Koch and S. Ullman. Shifts in selective visual attention:

Towards the underlying neural circuitry. Human Neurobiology, (4):219–227, 1985.

[14] T. Koike and J. Saiki. Stochastic guided search model for

search asymmetries in visual search tasks. Biologically Motivated Computer Vision, pages 408–417, 2002.

[15] C. K. L. Itti and E. Niebur. A model of saliency-based visual

attention for rapid scene analysis. IEEE Transactions on

Pattern Analysis and Machine Intelligence, (20(11)):1254–

1259, 1998.

[16] Z. Li. A saliency map in primary visual cortex. Trends in

Cognitive Sciences, (6(1)):9–16, July 2002.

[17] V. Navalpakkam and L. Itti. Sharing resources: Buy attention, get recognition. Proc. Int’l Workshop Attention and

Performance in Computer Vision, July 2003.

[18] O. Ramström and H. Christensen. Object detection using

background context. Proc. Int’l Conf. Pattern Recognition,

pages 45–48, 2004.

[19] J. Theeuwes. Stimulus-driven capture and attentional set:

Selective search for colour and visual abrupt onsets. Journal

of Experimental Psychology: Human Perception & Performance, (20):799–806,, 1994.

[20] A. Treisman and G. Gelade. A feature integration theory of

attention. Cognitive Psychology, (12):97–136, 1980.

[21] A. Wong and P. Sahoo. A gray-level threshold selection

method based on maximum entropy principle. IEEE Trans.

Systems, Man and Cybernetics, (19):866–871, 1989.