Basics of Hypothesis Testing

advertisement

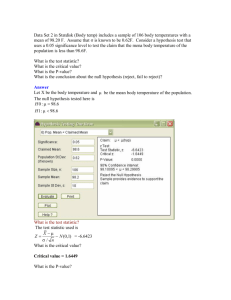

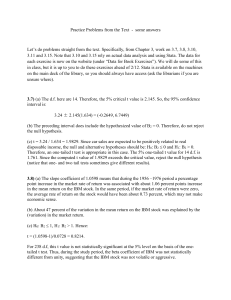

Basics of Hypothesis Testing1 Hypothesis testing is a handy way to carry out some statistical inference about the population based on sample information. First, we need a sample . . . we generally assume that sample has a normal distribution, with common mean and variance, in order to do the majority of our testing.2 Put mathematically, we’re hoping that X ~ N[µ,σ 2 ]. We then use our sample mean to make statements about the population mean µ. Depending on what type of statement we’re making, we use either a one-tailed or a twotailed test. Both tests require the calculation of a t statistic. The T Statistic The first piece of information we need is a T statistic for our data, which is given by T= x −µ s n where x is our sample mean, µ is the hypothesized population mean, s is our estimated standard deviation, and n is our sample size. If you were looking at a table of summary statistics, the denominator could be derived in two ways; either divide the estimated standard deviation by the root of the sample size, or just take use standard error statistic.3 We also need a critical t value, which is calculated independent of our data (minus the fact that degrees of freedom are related to n). It is determined by the distribution of a t statistic, and will be based off only our significance level and our degrees of freedom.4 Back in the day, the critical t value used to be found using tables in the back of stat books. For this class, we often use Excel to get the critical t. To do so, the formula is: =TINV(α, df) or =TINV((2*α, df)) where α is the significance level we’re going to use for our test. The formula on the left applies for 2-tailed tests, while the formula on the right applies for 1-tailed tests. Aside: Midterm 1 This handout is a brief review of material covered in lecture and the course notes. It is my production, and not that of Dr. Cameron. It should not be considered as a substitute for or modification of any course material. In any case of discrepancy, Dr. Cameron’s material supersedes any notes made here. 2 This assumption makes the t test the most powerful test on the population mean. 3 The standard error measures the variability in the mean of our sample. Since the mean is an average, it is always going to be less variable than any one particular random draw. The larger the number of draws taken from a normal distribution, the less variable the mean is going to be, as data will naturally begin to cluster around a particular value. That’s why we use the root of n to scale down our error. 4 In this case, our degrees of freedom is given by (n-1), because we have lost one degree of freedom when we estimated the variable s. Make sure you’re familiar with this formulation. Consider this information provided on one of Dr. Cameron’s past midterms: TINV(0.01,50) TINV(0.10,50) 2.67779 1.64787 You will be asked to do hypothesis testing, and you’ll need to know which applicable critical t to use. For example, if he asked you to do a two-tailed test with a significance level of 0.01 and 50 degrees of freedom, the first one (2.678) would be appropriate. If he asked you to do a one-tailed test with a significance level of 0.05, you’d want the second value (1.648). After we get our critical t value, we compare it to the T calculated using our data. If we’re doing a two-tailed test, we reject the null whenever the absolute value of our T is greater than the critical t. If we’re doing a one-tailed test, our rejection region depends on which test we’re using. We’ll go into more detail on that in a second. Significance Levels Our α is chosen by deciding how certain we want to be in our testing. We could be 100 percent certain about our inference if we were general enough, but then the inference would be pretty useless. Instead, we allow ourselves a little bit of an error cushion in exchange for being allowed to make some more specific statements. The amount of error we want to allow is our significance level. Say we choose a significance level of 0.05. That means that if our data tells us to reject our null hypothesis, 95% of the time we’d be correct to do so, but 5% of the time we will reject when we shouldn’t. A significance level of 0.01 means that 99% of the time we’d be correct to reject the null, while 1% of the time we’d be wrong to do so. Similar logic applies with any specified significance level. A little intuition using the two-tailed case Like any other random variable, the T value calculated from our data has a particular distribution. As the T value gets larger and larger in absolute value, there is a lower and lower probability of seeing such values if the null hypothesis is true. When we run a hypothesis test, we see if the T statistic falls toward the center or toward the tails. Whenever it falls too far out in the tails (too far being determined by our critical t statistic), we say there is a low probability that we would see that value if it our null were true, and reject the null. There two reasons that we could find we should reject our null. First, it could be that our null hypothesis is incorrect, which is supported by our sample data. Second, it could be that our null hypothesis is correct, but our sample data is way off base (i.e. we got a strange sample for one reason or another). When we use a significance level of 0.05, we’re setting it up so that 95% of the time, if our data tells us to reject the null, it is because in fact the null hypothesis is incorrect. That other 5% of the time, we’re rejecting when we shouldn’t, because our sample just happens to be strange and is leading us to the wrong conclusion about the population. The P Value P values are another way of deciding if we should or shouldn’t reject the null hypothesis. The formula in Excel is =TDIST(T, df, tails) where T is equal to the t statistic calculated using our data, df is again our degrees of freedom, and tails is telling Excel if we’re doing a 1 or 2-tailed test. The p value tells us what the probability of incorrectly rejecting the null hypothesis given a particular value for T. For example, a p value of 0.023 would mean that given our T stat, we will be incorrectly rejecting the null 2.3% of the time. A p value of 0.359 would mean that given our T, we would be wrong to reject the null 58.2% of the time. Whenever the p value is LESS than the significance level at which we’re testing, we reject the null. Say we were testing at a 5% significance level. Than the p value of 2.3% would mean that we reject our null (what with 2.3 < 5) whereas the 35.9% would mean we fail to reject (as 35.9 > 5). Two-tailed vs. One-Tailed Tests Told you we’d come back to this. There are two differences worth noting between twotailed and one-tailed tests. The first is what we consider to be our rejection region in the t distribution. For a two-tailed test, we reject if our T statistic is too extreme in either direction. In other words, we reject if the absolute value of our T is greater than the critical t. For a one-tailed test, our rejection region depends on our null hypothesis.5 • • If our null hypothesis is that µ is less than or equal to µ0, then the rejection region is in the upper tail (i.e. a very positive T) If our null hypothesis is that µ is greater than or equal to µ0, then the rejection region is in the lower tail (i.e. a very negative T) The other difference is how we formulate our question. For a two-tailed test, the null is always that µ is equal to some value, where the alternative is that µ is not. For a onetailed test, the null hypothesis is determined by what we are trying to test. As a general rule, we try to make our hypothesis to be tested the alternative (HA), because it is harder to reject the null than to accept. Said another way: 5 A good general overview of hypothesis testing, including null formulation and rejection regions, is on page 54 of the class reader. • • If the hypothesis to be tested is that µ is greater than µ0, then we make the null hypothesis that µ is less than or equal to µ0. If the hypothesis to be tested is that µ is less than µ0, then we make the null hypothesis µ is less than or equal to µ0.