How to compare distributions? or rather Has my sample been drawn

advertisement

How to compare distributions?

or rather

Has my sample been drawn from this distribution?

or even

Kolmogorov-Smirnov and the others...

Stéphane Paltani

ISDC/Observatoire de Genève

1/23

The Problem... (I)

Sample of observed values (random variable):

{Xi}, i=1,...,N

Expected distribution:

f(x), Xmin ≤ x ≤ Xmax

→ Is my {Xi},

i=1,...,N sample a probable outcome from a draw of N values

from a distribution f(X),

Xmin ≤ X≤ Xmax

?

2/23

Example... (I)

An X-ray detector collects photons at specific times:

{Xi}≡{ti}, i=1,...,N

Is the source constant ?

Expected distribution:

f t =

1

, tmin ≤ t ≤ tmax

3/23

t max −t min

The Problem... (II)

Sample of observed values (random variable):

{Xi}, i=1,...,N

Sample of observed values (random variable):

{Yj}, j=1,...,M

→ Are the {Xi},

i=1,...,N distributed the same way as the {Yj}, j=1,...,M

?

4/23

Example... (II)

We measure the amplitude of variability of different types of active galactic nuclei.

Seyfert 1 galaxies:

QSOs:

{σiS},

{σjQ},

{σkB},

i=1,...,N

j=1,...,M

k=1,...,P

BL Lacs:

Do these different types of objects have different variability properties ?

5/23

Distribution Cumulatives

For continuous distributions, we can easily compare visually how well two

distributions agree by building the cumulatives of these distributions. The cumulative of

a distribution is simply its integral:

x

F x=∫X

min

f y dy

The cumulative of a sample can be calculated in a similar way:

C { X } x=∑i H x− X i

(Heaviside function)

i

Different approaches can then be followed to obtain a quantitative assessment

6/23

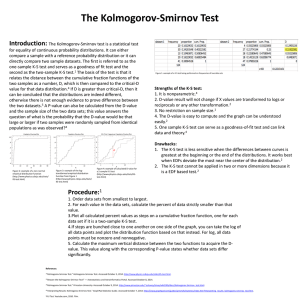

Statistique de Kolmogorov-Smirnov

The simplest one: We determine the maximum separation between the two

cumulatives :

D =

max ∣C X − F x− X min ∣

X min ≤ x≤ X max

i

7/23

Virtues of Kolmogorov-Smirnov (I)

If one compares two samples, their

variance must be added, and:

N e=

N⋅M

N M

It is possible to estimate the expected distribution of D in the case of a uniform

distribution:

∞

P D=2 ∑ −1

j=1

2

j−1 −2j

e

2

, =

N 0.12

0.11

D

N

P(λ>D) is a one-sided probability distribution, so the smaller D, the larger the

probability

8/23

Virtues of Kolmogorov-Smirnov (II)

D is preserved under any transformation x → y = ψ(x), where ψ(x) is an arbitrary

strictly monotonic function

→ P(λ>D) does

not depend on the shape of the underlying distributions !

9/23

Correct usage of Kolmogorov-Smirnov

●

●

●

Decide for a threshold α, for instance 5%

If P(λ>D)>α, then one cannot exclude that the sample has been drawn from the

given distribution

One can never be sure that the sample has been actually drawn from the given

distribution

DO NOT SAY:

The probability that the sample has been drawn from this distribution is X%

SAY:

If P(λ>D)>α:

We cannot exclude that the sample has been drawn from this distribution

If P(λ>D)<α:

The probability of such a high KS value to be obtained is only X%

10/23

Caveats (I)

No correlation in the sample is allowed: Sample must be in the Poisson regime

There is a general problem among non-frequentists (the Bayesians) with the notion of

null hypothesis: Such frequentist tests implicitly specify classes of alternative

hypotheses and exclude others...

Furthermore, one may reject the hypothesis if the model parameters are slightly wrong

11/23

Caveats (II)

In cases where the model has parameters, if one estimates them using the same

data,one cannot use KS statistics anymore!

→ Use Monte Carlo simulations

→ For Gaussian distribution, use Shapiro-Wilk normality test

12/23

Caveats (III)

80 photons/1000 added

in the tails

80 photons/1000 added

in the center

Sensitivity of the Kolmogorov-Smirnov tes is not constant between Xmin and Xmax

since the variance of C{Xi} is proportional to F(x-Xmin).(1-F(x-Xmin)), which

reaches a maximum for

F(x-Xmin) = 0.5

13/23

Non-uniformity Correction

Anderson-Darling's statistics:

∗

D =

∣C X − F x− X min ∣

max

i

X min ≤x≤ X max

F x− X min 1− F x− X min

Or:

∗∗

D =

∫

X min ≤ x≤ X max

∣C X − F x− X min ∣

i

F x− X min 1− F x− X min

dF

One can think of other ones...

However, none of them provides a simple (or even workable) expression for

P(λ>D*(*))...

14/23

Kuiper's Statistics

The simplest modification to KS is the best one!

−

V =D D =

And:

max

X min ≤x≤ X max

∞

C { X }− F x− X min X

i

P V =2 ∑ 4j −1e

j=1

2

2

2

2

−2j

, =

max

min

≤x≤ X max

F x− X min−C { X }

N 0.155

i

0.24

V

N

15/23

Kuiper on a Circle

60 photons/1000 added

in the tails

60 photons/1000 added

in the center

If the distribution is defined over a periodic domain, Kuiper's statistics does not depend

on the choice of the origin, contrarily to KS.

16/23

Application of Kuiper's statistics to

the search of periodic signals

Kuiper test

Rayleigh test

0

1

Standard test is Rayleigh test, which is nothing else than the Fourier power spectrum

for signals expressed in the form:

S t = ∑ t −t i

i

17/23

Interrupted Observations

0

“good time intervals”

1

Phase exposure map Expected “uniform” distribution

But X-ray observations are often interrupted because of various instrumental problems,

so the expected distribution is not uniform!

Kuiper test can take into account very naturally the non-uniformity of the phase

exposure map

18/23

Examples with simulated data

Continuous

case

Real GTIs

Real GTIs

with signal

19/23

Examples with real data

EX Hya

UW Pic

20/23

Ad nauseam: Kuiper for the fanatical... (I)

α,β :

21/23

Ad nauseam: Kuiper for the fanatical... (II)

Expected

Best

Asymptotic

equation,

instead of

analytical

expression

Tail probabilities

22/23

References

●

Any text book in statistics...

●

Press W. et al., Numerical Recipes in C,F777, ..., Sect. 14

●

Kuiper N.H., 1962, Proc. Koninklijke Nederlandse Akad. Wetenshappen A 63, 38

●

Stephens M.A., 1965, Biometrika 70, 11

●

Paltani S., 2004, A&A 420, 789

23/23