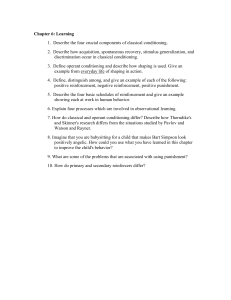

Schedules of Operant Conditioning Handout


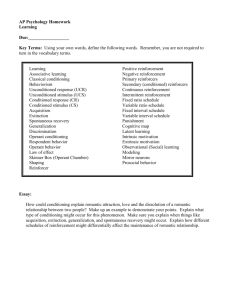

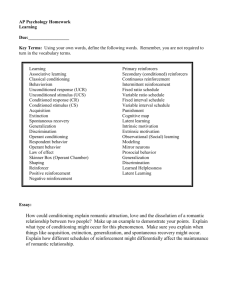

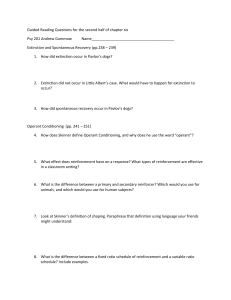

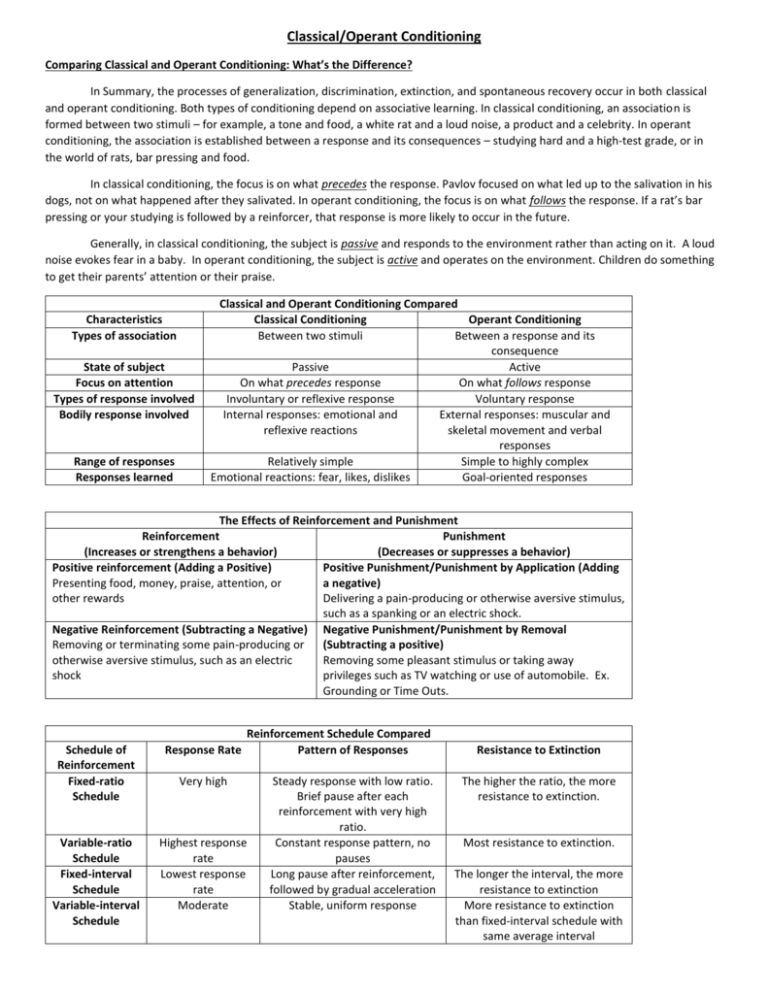

Classical/Operant Conditioning

Comparing Classical and Operant Conditioning: What's the Difference?

In summary, the processes of generalization, discrimination, extinction, and spontaneous recovery occur in both classical and operant conditioning. Both types of conditioning depend on associative learning. In classical conditioning, an association is formed between two stimuli, for example, a tone and food, a white rat and a loud noise, or a product and a celebrity. In operant conditioning, the association is established between a response and its consequences: studying hard and a high test grade, or, in the world of rats, bar pressing and food.

In classical conditioning, the focus is on what precedes the response. Pavlov focused on what led up to the salivation in his dogs, not on what happened after they salivated. In operant conditioning, the focus is on what follows the response. If a rat's bar pressing or your studying is followed by a reinforcer, that response is more likely to occur in the future.

Generally, in classical conditioning, the subject is passive and responds to the environment rather than acting on it: a loud noise evokes fear in a baby. In operant conditioning, the subject is active and operates on the environment: children do something to get their parents' attention or praise.
Classical and Operant Conditioning Compared

| Characteristic | Classical Conditioning | Operant Conditioning |
|---|---|---|
| Type of association | Between two stimuli | Between a response and its consequence |
| State of subject | Passive | Active |
| Focus of attention | On what precedes the response | On what follows the response |
| Type of response involved | Involuntary or reflexive response | Voluntary response |
| Bodily response involved | Internal responses: emotional and reflexive reactions | External responses: muscular and skeletal movement and verbal responses |
| Range of responses | Relatively simple | Simple to highly complex |
| Responses learned | Emotional reactions: fear, likes, dislikes | Goal-oriented responses |

The Effects of Reinforcement and Punishment

| Reinforcement (increases or strengthens a behavior) | Punishment (decreases or suppresses a behavior) |
|---|---|
| Positive reinforcement (adding a positive): presenting food, money, praise, attention, or other rewards | Positive punishment, or punishment by application (adding a negative): delivering a pain-producing or otherwise aversive stimulus, such as a spanking or an electric shock |
| Negative reinforcement (subtracting a negative): removing or terminating some pain-producing or otherwise aversive stimulus, such as an electric shock | Negative punishment, or punishment by removal (subtracting a positive): removing some pleasant stimulus or taking away privileges such as TV watching or use of an automobile, e.g. grounding or time-outs |

Reinforcement Schedules Compared

| Schedule of reinforcement | Response rate | Pattern of responses | Resistance to extinction |
|---|---|---|---|
| Fixed-ratio | Very high | Steady response with a low ratio; brief pause after each reinforcement with a very high ratio | The higher the ratio, the more resistance to extinction |
| Variable-ratio | Highest response rate | Constant response pattern, no pauses | Most resistance to extinction |
| Fixed-interval | Lowest response rate | Long pause after reinforcement, followed by gradual acceleration | The longer the interval, the more resistance to extinction |
| Variable-interval | Moderate | Stable, uniform response | More resistance to extinction than a fixed-interval schedule with the same average interval |

Schedules of Reinforcement

RATIOS = RESPONSES

- Fixed Ratio (FR)
  - Reinforcement occurs after a fixed number of responses.
  - Results in a burst-pause-burst-pause pattern.
  - Example: receiving a free coffee after every ten you buy at Jumping Java.
- Variable Ratio (VR)
  - Reinforcement occurs after an average number of responses. The number of responses required for reinforcement is unpredictable.
  - Results in high, steady rates of responding with almost no pauses.
  - Example: gambling. You must keep playing the slot machine if you want to win, and no one knows how many times you'll have to play before you're reinforced (win).

INTERVALS = TIME

- Fixed Interval (FI)
  - A reinforcer is delivered for the first response after a preset time interval has elapsed.
  - The number of responses tends to increase as the time for the next reinforcer draws near; a long pause then follows reinforcement.
  - Example: if the cookie dough package says to bake for 10 minutes, you don't start checking until around the 10-minute mark. The behavior of checking increases around the time of reinforcement (eating the baked cookies).
- Variable Interval (VI)
  - A reinforcer is delivered for the first response after an average time interval has elapsed. The interval is unpredictable.
  - The number of responses stays consistently steady because reinforcement could occur at any time.
  - Example: a teacher who wants his class to study their notes every night gives random pop quizzes. Students study each night because they don't know when the quiz is actually coming.

Ask Yourself:

- Can the animal speed up its reinforcement by doing the behavior? If YES: Ratio.
  - Does the number of times the animal does the behavior vary for reinforcement? Variable.
  - Does the animal do the behavior a set number of times for reinforcement? Fixed.
- Is the example dealing with the amount of time that elapses from when the animal does the behavior until it gets reinforcement? If YES: Interval. Reinforcement will NOT be sped up by doing the behavior more often.
  - Does the amount of time between the behavior and reinforcement vary? Variable.
  - Does the amount of time between the behavior and reinforcement stay the same? Fixed.
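The four schedules are, at bottom, rules for deciding which responses get reinforced, so they can be sketched in a few lines of Python. This is a minimal, illustrative sketch, not a model of real animal behavior: the class names, the 100-time-unit session, the perfectly steady response rates, and the uniform distributions used for the "average" ratios and intervals are all assumptions that do not come from the handout. It lets you try the first "Ask Yourself" question directly: does responding faster earn more reinforcers?

```python
import random


class FixedRatio:
    """FR-n: reinforce every n-th response (e.g. a free coffee after every 10 bought)."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, t):
        # t is unused: ratio schedules only count responses, not time.
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR-n: reinforce after an unpredictable number of responses averaging n.

    Assumption: each requirement is drawn uniformly from 1..2n-1 (mean n).
    """

    def __init__(self, n, rng):
        self.n, self.rng = n, rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        return self.rng.randint(1, 2 * self.n - 1)

    def respond(self, t):
        # t is unused here as well; only the response count matters.
        self.count += 1
        if self.count == self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """FI-i: reinforce the first response made at least i time units after the last reinforcer."""

    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval
            return True
        return False


class VariableInterval:
    """VI-i: like FI, but each wait is unpredictable with mean i.

    Assumption: each wait is drawn uniformly from [0, 2i].
    """

    def __init__(self, interval, rng):
        self.interval, self.rng = interval, rng
        self.available_at = self._draw()

    def _draw(self):
        return self.rng.uniform(0, 2 * self.interval)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self._draw()
            return True
        return False


def count_reinforcers(schedule, responses_per_unit, duration=100):
    """Respond at evenly spaced times for `duration` time units; count reinforced responses."""
    step = 1 / responses_per_unit
    n_responses = int(duration * responses_per_unit)
    return sum(schedule.respond(i * step) for i in range(1, n_responses + 1))


if __name__ == "__main__":
    makers = {
        "FR-10": lambda: FixedRatio(10),
        "VR-10": lambda: VariableRatio(10, random.Random(42)),
        "FI-10": lambda: FixedInterval(10),
        "VI-10": lambda: VariableInterval(10, random.Random(42)),
    }
    for name, make in makers.items():
        slow = count_reinforcers(make(), responses_per_unit=1)
        fast = count_reinforcers(make(), responses_per_unit=4)
        print(f"{name}: {slow} reinforcers at 1 response/unit, {fast} at 4 responses/unit")
```

The fixed schedules are deterministic here: FR-10 earns 10 reinforcers at 1 response per unit and 40 at 4, while FI-10 earns 10 either way, because the clock rather than the response count sets the pace. With the seeded random generators, quadrupling the response rate should multiply the VR reinforcers but change the VI reinforcers only slightly. Note that the sketch models only the delivery rules; it does not simulate the response patterns (pauses, gradual acceleration) listed in the comparison table.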