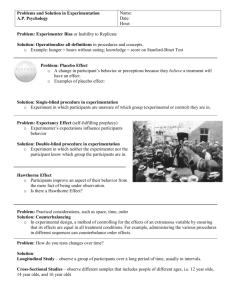

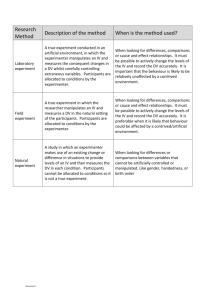

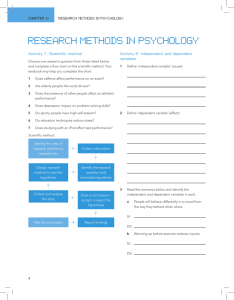

Experimentation in social psychology
