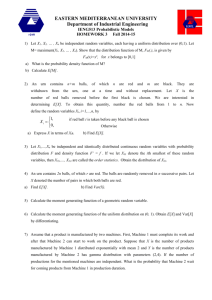

JOINT, MARGINAL, AND CONDITIONAL DISTRIBUTIONS

advertisement

PROBABILITY NOTES - PR4 JOINT, MARGINAL, AND CONDITIONAL DISTRIBUTIONS Joint distribution of random variables ? and @ : A joint distribution of two random variables has a probability function or probability density function ²%Á &³ that is a function of two variables (sometimes denoted ?Á@ ²%Á &³). If ? and @ are discrete random variables, then ²%Á &³ must satisfy (i) ²%Á &³ and (ii) ²%Á &³ ~ . % & If ? and @ are continuous random variables, then ²%Á &³ must satisfy B B (i) ²%Á &³ and (ii) cB cB ²%Á &³ & % ~ . It is possible to have a joint distribution in which one variable is discrete and one is continuous, or either has a mixed distribution. The joint distribution of two random variables can be extended to a joint distribution of any number of random variables. If ( is a subset of two-dimensional space, then 7 ´²?Á @ ³ (µ is the double summation (discrete case) or double integral (continuous case) of ²%Á &³ over the region (. Cumulative distribution function of a joint distribution: If random variables ? and @ have a joint distribution, then the cumulative distribution function is - ²%Á &³ ~ 7 ´²? %³ q ²@ &³µ . % & In the continuous case, - ²%Á &³ ~ cB cB ² Á !³ ! , % and in the discrete case, - ²%Á &³ ~ & ² Á !³ . ~cB !~cB In the continuous case, C%C C& - ²%Á &³ ~ ²%Á &³ . Expectation of a function of jointly distributed random variables: If ²%Á &³ is a function of two variables, and ? and @ are jointly distributed random variables, then the expected value of ²?Á @ ³ is defined to be ,´²?Á @ ³µ ~ ²%Á &³ h ²%Á &³ in the discrete case, and % & B B ,´²?Á @ ³µ ~ cB cB ²%Á &³ h ²%Á &³ & % in the continuous case. 1 Marginal distribution of ? found from a joint distribution of ? and @ : If ? and @ have a joint distribution with joint density or probability function ²%Á &³, then the marginal distribution of ? has a probability function or density function denoted ? ²%³, which B is equal to ? ²%³ ~ ²%Á &³ in the discrete case, and is equal to ? ²%³ ~ ²%Á &³ & cB & in the continuous case. The density function for the marginal distribution of @ is found in a B similar way ; @ ²&³ is equal to either @ ²&³ ~ ²%Á &³ or @ ²&³ ~ cB ²%Á &³ % . % If the cumulative distribution function of the joint distribution of ? and @ is - ²%Á &³, then -? ²%³ ~ lim - ²%Á &³ and -@ ²&³ ~ lim - ²%Á &³ . &¦B %¦B This can be extended to define the marginal distribution of any one (or subcollection) variable in a multivariate distribution. Independence of random variables ? and @ : Random variables ? and @ with cumulative distribution functions -? ²%³ and -@ ²&³ are said to be independent (or stochastically independent) if the cumulative distribution function of the joint distribution - ²%Á &³ can be factored in the form - ²%Á &³ ~ -? ²%³ h -@ ²&³ for all ²%Á &³. This definition can be extended to a multivariate distribution of more than 2 variables. If ? and @ are independent, then ²%Á &³ ~ ? ²%³ h @ ²&³ , (but the reverse implication is not always true, i.e. if the joint distribution probability or density function can be factored in the form ²%Á &³ ~ ? ²%³ h @ ²&³ then ? and @ are usually, but not always, independent). Conditional distribution of @ given ? ~ %: Suppose that the random variables ? and @ have joint density/probability function ²%Á &³, and the density/probability function of the marginal distribution of ? is ? ²%³. The density/probability function of the conditional distribution of @ ²%Á&³ given ? ~ % is @ O? ²&O? ~ %³ ~ ²%³ , if ? ²%³ £ . ? B The conditional expectation of @ given ? ~ % is ,´@ O? ~ %µ ~ cB & h @ O? ²&O? ~ %³ & in the continuous case, and ,´@ O? ~ %µ ~ & h @ O? ²&O? ~ %³ in the discrete case. % The conditional density/probability is also written as @ O? ²&O%³ , or ²&O%³ . If ? and @ are independent random variables, then @ O? ²&O? ~ %³ ~ @ ²&³ and ?O@ ²%O@ ~ &³ ~ ? ²%³ . Covariance between random variables ? and @ : If random variables ? and @ are jointly distributed with joint density/probability function ²%Á &³, then the covariance between ? and @ is *#´?Á @ µ ~ ,´²? c ,´?µ³²@ c ,´@ µ³µ ~ ,´²? c ? ³²@ c @ ³µ . Note that *#´?Á ?µ ~ = ´?µ . 2 Coefficient of correlation between random variables ? and @ : The coefficient of correlation between random variables ? and @ is ²?Á @ ³ ~ ?Á@ ~ *#´?Á@ µ ? @ , where ? and @ are the standard deviations of ? and @ respectively. Moment generating function of a joint distribution: Given jointly distributed random variables ? and @ , the moment generating function of the joint distribution is 4?Á@ ²! Á ! ³ ~ ,´! ?b! @ µ . This definition can be extended to the joint distribution of any number of random variables. Multinomial distribution with parameters Á Á Á À À ÀÁ (where is a positive integer and for all ~ Á Á ÀÀÀÁ and b b Ä ~ ³: Suppose that an experiment has possible outcomes, with probabilities Á Á ÀÀÀÁ respectively. If the experiment is performed successive times (independently), let ? denote the number of experiments that resulted in outcome , so that ? b ? b Ä b ? ~ . The multivariate probability function is ²% Á % Á ÀÀÀÁ % ³ ~ % [h%[[Ä% [ h % h % Ä% . ,´? µ ~ Á = ´? µ ~ ² c ³ Á *#´? ? µ ~ c . For example, the toss of a fair die results in one of ~ 6 outcomes, with probabilities ~ for ~ Á Á Á Á Á . If the die is tossed times, then with ? ~ # of tosses resulting in face "" turning up, the multivariate distribution of ? Á ? Á ÀÀÀÁ ? is a multinomial distribution. Some results and formulas related to this section are: (i) ,´ ²?Á @ ³ b ²?Á @ ³µ ~ ,´ ²?Á @ ³µ b ,´ ²?Á @ ³µ , and in particular, ,´? b @ µ ~ ,´?µ b ,´@ µ and ,´? µ ~ ,´? µ (ii) lim - ²%Á &³ ~ lim - ²%Á &³ ~ %¦cB &¦cB (iii) 7 ´²% ? % ³ q ²& @ & ³µ ~ - ²% Á & ³ c - ²% Á & ³ c - ²% Á & ³ b - ²% Á & ³ (iv) 7 ´²? %³ q ²@ &³µ ~ -? ²%³ b -@ ²&³ c - ²%Á &³ 3 (v) If ? and @ are independent, then for any functions and , ,´²?³ h ²@ ³µ ~ ,´²?³µ h ,´²@ ³µ , and in particular, ,´? h @ µ ~ ,´?µ h ,´@ µ. (vi) The density/probability function of jointly distributed variables ? and @ can be written in the form ²%Á &³ ~ @ O? ²&O? ~ %³ h ? ²%³ ~ ?O@ ²%O@ ~ &³ h @ ²&³ (vii) *#´?Á @ µ ~ ,´? h @ µ c ? h @ ~ ,´?@ µ c ,´?µ h ,´@ µ . *#´?Á @ µ ~ *#´@ Á ?µ . If ? and @ are independent, then ,´? h @ µ ~ ,´?µ h ,´@ µ and *#´?Á @ µ ~ . For constants Á Á Á Á Á and random variables ?Á @ Á A and > , *#´? b @ b Á A b > b µ ~ *#´?Á Aµ b *#´?Á > µ b *#´@ Á Aµ b *#´@ Á > µ (viii) For any jointly distributed random variables ? and @ , c ?@ (ix) = ´? b @ µ ~ ,´²? b @ ³ µ c ²,´? b @ µ³ ~ ,´? b ?@ b @ µ c ²,´?µ b ,´@ µ³ ~ ,´? µ b ,´?@ µ b ,´@ µ c ²,´?µ³ c ,´?µ,´@ µ c ²,´@ µ³ ~ = ´?µ b = ´@ µ b h *#´?Á @ µ If ? and @ are independent, then = ´? b @ µ ~ = ´?µ b = ´@ µ . For any ?Á @ , = ´? b @ b µ ~ = ´?µ b = ´@ µ b h *#´?Á @ µ (x) 4?Á@ ²! Á ³ ~ ,´! ? µ ~ 4? ²! ³ and 4?Á@ ²Á ! ³ ~ ,´! @ µ ~ 4@ ²! ³ (xi) C!C 4?Á@ ²! Á ! ³e ~ ,´?µ , C!C 4?Á@ ²! Á ! ³e ~ ,´@ µ ! ~! ~ ! ~! ~ b C C ! C ! 4?Á@ ²! Á ! ³e! ~! ~ ~ ,´? h @ µ (xii) If 4 ²! Á ! ³ ~ 4 ²! Á ³ h 4 ²Á ! ³ for ! and ! in a region about ²Á ³, then ? and @ are independent. (xiii) If @ ~ ? b then 4@ ²!³ ~ ! 4? ²!³ . (xiv) If ? and @ are jointly distributed, then for any &, ,´?O@ ~ &µ depends on &, say ,´?O@ ~ &µ ~ ²&³ . It can then be shown that ,´²@ ³µ ~ ,´?µ ; this is more usually written in the form ,´ ,´?O@ µ µ ~ ,´?µ . It can also be shown that = ´?µ ~ ,´ = ´?O@ µ µ b = ´ ,´?O@ µ µ . 4 (xv) A random variable ? can be defined as a combination of two (or more) random variables ? and ? , defined in terms of whether or not a particular event ( occurs. ?~F ? if event ( occurs (probability ) ? if event ( does not occur (probability c ) Then, @ can be the indicator random variable 0( ~ F if ( occurs (prob. ) if ( doesn't occur (prob. c) Probabilities and expectations involving ? can be found by "conditioning" over @ : 7 ´? µ ~ 7 ´? O( occursµ h 7 ´( occursµ b 7 ´? O(Z occursµ h 7 ´(Z occursµ ~ 7 ´? µ h b 7 ´? µ h ² c ³ , ,´? µ ~ ,´? µ h b ,´? µ h ² c ³ Á 4? ²!³ ~ 4? ²!³ h b 4? ²!³ h ² c ³ This is really an illustration of a mixture of the distributions of ? and ? , with ~ and ~ c . As an example, suppose there are two urns containing balls - Urn I contains 5 red and 5 blue balls and Urn II contains 8 red and 2 blue balls. A die is tossed, and if the number turning up is even then 2 balls are picked from Urn I, and if the number turning up is odd then 3 balls are picked from Urn II. ? is the number of red balls chosen. Event ( would be ( ~ die toss is even. Random variable ? would be the number of red balls chosen from Urn I and ? would be the number of red balls chosen from Urn II, and since each urn is equally likely to be chosen, ~ ~ À (xvi) If ? and @ have a joint distribution which is uniform on the two dimensional region 9 (usually 9 will be a triangle, rectangle or circle in the ²%Á &³ plane), then the conditional distribution of @ given ? ~ % has a uniform distribution on the line segment (or segments) defined by the intersection of the region 9 with the line ? ~ %. The marginal distribution of @ might or might not be uniform. Example 116: If ²%Á &³ ~ 2²% b & ³ is the density function for the joint distribution of the continuous random variables ? and @ defined over the unit square bounded by the points ²Á ³Á ²Á ³ Á ²Á ³ and ²Á ³, find 2 . Solution: The (double) integral of the density function over the region of density must be 1, so that ~ 2²% b & ³ & % ~ 2 h S 2 ~ . U Example 117: The cumulative distribution function for the joint distribution of the continuous random variables ? and @ is - ²%Á &³ ~ ²À³²% & b % & ³ , for % and & . Find ² Á ³ . Solution: ²%Á &³ ~ C%C C& - ²%Á &³ ~ ²À³²% b %&³ S ² Á ³ ~ À 5 U Example 118: ? and @ are discrete random variables which are jointly distributed with the following probability function ²%Á &³: ? c @ c Find ,´? h @ µ. Solution: ,´?@ µ ~ %& h ²%Á &³ ~ ² c ³²³² ³ b ² c ³²³² ³ b ² c ³² c ³² ³ % & b ²³²³² ³ b ²³²³²³ b ²³² c ³² ³ b ²³²³² ³ b ²³²³² ³ b ²³² c ³² ³ ~ . U Example 119: Continuous random variables ? and @ have a joint distribution with density function ²%Á &³ ~ ²c%c&³ in the region bounded by & ~ Á % ~ and & ~ c %. Find the density function for the marginal distribution of ? for % . Solution: The region of joint density is illustrated in the graph at the right. Note that ? must be in the interval ²Á ³ and @ must be in the interval ²Á ³. B Since ? ²%³ ~ cB ²%Á &³ & , we note that given a value of % in ²Á ³, the possible values of & (with non-zero density for ²%Á &³) must satisfy & c %, so that c% ²%Á &³ & ? ²%³ ~ c% ²c%c&³ & ~ ² c %³ . U ~ Example 120: Suppose that ? and @ are independent continuous random variables with the following density functions - ? ²%³ ~ for % and @ ²&³ ~ & for & . Find 7 ´@ ?µ. Solution: Since ? and @ are independent, the density function of the joint distribution of ? and @ is ²%Á &³ ~ ? ²%³ h @ ²&³ ~ & , and is defined on the unit square. The graph at the right illustrates the region for the probability % in question. 7 ´@ ?µ ~ & & % ~ . U 6 Example 121: Continuous random variables ? and @ have a joint distribution with density function ²%Á &³ ~ % b for % and & . Find 7 ´? O@ µ. %& 7 ´²? ³q²@ ³µ . 7 ´@ µ %& 7 ´²? ³ q ²@ ³µ ~ ° ° ´% b µ & % ~ . %& 7 ´@ µ ~ ° @ ²&³ & ~ ° ´ ²%Á &³ %µ & ~ ° ´% b µ % & ~ S ° 7 ´? O@ µ ~ ° ~ U . Solution: 7 ´? O@ µ ~ Example 122: Continuous random variables ? and @ have a joint distribution with density function ²%Á &³ ~ ² &³c% for % B and & . Find 7 ´? O@ ~ µ . 7 ´²?³q²@ ~ ³µ Solution: 7 ´? O@ ~ µ ~ . @ ² ³ l B B 7 ´²? ³ q ²@ ~ ³µ ~ ²%Á ³ % ~ ² ³c% % ~ h c . B B @ ² ³ ~ ²%Á ³ % ~ ² ³c% % ~ S 7 ´? O@ ~ µ ~ c . U l Example 123: ? is a continuous random variable with density function ? ²%³ ~ % b for % . ? is also jointly distributed with the continuous random variable @ , and the conditional density function of @ given ? ~ % is %b& @ O? ²&O? ~ %³ ~ for % and & . Find @ ²&³ for & . %b Solution: ²%Á &³ ~ ²&O%³ h ? ²%³ ~ Then, @ ²&³ ~ ²%Á &³ % ~ & b À %b& h ²% b ³ ~ % b & . %b U Example 124: Find *#´?Á @ µ for the jointly distributed discrete random variables in Example 118 above. Solution: *#´?Á @ µ ~ ,´?@ µ c ,´?µ h ,´@ µ . In Example 118 it was found that ,´?@ µ ~ . The marginal probability function for ? is 7 ´? ~ µ ~ b b ~ , 7 ´? ~ µ ~ and 7 ´? ~ c µ ~ , and the mean of ? is ,´?µ ~ ²³² ³ b ²³² ³ b ² c ³² ³ ~ . In a similar way, the probability function of @ is found to be 7 ´@ ~ µ ~ Á 7 ´@ ~ µ ~ Á and 7 ´@ ~ c µ ~ Á with a mean of ,´@ µ ~ c . Then, *#´?Á @ µ ~ c ² ³² c ³ ~ U . 7 Example 125: The coefficient of correlation between random variables ? and @ is , ~ Á ~ . The random variable A is defined to be A ~ ? c @ , and and ? @ it is found that A ~ . Find . Solution: A ~ = ´Aµ ~ = ´?µ b = ´@ µ c h ²³²³ *#´?Á @ µ . Since *#´?Á @ µ ~ ´?Á @ µ h ? h @ ~ h l h l ~ Á it follows that ~ A ~ b ²³ c ² ³ ~ S ~ . U Example 126: Suppose that ? and @ are random variables whose joint distribution has moment generating function 4 ²! Á ! ³ ~ ² ! b ! b ³ , for all real ! and ! . Find the covariance between ? and @ . Solution: *#´?Á @ µ ~ ,´?@ µ c ,´?µ h ,´@ µ . ,´?@ µ ~ C !C C ! 4?Á@ ²! Á ! ³e ! ~! ~ ~ ²³²³² ! b ! b ³ ² ! ³² ! ³e ~ , ! ~! ~ ~ ²³² ! b ! b ³ ² ! ³e ~ ! ~! ~ ! ~! ~ ,´@ µ ~ C!C 4?Á@ ²! Á ! ³e ~ ²³² ! b ! b ³ ² ! ³e ~ ,´?µ ~ C!C 4?Á@ ²! Á ! ³e ! ~! ~ ! ~! ~ S *#´?Á @ µ ~ c ² ³² ³ ~ c . U Á Á Example 127: Suppose that ? has a continuous distribution with p.d.f. ? ²%³ ~ % on the interval ²Á ³ , and ? ²%³ ~ elsewhere. Suppose that @ is a continuous random variable such that the conditional distribution of @ given ? ~ % is uniform on the interval ²Á %³ . Find the mean and variance of @ . Solution: This problem can be approached in two ways. (i) The first approach is to determine the unconditional (marginal) distribution of @ . We are given ? ²%³ ~ % for % , and @ O? ²&O? ~ %³ ~ % for & % . Then, ²%Á &³ ~ ²&O%³ h ? ²%³ ~ % h % ~ for % and & % . The unconditional (marginal) distribution of @ has p.d.f. B @ ²&³ ~ cB ²%Á &³ % ~ & % ~ ² c &³ for & (and @ ²&³ is elsewhere). Then ,´@ µ ~ & h ² c &³ & ~ , ,´@ µ ~ & h ² c &³ & ~ , and = ´@ µ ~ ,´@ µ c ²,´@ µ³ ~ c ² ³ ~ . 8 (ii) The second approach is to use the relationships ,´@ µ ~ ,´ ,´@ O?µ µ and = ´@ µ ~ ,´ = ´@ O?µ µ b = ´ ,´@ O?µ µ . From the conditional density ²&O? ~ %³ ~ % for & % , we have % ,´@ O? ~ %µ ~ & h & ~ % , so that ,´@ O?µ ~ ? , and, since ? ²%³ ~ %, % % ,´ ,´@ O?µ µ ~ ,´ ? µ ~ h %% ~ ~ ,´@ µ . In a similar way, = ´@ O? ~ %µ ~ ,´@ O? ~ %µ c ²,´@ O? ~ %µ³ , % where ,´@ O? ~ %µ ~ & h & ~ % , so that ,´@ O?µ ~ ? , and since % ? ? ? ? ,´@ O?µ ~ , we have = ´@ O?µ ~ c ² ³ ~ . % Then ,´ = ´@ O?µ µ ~ ,´ ? µ ~ h % % ~ , and = ´,´@ O?µµ ~ = ´ ? µ ~ = ´?µ ~ h ´,´? µ c ²,´?µ³ µ ~ h ´ c ² ³ µ ~ so that ,´ = ´@ O?µ µ b = ´ ,´@ O?µ µ ~ b ~ ~ = ´@ µ . U 9 FUNCTIONS AND TRANSFORMATIONS OF RANDOM VARIABLES Distribution of a function of a continuous random variable ? : Suppose that ? is a continuous random variable with p.d.f. ? ²%³ and c.d.f. -? ²%³, and suppose that "²%³ is a oneto-one function (usually " is either strictly increasing, such as "²%³ ~ % Á % Á l% or %, or " is strictly decreasing, such as "²%³ ~ c% ). As a one-to-one function, " has an inverse function #, so that #²"²%³³ ~ % . Then the random variable @ ~ "²?³ (@ is referred to as a transformation of ?) has p.d.f. @ ²&³ found as follows: @ ²&³ ~ ? ²#²&³³ h O#Z ²&³O . If " is a strictly increasing function, then -@ ²&³ ~ 7 ´@ &µ ~ 7 ´"²?³ %µ ~ 7 ´? #²&³µ ~ -? ²#²&³³ . Distribution of a function of a discrete random variable ? : Suppose that ? is a discrete random variable with probability function ²%³. If "²%³ is a function of %, and @ is a random variable defined by the equation @ ~ "²?³ , then @ is a discrete random variable with probability function ²&³ ~ ²%³ - given a value of &, find all values of % for &~"²%³ which & ~ "²%³ (say "²% ³ ~ "²% ³ ~ Ä ~ "²%! ³ ~ &³, and then ²&³ is the sum of those ²% ³ probabilities. If ? and @ are independent random variables, and " and # are functions, then the random variables "²?³ and #²@ ³ are independent. The distribution of a sum of random variables: (i) If ? and ? are random variables, and @ ~ ? b ? , then ,´@ µ ~ ,´? µ b ,´? µ and = ´@ µ ~ = ´? µ b = ´? µ b *#´? Á ? µ (ii) If ? and ? are discrete non-negative integer valued random variables with joint probability function ²% Á % ³ , then for an integer , 7 ´? b ? ~ µ ~ ²% Á c % ³ (this considers all combinations of ? and ? % ~ whose sum is ). If ? and ? are independent with probability functions ²% ³ and ²% ³, respectively, then 7 ´? b ? ~ µ ~ ²% ³ h ² c % ³ (this is the convolution method of % ~ finding the distribution of the sum of independent discrete random variables). 10 (iii) If ? and ? are continuous random variables with joint density function ²% Á % ³ B then the density function of @ ~ ? b ? is @ ²&³ ~ ²% Á & c % ³ % . cB If ? and ? are independent continuous random variables with density functions ²% ³ and ²% ³Á then the density function of @ ~ ? b ? is B @ ²&³ ~ cB ²% ³ h ²& c % ³ % (iv) If ? Á ? Á ÀÀÀÁ ? are random variables, and the random variable @ is defined to be @ ~ ? , then ,´@ µ ~ ,´? µ and ~ ~ ~ ~~b = ´@ µ ~ = ´? µ b *#´? Á ? µ . If ? Á ? Á ÀÀÀÁ ? are mutually independent random variables, then ~ ~ = ´@ µ ~ = ´? µ and 4@ ²!³ ~ 4? ²!³ ~ 4? ²!³ h 4? ²!³Ä4? ²!³ (v) If ? Á ? Á ÀÀÀÁ ? and @ Á @ Á ÀÀÀÁ @ are random variables and Á Á ÀÀÀÁ Á Á Á Á ÀÀÀÁ and are constants, then ~ ~ ~ ~ *#´ ? b Á @ b µ ~ *#´? Á @ µ (vi) The Central Limit Theorem: Suppose that ? is a random variable with mean and standard deviation and suppose that ? Á ? Á ÀÀÀÁ ? are independent random variables with the same distribution as ? . Let @ ~ ? b ? b Ä b ? . Then ,´@ µ ~ and = ´@ µ ~ , and as increases, the distribution of @ approaches a normal distribution 5 ²Á ³. This is a justification for using the normal distribution as an approximation to the distribution of a sum of random variables. (vii) Sums of certain distributions: Suppose that ? Á ? Á ÀÀÀÁ ? are independent random variables and @ ~ ? distribution of ? Bernoulli )²Á ³ binomial )² Á ³ ~ distribution of @ binomial )²Á ³ binomial )² Á ³ geometric negative binomial Á negative binomial Á negative binomial Á Poisson Poisson 5 ² Á ³ 5 ² Á ³ 11 Example 128: The random variable ? has an exponential distribution with a mean of 1. The random variable @ is defined to be @ ~ ? . Find @ ²&³, the p.d.f. of @ . Solution: -@ ²&³ ~ 7 ´@ &µ ~ 7 ´ ? &µ ~ 7 ´? &° µ ~ c c &° &° S @ ²&³ ~ -@Z ²&³ ~ & ² c c ³ ~ &° h c . &° Alternatively, since @ ~ ? (& ~ "²%³ ~ %, and is a strictly increasing function with inverse % ~ #²&³ ~ &° ), and ? ~ @ ° , it follows that &° &° @ ²&³ ~ ? ²&° ³ h e & e ~ &° h c . U Example 129: Suppose that ? and @ are independent discrete integer-valued random variables with ? uniformly distributed on the integers to , and @ having the following probability function - @ ²³ ~ À , @ ²³ ~ À , @ ²³ ~ À . Let A ~ ? b @ . Find 7 ´A ~ µ . Solution: Using the fact that ? ²%³ ~ À for % ~ Á Á Á Á , and the convolution method for independent discrete random variables, we have A ²³ ~ ? ²³ h @ ² c ³ ~ ~ ²³²³ b ²À³²³ b ²À³²À³ b ²À³²³ b ²À³²À³ b ²À³²À³ ~ À . U Example 130: ? and ? are independent exponential random variables each with a mean of 1. Find 7 ´? b ? µ. Solution: Using the convolution method, the density function of @ ~ ? b ? is & & @ ²&³ ~ ? ²!³ h ? ²& c !³ ! ~ c! h c²&c!³ ! ~ &c& , so that &~ ~ c c &~ 7 ´? b ? µ ~ 7 ´@ µ ~ &c& & ~ ´ c &c& c c& µe U (the last integral required integration by parts). Example 131: Given independent random variables ? Á ? Á ÀÀÀÁ ? each having the same variance of , and defining < ~ ? b ? b Ä b ?c and = ~ ? b ? b Ä b ? , find the coefficient of correlation between < and = . ; < ~ ² b b b Ä b ³ ~ ² b ³ ~ = . Since the ? 's are independent, if £ then *#´? Á ? µ ~ . Then, noting that *#´> Á > µ ~ = ´> µ, we have *#´< Á = µ ~ *#´? Á ? µ b *#´? Á ? µ b Ä b *#´?c Á ? µ Solution: <= ~ *#´< Á= µ < = ~ = ´? µ b = ´? µ b Ä b = ´?c µ ~ ² c ³ . ²c³ Then, <= ~ ²b³ ~ c b À U 12 Example 132: Independent random variables ?Á @ and A are identically distributed. Let > ~ ? b @ . The moment generating function of > is 4> ²!³ ~ ²À b À! ³ . Find the moment generating function of = ~ ? b @ b A . Solution: For independent random variables, 4?b@ ²!³ ~ 4? ²!³ h 4@ ²!³ ~ ²À b À! ³ . Since ? and @ have identical distributions, they have the same moment generating function. Thus, 4? ²!³ ~ ²À b À! ³ , and then 4= ²!³ ~ 4? ²!³ h 4@ ²!³ h 4A ²!³ ~ ²À b À! ³ . Alternatively, note that the moment generating function of the binomial )²Á ³ is ² c b ! ³ . Thus, ? b @ has a )² Á À³ distribution, and each of ?Á @ and A has a )²Á À³ distribution, so that the sum of these independent binomial distributions is )²Á À³ , with m.g.f. ²À b À! ³ . U Example 133: The birth weight of males is normally distributed with mean 6 pounds, 10 ounces, standard deviation 1 pound. For females, the mean weight is 7 pounds, 2 ounces with standard deviation 12 ounces. Given two independent male/female births, find the probability that the baby boy outweighs the baby girl. Solution: Let random variables ? and @ denote the boy's weight and girl's weight, respectively. Then, > ~ ? c @ has a normal distribution with mean c ~ c lb. and variance ? b @ ~ b ~ À Then, 7 ´? @ µ ~ 7 ´? c @ µ ~ 7 > > c²c ³ c²c ³ ? ~ 7 ´A Àµ, ° ° l l where A has standard normal distribution (> was standardized). Referring to the standard normal table, this probability is À . U Example 134: If the number of typographical errors per page type by a certain typist follows a Poisson distribution with a mean of , find the probability that the total number of errors in 10 randomly selected pages is 10. Solution: The 10 randomly selected pages have independent distributions of errors per page. The sum of independent Poisson random variables with parameters Á ÀÀÀÀÁ has a Poisson distribution with parameter . Thus, the total number of errors in the 10 randomly selected pages has a Poisson distribution with parameter . The probability of 10 errors in the 10 pages is c ² ³ . [ 13 U