Pseudoreplication Conventions Are Testable Hypotheses

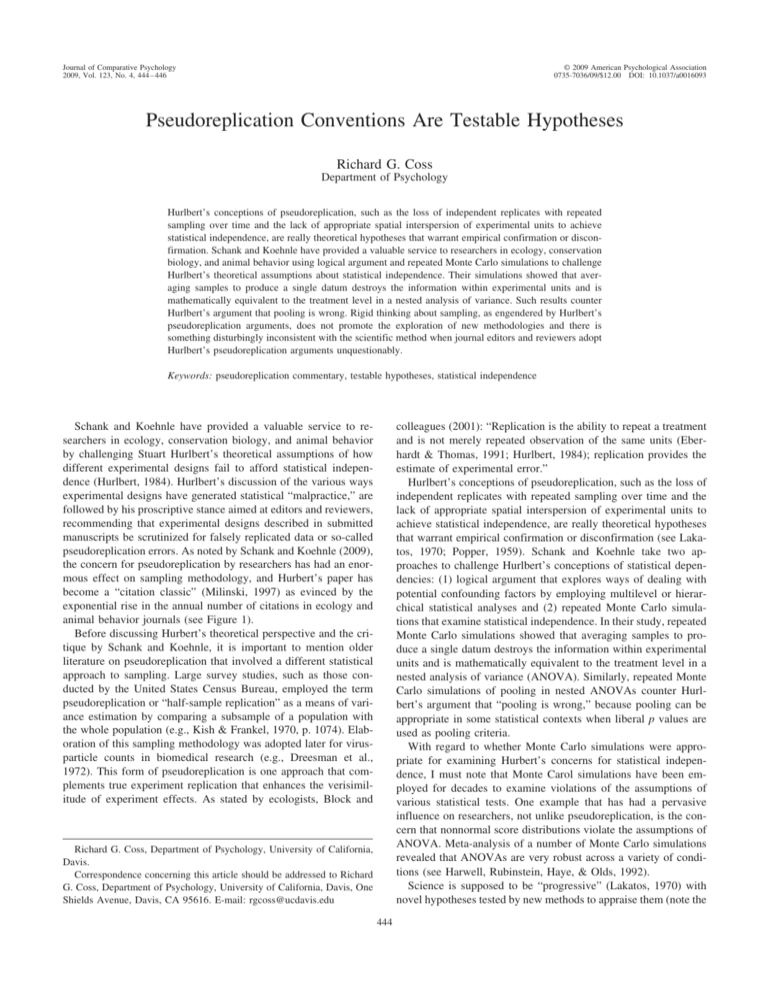

Journal of Comparative Psychology, 2009, Vol. 123, No. 4, 444–446. © 2009 American Psychological Association. 0735-7036/09/$12.00 DOI: 10.1037/a0016093

Richard G. Coss
Department of Psychology

Hurlbert’s conceptions of pseudoreplication, such as the loss of independent replicates with repeated sampling over time and the lack of appropriate spatial interspersion of experimental units to achieve statistical independence, are really theoretical hypotheses that warrant empirical confirmation or disconfirmation. Schank and Koehnle have provided a valuable service to researchers in ecology, conservation biology, and animal behavior using logical argument and repeated Monte Carlo simulations to challenge Hurlbert’s theoretical assumptions about statistical independence. Their simulations showed that averaging samples to produce a single datum destroys the information within experimental units and is mathematically equivalent to the treatment level in a nested analysis of variance. Such results counter Hurlbert’s argument that pooling is wrong. Rigid thinking about sampling, as engendered by Hurlbert’s pseudoreplication arguments, does not promote the exploration of new methodologies, and there is something disturbingly inconsistent with the scientific method when journal editors and reviewers adopt Hurlbert’s pseudoreplication arguments unquestioningly.
Keywords: pseudoreplication commentary, testable hypotheses, statistical independence

Richard G. Coss, Department of Psychology, University of California, Davis. Correspondence concerning this article should be addressed to Richard G. Coss, Department of Psychology, University of California, Davis, One Shields Avenue, Davis, CA 95616. E-mail: rgcoss@ucdavis.edu

Schank and Koehnle have provided a valuable service to researchers in ecology, conservation biology, and animal behavior by challenging Stuart Hurlbert’s theoretical assumptions of how different experimental designs fail to afford statistical independence (Hurlbert, 1984). Hurlbert’s discussion of the various ways experimental designs have generated statistical “malpractice” is followed by his proscriptive stance aimed at editors and reviewers, recommending that experimental designs described in submitted manuscripts be scrutinized for falsely replicated data or so-called pseudoreplication errors. As noted by Schank and Koehnle (2009), the concern for pseudoreplication by researchers has had an enormous effect on sampling methodology, and Hurlbert’s paper has become a “citation classic” (Milinski, 1997) as evinced by the exponential rise in the annual number of citations in ecology and animal behavior journals (see Figure 1).

Figure 1. Yearly frequencies of journal and book citations mentioning pseudoreplication since 1984 using Google Scholar to identify words and phrases via character recognition in .PDF image files. Key phrases used to locate relevant studies concerned about pseudoreplication were “avoid pseudoreplication” and “prevent pseudoreplication,” two phrases that encompass more than 90% of citations with this theme. In recent years, animal behavior research accounts for about one third of all citations.

Before discussing Hurlbert’s theoretical perspective and the critique by Schank and Koehnle, it is important to mention older literature on pseudoreplication that involved a different statistical approach to sampling. Large survey studies, such as those conducted by the United States Census Bureau, employed the term pseudoreplication or “half-sample replication” as a means of variance estimation by comparing a subsample of a population with the whole population (e.g., Kish & Frankel, 1970, p. 1074). Elaboration of this sampling methodology was adopted later for virus-particle counts in biomedical research (e.g., Dreesman et al., 1972). This form of pseudoreplication is one approach that complements true experiment replication that enhances the verisimilitude of experiment effects. As stated by ecologists Block and colleagues (2001): “Replication is the ability to repeat a treatment and is not merely repeated observation of the same units (Eberhardt & Thomas, 1991; Hurlbert, 1984); replication provides the estimate of experimental error.”

Hurlbert’s conceptions of pseudoreplication, such as the loss of independent replicates with repeated sampling over time and the lack of appropriate spatial interspersion of experimental units to achieve statistical independence, are really theoretical hypotheses that warrant empirical confirmation or disconfirmation (see Lakatos, 1970; Popper, 1959). Schank and Koehnle take two approaches to challenge Hurlbert’s conceptions of statistical dependencies: (1) logical argument that explores ways of dealing with potential confounding factors by employing multilevel or hierarchical statistical analyses and (2) repeated Monte Carlo simulations that examine statistical independence. In their study, repeated Monte Carlo simulations showed that averaging samples to produce a single datum destroys the information within experimental units and is mathematically equivalent to the treatment level in a nested analysis of variance (ANOVA). Similarly, repeated Monte Carlo simulations of pooling in nested ANOVAs counter Hurlbert’s argument that “pooling is wrong,” because pooling can be appropriate in some statistical contexts when liberal p values are used as pooling criteria.

With regard to whether Monte Carlo simulations were appropriate for examining Hurlbert’s concerns for statistical independence, I must note that Monte Carlo simulations have been employed for decades to examine violations of the assumptions of various statistical tests. One example that has had a pervasive influence on researchers, not unlike pseudoreplication, is the concern that nonnormal score distributions violate the assumptions of ANOVA. Meta-analysis of a number of Monte Carlo simulations revealed that ANOVAs are very robust across a variety of conditions (see Harwell, Rubinstein, Haye, & Olds, 1992).

Science is supposed to be “progressive” (Lakatos, 1970) with novel hypotheses tested by new methods to appraise them (note the recent trend in using Bayesian statistics). Development of new analytical procedures is a feature of progressive science, and Monte Carlo techniques (such as random-variable generation) play important roles in these developments. Rigid thinking about sampling, as engendered by Hurlbert’s pseudoreplication arguments, does not promote the exploration of new methodologies. Moreover, there is something disturbingly inconsistent with the scientific method when journal editors and reviewers adopt Hurlbert’s pseudoreplication arguments unquestioningly. As a reviewer, I have been repeatedly dismayed by the commentaries of editors and reviewers who have rejected articles for publication because the experimental methodologies were thought to have violated pseudoreplication conventions, especially those fostered by Kroodsma and colleagues (2001).
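
The kind of Monte Carlo robustness check mentioned above for ANOVA under nonnormal scores can be illustrated with a minimal sketch. It is not a reproduction of the simulations by Schank and Koehnle or of those summarized by Harwell and colleagues. Everything specific here is an assumption made for illustration: three groups of 10 scores, an exponential distribution as the nonnormal case, the tabled 5% critical value of F(2, 27), and the hypothetical function names `f_statistic` and `type_i_error_rate`. Both conditions simulate a true null hypothesis, so the fraction of runs exceeding the cutoff estimates the Type I error rate.

```python
import random
import statistics

K_GROUPS = 3        # assumed number of treatment groups
N_PER_GROUP = 10    # assumed number of scores per group
# Upper 5% critical value of F with (2, 27) degrees of freedom, from standard
# F tables; it is only valid for the K_GROUPS and N_PER_GROUP chosen above.
F_CRIT_2_27 = 3.354


def f_statistic(groups):
    """One-way fixed-effects ANOVA F ratio for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [statistics.fmean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


def type_i_error_rate(draw, n_sims=4000, seed=1):
    """Fraction of null simulations whose F ratio exceeds the nominal 5% cutoff.

    `draw` takes a random.Random instance and returns one score; every group
    is sampled from the same distribution, so the null hypothesis is true.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        groups = [[draw(rng) for _ in range(N_PER_GROUP)] for _ in range(K_GROUPS)]
        if f_statistic(groups) > F_CRIT_2_27:
            rejections += 1
    return rejections / n_sims


# Normal scores satisfy the ANOVA assumption; exponential scores are skewed.
normal_rate = type_i_error_rate(lambda rng: rng.gauss(0.0, 1.0))
skewed_rate = type_i_error_rate(lambda rng: rng.expovariate(1.0))
print(f"normal scores: {normal_rate:.3f}")
print(f"skewed scores: {skewed_rate:.3f}")
```

If the rate for skewed scores stays close to the nominal .05, the test is robust to that particular violation under these particular conditions. A fuller study would vary group sizes, distributions, and variance ratios.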
To gain information about why colleagues and editors have been eager to adopt the conventions of pseudoreplication, I conducted informal interviews with research colleagues and journal editors at a recent scientific meeting to elicit any concerns they had about the utility of pseudoreplication conventions in experiment planning. Several were enthusiastic and felt that these conventions increased experimental precision, but others were unconvinced, recognizing the constraints imposed by pseudoreplication conventions, such as the expense of duplicating experimental rooms or field pens, or the logistical hardships of sampling additional populations in the field to reduce environmental correlations. Among several who used playbacks of conspecific or heterospecific vocalizations to elicit behavior in the field, I noted expressions like “seeking ecological validity” to describe the use of multiple exemplars within sound classes and their randomized assignment to subjects. Nothing was said about the potential loss of information that results from averaging exemplars within sound treatments because the focus was on providing natural variation in ecologically relevant sounds. This approach of random assignment of exemplars does not permit systematic comparison of sound treatments that might be graded in a manner that permits comparisons as treatment levels within hierarchical designs.

A final point needs to be made about the utility of conducting numerous small experiments that are easily replicated by others. Hurlbert’s concerns about nonindependence of variables and measures are often untestable in the field because of extensive logistical constraints and expense. Furthermore, advancement of knowledge is more convincing when smaller studies examine multiple auxiliary hypotheses that provide a “protective belt” of inductive support for the more encompassing core hypothesis (Coss & Charles, 2004; Lakatos, 1970; Meehl, 1990).
Such an approach can incorporate meta-analytic procedures to determine the verisimilitude of core hypotheses.

References

Block, W. M., Franklin, A. B., Ward, J. P. Jr., Ganey, J. L., & White, G. C. (2001). Design and implementation of monitoring studies to evaluate the success of ecological restoration. Restoration Ecology, 9, 293–303.

Coss, R. G., & Charles, E. P. (2004). The role of evolutionary hypotheses in psychological research: Instincts, affordances, and relic sex differences. Ecological Psychology, 16, 199–236.

Dreesman, G. R., Hollinger, F. B., Suriano, J. R., Fujioka, R. S., Brunschwig, J. P., & Melnick, J. L. (1972). Biophysical and biochemical heterogeneity of purified Hepatitis B antigen. Journal of Virology, 10, 469–476.

Eberhardt, L. L., & Thomas, J. M. (1991). Designing environmental field studies. Ecological Monographs, 61, 53–73.

Harwell, M. R., Rubinstein, E. N., Haye, W. S., & Olds, C. C. (1992). Summarizing Monte Carlo results in methodological research: The one- and two-factor fixed effects ANOVA cases. Journal of Educational and Behavioral Statistics, 17, 315–339.

Hurlbert, S. H. (1984). Pseudoreplication and the design of ecological field experiments. Ecological Monographs, 54, 187–211.

Kish, L., & Frankel, M. R. (1970). Balanced repeated replications for standard errors. Journal of the American Statistical Association, 65, 1071–1094.

Kroodsma, D. E., Byers, B. E., Goodale, E., Johnson, S., & Liu, W.-C. (2001). Pseudoreplication in playback experiments, revisited a decade later. Animal Behaviour, 61, 1029–1033.

Lakatos, I. (1970). Falsificationism and the methodology of scientific research programmes. In I. Lakatos & A. Musgrave (Eds.), Criticism and the growth of knowledge (pp. 91–196). Cambridge, England: Cambridge University Press.

Meehl, P. E. (1990). Appraising and amending theories: The strategy of Lakatosian defense and two principles that warrant it. Psychological Inquiry, 1, 108–141.

Milinski, M. (1997). How to avoid seven deadly sins in the study of behavior. Advances in the Study of Behavior, 26, 159–180.

Popper, K. R. (1959). The logic of scientific discovery. London: Hutchinson.

Schank, J. C., & Koehnle, T. J. (2009). Pseudoreplication is a pseudoproblem. Journal of Comparative Psychology, 123, 421–433.

Received October 6, 2008
Revision received April 9, 2009
Accepted April 9, 2009