Image-based motion analysis

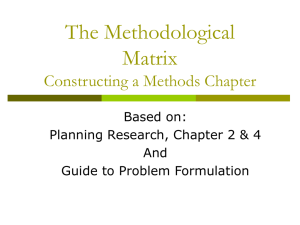

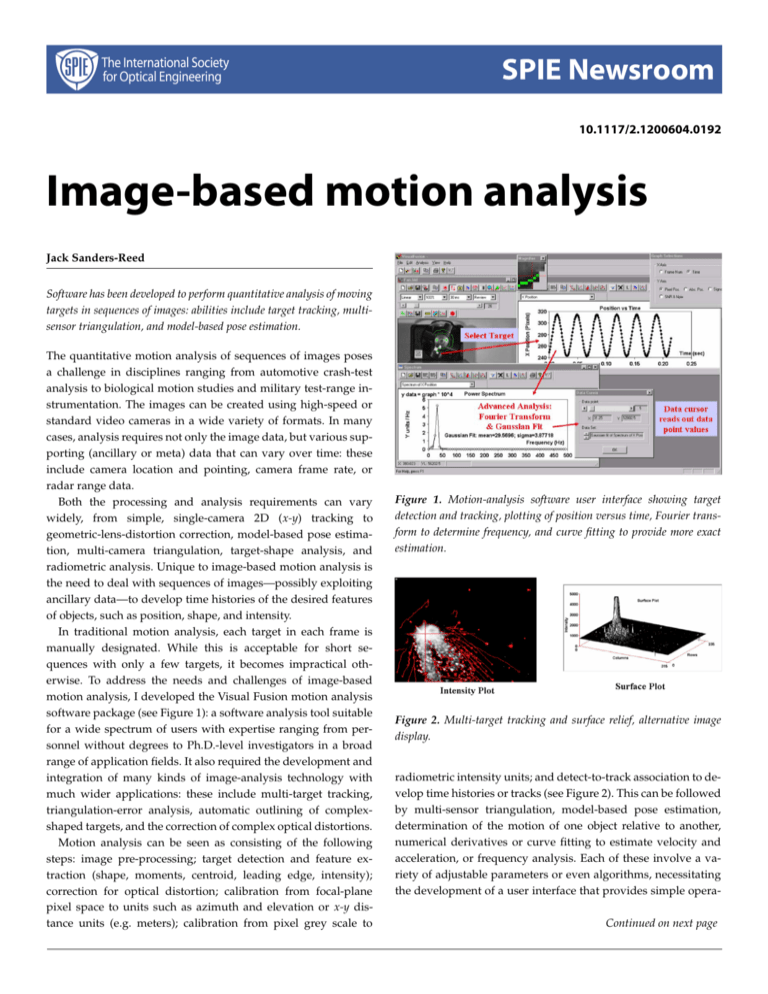

SPIE Newsroom. DOI: 10.1117/2.1200604.0192

Jack Sanders-Reed

Software has been developed to perform quantitative analysis of moving targets in sequences of images: abilities include target tracking, multisensor triangulation, and model-based pose estimation.

The quantitative motion analysis of image sequences poses a challenge in disciplines ranging from automotive crash-test analysis to biological motion studies and military test-range instrumentation. The images can be created using high-speed or standard video cameras in a wide variety of formats. In many cases, analysis requires not only the image data but also various supporting (ancillary or meta) data that can vary over time: these include camera location and pointing, camera frame rate, or radar range data. Both the processing and analysis requirements can vary widely, from simple, single-camera 2D (x-y) tracking to geometric-lens-distortion correction, model-based pose estimation, multi-camera triangulation, target-shape analysis, and radiometric analysis.

Unique to image-based motion analysis is the need to deal with sequences of images, possibly exploiting ancillary data, to develop time histories of the desired features of objects, such as position, shape, and intensity. In traditional motion analysis, each target in each frame is manually designated. While this is acceptable for short sequences with only a few targets, it becomes impractical otherwise.

To address the needs and challenges of image-based motion analysis, I developed the Visual Fusion motion analysis software package (see Figure 1): a software analysis tool suitable for a wide spectrum of users with expertise ranging from personnel without degrees to Ph.D.-level investigators in a broad range of application fields.
This effort also required the development and integration of many kinds of image-analysis technology with much wider applications: these include multi-target tracking, triangulation-error analysis, automatic outlining of complex-shaped targets, and the correction of complex optical distortions.

Motion analysis can be seen as consisting of the following steps: image pre-processing; target detection and feature extraction (shape, moments, centroid, leading edge, intensity); correction for optical distortion; calibration from focal-plane pixel space to units such as azimuth and elevation or x-y distance units (e.g., meters); calibration from pixel gray scale to radiometric intensity units; and detect-to-track association to develop time histories or tracks (see Figure 2). This can be followed by multi-sensor triangulation, model-based pose estimation, determination of the motion of one object relative to another, numerical derivatives or curve fitting to estimate velocity and acceleration, or frequency analysis. Each of these steps involves a variety of adjustable parameters, or even a choice of algorithms, necessitating a user interface that provides simple operations for many applications but allows the experienced user to adjust parameters for more challenging problems.

Figure 1. Motion-analysis software user interface showing target detection and tracking, plotting of position versus time, Fourier transform to determine frequency, and curve fitting to provide more exact estimation.

Figure 2. Multi-target tracking and surface relief, alternative image display.

A basic requirement for most applications is to be able to manipulate and visualize both the image and ancillary data. This means the software must be able to read a wide range of different image and data formats and allow the user to easily view the imagery or data.
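The numerical-derivative and frequency-analysis steps listed above can be illustrated with a short sketch. It is not taken from the Visual Fusion package: the function names `central_difference` and `dominant_frequency` are hypothetical, the track is assumed to be uniformly sampled at a known frame rate, and a naive discrete Fourier transform stands in for whatever transform a production tool would use.

```python
import cmath
import math


def central_difference(values, dt):
    """Estimate the time derivative of a uniformly sampled track.

    Interior samples use a central difference; the two end samples fall
    back to one-sided differences and are therefore less accurate.
    """
    n = len(values)
    if n < 3:
        raise ValueError("need at least three samples")
    deriv = [0.0] * n
    deriv[0] = (values[1] - values[0]) / dt
    deriv[-1] = (values[-1] - values[-2]) / dt
    for i in range(1, n - 1):
        deriv[i] = (values[i + 1] - values[i - 1]) / (2.0 * dt)
    return deriv


def dominant_frequency(values, frame_rate):
    """Return the frequency (Hz) of the strongest non-DC component.

    Uses a naive O(n^2) DFT, which is adequate for short tracks.
    """
    n = len(values)
    mean = sum(values) / n
    centered = [v - mean for v in values]  # remove the DC offset
    best_k, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            c * cmath.exp(-2j * math.pi * k * i / n)
            for i, c in enumerate(centered)
        )
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * frame_rate / n


if __name__ == "__main__":
    frame_rate = 100.0  # assumed camera frame rate in Hz
    dt = 1.0 / frame_rate
    t = [i * dt for i in range(100)]
    x = [0.5 * 9.81 * ti ** 2 for ti in t]  # synthetic free-fall track (m)
    velocity = central_difference(x, dt)
    acceleration = central_difference(velocity, dt)
    print(velocity[50], acceleration[50])
```

Applying `central_difference` twice gives acceleration, but differencing amplifies measurement noise, which is one reason curve fitting is often preferred for noisy tracks.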
Basic viewing tools include the ability to adjust gray scale to enhance features of interest, to perform operations such as edge enhancement or convolution filtering, and to apply electronic zoom. Additionally, it is sometimes informative to display imagery in a 3D relief format where bright regions appear as higher elevations.

Basic target detection involves pre-processing, followed by threshold detection (dark targets on light or light targets on dark), and then either correlation detection (in which the software finds the various user-selected targets) or detection of pre-defined targets, such as standard quadrant patterns.

A simple radial-polynomial correction of the optical system can be used to correct for wide-angle lens distortion. However, I elected to implement a more robust bilinear interpolation on a grid of points, thus allowing correction of off-axis imaging systems or external windows, such as curved windscreens. Robust multi-target detect-to-track assignment (basically a modified global-nearest-neighbor algorithm) is used to develop time histories of targets [1–4].

Passive imaging sensors are 2D, angle-only sensors. To generate 3D target tracks, one must either bring in range data from radar and perform multi-sensor triangulation, or use model-based pose estimation. While developing the former I was surprised to find that, although triangulation itself is well known, little work had been published on error analysis to determine the accuracy of triangulation results. As a result, I developed the theory for triangulation error analysis [5] and some methodology for practical application of the theory [6].

Though model-based pose estimation is a well-known technique [7], classical approaches suffer from the need for an initial pose guess. Supplying such a guess is impractical in many situations, and the classical approaches become unstable in important scenarios involving approximately planar objects. Recent work [8, 9] has greatly improved performance in this area.
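As a rough illustration of the two-sensor triangulation discussed above, the sketch below uses the textbook closest-approach construction: each angle-only sensor defines a line of sight, and the target is placed at the midpoint of the shortest segment joining the two lines. This is not necessarily the method used in Visual Fusion. The function names and the azimuth/elevation convention (x east, y north, z up, azimuth measured clockwise from north) are assumptions made for the example.

```python
import math


def los_from_az_el(azimuth_deg, elevation_deg):
    """Unit line-of-sight vector from azimuth and elevation in degrees.

    ASSUMED convention: x east, y north, z up, azimuth clockwise from north.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        math.cos(el) * math.sin(az),
        math.cos(el) * math.cos(az),
        math.sin(el),
    )


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate(p1, d1, p2, d2, eps=1e-12):
    """Closest-approach triangulation of two lines of sight.

    p1, p2 are sensor positions; d1, d2 are line-of-sight directions
    (not necessarily unit length). Returns (midpoint, miss_distance),
    where miss_distance is the gap between the two lines at closest
    approach.
    """
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b  # zero when the two lines are parallel
    if denom <= eps * a * c:
        raise ValueError("lines of sight are nearly parallel")
    s = (b * e - c * d) / denom  # distance parameter along line 1
    t = (a * e - b * d) / denom  # distance parameter along line 2
    q1 = tuple(p + s * k for p, k in zip(p1, d1))
    q2 = tuple(p + t * k for p, k in zip(p2, d2))
    midpoint = tuple(0.5 * (u + v) for u, v in zip(q1, q2))
    miss = math.sqrt(sum((u - v) ** 2 for u, v in zip(q1, q2)))
    return midpoint, miss


if __name__ == "__main__":
    # Two hypothetical cameras 10 m apart, both looking at the same target.
    position, miss = triangulate(
        (0.0, 0.0, 0.0), (5.0, 5.0, 2.0),
        (10.0, 0.0, 0.0), (-5.0, 5.0, 2.0),
    )
    print(position, miss)
```

The miss distance is only a crude consistency check on the two measurements. It does not replace the error-propagation analysis of references 5 and 6, which relates sensor position and pointing uncertainties to the uncertainty of the 3D estimate.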
Image-based motion analysis has recently moved from manual, frame-by-frame, point-and-click analysis to much more automated and sophisticated computer analysis. This brings together a rich set of image-processing and numerical analysis techniques. While commercial software is available, an understanding of the underlying techniques and principles can be valuable in accurately obtaining the most information from these tools.

Author Information

Jack Sanders-Reed
Boeing-SVS
Albuquerque, NM
http://www.swcp.com/~spsvs

Jack Sanders-Reed is a Technical Fellow with the Boeing Company, specializing in image-based motion analysis, image exploitation, and electro-optic systems. Developer of the Visual Fusion motion analysis software package, he has more than 20 peer-reviewed and conference publications to his name, has published numerous papers in Optical Engineering and the Proceedings of the SPIE, and teaches the image-based motion analysis course at the SPIE Defense & Security Symposium.

References

1. S. S. Blackman, Multiple-Target Tracking with Radar Applications, Artech House, 1986. ISBN 0-89006-179-3
2. Y. Bar-Shalom and X.-R. Li, Multitarget-Multisensor Tracking: Principles and Techniques, 1995. ISBN 0-9648312-0-1
3. J. N. Sanders-Reed et al., Multi-target tracking in clutter, Proc. SPIE 4724, April 2002.
4. J. N. Sanders-Reed, Multi-target, multi-sensor, closed loop tracking, Proc. SPIE 5430, April 2004.
5. J. N. Sanders-Reed, Error propagation in two-sensor 3D position estimation, Opt. Eng. 40 (4), April 2001.
6. J. N. Sanders-Reed, Impact of tracking system knowledge on multi-sensor 3D triangulation, Proc. SPIE 4714, April 2002.
7. E. Trucco and A. Verri, Introductory Techniques for 3D Computer Vision, pp. 26–32, 279–292, Prentice-Hall, 1998. ISBN 0-13-261108-2
8. D. F. DeMenthon and L. S. Davis, Model-based object pose in 25 lines of code, International Journal of Computer Vision 15, pp. 123–141, June 1995. http://www.cfar.umd.edu/~daniel/
9. D. Oberkampf, D. F. DeMenthon, and L. S. Davis, Iterative pose estimation using coplanar feature points, CVGIP: Image Understanding 63 (3), May 1996. http://www.cfar.umd.edu/~daniel/

© 2006 SPIE, The International Society for Optical Engineering