Lecture10

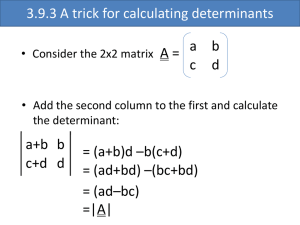

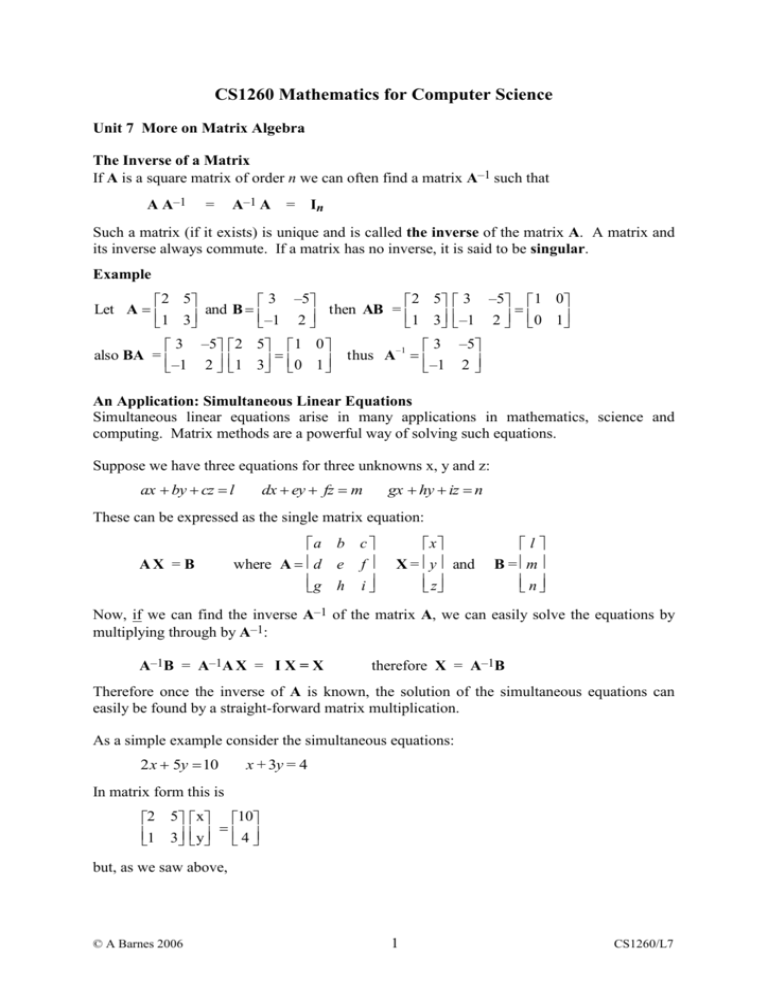

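CS1260 Mathematics for Computer Science
Unit 7: More on Matrix Algebra
© A Barnes 2006 CS1260/L7

The Inverse of a Matrix

If $A$ is a square matrix of order $n$, we can often find a matrix $A^{-1}$ such that

$$A A^{-1} = A^{-1} A = I_n.$$

Such a matrix (if it exists) is unique and is called the inverse of the matrix $A$. A matrix and its inverse always commute. If a matrix has no inverse, it is said to be singular.

Example

Let

$$A = \begin{pmatrix} 2 & 5 \\ 1 & 3 \end{pmatrix} \quad\text{and}\quad B = \begin{pmatrix} 3 & -5 \\ -1 & 2 \end{pmatrix};$$

then

$$AB = \begin{pmatrix} 2 & 5 \\ 1 & 3 \end{pmatrix}\begin{pmatrix} 3 & -5 \\ -1 & 2 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$$

and also

$$BA = \begin{pmatrix} 3 & -5 \\ -1 & 2 \end{pmatrix}\begin{pmatrix} 2 & 5 \\ 1 & 3 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix},$$

thus

$$A^{-1} = \begin{pmatrix} 3 & -5 \\ -1 & 2 \end{pmatrix}.$$

An Application: Simultaneous Linear Equations

Simultaneous linear equations arise in many applications in mathematics, science and computing, and matrix methods are a powerful way of solving them. Suppose we have three equations in three unknowns $x$, $y$ and $z$:

$$\begin{aligned} ax + by + cz &= l \\ dx + ey + fz &= m \\ gx + hy + iz &= n \end{aligned}$$

These can be expressed as the single matrix equation $AX = B$, where

$$A = \begin{pmatrix} a & b & c \\ d & e & f \\ g & h & i \end{pmatrix}, \quad X = \begin{pmatrix} x \\ y \\ z \end{pmatrix} \quad\text{and}\quad B = \begin{pmatrix} l \\ m \\ n \end{pmatrix}.$$

Now, if we can find the inverse $A^{-1}$ of the matrix $A$, we can easily solve the equations by multiplying both sides on the left by $A^{-1}$:

$$A^{-1}B = A^{-1}(AX) = (A^{-1}A)X = IX = X, \quad\text{therefore}\quad X = A^{-1}B.$$

Therefore, once the inverse of $A$ is known, the solution of the simultaneous equations can be found by a straightforward matrix multiplication.

As a simple example, consider the simultaneous equations

$$\begin{aligned} 2x + 5y &= 10 \\ x + 3y &= 4 \end{aligned}$$

In matrix form this is

$$\begin{pmatrix} 2 & 5 \\ 1 & 3 \end{pmatrix}\begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 10 \\ 4 \end{pmatrix},$$

but, as we saw above,

$$\begin{pmatrix} 2 & 5 \\ 1 & 3 \end{pmatrix}^{-1} = \begin{pmatrix} 3 & -5 \\ -1 & 2 \end{pmatrix},$$

therefore the solution is

$$\begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 3 & -5 \\ -1 & 2 \end{pmatrix}\begin{pmatrix} 10 \\ 4 \end{pmatrix} = \begin{pmatrix} 10 \\ -2 \end{pmatrix},$$

that is, $x = 10$ and $y = -2$.

Sometimes the matrix $A$ in the simultaneous equations $AX = B$ will be singular and so will not have an inverse. In this case the simultaneous equations will have either infinitely many solutions or none at all (depending on the value of the column vector $B$).

So far the inverse matrix $A^{-1}$ has simply been 'pulled out of a hat'. Below we consider how to determine whether or not a matrix is singular, and show how the inverse of any non-singular square matrix may be calculated.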
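As a quick numerical check of the example above, here is a minimal Python sketch (the notes themselves contain no code, so the language is our choice). Matrices are assumed to be stored as lists of rows, and the helper name `mat_mul` is hypothetical. The sketch confirms that the quoted inverse satisfies $AA^{-1} = A^{-1}A = I$ and then forms $X = A^{-1}B$.

```python
def mat_mul(P, Q):
    """Product of two matrices stored as lists of rows (hypothetical helper)."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))]
            for i in range(len(P))]

A = [[2, 5],
     [1, 3]]
A_inv = [[3, -5],
         [-1, 2]]          # the inverse quoted in the notes
I2 = [[1, 0],
      [0, 1]]

# A and its inverse commute: both products give the identity.
assert mat_mul(A, A_inv) == I2 and mat_mul(A_inv, A) == I2

B = [[10],
     [4]]                  # right-hand sides of 2x + 5y = 10 and x + 3y = 4
X = mat_mul(A_inv, B)      # X = A^{-1} B
print(X)                   # [[10], [-2]], i.e. x = 10, y = -2
```

Multiplying back, `mat_mul(A, X)` recovers the right-hand side `B`, which is a cheap way to check any computed solution.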
Determinants

Associated with each square¹ matrix $A$ there is a scalar (that is, a single number) called the determinant of $A$. Symbolically, the determinant of $A$ is denoted by $\det(A)$ or by $|A|$. When the elements of a matrix are written out explicitly and enclosed by vertical lines (rather than by brackets), this denotes the determinant of the matrix rather than the matrix itself.

¹ The theory applies only to square matrices; the concept of a determinant is meaningless for non-square matrices.

For the $1 \times 1$ case: $|a| = a$.

For the $2 \times 2$ case:

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

The determinant of a $3 \times 3$ matrix is defined recursively as the sum of 3 products of the elements in the first row of the matrix with certain $2 \times 2$ determinants (called minors):

$$\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = a\begin{vmatrix} e & f \\ h & i \end{vmatrix} - b\begin{vmatrix} d & f \\ g & i \end{vmatrix} + c\begin{vmatrix} d & e \\ g & h \end{vmatrix} = a(ei - fh) - b(di - fg) + c(dh - eg).$$

Note that the 3 minor determinants appearing on the right-hand side of this equation are formed by deleting the first row and one column of the original determinant: the minor of $a$ is formed by deleting the first column, the minor of $b$ by deleting the second column, and that of $c$ by deleting the third column. Note also that the signs alternate (starting with a plus).

The determinant of a $4 \times 4$ matrix is defined recursively as the sum of 4 products of the elements in the first row of the matrix with the corresponding $3 \times 3$ minors:

$$\begin{vmatrix} a & b & c & d \\ e & f & g & h \\ i & j & k & l \\ m & n & p & q \end{vmatrix} = a\begin{vmatrix} f & g & h \\ j & k & l \\ n & p & q \end{vmatrix} - b\begin{vmatrix} e & g & h \\ i & k & l \\ m & p & q \end{vmatrix} + c\begin{vmatrix} e & f & h \\ i & j & l \\ m & n & q \end{vmatrix} - d\begin{vmatrix} e & f & g \\ i & j & k \\ m & n & p \end{vmatrix}$$

The full expression can then be obtained by expanding out the 4 minors. Note that again the signs alternate, and that the minor of the element in row 1 and column $i$ is formed by deleting the first row and $i$th column of the original determinant. The extension to determinants of larger order should now be obvious: a $5 \times 5$ determinant is the sum of the five products (with alternating signs) of the elements in the first row with the corresponding $4 \times 4$ minors.
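The recursive definition translates almost line for line into code. The sketch below is a minimal Python version, assuming a matrix is a list of rows and using 0-based indices (so the alternating sign for the $j$th element of the first row becomes `1` for even `j` and `-1` for odd `j`); the names `minor` and `det` are our own, not from the notes. It expands along the first row only, exactly as defined above.

```python
def minor(M, i, j):
    """Sub-matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """Determinant by recursive expansion along the first row."""
    n = len(M)
    if n == 1:
        return M[0][0]                      # 1 x 1 case: |a| = a
    total = 0
    for j in range(n):
        sign = 1 if j % 2 == 0 else -1      # signs alternate, starting with +
        total += sign * M[0][j] * det(minor(M, 0, j))
    return total

print(det([[2, 5], [1, 3]]))                    # 1  (ad - bc = 6 - 5)
print(det([[2, 3, 4], [1, 4, 5], [-2, 1, 0]]))  # -4
```

Because each $n \times n$ determinant calls $n$ determinants of order $n-1$, the work in this direct expansion grows roughly like $n!$. That is fine for hand-sized examples such as those in these notes, but not for large matrices.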
Note also that the definition of a $2 \times 2$ determinant can be regarded as a sum of products of elements with their minors: the minor of $a$ is the $1 \times 1$ determinant $d$ (formed by deleting row 1 and column 1 from the original $2 \times 2$ determinant), and the minor of $b$ is the $1 \times 1$ determinant $c$. Again the signs of the products alternate.

Example

$$\begin{vmatrix} 2 & 3 & 4 \\ 1 & 4 & 5 \\ -2 & 1 & 0 \end{vmatrix} = 2\begin{vmatrix} 4 & 5 \\ 1 & 0 \end{vmatrix} - 3\begin{vmatrix} 1 & 5 \\ -2 & 0 \end{vmatrix} + 4\begin{vmatrix} 1 & 4 \\ -2 & 1 \end{vmatrix} = 2 \times (-5) - 3 \times 10 + 4 \times (1 + 8) = -4$$

A Property of Determinants

So far we have expanded determinants by the first row. However, a determinant can be expanded by any row or column, and the same result is obtained. Expanding the determinant in the previous example by the first column (rather than the first row), we obtain

$$\begin{vmatrix} 2 & 3 & 4 \\ 1 & 4 & 5 \\ -2 & 1 & 0 \end{vmatrix} = 2\begin{vmatrix} 4 & 5 \\ 1 & 0 \end{vmatrix} - 1\begin{vmatrix} 3 & 4 \\ 1 & 0 \end{vmatrix} + (-2)\begin{vmatrix} 3 & 4 \\ 4 & 5 \end{vmatrix} = 2 \times (-5) - 1 \times (-4) + (-2) \times (15 - 16) = -4$$

In this case, the 3 minors are formed by deleting the first column and the corresponding row of the original determinant. Again the signs of the products alternate. Similarly, expanding by the second column we have

$$\begin{vmatrix} 2 & 3 & 4 \\ 1 & 4 & 5 \\ -2 & 1 & 0 \end{vmatrix} = -3\begin{vmatrix} 1 & 5 \\ -2 & 0 \end{vmatrix} + 4\begin{vmatrix} 2 & 4 \\ -2 & 0 \end{vmatrix} - 1\begin{vmatrix} 2 & 4 \\ 1 & 5 \end{vmatrix} = -3 \times 10 + 4 \times 8 - (10 - 4) = -4$$

The same result ($-4$) is obtained if any other row or column is used to expand the determinant.

This result is often useful in simplifying the computation of a determinant by hand: if one of the rows or columns of a determinant contains several zeros, then the determinant should be expanded by that row or column, since this reduces the number of minors that need to be calculated. Moreover, if a complete row or column of a determinant is zero, we can deduce immediately (by expanding by that row or column) that the determinant is zero.

For example, if we expand the determinant above by row 3 (or column 3), we have only two minors to calculate, since the element in row 3 and column 3 is zero and so the value of its minor is irrelevant:

$$\begin{vmatrix} 2 & 3 & 4 \\ 1 & 4 & 5 \\ -2 & 1 & 0 \end{vmatrix} = (-2)\begin{vmatrix} 3 & 4 \\ 4 & 5 \end{vmatrix} - 1\begin{vmatrix} 2 & 4 \\ 1 & 5 \end{vmatrix} + 0 = (-2) \times (-1) - 1 \times (10 - 4) = -4$$

The signs in the expansion of a determinant alternate both horizontally and vertically, starting with a plus in the top left-hand corner.
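The claim that every row and column gives the same value is easy to test numerically. In this Python sketch (lists of rows, 0-based indices; `det_by_row` and `det_by_col` are illustrative names, not from the notes), each entry is weighted by the sign $(-1)^{i+j}$, which is unchanged by shifting both indices from 1-based to 0-based, and zero entries are skipped, mirroring the advice above to expand along a row or column that contains zeros.

```python
def minor(M, i, j):
    """Sub-matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """First-row expansion, used here to evaluate the minors."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j)) for j in range(len(M)))

def det_by_row(M, i):
    """Expand along row i with signs (-1)^(i+j); zero entries contribute nothing."""
    return sum((-1) ** (i + j) * M[i][j] * det(minor(M, i, j))
               for j in range(len(M)) if M[i][j] != 0)

def det_by_col(M, j):
    """Expand along column j with signs (-1)^(i+j); zero entries contribute nothing."""
    return sum((-1) ** (i + j) * M[i][j] * det(minor(M, i, j))
               for i in range(len(M)) if M[i][j] != 0)

A = [[2, 3, 4], [1, 4, 5], [-2, 1, 0]]
print([det_by_row(A, i) for i in range(3)])  # [-4, -4, -4]
print([det_by_col(A, j) for j in range(3)])  # [-4, -4, -4]
```

A row of zeros makes the sum in `det_by_row` empty, so it returns 0, which matches the observation that a determinant with a zero row or column vanishes.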
The sign pattern can also be stated as a formula: the sign for the minor in row $i$, column $j$ is $(-1)^{i+j}$ (thus if the sum of the row and column numbers is even the sign is $+1$, whereas if it is odd the sign is $-1$). More pictorially, we have

$$\begin{pmatrix} + & - & + & - & \cdots \\ - & + & - & + & \cdots \\ + & - & + & - & \cdots \\ - & + & - & + & \cdots \\ \vdots & \vdots & \vdots & \vdots & \ddots \end{pmatrix}$$

Minors and Cofactors

Suppose $A$ is a square matrix of order $n$. Then, as we have seen, the minor of the element in row $i$, column $j$ of $A$ is the determinant of order $n-1$ formed by deleting row $i$ and column $j$ from $A$. We use the notation $M_{ij}$ to denote this minor. Closely related to the concept of the minor is that of the cofactor: the cofactor of the element in row $i$, column $j$ of $A$ is $(-1)^{i+j}M_{ij}$. Thus the cofactor is, in effect, the minor with the alternating sign factor built in. We denote the cofactor of $a_{ij}$ by $C_{ij}$, thus

$$C_{ij} = (-1)^{i+j} M_{ij}.$$

We can now write out the definition of the $n \times n$ determinant of $A$ symbolically as

expansion by row $i$:

$$\det(A) = a_{i1}C_{i1} + a_{i2}C_{i2} + a_{i3}C_{i3} + \cdots + a_{in}C_{in} \tag{1}$$

expansion by column $j$:

$$\det(A) = a_{1j}C_{1j} + a_{2j}C_{2j} + a_{3j}C_{3j} + \cdots + a_{nj}C_{nj} \tag{2}$$

There is another interesting property of determinants: suppose we expand a determinant by row $i$ but use the cofactors of row $j$ ($j \neq i$); then the result is always zero. Symbolically, expanding by row $i$ using the cofactors of row $j$, where $i \neq j$, we have

$$a_{i1}C_{j1} + a_{i2}C_{j2} + a_{i3}C_{j3} + \cdots + a_{in}C_{jn} = 0 \tag{3}$$

A similar result holds for columns: expanding by column $i$ using the cofactors of column $j$, where $i \neq j$, we have

$$a_{1i}C_{1j} + a_{2i}C_{2j} + a_{3i}C_{3j} + \cdots + a_{ni}C_{nj} = 0 \tag{4}$$

Example

For the determinant $\begin{vmatrix} 2 & 3 & 4 \\ 1 & 4 & 5 \\ -2 & 1 & 0 \end{vmatrix}$, expanding by column 2 but using the cofactors of column 1, we have

$$3\begin{vmatrix} 4 & 5 \\ 1 & 0 \end{vmatrix} - 4\begin{vmatrix} 3 & 4 \\ 1 & 0 \end{vmatrix} + 1\begin{vmatrix} 3 & 4 \\ 4 & 5 \end{vmatrix} = 3 \times (-5) - 4 \times (-4) + 1 \times (15 - 16) = 0$$

Matrix Inverses

Although determinants have many interesting properties in their own right, the main reason for introducing them in this unit is that they provide a way of calculating matrix inverses. The adjoint matrix of a square matrix² $A$ is defined to be the transpose of the matrix of cofactors.
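Equations (1)–(4) can be checked numerically for the running example. The Python sketch below is illustrative only: the names `cofactor`, `row_with_cofactors` and `col_with_cofactors` are hypothetical, matrices are lists of rows, and indices are 0-based. Pairing a row (or column) with its own cofactors gives $\det(A)$, while pairing it with the cofactors of a different row (or column) gives 0.

```python
def minor(M, i, j):
    """Sub-matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """Determinant by expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j)) for j in range(len(M)))

def cofactor(M, i, j):
    """C_ij = (-1)^(i+j) M_ij."""
    return (-1) ** (i + j) * det(minor(M, i, j))

def row_with_cofactors(M, i, r):
    """a_i1 C_r1 + ... + a_in C_rn: det(M) if r == i (eq. 1), else 0 (eq. 3)."""
    return sum(M[i][k] * cofactor(M, r, k) for k in range(len(M)))

def col_with_cofactors(M, i, c):
    """a_1i C_1c + ... + a_ni C_nc: det(M) if c == i (eq. 2), else 0 (eq. 4)."""
    return sum(M[k][i] * cofactor(M, k, c) for k in range(len(M)))

A = [[2, 3, 4], [1, 4, 5], [-2, 1, 0]]
print(col_with_cofactors(A, 1, 0))   # 0: column 2 with the cofactors of column 1
print([[row_with_cofactors(A, i, r) for r in range(3)] for i in range(3)])
# [[-4, 0, 0], [0, -4, 0], [0, 0, -4]]
```

The printed grid has $\det(A)$ on the diagonal and zeros elsewhere; entry $(i, r)$ is $\sum_k a_{ik} C_{rk}$, which is exactly the pattern that equation (5) in the next part relies on.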
Since the adjoint is the transpose of the matrix of cofactors, the element in row $i$, column $j$ of the adjoint matrix is $C_{ji}$ (that is, the cofactor of the element in the $j$th row and $i$th column of $A$). The adjoint of $A$ is denoted by $\operatorname{adj}(A)$.

² The theory applies only to square matrices; the concepts of an adjoint or inverse matrix are meaningless for non-square matrices.

The adjoint matrix has the following important property:

$$A\,\operatorname{adj}(A) = \operatorname{adj}(A)\,A = \det(A)\,I \tag{5}$$

This result follows from equations (1)–(4) above. For example, the right-hand side of (1) is the element in row $i$, column $i$ of $A\,\operatorname{adj}(A)$, whilst the left-hand side of equation (3) is the element in row $i$, column $j$ of $A\,\operatorname{adj}(A)$. Thus the elements on the main diagonal of $A\,\operatorname{adj}(A)$ are all equal to $\det(A)$ and the off-diagonal elements are all zero. Equations (2) and (4) give, respectively, the diagonal and off-diagonal elements of $\operatorname{adj}(A)\,A$. In particular, a matrix and its adjoint commute.

From equation (5) we can deduce immediately that, when $\det(A) \neq 0$,

$$A^{-1} = \det(A)^{-1}\operatorname{adj}(A) \tag{6}$$

that is, the inverse matrix is the adjoint matrix divided by the determinant. If $\det(A) = 0$, then the matrix is singular and has no inverse. Thus evaluating a determinant provides a straightforward way of determining whether or not the corresponding matrix is singular. If the determinant is non-zero, the matrix has an inverse, which can be found by applying equation (6).

Example

If

$$A = \begin{pmatrix} 2 & 3 & 4 \\ 1 & 4 & 5 \\ -2 & 1 & 0 \end{pmatrix},$$

find $A^{-1}$.

As we have seen above, $\det(A) = -4$. The matrix of cofactors is

$$\begin{pmatrix} -5 & -10 & 9 \\ 4 & 8 & -8 \\ -1 & -6 & 5 \end{pmatrix}$$

and (taking the transpose) the adjoint matrix is

$$\operatorname{adj}(A) = \begin{pmatrix} -5 & 4 & -1 \\ -10 & 8 & -6 \\ 9 & -8 & 5 \end{pmatrix},$$

thus (dividing through by the determinant) the inverse is

$$A^{-1} = \begin{pmatrix} \tfrac{5}{4} & -1 & \tfrac{1}{4} \\ \tfrac{5}{2} & -2 & \tfrac{3}{2} \\ -\tfrac{9}{4} & 2 & -\tfrac{5}{4} \end{pmatrix},$$

and it can easily be checked that $A A^{-1} = A^{-1} A = I$.

Matrices over $\mathbb{Z}_n$

So far our examples have assumed that the elements of the matrix belonged to $\mathbb{R}$ (the real numbers) or $\mathbb{Z}$ (the integers).
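For real (here rational) matrices, equation (6) gives a complete, if slow, recipe for inverting a small matrix. The Python sketch below assumes lists of rows and 0-based indices, and uses the standard-library `fractions.Fraction` type so that division by $\det(A)$ stays exact; the function names are hypothetical. It reproduces the worked example above.

```python
from fractions import Fraction

def minor(M, i, j):
    """Sub-matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """Determinant by expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j)) for j in range(len(M)))

def adjoint(M):
    """Transpose of the cofactor matrix: entry (i, j) is C_ji."""
    n = len(M)
    return [[(-1) ** (i + j) * det(minor(M, j, i)) for j in range(n)]
            for i in range(n)]

def inverse(M):
    """A^{-1} = adj(A) / det(A), equation (6); raises if det(A) == 0."""
    d = det(M)
    if d == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(x, d) for x in row] for row in adjoint(M)]

A = [[2, 3, 4], [1, 4, 5], [-2, 1, 0]]
print(adjoint(A))   # [[-5, 4, -1], [-10, 8, -6], [9, -8, 5]]
for row in inverse(A):
    print([str(x) for x in row])
# ['5/4', '-1', '1/4']
# ['5/2', '-2', '3/2']
# ['-9/4', '2', '-5/4']
```

Using `Fraction` rather than floating point keeps entries such as $\tfrac{5}{4}$ exact, so the check $AA^{-1} = I$ holds with exact equality rather than up to rounding error.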
However, the theory goes through almost unchanged for matrices over $\mathbb{Z}_n$ (the integers mod $n$); of course, in this case all the arithmetic is performed modulo $n$. For example, if we are working in $\mathbb{Z}_6$, then

$$\begin{vmatrix} 2 & 1 & 4 \\ 5 & 2 & 3 \\ 1 & 1 & 0 \end{vmatrix} = 2\begin{vmatrix} 2 & 3 \\ 1 & 0 \end{vmatrix} - 1\begin{vmatrix} 5 & 3 \\ 1 & 0 \end{vmatrix} + 4\begin{vmatrix} 5 & 2 \\ 1 & 1 \end{vmatrix} = \bigl(2 \times (-3) - 1 \times (-3) + 4 \times 3\bigr) \bmod 6 = 9 \bmod 6 = 3.$$

The only place where the theory differs significantly from that of matrices over the real numbers or integers is when we come to consider inverses. We can still define the adjoint matrix (as the transpose of the matrix of cofactors), and equation (5) holds (provided, of course, that all the arithmetic is done in $\mathbb{Z}_n$). However, there is a slight complication involving division by the determinant: this is only possible if the determinant is invertible in $\mathbb{Z}_n$.

If $n$ is a prime ($p$, say), then every non-zero value in $\mathbb{Z}_p$ is invertible, and a matrix over $\mathbb{Z}_p$ has an inverse if and only if its determinant is non-zero; the inverse is given by equation (6). However, if the modulus $n$ is composite (i.e. has non-trivial factors), the determinant may be non-zero but still not invertible. If this is the case, then the matrix is singular in $\mathbb{Z}_n$ and has no inverse. If the determinant is invertible in $\mathbb{Z}_n$, then the matrix has an inverse given by equation (6). It turns out that a number $m$ in $\mathbb{Z}_n$ is invertible if and only if $\gcd(m, n) = 1$, that is, $m$ and $n$ are coprime (they have no common factor other than 1).

Examples

1. Over $\mathbb{Z}_6$ the matrix

$$A = \begin{pmatrix} 2 & 1 & 4 \\ 5 & 2 & 3 \\ 1 & 1 & 0 \end{pmatrix}$$

has determinant 3. Since $\gcd(3, 6) = 3 \neq 1$, this is not invertible in $\mathbb{Z}_6$. Thus the matrix $A$ is singular in $\mathbb{Z}_6$ and has no inverse.

2. Find the inverse in $\mathbb{Z}_{31}$ of the matrix

$$A = \begin{pmatrix} 9 & 3 \\ 10 & 15 \end{pmatrix}.$$

We have $\det(A) = (9 \times 15 - 3 \times 10) \bmod 31 = 105 \bmod 31 = 12$. Using trial-and-error multiplication³ we find $12 \times 13 = 156 \equiv 1 \pmod{31}$, so that $12^{-1} \equiv 13 \pmod{31}$. Thus, using equation (6), we have

$$A^{-1} = 13\begin{pmatrix} 15 & -3 \\ -10 & 9 \end{pmatrix} = 13\begin{pmatrix} 15 & 28 \\ 21 & 9 \end{pmatrix} \bmod 31 = \begin{pmatrix} 9 & 23 \\ 25 & 24 \end{pmatrix}.$$

³ Alternatively, we can use the extended Euclidean algorithm to find the required inverse.
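The modular version differs from the real case only in the division step. The Python sketch below is illustrative (function names are hypothetical; matrices are lists of rows with 0-based indices): `inverse_mod` uses the extended Euclidean algorithm mentioned in the footnote and refuses to proceed when $\gcd(m, n) \neq 1$, and `matrix_inverse_mod` applies equation (6) with every result reduced modulo $n$.

```python
def minor(M, i, j):
    """Sub-matrix left after deleting row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det_mod(M, n):
    """Determinant reduced mod n, by expansion along the first row."""
    if len(M) == 1:
        return M[0][0] % n
    return sum((-1) ** j * M[0][j] * det_mod(minor(M, 0, j), n)
               for j in range(len(M))) % n

def egcd(a, b):
    """Extended Euclid: returns (g, x, y) with a*x + b*y == g == gcd(a, b)."""
    if b == 0:
        return a, 1, 0
    g, x, y = egcd(b, a % b)
    return g, y, x - (a // b) * y

def inverse_mod(m, n):
    """Inverse of m in Z_n; exists only when gcd(m, n) == 1."""
    g, x, _ = egcd(m % n, n)
    if g != 1:
        raise ValueError(f"{m} is not invertible in Z_{n}")
    return x % n

def matrix_inverse_mod(M, n):
    """Equation (6) over Z_n: det(M)^{-1} * adj(M), reduced mod n."""
    d_inv = inverse_mod(det_mod(M, n), n)   # raises if M is singular in Z_n
    size = len(M)
    adj = [[(-1) ** (i + j) * det_mod(minor(M, j, i), n) for j in range(size)]
           for i in range(size)]
    return [[(d_inv * x) % n for x in row] for row in adj]

print(det_mod([[2, 1, 4], [5, 2, 3], [1, 1, 0]], 6))   # 3, not coprime to 6
print(inverse_mod(12, 31))                             # 13
print(matrix_inverse_mod([[9, 3], [10, 15]], 31))      # [[9, 23], [25, 24]]
```

Calling `matrix_inverse_mod` on the $\mathbb{Z}_6$ matrix raises `ValueError`, matching the conclusion that it is singular there. In Python 3.8 and later the built-in `pow(m, -1, n)` computes the same modular inverse (also raising `ValueError` when none exists); the explicit `egcd` is kept here because it is the method the notes point to.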