Chap-2-Random-Processes-McSheaBrandon


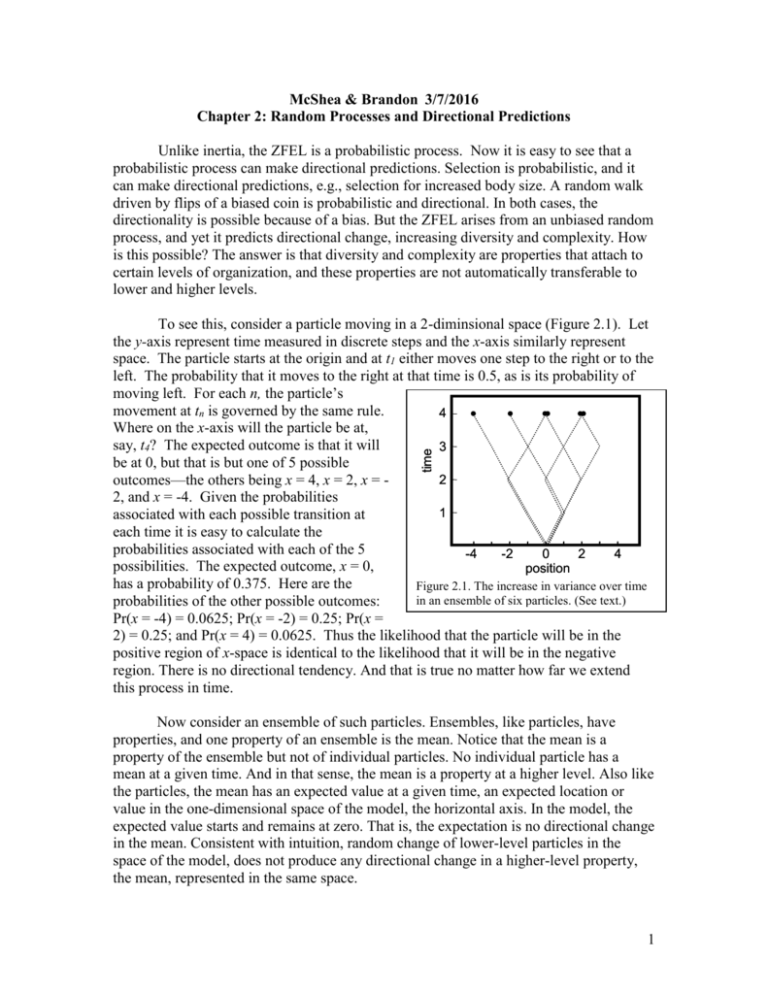

McShea & Brandon 3/7/2016

Chapter 2: Random Processes and Directional Predictions

Unlike inertia, the ZFEL is a probabilistic process. It is easy to see that a probabilistic process can make directional predictions. Selection is probabilistic, and it can make directional predictions, e.g., selection for increased body size. A random walk driven by flips of a biased coin is probabilistic and directional. In both cases, the directionality is possible because of a bias. But the ZFEL arises from an unbiased random process, and yet it predicts directional change: increasing diversity and complexity. How is this possible? The answer is that diversity and complexity are properties that attach to certain levels of organization, and these properties are not automatically transferable to lower and higher levels.

To see this, consider a particle moving in a 2-dimensional space (Figure 2.1). Let the y-axis represent time measured in discrete steps and the x-axis similarly represent space. The particle starts at the origin and at t1 moves one step either to the right or to the left. The probability that it moves to the right at that time is 0.5, as is its probability of moving left. For each n, the particle’s movement at tn is governed by the same rule.

Figure 2.1. The increase in variance over time in an ensemble of six particles. Horizontal axis: position (-4 to 4); vertical axis: time (steps 1 to 4). (See text.)

Where on the x-axis will the particle be at, say, t4? The expected outcome is that it will be at 0, but that is only one of 5 possible outcomes, the others being x = 4, x = 2, x = -2, and x = -4. Given the probabilities associated with each possible transition at each time, it is easy to calculate the probabilities associated with each of the 5 possibilities. The expected outcome, x = 0, has a probability of 0.375. Here are the probabilities of the other possible outcomes: Pr(x = -4) = 0.0625; Pr(x = -2) = 0.25; Pr(x = 2) = 0.25; and Pr(x = 4) = 0.0625.
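The probabilities at t4 can be checked by counting paths. The following is a minimal sketch, not part of the original text: the function name `position_distribution` and the parameter `p_right` are illustrative assumptions. It uses the fact that a particle taking r steps to the right out of n ends at position 2r - n, so each outcome has a binomial probability.

```python
from math import comb

def position_distribution(steps, p_right=0.5):
    """Exact probability of each final position after `steps` unit moves.

    Hypothetical helper for illustration: `rights` counts steps to the right,
    so the final position is rights - (steps - rights) = 2 * rights - steps.
    """
    dist = {}
    for rights in range(steps + 1):
        position = 2 * rights - steps
        dist[position] = (
            comb(steps, rights) * p_right**rights * (1 - p_right) ** (steps - rights)
        )
    return dist

dist = position_distribution(4)
for x in sorted(dist):
    print(f"Pr(x = {x:2d}) = {dist[x]}")

# Total probability of ending on each side of the origin.
p_negative = sum(p for x, p in dist.items() if x < 0)
p_positive = sum(p for x, p in dist.items() if x > 0)
print("negative side:", p_negative, "positive side:", p_positive)
```

With `p_right=0.5` the two sides come out equal. Setting `p_right` to any other value gives the biased-coin walk mentioned at the start of the chapter, where the two sides differ.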
Thus the likelihood that the particle will be in the positive region of x-space is identical to the likelihood that it will be in the negative region. There is no directional tendency. And that is true no matter how far we extend this process in time.

Now consider an ensemble of such particles. Ensembles, like particles, have properties, and one property of an ensemble is the mean. Notice that the mean is a property of the ensemble but not of individual particles. No individual particle has a mean at a given time. And in that sense, the mean is a property at a higher level. Also like the particles, the mean has an expected value at a given time, an expected location in the one-dimensional space of the model, the horizontal axis. In the model, the expected value starts and remains at zero. That is, the expectation is no directional change in the mean. Consistent with intuition, random change of lower-level particles in the space of the model does not produce any directional change in a higher-level property, the mean, represented in the same space.

Another ensemble property is the dispersion, or the variance in position of the particles. The model does make a directional prediction about variance, namely that it is expected to increase in each time step. Like the mean, the variance at a given time is a higher-level property, a property of the ensemble but not of individual particles. No individual particle has a variance at a given time. However, unlike the mean, a variance at a given time cannot be represented as a point in the space of the model. In other words, it cannot be represented by the descriptive tools adequate at the particle level. One way to say this is that the variance is a property of a higher order. And the point is that for higher-order properties, intuition is silent.
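The two predictions, no change in the expected mean and a steady increase in the expected variance, can be made explicit with a short derivation. This is a sketch under the model's stated rules, with independence of steps and of particles assumed; the symbols S_i, X_t, N, and V_t are notation introduced here, not taken from the original.

```latex
% Assumed notation (not in the original): S_i is the i-th step, X_t the position
% after t steps, N the ensemble size, \bar{X}_t the ensemble mean, and V_t the
% ensemble (sample) variance at time t.
\begin{align*}
X_t &= \sum_{i=1}^{t} S_i, \qquad \Pr(S_i = +1) = \Pr(S_i = -1) = \tfrac{1}{2}, \\
\mathbb{E}[S_i] &= 0, \qquad \operatorname{Var}(S_i) = \mathbb{E}[S_i^2] = 1, \\
\mathbb{E}[X_t] &= 0, \qquad \operatorname{Var}(X_t) = \sum_{i=1}^{t} \operatorname{Var}(S_i) = t, \\
\mathbb{E}[\bar{X}_t] &= 0, \qquad \mathbb{E}[V_t] = t,
\quad\text{where } V_t = \frac{1}{N-1} \sum_{j=1}^{N} \bigl(X_t^{(j)} - \bar{X}_t\bigr)^2 .
\end{align*}
```

On these assumptions the expected mean stays at zero for every t, while the expected variance grows by exactly one unit per time step.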
We have no reason to think that random movement in the space of the particles should translate in any direct way to behavior of higher-order properties in a very different space. In particular, there is no reason why purely random behavior of particles should not produce an increase in variance.

Variance Increases Indefinitely

Suppose we run the model illustrated in Figure 2.1 over a longer time span, measuring the variance at each time step using some appropriate metric, say the standard deviation. The top graph in Figure 2.2 shows the result. The standard deviation trends decidedly upward. Interestingly, despite this upward trend, the graph shows occasional downward wobbles. This is not unexpected, because the ZFEL prediction of increase is probabilistic. By chance, complexity can decrease, in that points could move closer together. In runs of the model starting with small sets of points, this happens moderately frequently, and it is not infrequent even with larger sets. Notice, however, that this would not be true of a more realistic model with higher dimensionality. Even if the points by chance become less dispersed in one or a few dimensions, they are likely to become more dispersed in most. In a multidimensional space, the increase in overall variance will be quite reliable.

The bottom graph in Figure 2.2 shows the average trajectory of the standard deviation over 100 runs of the model (with error bars showing one standard deviation on either side of that trajectory, in effect one standard deviation of the standard deviation). And it reveals something that some may find surprising. The initial rise in complexity is not surprising. At the start, when all points are collected at the same place, dispersion can only increase. There is obviously nowhere to go but up. What may be surprising is that dispersion continues to rise even later, when the points have become quite dispersed.
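The experiment described above can be approximated with a small simulation. This is a minimal sketch, not the authors' code: the ensemble size of six is borrowed from the Figure 2.1 caption, the run length of 200 steps and the seed are arbitrary assumptions, and all function names are hypothetical.

```python
import random
import statistics

def sd_trajectory(n_particles, n_steps, rng):
    """Standard deviation of particle positions after each step of one run."""
    positions = [0] * n_particles
    trajectory = []
    for _ in range(n_steps):
        # Each particle moves one step left or right with equal probability.
        positions = [x + rng.choice((-1, 1)) for x in positions]
        trajectory.append(statistics.stdev(positions))
    return trajectory

def count_decreases(trajectory):
    """Number of steps at which the standard deviation fell (the 'wobbles')."""
    return sum(1 for a, b in zip(trajectory, trajectory[1:]) if b < a)

def mean_sd_trajectory(n_runs, n_particles, n_steps, seed=0):
    """Average the per-step standard deviation over independent runs."""
    rng = random.Random(seed)  # assumed seed, for reproducibility only
    runs = [sd_trajectory(n_particles, n_steps, rng) for _ in range(n_runs)]
    return [statistics.fmean(values) for values in zip(*runs)]

single = sd_trajectory(n_particles=6, n_steps=200, rng=random.Random(0))
print("downward wobbles in one run:", count_decreases(single), "of 199 steps")

avg = mean_sd_trajectory(n_runs=100, n_particles=6, n_steps=200)
for t in (1, 4, 16, 64, 200):
    print(f"t = {t:3d}  mean SD over 100 runs = {avg[t - 1]:.2f}")
```

A single run should show the upward trend interrupted by decreases, while the 100-run average should keep rising at every checkpoint printed, including the later ones where the points are already well dispersed.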
Analytically it can be shown that this is a square-root curve and that it does not asymptote. Variance rises indefinitely.

Figure 2.2.

The ZFEL claim is that variance increases indefinitely in the absence of limits or external forces, not just for a standard deviation metric, but for most intuitively reasonable measures. We could use the statistical variance, for example, or we could use something like the average absolute difference between pairs of points. Other measures will produce trajectories of different shapes, of course. The statistical variance will produce a straight line, rather than a square-root curve, for example. But almost any measure of average dispersion will increase monotonically when averaged over a number of independent runs, and will do so indefinitely.

Now, measures can be devised that appear to contradict this claim. For example, one could decide to carve the horizontal axis into discrete bins, and measure the variance as the number of different bins occupied. In that case, the variance is expected to rise until every point occupies its own bin and then to increase no further. But what has happened here is that the decision to recognize only the number of bins occupied, irrespective of how far apart they are, places a mathematical upper limit on the amount of variation that the metric is able to record. It is limited by the number of points. And the appearance that the variance has asymptoted is the result of that limit. It is a constraint that is built into the metric rather than a limit in the underlying process. This is a diffusion process, and unless some constraint or force is imposed, diffusion should drive variance upward indefinitely.1

1 (Footnote in preparation: on crossing over, on alternative measures, and on alternative models of introduced change. N.B. The slope decreases. In other words, the tendency to increase is more pronounced at lower complexity, when the parts are more similar, than at higher, when they are more different.
This feature is partly an artifact of the choice of standard deviation as the measure of complexity. (Using the statistical variance instead would produce a straight line.) But the decreasing slope is also intuitively reasonable. When parts are very similar, almost any introduced set of random changes will tend to make them more different and negligibly few sets will make them more similar. But when parts are well differentiated, a higher percentage of the introduced patterns of change will make parts more similar, and the margin by which increases outnumber decreases falls. Increases remain more probable, but the expected rate of complexity increase falls.)
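The contrast drawn in the main text between open-ended dispersion measures and the bin-count metric can be illustrated numerically. The sketch below is an illustration only: the ensemble size (six), bin width (five units), checkpoints, run count, and seed are all assumptions, and every function name is hypothetical.

```python
import random
import statistics
from itertools import combinations

def mean_abs_pair_difference(positions):
    """Average absolute difference between all pairs of points."""
    pairs = list(combinations(positions, 2))
    return sum(abs(a - b) for a, b in pairs) / len(pairs)

def bins_occupied(positions, bin_width):
    """Number of distinct bins holding at least one point (capped by the point count)."""
    return len({x // bin_width for x in positions})

def measure_all(positions, bin_width):
    return {
        "sd": statistics.stdev(positions),
        "variance": statistics.variance(positions),
        "pair_diff": mean_abs_pair_difference(positions),
        "bins": bins_occupied(positions, bin_width),
    }

def average_measures(n_runs, n_particles, checkpoints, bin_width=5, seed=1):
    """Average each measure over independent runs at the given time steps."""
    rng = random.Random(seed)  # assumed seed, for reproducibility only
    totals = {t: {} for t in checkpoints}
    for _ in range(n_runs):
        positions = [0] * n_particles
        for t in range(1, max(checkpoints) + 1):
            positions = [x + rng.choice((-1, 1)) for x in positions]
            if t in totals:
                for name, value in measure_all(positions, bin_width).items():
                    totals[t][name] = totals[t].get(name, 0.0) + value
    return {t: {k: v / n_runs for k, v in m.items()} for t, m in totals.items()}

results = average_measures(n_runs=100, n_particles=6, checkpoints=(25, 100, 400))
for t in sorted(results):
    row = "  ".join(f"{name} = {value:7.2f}" for name, value in results[t].items())
    print(f"t = {t:3d}  {row}")
```

In a run like this, the averaged variance should grow roughly in proportion to t and the standard deviation and pairwise difference more slowly, while the bin count can never exceed the number of particles, which is the built-in ceiling the text attributes to the metric rather than to the process.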