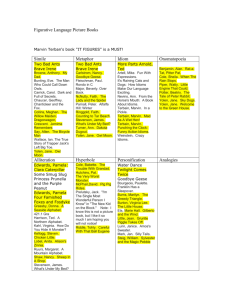

Word - ECpE Senior Design


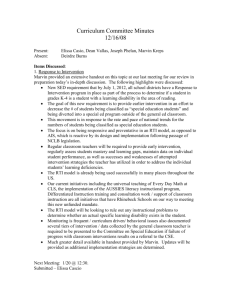

Table of contents

I. University of Berlin
1. Communication system
2. Control system
3. System requirement
II. Official rule of Entry Governing
III. Stanford University
1. Autonomous control of helicopter (PDF)
2. Carrier phase of GPS (PDF)

Communication system (University of Berlin)

a. Sensors

GPS Receivers
A pair of GPS receivers, type RT-2 by NovAtel, Canada, determines the robot's position at a rate of 5 Hz with an accuracy of 2 cm. One of the receivers is located onboard; the other constitutes the reference point on the ground. The whole system is lent to IARC participants at no cost by the manufacturer.

2½D Compass
A set of 3 magnetic field sensors (resonating fluxgates) onboard measures the direction of the earth's magnetic field relative to the helicopter. This allows the determination of 2 of the 3 parameters of the robot's orientation (rotation around an axis parallel to the magnetic field vector cannot be recognized).

Inertial Measurement Unit
3 semiconductor acceleration sensors measure the apparent acceleration onboard, which consists of the earth's gravity and the acceleration of the robot's movement. Because the two are superimposed, it is impossible to calculate both the robot's orientation and the robot's movement solely from the three measured values. This would involve 6 degrees of freedom.

Rotation
3 piezo-electric rotation sensors measure the rotation rates around all axes (yaw, pitch, roll).

Camera
The robot carries a digital photo camera with automatic exposure control and a fixed-focus wide-angle lens. This camera is used to search for, locate and classify the target objects of the competition task.

Flame Sensor
A flame sensor enables MARVIN to detect fires, which may burn within the competition arena and constitute a considerable threat to the robot if it approaches too closely.

b.
Communication link

Between robot and ground station, two communication links are established:

DECT Data Link
A pair of data communication modules based on the DECT standard, which stems from cordless telephones, performs all the information exchange between the robot and the ground station. The modules are manufactured by SIEMENS and act as a kind of "wireless null modem cable" at 115 kbit/s bidirectionally. Even the images of the digital photo camera are downlinked via DECT. Specialized communication software implements a distributed shared memory view of the state parameters of the overall system. Every piece of information is assigned a guaranteed minimal fraction of the available bandwidth, which ensures real-time capability for the MARVIN software.

Remote Control
A standard remote control unit for model helicopters is required as a backup manual control and for test and development purposes. One channel of the RC unit is used for switching between remote and onboard computer control. If the onboard computer loses power, control goes back to the human pilot in any case. After the human pilot has enabled computer control, he continues steering the robot until the onboard computer starts to produce servo control signals in response to a software command.

c. Onboard computer

A single-board computer based on the SIEMENS SAB80C167 microcontroller serves as MARVIN's onboard computer. This controller has enough connectivity to interface with all of the robot's hardware components. It can handle many external signals and events at very low CPU load, or even none at all. Its low power consumption and sufficient computational power make it ideal for the tasks onboard MARVIN. The computer board was designed and manufactured in the MARVIN project course in cooperation with the board manufacturing laboratory of the "Technische Fachhochschule Berlin" (TFH).
Based on the GPS reference data and the onboard sensors, the onboard computer is able to follow a given intended course autonomously. The helicopter servos are driven directly by the controller. A new "command" from the ground station is not required as long as the intended course remains unchanged.

MARVIN's GPS

MARVIN's Global Positioning System (GPS) is from the Canadian company NovAtel, which lent a high-performance Differential GPS (DGPS) to every participant of the competition. A GPS is normally used to get the absolute position (of the GPS antenna) on earth by receiving signals from up to 12 earth-orbiting satellites. These satellites can be understood as lighthouses. The GPS calculates its position by knowing which satellites it received, where they are and, especially, how far away they are. A normal stand-alone non-military GPS can determine its position with an error of 30 m. This is not enough for a flying robot to navigate in an area full of unknown obstacles, because the error is not constant but drifts around the real position.

Figure: The GPS base antenna set up in front of the competition scenery and the air antenna mounted on MARVIN's rear tube.

The DGPS from NovAtel can determine its position relative to a second, fixed GPS station with an error of 2 cm. If the global position of this fixed station is known with high accuracy (either by reading it off a map or by averaging the position over a period of some hours or a whole day), the global position of the mobile station is accurate too. To execute the differential calculations, a constant stream of reference data has to flow from the fixed base station to the mobile rover station. The older this data, the worse the position.

Figure: The GPS card and its case on MARVIN. The case has a window, because the status LEDs are SMD.

To fly the robot a good relative position would be sufficient, but to build a map of the obstacles, barrels, persons and so on, a good global position is necessary too.
In competition MARVIN detected a barrel with a position error of 8 cm! Even without keeping in mind that the labels are printed in US Letter size and that the maximum allowed position error was 2 m, MARVIN did very well.

The GPS supplies a three-dimensional position without any information about the helicopter's orientation: whether it is parallel to the ground or not, or facing north or south. Put a little more scientifically: only the three translational degrees of freedom are observed by the GPS. Therefore the three rotational degrees of freedom have to be observed by additional sensors. That is the job of our self-invented Inertial Measurement Unit (IMU).

MARVIN's IMU

The Inertial Measurement Unit (IMU) was invented by us students for MARVIN. It uses only cheap and lightweight sensors, in contrast to the high-end, heavy laser gyros and the like. Three identical boards are assembled at right angles to each other, each holding an ADXL05 accelerometer from Analog Devices and an ENC-05E piezoelectric gyroscope from Murata. Both types of sensors need a stable voltage of 5 V and have analog outputs. The output of each sensor is amplified to the full 5 V range and low-pass filtered to get rid of noise and the helicopter's vibrations. This also prepares the signal to be sampled.

Figure: The self-invented IMU

The accelerometers measure the earth's gravity and the acceleration caused by changes in MARVIN's speed. If there were no changes in speed, the accelerometers could be used directly for tilt measurement. The gyroscopes measure the angular velocity, which can be integrated to obtain the angle. The algorithm we used in the competition combined tilt measurements from the accelerometers, averaged by sliding-window algorithms over several seconds, with the latest fixed-time integrations of the gyroscope outputs. The output of the algorithm is a set of angles that are stable and drift-free, but still fast and up to date, with a fixed integration error.
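The combination of sliding-window accelerometer tilt and gyroscope integration described above can be illustrated with a minimal single-axis sketch. This is one possible reading of the description, not the team's actual code: the class name, the parameters `window_size` and `dt`, and the idea of averaging the difference between accelerometer tilt and integrated gyro angle are all assumptions made for illustration.

```python
import math
from collections import deque


class SlidingWindowTiltFilter:
    """Single-axis tilt estimate (hypothetical sketch, not MARVIN's code).

    The gyro rate is integrated for a fast angle; the slow drift of that
    integral is corrected by the sliding-window average of
    (accelerometer tilt - integrated gyro angle).
    """

    def __init__(self, window_size, dt):
        self.dt = dt                      # sample period in seconds (assumed fixed)
        self.gyro_angle = 0.0             # running integral of the gyro rate
        self.offsets = deque(maxlen=window_size)

    def update(self, accel_axis, accel_vertical, gyro_rate):
        # Fast path: integrate the angular velocity over one sample period.
        self.gyro_angle += gyro_rate * self.dt
        # Slow path: tilt from the direction of the measured gravity vector.
        accel_tilt = math.atan2(accel_axis, accel_vertical)
        # Store how far the gyro integral is off; averaging this over the
        # window suppresses vibration and short accelerations.
        self.offsets.append(accel_tilt - self.gyro_angle)
        correction = sum(self.offsets) / len(self.offsets)
        return self.gyro_angle + correction
```

With a constant gyro bias, the estimate from this sketch does not drift without bound; it settles at an offset proportional to the bias and the window length, which is consistent with the trade-off between stability and currency that the text attributes to the window size.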
The size (= time) of the sliding window trades these attributes off against each other. A big window over a long time leads to very stable angles, since changes in speed, noise and vibrations are averaged out, but the angles also become less up to date.

Another task one has to complete when building sensors and circuits with high accuracy is to find out the characteristics of every single sensor. Measuring the accelerometers is easy, because a constant acceleration, the earth's gravity, is available everywhere, and the angle between sensor and ground can be measured. What is harder to find is an adjustable, constant angular velocity. We modified a Technics record player to turn as slowly as its motor allows by adding a big potentiometer to its speed adjustment. It was not slow enough, so we added a stepping motor driven by a PC for the low speeds. Et voilà, we had a programmable turntable. Luckily, nowadays we could do a wireless setup on the turntable.

Figure: The programmable turntable used for calibration

One problem is that the direction the helicopter is facing cannot be measured by an accelerometer, and an uncompensated gyroscope drifts too much. Therefore we needed a compass.

MARVIN's Compass

Our compass is built with three magnetometers made by SLC. They are resonating fluxgates called FGM. They have a digital output whose frequency is proportional to the measured magnetic flux density. We used three sensors to build a tilt-insensitive compass. Since the sensors have no mechanical parts, they are also not harmed by vibrations.

Figure: The self-invented compass

The FGM sensors are available in different versions with different sensitivities and packages. There are single-axis and double-axis sensors available. We used a double-axis and a single-axis sensor to build our compass. The output of the sensors is a digital TTL-compatible signal whose frequency is proportional to the flux density. The frequency ranges from 40 to 150 kHz, leading to a bandwidth of 20 kHz.
Since we are not interested in such a high bandwidth and our microcontroller is not fast enough to resolve such high frequencies, we used a counter to divide the sensor's output by 1000, so the bandwidth is reduced to 20 Hz, which is just what we need.

If you followed the thread of articles until here (really!? did you?) you have read about all hardware components that the robot needs to fly. What comes next is the digital camera.

MARVIN's Camera

MARVIN observes its environment with a downward-facing digital still camera, a Sanyo VPC-X350EX. It is connected via a serial port to the microcontroller. The camera's communication protocol was not published by its makers, but was worked out by someone on the net. See my DigiCam for Amiga for further information and links. The microcontroller handles the protocol and sends the picture down via our all-purpose datalink, mixed with all other data, to be evaluated by the computers at the base station. At a resolution of 640x480 pixels, the output size of the camera in JPEG is about 60 kB. In normal operation 90% of the downlink bandwidth is reserved for picture data, and we get a new picture down every 9 seconds, including all communication, camera set-up and shot overhead.

Why don't we use a normal video camera with one of the usual 2.4 GHz analog radio downlinks? Oh, we did ... at least we tried to, but the quality of the picture was distressing. First, the downlink suffered interference from the engine. We tried shielding, but without much improvement. Second, the video camera was disturbed by the helicopter's vibrations; using a better (and heavier) camera was no solution due to lack of money and load capacity. Have a look at some of the pictures; I think they speak for themselves.

All but the last picture were taken during the competition flight. Everything you see in the pictures is real size, no models.

By the way: for some it is still unknown how many times a smartcard can be rewritten.
For us it isn't anymore :). We have not really counted, but it has to be something around 10000, because we did kill our first smartcard. The program did this: take a picture, download the picture, erase the picture, and go back to the first step. That obviously killed our first smartcard, or at least the beginning of its memory. We bought a new card and I altered the program. Now it always uses the complete card memory (55 pictures) and erases the whole card when it is full. This card should hopefully last for the next half a million pictures.

Additional sensors are used to inspect the environment. One of them is the fire sensor.

MARVIN's Firesensor

As the rules of the competition prescribe, flames, which are actually up to fifty feet high, will burn in the competition area. To avoid flying into these flames, a flame sensor is needed. We were lucky and found an excellent flame sensor from Hamamatsu called UVtron. The UVtron is based on the principle of a photodiode, but is sensitive only in a very narrow band of the electromagnetic spectrum, reacting only to spectral lines that are apparently emitted only by oxidizing carbon. I have to admit that we were so impressed by its performance that we didn't question its principle of operation much. But read on. As in a normal photodiode, the anode emits electrons when hit by a photon of sufficient energy. Unlike a normal photodiode, the UVtron is not evacuated but filled with a special gas. When the released electron (accelerated by 400 V) crosses this gas, it emits photons in the same spectral range the UVtron is sensitive to, leading to a chain reaction.

Figure: The fire sensor mounted on MARVIN. The lid at the left has a very narrow slit to get a very sharp angular sensitivity.

To avoid a constant current flowing through the tube after the first "right" photon has hit it, the tube is powered by a capacitor that is charged at a constant rate. The available evaluation circuit charges at a rate of 20 Hz.
The evaluation circuit counts the number of discharges per 2 seconds, interprets the result and reports whether flames were detected or not. We made some minor changes to the circuit (mainly we added an output just before the counter) to get every discharge event directly, and did the counting and interpreting with our microcontroller. The sensor is highly sensitive, reacting to a gas cigarette lighter 5 m in front of it with a detection rate of 5 discharges per 2 seconds (40 is the maximum). The background noise while flying the robot in sunshine is 1 tick per 2 s. A problem, as always with an intensity sensor, is that you never know whether it is a small fire nearby or a big one far away. To get the position of the fire, it has to be triangulated from several detections.

We also added three cheap infrared sensors with daylight filters from Texas Instruments. They have a shorter detection range than the UVtron, so we hoped that the combination would give more information about the distance of the fire. But they still get confused by the sun, so we couldn't use them for intensity detection of fires. We still tried to use them by implementing a simple flicker detection, because if one detects quick fluctuations in intensity, it can't be the sun. However, the UVtron did its job so well that we had no use for the imprecise infrared sensors.

This was the last description (with respect to the thread of articles) of a component where I was directly involved in engineering the hardware or software. Nevertheless I will describe the other components here too, since I got to know all components of the helicopter over time. One of them is the ultrasonic rangefinder.

MARVIN's Ultrasonic Rangefinder

MARVIN has two ultrasonic rangefinders: the first looking forward and the second looking downward. With the first one MARVIN can detect obstacles and avoid crashing into them; the second is used to track the distance to the ground, its height.
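Returning briefly to the UVtron flame sensor: the counting of discharge events per 2-second window that the team moved into the microcontroller could look roughly like the sketch below. The function names and the threshold of 3 discharges are assumptions chosen for illustration (the text only gives about 1 tick per 2 s as background and 5 per 2 s for a lighter at 5 m); the real firmware is not documented here.

```python
def discharges_in_window(event_times_s, window_end_s, window_s=2.0):
    """Count discharge events inside the last `window_s` seconds.

    `event_times_s` is a list of timestamps (seconds) at which the tube
    discharged; in hardware these would come from the added output
    just before the counter.
    """
    window_start_s = window_end_s - window_s
    return sum(1 for t in event_times_s if window_start_s < t <= window_end_s)


def flame_detected(count, threshold=3):
    """Report a flame when the count clearly exceeds the background rate.

    The threshold is a hypothetical value between the quoted background
    (about 1 per 2 s) and the quoted lighter response (5 per 2 s).
    """
    return count >= threshold


# Example: five discharges in the last two seconds, as quoted for a lighter.
lighter_events = [0.1, 0.5, 0.9, 1.3, 1.7]
lighter_count = discharges_in_window(lighter_events, window_end_s=2.0)
print(lighter_count, flame_detected(lighter_count))
```

A single count says nothing about distance, so a sketch like this only answers "flame or no flame"; locating the fire still requires several detections from different positions, as the text notes.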
The GPS also tells MARVIN its height, but this is not necessarily the height above ground but the absolute height, and the ground may be uneven.

Figure: The ultrasonic range sensors mounted on MARVIN, one clicking forward and one clicking down. (Ultrasonic bursts are perceived as clicks by humans.)

The ultrasonic rangefinders are the ones commonly used for robots, made by Polaroid. We also saw other teams equipping their robots with them. Using the evaluation boards available from Polaroid, this component can be connected directly to the digital I/Os of our microcontroller, the heart of the helicopter, to which every sensor, servo and serial device is connected.

Flight Control (University of Berlin)

MARVIN's Microcontroller and Powersupply

MARVIN's processing heart is a 16-bit Siemens C167 microcontroller running at 20 MHz, combined with 256 kb of expandable SRAM on a custom-made board. The board was completely developed and built by students exclusively for MARVIN. They also made an I/O board that adds four serial ports and a switchboard for the servo control lines. The switchboard consists of high-power micro relays, which are used for switching the servo control between the computer and the human pilot, who is always there as a backup system. Both boards are stacked via their bus connectors.

Figure: Both boards stacked with the IO board on top, and the MC board from top and bottom.

On top of these two boards a third is stacked: the power supply for all onboard electronics. One case keeps the boards dry and warm :). The top side of the case is used as a heat sink for the power supply. From the case a single cable goes to every component, carrying all input and output data lines and power for the component. The whole system is power efficient, working for more than 20 minutes on a 7.2 V, 1.5 Ah rechargeable battery.

Figure: This case contains all three boards, mounted and connected on MARVIN.

The two boards were planned and designed by students, computer engineers.
The MC board is a four-layer board, the IO board a double-layer board. They were manufactured at the production facilities of the TFH Berlin. The power supply and the case were designed by an electrical engineer and are completely handmade.

Figure: See the copyright note and the team that made the boards immortalized on the boards.

Every component is connected to this central point. The compass, fire sensor, ultrasonic rangefinders, servos and RC signals use its digital I/O ports, the IMU uses the 10-bit A/D converter inputs, the GPS uses two serial ports, the camera uses one serial port and, last but not least, the DECT datalink uses a serial port.

MARVIN's Datalink

We use two Siemens MD101 Data units as our datalink. It uses the DECT standard (Digital European Cordless Telephone), which, as its name suggests, was originally used for cordless telephones at home. The MD101 was one of the first DECT devices, if not the very first, that can transfer arbitrary user data. By bundling 4 channels in each direction, a single MD101 Data reaches a transfer rate of 107 kbps in full duplex. The MD101 is connected via a standard V.24 serial port to the PC on the ground and to the microcontroller on the robot, acting as a fully transparent serial connection.

Figure: The DECT datalink module; one can see the counterpart on MARVIN in the back. The second picture is a close-up of the logo and the antenna.

The MD101 was originally intended as an in-house datalink between a PC and its analog or ISDN modem, just to avoid in-house cabling. But since most of us own a DECT telephone and know its reliability, we trusted the technology and bought two MD101s as soon as they were available.

The MD101 saved us a lot of trouble. Our first datalink was a spread-spectrum radio modem (Gina), but it suffered interference from the engine (probably its ignition) and was useless. But even if it had not, it also needed much more power, was heavier, covered less distance and had only a quarter of the data rate at four times the price. It was ancient technology.
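As a rough plausibility check of the figures quoted in this document (a JPEG of about 60 kB, 90% of the downlink reserved for picture data, a 107 kbps link), the raw transfer time of one picture can be estimated as follows. The function name is hypothetical, and two things are assumptions rather than facts from the text: that 1 kB means 1000 bytes, and that each byte costs 10 bits on the serial line (8 data bits plus start and stop bit).

```python
def picture_transfer_seconds(size_bytes, link_bps, picture_share, bits_per_byte=10):
    """Estimate the raw time to downlink one picture.

    size_bytes     -- picture size in bytes
    link_bps       -- gross link rate in bits per second
    picture_share  -- fraction of the bandwidth reserved for picture data
    bits_per_byte  -- bits on the wire per payload byte (10 assumes 8N1 framing)
    """
    usable_bps = link_bps * picture_share
    return size_bytes * bits_per_byte / usable_bps


# Figures from the text; the framing and kB size are assumptions (see above).
seconds = picture_transfer_seconds(60_000, 107_000, 0.9)
print(f"{seconds:.1f} s raw transfer time per picture")
```

Under these assumptions the raw transfer takes a little over 6 seconds, which is in line with the reported 9 seconds per picture once camera set-up, the shot itself and protocol overhead are added.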
The MD101, on the other hand, simply does what it should do: transfer data under every condition. It turned out to be very insensitive to its surrounding environment, and the error rate in flight is very low. Five errors in a flight of ten minutes is considered a high error rate. I have to add here that the MD101 does error detection and correction and automatic repeat request, but we do not use any serial handshake, to keep our data as fresh as possible. So if the MD101 resends a packet and its serial input buffer is full, some of our data is dropped and the receiving program recognizes this.

At this point the thread will end for now. All hardware components have now been briefly described; software is a different story and should be told sometime.

System Requirement (University of Berlin)

MARVIN: Multi-purpose Aerial Robot with Intelligent Navigation

Figure: MARVIN flying near Berlin

MARVIN is an autonomous flying robot, developed by students in a student project. More than 30 students were involved in this project over the last 1.5 years. The robot was developed with the rules of the International Aerial Robotics Competition (IARC) in mind. This competition is arranged annually by the Association for Unmanned Vehicle Systems International (AUVS). This year the competition took place

The most important thing in advance: the TU Berlin with MARVIN took first place in the IARC this year, because MARVIN was the only robot performing a completely autonomous start, flight and landing. It also found a barrel and identified the label on it. Links to press releases and more can be found on MARVIN's official homepage (in German) or a poster about MARVIN (PDF, in German).

The exact rules of the competition can be found at AUVS. In short: the robot must start and fly completely autonomously. Autonomous, as understood by the AUVS, means that the robot has to act without any human intervention right from the beginning of the mission it has to perform.
It has to be completely self-controlled, recognizing its environment and reacting to what it "sees". The computing power needed to control the whole robot system may also be on the ground at a fixed base station, as long as the datalink to the robot is wireless.

Figure: MARVIN flying in the USA just before the competition

First of all, the robot has to fly, where flying means "staying in the air", not jumping or bouncing. A hovercraft would not fit the rules either. Like many of the other teams, we came to the conclusion that an RC helicopter would be a good start for building a flying robot.

The robot's mission is to fly over an unknown area that has just been struck by a disaster. The resulting catastrophic scene includes fires, water fountains, dropped barrels containing hazardous materials and people lying around. The robot has to build a map of this area, recognize and avoid flying into fire or water, identify the contents of the barrels by their labels, find the people and decide whether they are dead or alive. This information, presented in a human-readable form, is rated by the judges in the competition and can be very helpful for human helpers who have to go into the disaster area in real life.

Figure: MARVIN standing on the roof of a university building

Such a robot consists of a bunch of parts working as a unit, hardware as well as software. Behind the next links you will find overviews of the individual components, written by myself or, if available, by the people who did that part. I was personally involved in the GPS, IMU, compass, fire sensor and onboard digital camera.

The following articles are logically threaded; one can start at the first link and browse through them.
At the very first, the thing that flies: the Helicopter.
To get the absolute position: the GPS.
To get the yaw, pitch and roll angles: the IMU.
To get the orientation: the Compass.
To get pictures: the Digital Camera.
To keep MARVIN from getting burned: the Firesensor.
To track possible obstacles and hills: the Ultrasonic Rangefinder.
The system's heart: the Microcontroller.
And the virtual leash that takes MARVIN for a flight: the Datalink.

GENERAL RULES GOVERNING ENTRIES

1. Vehicles must be unmanned and autonomous. They must compete based on their ability to sense the semi-structured environment of the Competition Arena. They may be intelligent or preprogrammed, but they must not be flown by a remote human operator.

2. Computational power need not be carried by the air vehicle or subvehicle(s). Computers operating from standard commercial power may be set up outside the Competition Arena boundary and uni- or bi-directional data may be transmitted to/from the vehicles in the arena.

3. Data links will be by radio, infrared, acoustic, or other means so long as no tethers are employed.

4. The air vehicles must be free-flying, autonomous, and have no entangling encumbrances such as tethers.

5. Subvehicles may be deployed within the arena to search for, and/or acquire information or objects. Subvehicle(s) must be fully autonomous, and must coordinate their actions and sensory inputs with all other components operating in the arena. Subvehicles may not act so independently that they could be considered separate, distinct entries to the competition. Any number of cooperating autonomous subvehicles is permitted, however none are required. If used, subvehicles must be deployed in the air from an autonomous aerial robot. Subvehicles may be airborne or multimode (able to operate in the air or on the ground). All vehicles must remain within the boundaries of the arena.

6. Air vehicles and air-deployed subvehicles may be of any size, but together may weigh no more than 90 kg/198 lbs (including fuel) when operational.

7. Any form of propulsion is acceptable if deemed safe in preliminary review by the judges.

8.
So your entry form will be anticipated, and so you can be notified that it has not arrived were it to get lost in the mail, an Intention to Compete should be received no later than the date shown in the schedule at the bottom of these web pages. To avoid unnecessary delay due to the mail (particularly for international entries), a letter of intention to compete can be transmitted by E-MAIL to Robert C. Michelson, Competition organizer at robert.michelson@gtri.gatech.edu. Submission of a letter of intention to compete is not a requirement, however entries received after the deadline which are not clearly postmarked may be rejected as late unless prior intention to compete has been expressed.

9. The official World Wide Web pages for the competition are your source for all information concerning rules, interpretations, and information updates regarding the competition. In anticipation of the upcoming Qualifier, the official rules and application form will be obtained from the official World Wide Web pages and will not be mailed to potential competitors. If you have received these rules as a hard copy from some other source, be advised that the official source of information can be found at: http://avdil.gtri.gatech.edu/AUVS/IARCLaunchPoint.html

The application form is available electronically. All submissions must be in English. The completed application form is not considered an official entry until a check or money order for 1000 U.S. Dollars is received by mail on or before February 1 of the current year for which a team officially enters the competition (this is a one-time application fee). This application fee covers all of the qualifiers. Teams entering for the first time subsequent to 2001 are still liable for the application fee. (This fee has been instituted to discourage teams from applying that are not serious competitors).
As an incentive, part of this application fee will be returned to those teams performing to a specified level during each qualifier (see the Qualification and Scoring section for details on fee rebate).

A brief concept outline describing the air vehicle must be submitted for safety review by AUVSI (the application form provides space for this). AUVSI will either confirm that the submitting team design concept is acceptable, or will suggest safety improvements that must be made in order to participate.

A web page showing a picture of your primary air vehicle flying either autonomously or under remote human pilot control must be posted/updated by March 1 of each year to continue to be considered as a serious entry. The page should also include sections describing the major components of your system, a description of your entry's features, the responsibilities of each of your team members, and recognition for your sponsors. At least one picture of your vehicle flying is required, though additional photographs of the other components comprising the system are desirable. People accessing your page should be able to learn something about your system from the pages. Web pages that are deemed adequate will be listed with a link from the official competition web site.

A research paper describing your entry will be due by the date shown at the bottom of these pages. The paper should be submitted electronically in .pdf format via E-MAIL to robert.michelson@gtri.gatech.edu (no hard copy is required).

The 2002 International Aerial Robotics Competition will be hosted in the United States although plans include multiple venues in both the American Continent and Europe. Qualifiers are anticipated over 3 years with a final "fly-off" being conducted at a single location in the fourth year (AD2004) unless the mission requirements can be demonstrated completely by a team during one of the qualifying rounds.

10.
Teams may be comprised of a combination of students, faculty, industrial partners, or government partners. Students may be undergraduate and/or graduate students. Inter-disciplinary teams are encouraged (EE, AE, ME, etc.). Members from industry, government agencies (or universities, in the case of faculty) may participate, however full-time students must be associated with each team. The student members of a joint team must make significant contributions to the development of their entry. Only the student component of each team will be eligible for the cash awards. Since this fourth mission of the International Aerial Robotics Competition was announced in AD2000 and will run for several years (until the mission is completed), anyone who is enrolled in a college or university as a full-time student any time during calendar years 2000 through 2004 is qualified to be a team member. "Full-time" is defined as 27 credit hours during any one calendar year while not having graduated prior to May 2001. Graduation after May 2001 will not affect your status as a team.