Univariate Calculus

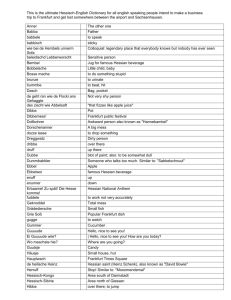

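MATHEMATICAL ECONOMICS

Linear Algebra

Cramer's Rule

A system of n linear equations in n unknowns can be solved by using Cramer's Rule, provided the determinant of the coefficient matrix is non-zero. Solutions are expressed as ratios of determinants.

Example

$$Y = C^* + c(1-t)Y + I^* - bR + G^* + NX^* - mY$$

This is simplified to

$$Y = A^* - bR$$

$$MS = kY - hR$$

In Matrix Form

$$Y + bR = A^*$$

$$kY - hR = MS$$

$$\begin{bmatrix} 1 & b \\ k & -h \end{bmatrix} \begin{bmatrix} Y \\ R \end{bmatrix} = \begin{bmatrix} A^* \\ MS \end{bmatrix}$$

Cramer's Rule

For any variable i, its value is $|A(i)|/|A|$, where $|A|$ is the determinant of the matrix of coefficients and $|A(i)|$ is the determinant of the coefficient matrix with the i'th column replaced by the vector of constants.

Solution

$$|A| = \begin{vmatrix} 1 & b \\ k & -h \end{vmatrix} = -h - bk$$

$$|A(1)| = \begin{vmatrix} A^* & b \\ MS & -h \end{vmatrix} = -A^*h - bMS$$

$$|A(2)| = \begin{vmatrix} 1 & A^* \\ k & MS \end{vmatrix} = MS - A^*k$$

$$Y^* = \frac{-A^*h - bMS}{-h - bk} = \frac{A^*h + bMS}{h + bk}, \qquad R^* = \frac{MS - A^*k}{-h - bk} = \frac{A^*k - MS}{h + bk}$$

Expansion by Cofactors

Determinants of matrices with dimensions greater than 2×2 can be calculated using expansion by cofactors. Expansion by cofactors works by expanding a single row or column by its associated cofactors.

Each matrix is made up of a number of elements. Each element's position in the array is described by its numerical subscripts. These refer first to the row and second to the column.

Example:

$$A = \begin{bmatrix} 1 & 0 \\ 2 & 1 \end{bmatrix}$$

The element $a_{11} = 1$, the element $a_{12} = 0$, the element $a_{21} = 2$ and the element $a_{22} = 1$.

Any matrix can also be subdivided into smaller matrices. These submatrices also have determinants. Here the determinant of a submatrix is termed a cofactor, and each element in a matrix has an associated cofactor: the determinant of the matrix formed by omitting the row and column of that element. (In standard usage this unsigned determinant is called the minor, and the cofactor includes the sign $(-1)^{i+j}$; the expansion formula below applies that sign separately.)

Example

$$A = \begin{bmatrix} -1 & 0 & 2 \\ -2 & 1 & 3 \\ 1 & 3 & -2 \end{bmatrix}$$

The cofactors of the first column are:

$$C_{11} = \begin{vmatrix} 1 & 3 \\ 3 & -2 \end{vmatrix} = -11, \qquad C_{21} = \begin{vmatrix} 0 & 2 \\ 3 & -2 \end{vmatrix} = -6, \qquad C_{31} = \begin{vmatrix} 0 & 2 \\ 1 & 3 \end{vmatrix} = -2$$

EXPANSION

The determinant of A can be found by expanding the first column with its associated cofactors. In fact, any single row or column can be used. For expansion down column j, the formula is:

$$|A| = \sum_{i} (-1)^{i+j} a_{ij} C_{ij}$$

In this example it is:

$$(-1)^{1+1}(-1)(-11) + (-1)^{2+1}(-2)(-6) + (-1)^{3+1}(1)(-2) = 11 - 12 - 2 = -3$$

Multivariate Calculus

A function $f(x, y)$ may be differentiated with respect to each of its arguments to produce its partial derivatives.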
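Setting all partial derivatives equal to zero and solving the resulting equations simultaneously generates the candidate optimal values.

Example

$$C = -2E^2 + 78E - 2X^2 + 66X - 2EX$$

$$\frac{\partial C}{\partial X} = 0 = -4X + 66 - 2E$$

$$\frac{\partial C}{\partial E} = 0 = -4E + 78 - 2X$$

Rearranged, the system is $4X + 2E = 66$ and $2X + 4E = 78$. A solution can be found by applying Cramer's Rule: $|A| = 12$, $|A(1)| = 108$, $|A(2)| = 180$, so $X^* = 108/12 = 9$ and $E^* = 180/12 = 15$.

Hessians

Whether a stationary point is a maximum or a minimum is established with a Hessian matrix. A Hessian (H) is the matrix of all the second-order partial derivatives. Cross partial derivatives taken with respect to the same pair of variables have the same value whatever the order of differentiation (Young's Theorem).

Partial Derivatives

$$\frac{\partial^2 C}{\partial X^2} = -4, \qquad \frac{\partial^2 C}{\partial E^2} = -4$$

Cross Partial Derivatives

$$\frac{\partial^2 C}{\partial X \partial E} = \frac{\partial^2 C}{\partial E \partial X} = -2$$

Hessian Matrix

$$H = \begin{bmatrix} C_{XX} & C_{EX} \\ C_{XE} & C_{EE} \end{bmatrix} = \begin{bmatrix} -4 & -2 \\ -2 & -4 \end{bmatrix}$$

Principal Minors

Each Hessian is made up of leading principal minors $H_1, H_2, \ldots, H_n = H$. The principal minors above are:

$$|H_1| = |-4| = -4, \qquad |H_2| = |H| = \begin{vmatrix} -4 & -2 \\ -2 & -4 \end{vmatrix} = 12$$

Second Order Condition for a maximum

All principal minors alternate in sign, the first, $|H_1|$, being negative.

Second Order Condition for a minimum

All principal minors are positive.

Hence, the principal minors above show that the optimal values are associated with a maximum point.

Implicit Function Theorem (One Equation)

Consider the function $f(x, y) = 0$. Take the total differential of the equation:

$$\frac{\partial f(x, y)}{\partial x} dx + \frac{\partial f(x, y)}{\partial y} dy = 0$$

Then rearrange to get:

$$\frac{dy}{dx} = -\frac{\partial f / \partial x}{\partial f / \partial y}$$

which is the implicit function theorem.

Example (with exponents $\alpha$ and $\beta$)

$$A x^{\alpha} y^{\beta} = U$$

$$U - A x^{\alpha} y^{\beta} = f(x, y) = 0$$

$$\frac{\partial f(x, y)}{\partial x} = -\alpha A x^{\alpha - 1} y^{\beta}, \qquad \frac{\partial f(x, y)}{\partial y} = -\beta A x^{\alpha} y^{\beta - 1}$$

$$\frac{dy}{dx} = -\frac{\alpha A x^{\alpha - 1} y^{\beta}}{\beta A x^{\alpha} y^{\beta - 1}} = -\frac{\alpha}{\beta} \frac{y}{x}$$

Implicit Function Theorem (Multiple Equations)

Consider the two-equation system, where $\theta$ denotes the exogenous parameter:

$$f(x_1, x_2, \theta) = 0$$

$$g(x_1, x_2, \theta) = 0$$

Take the total differentials of the two equations:

$$\frac{\partial f}{\partial x_1} dx_1 + \frac{\partial f}{\partial x_2} dx_2 + \frac{\partial f}{\partial \theta} d\theta = 0$$

$$\frac{\partial g}{\partial x_1} dx_1 + \frac{\partial g}{\partial x_2} dx_2 + \frac{\partial g}{\partial \theta} d\theta = 0$$

This system can be written in matrix notation:

$$\begin{bmatrix} \dfrac{\partial f}{\partial x_1} & \dfrac{\partial f}{\partial x_2} \\ \dfrac{\partial g}{\partial x_1} & \dfrac{\partial g}{\partial x_2} \end{bmatrix} \begin{bmatrix} dx_1 \\ dx_2 \end{bmatrix} = \begin{bmatrix} -\dfrac{\partial f}{\partial \theta} d\theta \\ -\dfrac{\partial g}{\partial \theta} d\theta \end{bmatrix}$$

Use Cramer's Rule to solve for $dx_1$:

$$dx_1 = \frac{\begin{vmatrix} -\dfrac{\partial f}{\partial \theta} d\theta & \dfrac{\partial f}{\partial x_2} \\ -\dfrac{\partial g}{\partial \theta} d\theta & \dfrac{\partial g}{\partial x_2} \end{vmatrix}}{\begin{vmatrix} \dfrac{\partial f}{\partial x_1} & \dfrac{\partial f}{\partial x_2} \\ \dfrac{\partial g}{\partial x_1} & \dfrac{\partial g}{\partial x_2} \end{vmatrix}}$$

$$\frac{dx_1}{d\theta} = \frac{-\dfrac{\partial f}{\partial \theta} \dfrac{\partial g}{\partial x_2} + \dfrac{\partial g}{\partial \theta} \dfrac{\partial f}{\partial x_2}}{|J|}, \qquad |J| = \frac{\partial f}{\partial x_1} \frac{\partial g}{\partial x_2} - \frac{\partial f}{\partial x_2} \frac{\partial g}{\partial x_1}$$

Constrained Optimisation

Many economic problems involve an agent facing constraints. The most common approach in mathematical economics to constrained optimisation is the Lagrangean technique.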
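
Before working through the Lagrangean technique, the unconstrained example from the Multivariate Calculus and Hessians material above can be checked numerically. The sketch below is only an illustration and is not part of the original notes: the helper names `det` and `cramer` are hypothetical, and it assumes the first-order conditions rearrange to 4X + 2E = 66 and 2X + 4E = 78, with Hessian entries of -4 on the diagonal and -2 off it (the sign pattern consistent with |A| = 12, X* = 9 and E* = 15).

```python
def det(m):
    """Determinant by cofactor expansion down the first column."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0
    for i in range(n):
        # Submatrix with row i and the first column removed.
        minor = [row[1:] for k, row in enumerate(m) if k != i]
        # (-1) ** i is the sign (-1)^(i+j) for the first column, 0-indexed.
        total += (-1) ** i * m[i][0] * det(minor)
    return total


def cramer(a, b):
    """Solve a x = b by Cramer's Rule; needs a non-zero determinant."""
    d = det(a)
    if d == 0:
        raise ValueError("coefficient determinant is zero")
    solution = []
    for j in range(len(a)):
        # Replace column j with the vector of constants.
        a_j = [row[:j] + [b[i]] + row[j + 1:] for i, row in enumerate(a)]
        solution.append(det(a_j) / d)
    return solution


# Assumed first-order conditions: 4X + 2E = 66 and 2X + 4E = 78.
coeffs = [[4, 2], [2, 4]]
consts = [66, 78]
x_star, e_star = cramer(coeffs, consts)

# Assumed Hessian of C and its leading principal minors |H1|, |H2|.
hessian = [[-4, -2], [-2, -4]]
minors = [det([row[:k] for row in hessian[:k]]) for k in (1, 2)]

# Maximum: minors alternate in sign, starting negative.
is_max = all((-1) ** k * m > 0 for k, m in zip((1, 2), minors))
print(x_star, e_star, minors, is_max)
```

Running the sketch prints `9.0 15.0 [-4, 12] True`, matching the values quoted above. The same `det` helper applied to the 3×3 cofactor example gives -3.
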
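The value of the Lagrangean multiplier is the shadow price of the constraint. If the constraint is relaxed by one unit, the Lagrangean multiplier tells us approximately how much the optimal value of the objective function will change. Thus, in a consumer choice problem, the Lagrangean multiplier measures the marginal utility of income.

Suppose we have the following production problem.

$$\max_{N, K} \; p(2 \ln N + 4 \ln K) \quad \text{s.t.} \quad 2N + 3K = 720$$

The first function above is the objective. The second function is the constraint. We create a single composite function (the Lagrangean), which includes a third variable λ (the Lagrangean multiplier). The Lagrangean for the above problem (assuming that p is one dollar) is:

$$L = 2 \ln N + 4 \ln K + \lambda (720 - 2N - 3K)$$

Solution: determine the three first order conditions, eliminate λ and then solve for N and K. The first order conditions are:

$$\frac{\partial L}{\partial N} = \frac{2}{N} - 2\lambda = 0$$

$$\frac{\partial L}{\partial K} = \frac{4}{K} - 3\lambda = 0$$

$$\frac{\partial L}{\partial \lambda} = 720 - 2N - 3K = 0$$

To eliminate the Lagrangean multiplier, we rearrange the first two first-order conditions and divide the second into the first:

$$\frac{2/N}{4/K} = \frac{2\lambda}{3\lambda}, \quad \text{then} \quad \frac{2K}{4N} = \frac{2}{3}$$

Cross-multiply to get:

$$6K = 8N \quad \Rightarrow \quad N = \tfrac{6}{8} K = 0.75K$$

Substitute into the constraint:

$$720 = 2N + 3K = 2(0.75K) + 3K = 4.5K$$

$$K = 160, \qquad N = 120$$

Bordered Hessians

The ordinary Hessian cannot be used to derive the second order conditions for problems of constrained optimisation, because it can ignore the effect of the constraints. The solution is to use a Bordered Hessian.

Example

$$L = u(x, y) + \lambda (M - p_x x - p_y y)$$

The first order conditions are:

$$\frac{\partial L}{\partial x} = \frac{\partial u(x, y)}{\partial x} - \lambda p_x = 0$$

$$\frac{\partial L}{\partial y} = \frac{\partial u(x, y)}{\partial y} - \lambda p_y = 0$$

$$\frac{\partial L}{\partial \lambda} = M - p_x x - p_y y = 0$$

The second derivatives (not conditions) are:

$$\frac{\partial^2 L}{\partial x^2} = U_{xx}, \qquad \frac{\partial^2 L}{\partial y^2} = U_{yy}, \qquad \frac{\partial^2 L}{\partial \lambda^2} = 0$$

$$\frac{\partial^2 L}{\partial x \partial y} = \frac{\partial^2 L}{\partial y \partial x} = U_{xy}$$

$$\frac{\partial^2 L}{\partial x \partial \lambda} = \frac{\partial^2 L}{\partial \lambda \partial x} = -p_x$$

$$\frac{\partial^2 L}{\partial y \partial \lambda} = \frac{\partial^2 L}{\partial \lambda \partial y} = -p_y$$

The Bordered Hessian:

$$\bar{H} = \begin{bmatrix} U_{xx} & U_{xy} & -p_x \\ U_{xy} & U_{yy} & -p_y \\ -p_x & -p_y & 0 \end{bmatrix}$$

Note that in this case, the size (n) of the Hessian is 2, even though the matrix is 3×3.

A border-preserving minor of order r is found by deleting (n - r) rows and the corresponding columns from the matrix, never deleting the border row or column, and then calculating the sign of the determinant of what remains.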
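
The deletion procedure just described can be sketched in code and applied to the production problem solved earlier. This is a hedged illustration, not part of the original notes: the helper names `det` and `border_preserving_minors` are hypothetical, the border is assumed to occupy the last row and column, and the bordered Hessian is derived here from the Lagrangean L = 2 ln N + 4 ln K + λ(720 - 2N - 3K) at N = 120, K = 160.

```python
from itertools import combinations


def det(m):
    """Determinant by cofactor expansion down the first column."""
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for i in range(len(m)):
        # Submatrix with row i and the first column removed.
        minor = [row[1:] for k, row in enumerate(m) if k != i]
        total += (-1) ** i * m[i][0] * det(minor)
    return total


def border_preserving_minors(h, n, r):
    """Delete (n - r) of the first n rows and matching columns.

    Assumes the border sits after the first n rows/columns, so it is
    never among the rows and columns that are deleted.
    """
    minors = []
    for drop in combinations(range(n), n - r):
        keep = [i for i in range(len(h)) if i not in drop]
        minors.append(det([[h[i][j] for j in keep] for i in keep]))
    return minors


N, K = 120.0, 160.0  # solution found in the text
assert 2 * N + 3 * K == 720  # the constraint holds at the solution

# Second derivatives of L at (N, K): L_NN = -2/N^2, L_KK = -4/K^2,
# L_NK = 0, with border entries -2 and -3 (derived for this sketch).
bordered = [
    [-2 / N**2, 0.0, -2.0],
    [0.0, -4 / K**2, -3.0],
    [-2.0, -3.0, 0.0],
]

n = 2
order_2 = border_preserving_minors(bordered, n, 2)  # the full determinant
# Condition for a maximum: sign of (-1)^r for r = 2, ..., n.
is_max = all((-1) ** 2 * m > 0 for m in order_2)
print(order_2, is_max)
```

The single order-2 minor equals 18/N² + 16/K², a small positive number, so under the condition stated for r = 2, …, n the point N = 120, K = 160 is consistent with a maximum.
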
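A leading border-preserving minor is only the leading minor of order r.

Example

$$\bar{H} = \begin{bmatrix} 4 & 10 & 1 \\ 10 & 2 & 2 \\ 1 & 2 & 0 \end{bmatrix}$$

This has two border-preserving principal minors of order 1:

$$|\bar{H}_1| = \begin{vmatrix} 2 & 2 \\ 2 & 0 \end{vmatrix} \quad \text{or} \quad \begin{vmatrix} 4 & 1 \\ 1 & 0 \end{vmatrix}$$

The first is the leading principal minor. The Bordered Hessian has one principal minor of order 2:

$$|\bar{H}_2| = |\bar{H}| = \begin{vmatrix} 4 & 10 & 1 \\ 10 & 2 & 2 \\ 1 & 2 & 0 \end{vmatrix}$$

The second order conditions for a maximum (minimum) are that the border-preserving principal minors of order r of the bordered Hessian have the sign of $(-1)^r$ (are negative) for r = 2, …, n.

The determinant of the bordered Hessian above is 22, which is positive and so has the sign of $(-1)^2$; hence it satisfies the second order condition for a maximum.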
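
As a check on the value of 22 quoted above, the determinant of the 3×3 bordered Hessian can be expanded along its first row. This worked step is added for illustration; the choice of row is arbitrary, and any row or column gives the same result.

$$\begin{vmatrix} 4 & 10 & 1 \\ 10 & 2 & 2 \\ 1 & 2 & 0 \end{vmatrix} = 4(2 \cdot 0 - 2 \cdot 2) - 10(10 \cdot 0 - 2 \cdot 1) + 1(10 \cdot 2 - 2 \cdot 1) = -16 + 20 + 18 = 22$$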