CGTAT Notes 2010-05-17

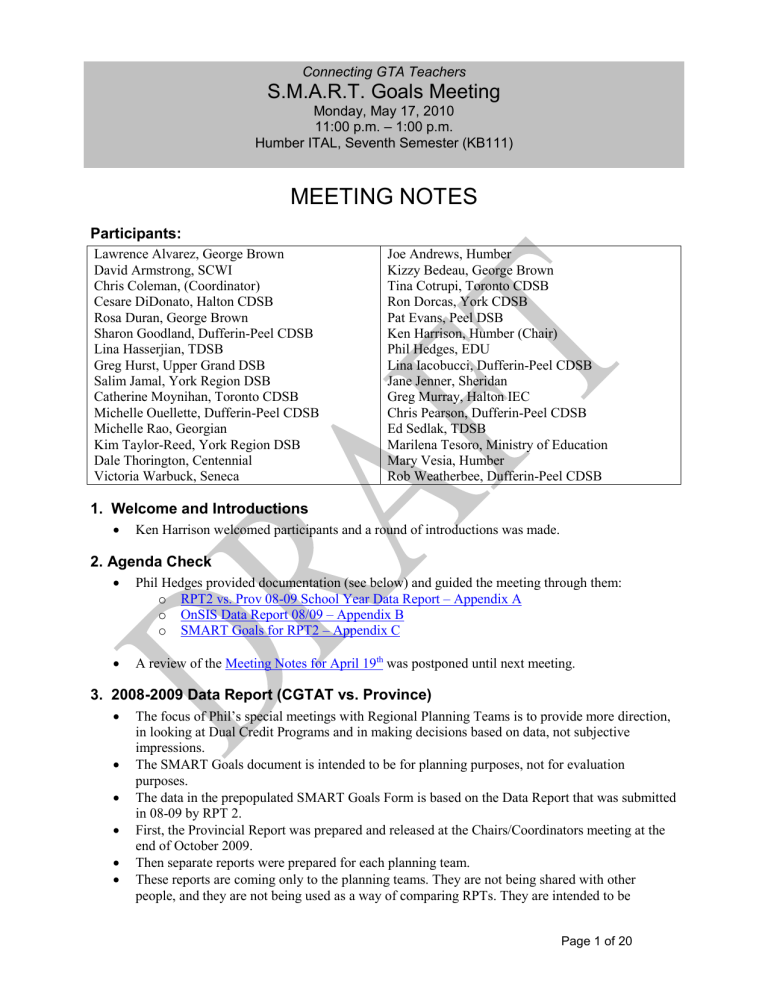

Connecting GTA Teachers

S.M.A.R.T. Goals Meeting

Monday, May 17, 2010

11:00 a.m. – 1:00 p.m.

Humber ITAL, Seventh Semester (KB111)

MEETING NOTES

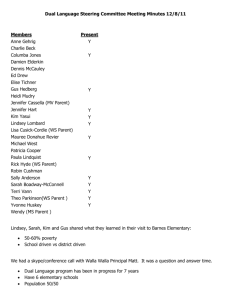

Participants:

Lawrence Alvarez, George Brown

David Armstrong, SCWI

Chris Coleman, (Coordinator)

Cesare DiDonato, Halton CDSB

Rosa Duran, George Brown

Sharon Goodland, Dufferin-Peel CDSB

Lina Hasserjian, TDSB

Greg Hurst, Upper Grand DSB

Salim Jamal, York Region DSB

Catherine Moynihan, Toronto CDSB

Michelle Ouellette, Dufferin-Peel CDSB

Michelle Rao, Georgian

Kim Taylor-Reed, York Region DSB

Dale Thorington, Centennial

Victoria Warbuck, Seneca

Joe Andrews, Humber

Kizzy Bedeau, George Brown

Tina Cotrupi, Toronto CDSB

Ron Dorcas, York CDSB

Pat Evans, Peel DSB

Ken Harrison, Humber (Chair)

Phil Hedges, EDU

Lina Iacobucci, Dufferin-Peel CDSB

Jane Jenner, Sheridan

Greg Murray, Halton IEC

Chris Pearson, Dufferin-Peel CDSB

Ed Sedlak, TDSB

Marilena Tesoro, Ministry of Education

Mary Vesia, Humber

Rob Weatherbee, Dufferin-Peel CDSB

1. Welcome and Introductions

Ken Harrison welcomed participants and a round of introductions was made.

2. Agenda Check

Phil Hedges provided documentation (see below) and guided the meeting through it:

– RPT2 vs. Prov 08-09 School Year Data Report – Appendix A

– OnSIS Data Report 08/09 – Appendix B

– SMART Goals for RPT2 – Appendix C

A review of the Meeting Notes for April 19th was postponed until next meeting.

3. 2008-2009 Data Report (CGTAT vs. Province)

The focus of Phil’s special meetings with Regional Planning Teams is to provide more direction in looking at Dual Credit Programs and in making decisions based on data rather than subjective impressions.

The SMART Goals document is intended to be for planning purposes, not for evaluation purposes.

The data in the prepopulated SMART Goals Form is based on the Data Report that was submitted in 08-09 by RPT 2.

First, the Provincial Report was prepared and released at the Chairs/Coordinators meeting at the end of October 2009.

Then separate reports were prepared for each planning team.

These reports are coming only to the planning teams. They are not being shared with other people, and they are not being used as a way of comparing RPTs. They are intended to be “conversation starters”, to see what your RPT data looks like in comparison with the provincial picture.

The updated version of this report is scheduled to be out this week. Nothing major will be changed in this report.

The top paragraph of the form is a summary of the information SCWI had. RPT 2 was approved for 991 students of which 751 actually participated according to the information that RPT 2 submitted. In other words 75.8% participated. Provincially, that number was 80%.
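The participation figure above can be reproduced with a short calculation. This is a minimal sketch using only the two RPT 2 numbers quoted in these notes; the function name `participation_rate` is illustrative and not part of any SCWI tool.

```python
def participation_rate(approved: int, actual: int) -> float:
    """Return the share of approved students who actually participated, in percent."""
    if approved <= 0:
        raise ValueError("approved must be a positive number of students")
    return 100.0 * actual / approved


# RPT 2 figures for 08-09 as quoted in the notes.
rpt2_rate = participation_rate(approved=991, actual=751)

# The gap between Approved and Actual is the complement of the rate.
rpt2_gap = 100.0 - rpt2_rate

print(f"Participation: {rpt2_rate:.1f}%  Gap: {rpt2_gap:.1f}%")
```

The same function could be applied to the provincial totals once the Final Data Reports are in, which would show how far the "Approved" number used in messaging is from the "Actual" number.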

We need to look at the gap between the Approved and the Actual because when we’re messaging about how many students ‘participated’, what number should be used? Typically the number that is used is the ‘Approved’ number. So, for this year SCWI is saying 7,500 students across the province have been approved, but it won’t know how many students are actually participating until the Final Data Reports are submitted this June. If it turns out the gap is large, it could cause a problem.

The Age Distribution (page 1) is just for information. One RPT may focus more or less on a particular age group than another team. A breakdown by gender has been requested. There are some RPTs with some particular programs/courses focussing on increasing the percentage of males or females, and this breakdown is a way of checking to see if that’s happening.

We need to quantify the distinction between students who are “disengaged and/or underachieving but who have the potential to succeed” (the primary target group) and students who are in SHSM programs, in order to “protect” the primary group. When the provincial report for 08-09 was distributed, it said 40% of students in dual credit programs in 08-09 were “disengaged and/or underachieving with the potential to succeed”. In the view of some, this meant only 40% of students fit into the target group – which is not what SCWI wants to be saying and is probably not the reality.

Part of the difficulty here is the question. Some people don’t want to label students as “disengaged and/or underachieving with the potential to succeed”. Some might find it nice if they could label them or if they came in pre-labeled. The question on the data report was “How many students were, or may have been, ‘disengaged and/or underachieving’ prior to entering this dual credit program?” What SCWI really wants to know is “How many students in your DCP fit the primary target group?” There is increasing pressure to allow several other groups of students into dual credit programs. SCWI is in the process of refining the Student Selection Checklists that profile students who fit the primary target group. Nevertheless, the Ministry wants the final decisions to remain at the local level.

RPT2’s percentage of students who are “disengaged and/or underachieving with the potential to succeed” was slightly higher in 08-09 than the provincial percentage.

It’s probably easier to determine if students have dropped out, but even there, questions are often being asked at a time when the students have left and we may not know this information. Still, SCWI would like to be able to point out that a significant number of these students had dropped out, and that this is the program that got them back and hopefully went a long way toward those students being successful.

Students with an Individual Education Plan (IEP) or identified through the Identification, Placement and Review Committee (IPRC) process are students with special educational needs. These students are flagged in the school systems, and in the OnSIS data submissions. There is a whole range of “exceptionalities”; it could be gifted, various types of learning disabilities, behavioural issues, and so on.

It’s possible for a student to have an IEP without going through the IPRC process. Schools want to provide the support needed without having to go through the IPRC process, which is very formal and time consuming. The tendency for the last number of years has been to integrate students who have special education needs.

In the post-secondary systems, there are all sorts of supports in place for students with special learning needs. In many cases there is more equipment and more service than in the secondary school system. An issue arises when secondary school students with special needs go on to college: they must self-identify in order to get the supports. But typically they say they don’t need the support, and three months later the assessment process has to be started.

One of the advantages of the dual credit program for those students is the connection can be made sooner.

Attention needs to be paid to whether or not the needs of these students in dual credit programs are being met. So far there’s no evidence there’s a problem. In fact, parents and students are often surprised at the level of support available at the college level. If problems do arise, the Ministry wants to know about them.

The chart on page 3 shows that RPT2’s percentage of students with special educational needs was higher than the provincial percentage. The chart indicates that students with special needs are getting into DCPs -- which is important because we don’t want to say these students are being excluded, but at the same time we don’t want to say that all of our students have special education needs.

With regard to Success and Retention Rates for Dual Credit Programs (page 4), the start date was never clear. Ten days after the start is probably the date to use, but this can be sorted out at a local level and on an individual program basis. “It’s what you normally use”, e.g. the Add/Drop date the college uses, which is two sessions.

SCWI wants to use a more-or-less consistent number across the province because they want to be able to say, of the number of students who started the program, what percentage actually finished the program, whether they passed or failed. If we can get target group students into these programs and they are continuing in these programs, that’s important. It’s not that the colleges are saying, “Here are the courses we’re running,” and telling the boards, “Send us students.” There is a process involved to determine who gets into the program. There are some processes involved in deciding what to run, and there are some processes for review.

Looking at Figure 7, the Success Rate in 08-09 provincially was 79.1% and for RPT2 it was 81.45%. Those are pretty high numbers, given the group we’re working with.

The next couple of pages show the retention rate and success rate and the last page (6) shows, based on the Data Report, the number of programs that were offered in 08-09 by delivery approach, the number of students, and so on. Is there information, or should we be gathering information, about why the retention rates in some approaches are higher than in others? As it is, by looking just at the data, there is a ‘danger’ that it might be decided that one approach should be used and another dropped. Without explanation, perhaps this piece should not be published?

The issue was raised about the significant difference between assessment policies in school boards and assessment policies in colleges. Technically, the Procedures and Timelines document says it’s the college full disclosure policy that applies. It’s the college mark that applies. It’s been implied from the beginning that if a student did not pass the college course, they shouldn’t be getting credit for the high school course, but there’s been some argument against this, i.e. if the student’s mark is high enough, the student should receive the high school credit. However, there are some technical difficulties involved in doing this. SCWI knows this is a “hot” issue, but needs to determine the scope of the issue. If it’s a small number of students, then perhaps it can be handled locally, but if it’s a large number of students then we are going to have to have a policy.

For example, Seneca reported a course where the students must pass the final exam in order to pass the course. In some cases, students who were getting high marks (70-80%) would fail the course. This situation raised a “huge” issue.

Some discussion ensued. The whole issue of assessment needs to be explored further. Credit integrity is essential.

4. OnSIS Data Report

For those in the boards, Phil brought copies of the Verification Report numbers. There are two Verification reports that can be run at the school/board level that will allow you to check what data is in there for your dual credit students. So, that would allow you to make sure that the schools that are participating are actually reporting students and that the numbers are close to the reality.

We know that the 08-09 data is “deeply flawed”, but at this point it cannot be corrected. The problem is that the OnSIS data is more and more the data the Ministry will use publicly, and it is the OnSIS data that is drawn from source, so nobody on a planning team has to collect and report it. The data is entered on an individual student basis into the school’s SMS system and then reported to OnSIS.

When the data reported by the RPTs is close to, or the same as, the OnSIS data, the RPTs will no longer have to report it.
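One way to check how close the two sources are is to compare them by delivery type. The sketch below uses the 08/09 student counts from the summary table in Appendix B. The 5% tolerance is a hypothetical threshold chosen for illustration only, since the notes do not define how close is "close", and the function name `compare` is likewise illustrative.

```python
# Student counts by delivery type, copied from the summary table in Appendix B.
ONSIS = {"college course (5)": 533, "apprenticeship (6)": 107, "team-taught (3)": 1}
LOCAL = {"college course (5)": 555, "apprenticeship (6)": 120, "team-taught (3)": 76}

# Hypothetical threshold: not taken from the notes or from any Ministry guidance.
TOLERANCE = 0.05


def compare(onsis, local, tolerance=TOLERANCE):
    """Return (type, OnSIS count, local count, shortfall, within tolerance) per delivery type."""
    rows = []
    for delivery_type, local_count in local.items():
        onsis_count = onsis.get(delivery_type, 0)
        shortfall = local_count - onsis_count
        relative = shortfall / local_count if local_count else 0.0
        rows.append((delivery_type, onsis_count, local_count, shortfall,
                     abs(relative) <= tolerance))
    return rows


report = compare(ONSIS, LOCAL)
for delivery_type, onsis_count, local_count, shortfall, close in report:
    status = "close" if close else "needs follow-up"
    print(f"{delivery_type}: OnSIS {onsis_count}, local {local_count}, "
          f"shortfall {shortfall} ({status})")

total_onsis = sum(ONSIS.values())
total_local = sum(LOCAL.values())
print(f"Total: OnSIS {total_onsis}, local {total_local}")
```

Breaking the comparison out by delivery type makes it easier to see where the OnSIS shortfall is concentrated instead of only looking at the totals.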

If there are specific concerns at any time about what you’re trying to report to OnSIS, if you run the report and have a problem, let Phil know.

One difficulty with OnSIS is that it is three “snap shots” at three periods in time (October 31st, March 31st, and June 30th) and scheduling for dual credits isn’t the same.

Dual Credits can be reported through class enrolment. If it’s on the student’s timetable and a teacher’s name is associated with it, then it works automatically, the same as any other course.

SCWI knows this can’t always happen. But a dual credit can also be reported through “Other Credits”. Sometimes when the reporting is done through Other Credits, it’s not picked up by OnSIS. Other Credits should pick up a course no matter when it was completed. Whether or not it fits the schedule doesn’t matter with Other Credits. For example, Other Credits is what you use to report “External Music”.

Some discussion ensued about the basis of funding.

5. SMART Goals Form

The SMART Goals Template has been pre-populated with data from the RPT data that was submitted.

There are some things on the form that need to be changed. For example, in Item 1, “# of schools involved”, the number “84” doesn’t mean very much. It might be more useful to ask, “Of the board partners, what percentage of schools are involved for each board that’s participating?” This is information the board partners could provide. It would be useful then to say dual credits are offered in 8 out of 8 schools, or 1 out of 8. There may be many reasons this is happening, but it would then be something to look at.

We should ask two questions: (i) In how many schools are dual credits offered? (ii) In how many schools are students participating? Right now, the data will not tell us, out of the 800 or so secondary schools, how many are actually participating. This would tell us if we are getting close to capacity.

Would it be possible to break Item 2, “# of Dual Credit Programs”, down to number of students in each program? Some programs might have as few as five students; others might have several hundreds.

The term dual credit “program” is problematic. One DCP might involve just one course; in other cases, several courses. So, the number of programs doesn’t really represent the growth because in some cases courses were added to existing programs in order to get them approved.

Item 3 might be useful “down the road” in encouraging/assisting an RPT to try or strengthen a particular approach.

Items 4 and 5 are a little “fuzzy”. The reason the data is needed is to show we are reaching the target group for whom this program was designed. There is no “magic number” here; we’re just using it for information.

Whenever there’s an opportunity, we need to say to SCWI, we’ve noticed that our numbers are off here, but here’s why. (a) We’re paying attention to it, and (b) Here’s an explanation.

Item 7a shows the Overall Retention Rate. Item 7b, using our “flawed” definition of Starting and Ending, then shows the Retention Rates for all of the programs. One program is missing, the TTOP project, which was missed because its data was submitted after the due date. RPT Reps need to focus on getting all of the data and getting as good data as possible, and if there are gaps let SCWI know so they can follow up to make sure that when things are “rolled up” and the numbers are published they are based on the best information.

Where retention rates are lower, is it known why they are lower? Can something be done to address that, or is this the nature of that group? SCWI is not about to judge programs by retention rate.

The form does not show isolated data for students who took secondary school work at a college.

School Within a College programs will be flagged on the Final Data Report so we will be able to collect better information on that.

Retention Rates and Success Rates might be good areas to start formulating SMART Goals.

Item 9 is information everybody would like to have. The hope is that, this time next year, we will have data from OCAS (Ontario College Application Service) and OUAC (the Ontario Universities’ Application Centre), in addition to the OnSIS Report and the Planning Team data, that will say here’s the number of students from this planning team, broken out by board, who participated in dual credits in 09-10 and who applied to, were granted admission, and registered in a college in 10-11.

SCWI is requesting submission of SMART Goals by June 30. The intent is to pool the information and strategies that are out there to get a sense of what other teams are doing, what’s “do-able”, what others have had trouble with, and what others have had success with.

Item 11 on Selection Criteria relates primarily to the boards. SCWI wants to be able to demonstrate, to the college partners in particular, that we are not just selecting students at random; that we are selecting students one at a time; that there is a process involved; and that the process is known. We don’t want to be subject to someone saying, “Oh, you’re just dumping kids into this program.”

Item 12 asks for three SMART Goals that will guide programming for next year. The goals should be “Specific, Measurable, Attainable, Realistic and Time Bound.” The goals should be related to programs, not individual student achievement. For example, one SMART Goal might be to focus on the gap between approved and actuals, to reduce the gap from 11% to 9%. Next year, SCWI wants to update this document from the RPT Data Report so that, in the fall, they can give the RPTs a version that starts with their SMART Goals and includes two years of updated data for each of these areas. We want to be able to say to funders that there’s a process in place and there’s accountability in place.

6. Draft SMART Goals

Discussion was opened to the group. If participants were thinking of any potential area where a goal might be formulated, they were asked to bring it forward.

One area suggested was related to Item 6, Approved Participants vs. Actual, where the number of Approved Participants essentially doubled from 08-09 to 09-10. It’s uncertain that the Actual number will also double. So an example of a goal might be to measure the results, evaluate them, and provide reasons the numbers weren’t achievable.

At this point, the Ministry does not have any targets/thresholds for retention, and if/when it does, much further down the road, they will have to vary according to various factors, such as whether the program is new, etc.

SCWI will meet on May 18th to discuss the change process.

The gap between Approved and Actual may not be all that meaningful for 09-10 because the possibility of a college strike was looming and a lot of people just didn’t want to get involved in it.

It is important to make the Ministry aware of this kind of issue when data is submitted. The analysis of the report will be done by a research person in the Ministry who is not a college or school-based person, and who has little or no direct experience with dual credit programs. That, in itself, is an advantage because she is looking just at the data, but she is not going to know any of the reasons behind the numbers. This information could go in the “Lessons Learned” section.

Everyone was encouraged to take away these documents and our discussion, and at our next meeting SMART Goals will be on the Agenda. At that point, we should be able to identify some goals for our RPT, and look at how we’re going to measure them.

The final version of the pre-populated form for the 09-10 Data Report will be issued this week. Some of the Items will be clarified, but nothing new will be asked. As soon as CGTAT receives the form, it will be distributed to members and a copy placed on the CGTAT website.

It may not be a SMART Goal exactly, but it was suggested we come up with a process that better allows us to learn from each other.

Within each college and the work that we do with our board partners, there are roles that are better defined today than they were several years ago. One example is the role of the Dual Credit Teacher, which is the board person. But the colleges now have people who are organizing, coordinating, and managing dual credits who come from a wide variety of areas and experiences. Would it be a potential goal to better define and document these roles? It’s not going to be a number or a change in a percentage.

We all register students. What does this process look like? Are there commonalities, best practices? Is there a way to come up with a common registration form? Is this another example of a potential goal?

The response was that there don’t have to be three goals directly related to the report. If there is some reference to the report, that’s good. Things like refining roles, registration processes, may be able to connect with program quality, student success, and so on. The goals have to be something that makes sense to this RPT.

Participants were invited to stay after the main meeting to discuss goals in small groups.

A question was asked re funding of textbooks/equipment for students. After some discussion, it was suggested this question be addressed to our Apprenticeship Sub-Committee.

The Agenda for next meeting will include SMART goals, Apprenticeship issues, Final Report, and Invoicing.

7. Other Business

Today’s Agenda and our “Important Dates” document are in error. Our next meeting is Monday, June 21st, not June 22nd.

Next Meeting

Monday, June 21, 2010

Humber ITAL, North Campus

Residence Conference Room (T123)

12:00 – 2:00 Main Meeting

2:00 – 4:00 Room available for pre-arranged sub-committee meetings and pre-arranged board/college partnership meetings.

Appendix A – RPT2 vs. Prov 08-09 School Year Data Report

Connecting GTA Teachers RPT – 08/09 School Year Data

In the 08/09 school year, Connecting GTA Teachers RPT had 27 Dual Credit programs in place. While 991 students were approved for enrolment in the 27 Dual Credit programs, actual enrolment was reported as 751 students. Enrolment almost doubled compared to the previous year when 376 students were enrolled.

Age Distribution

– Age distribution was consistent with the provincial average, with the highest number of students being 17 and 18 years old.

– In 08/09, 17 and 18 year olds accounted for 73% of the Dual Credit students in Connecting GTA Teachers RPT (Figure 1)

Figure 1

Disengaged and/or Underachieving and Students Previously Dropped Out

– 42.9% (322 students) were disengaged and/or underachieving prior to entering the Dual Credit program – slightly higher than the provincial average (40.4%)

– Connecting GTA Teachers RPT reported that 6.9% (52 students) of the Dual Credit students had previously dropped out while the provincial average was 11.31%.

Figure 2

Figure 3

Students Identified Through an IEP or IPRC

– In the 08/09 school year, 22.5% (169 students) had an Individual Education Plan while the provincial average was 19.1%. (Figure 4)

– 13.6% (102 students) were identified through an IPRC process compared to 15.6% at the provincial level. (Figure 5)

Figure 4

Figure 5

Success and Retention Rates for Dual Credit Programs

Retention rate represents the percentage of students who participated in the program from start to completion. These students didn’t necessarily successfully complete the program.

The provincial retention rate for Dual Credit programs stayed almost the same for both school years, at approximately 85%.

The retention rate for students in Dual Credit programs in Connecting GTA Teachers RPT was 86.6% (Figure 6)

Student success rate represents the percentage of secondary school credits attempted that were successfully completed.

In the 08/09 school year, the provincial student success rate for Dual Credit programs was 79.1% while for students enrolled through Connecting GTA Teachers RPT, the success rate was 81.5%.
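The two definitions above reduce to simple ratios. As a minimal sketch, the following reproduces the RPT figures from the totals that appear in Appendix C (751 students at start and 650 at end; 1159 potential credits and 944 credits earned). The function names are illustrative only.

```python
def retention_rate(students_start: int, students_end: int) -> float:
    """Percentage of students who stayed in the program from start to completion."""
    if students_start <= 0:
        raise ValueError("students_start must be positive")
    return 100.0 * students_end / students_start


def success_rate(credits_attempted: int, credits_earned: int) -> float:
    """Percentage of secondary school credits attempted that were successfully completed."""
    if credits_attempted <= 0:
        raise ValueError("credits_attempted must be positive")
    return 100.0 * credits_earned / credits_attempted


# Totals for Connecting GTA Teachers RPT, 08/09, taken from Appendix C.
rpt_retention = retention_rate(students_start=751, students_end=650)
rpt_success = success_rate(credits_attempted=1159, credits_earned=944)

print(f"Retention rate: {rpt_retention:.2f}%")
print(f"Success rate:   {rpt_success:.2f}%")
```

Note that retention is counted per student while success is counted per credit, so the two rates use different denominators and are not directly comparable.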

Figure 6

Figure 7

Dual Credit Programs/ Students by Approach

Team-Taught At Secondary School Or College (3)

3 programs were offered using the “Team-taught level 1 apprenticeship at secondary school” approach. This represented 10.12% of the number of students in Dual Credit programs (76 students)

Retention rate for this approach was 97.4% while 89.5% of credits attempted were successfully completed

College-Delivered Course at College (5)

17 programs were offered through “College-delivered course at college” approach. This accounted for 74.1% of the total number of dual credits students (555 students)

Retention rate was recorded at 82.3% while 74.1% of the credits attempted were successfully completed

College-Delivered Level 1 Apprenticeship at College Location (6A)

7 programs were offered through the “College-delivered level 1 apprenticeship at college location” approach. This represented 16% of total number of dual credit students (120 students)

Retention rate was recorded at 99.2% while 90.7% of credits attempted were successfully completed

Appendix B – OnSIS Data Report 08/09

Number of Credits Earned and Number of Students Reported to OnSIS, 08/09 School Year

| Board Name | School Name | Earned Credits | Students |
|---|---|---|---|
| Toronto DSB | Bendale Business & Technical Institute | 5 | 11 |
| Toronto DSB | Birchmount Park Collegiate Institute | 7 | 15 |
| Toronto DSB | Danforth Collegiate Institute and Technical School | 1 | 3 |
| Toronto DSB | David and Mary Thomson Collegiate Institute | 11 | 17 |
| Toronto DSB | Don Mills Collegiate Institute | 15 | 22 |
| Toronto DSB | Downsview Secondary School | 1 | 1 |
| Toronto DSB | Kipling Collegiate Institute | 2 | 2 |
| Toronto DSB | Lakeshore Collegiate Institute | 1 | 2 |
| Toronto DSB | Lester B Pearson Collegiate Institute | 4 | 4 |
| Toronto DSB | Nelson A Boylen Collegiate Institute | 9 | 9 |
| Toronto DSB | Newtonbrook Secondary School | 2 | 2 |
| Toronto DSB | North Albion Collegiate Institute | 2 | 3 |
| Toronto DSB | Northview Heights Secondary School | 7 | 3 |
| Toronto DSB | Parkdale Collegiate Institute | 30 | 34 |
| Toronto DSB | R H King Academy | 7 | 5 |
| Toronto DSB | Scarborough Centre for Alternative Studi | 6 | 6 |
| Toronto DSB | Thistletown Collegiate Institute | 13 | 17 |
| Toronto DSB | Timothy Eaton Business & Technical Institute | 2 | 2 |
| Toronto DSB | Vaughan Road Academy | 10 | 16 |
| Toronto DSB | West Humber Collegiate Institute | 2 | 2 |
| Toronto DSB | Western Technical & Commercial School | 23 | 31 |
| Toronto DSB | Westview Centennial Secondary School | 10 | 13 |
| Toronto DSB | William Lyon Mackenzie Collegiate Institute | 1 | 1 |
| Toronto DSB | York Mills Collegiate Institute | 1 | 2 |
| York Region DSB | Aurora High School | 11 | 20 |
| York Region DSB | Bayview Secondary School | 9 | 13 |
| York Region DSB | Dr G W Williams Secondary School | 7 | 10 |
| York Region DSB | Dr John M Denison Secondary School | 5 | 5 |
| York Region DSB | Huron Heights Secondary School | 2 | 2 |
| York Region DSB | Maple High School | 2 | 2 |
| York Region DSB | Markville Secondary School | 2 | 2 |
| York Region DSB | Middlefield Collegiate Institute | 2 | 4 |
| York Region DSB | Milliken Mills High School | 4 | 4 |
| York Region DSB | Newmarket High School | 0 | 1 |
| York Region DSB | Pierre Elliott Trudeau High School | 4 | 4 |
| York Region DSB | Richmond Green Secondary School | 0 | 1 |
| York Region DSB | Richmond Hill High School | 14 | 8 |
| York Region DSB | Sir William Mulock Secondary School | 0 | 3 |
| York Region DSB | Stephen Lewis Secondary School | 25 | 36 |
| York Region DSB | Stouffville District Secondary School | 3 | 5 |
| York Region DSB | Sutton District High School | 1 | 1 |
| York Region DSB | Thornhill Secondary School | 2 | 4 |
| York Region DSB | Westmount Collegiate Institute | 17 | 41 |
| Upper Grand DSB | Orangeville District Secondary School | 5 | 7 |
| Upper Grand DSB | Westside Secondary School | 2 | 3 |
| Peel DSB | Cawthra Park Secondary School | 4 | 5 |
| Peel DSB | Clarkson Secondary School | 3 | 6 |
| Peel DSB | Fletcher's Meadow Secondary School | 2 | 2 |
| Peel DSB | Gordon Graydon Memorial Secondary School | 5 | 6 |
| Peel DSB | Humberview Secondary School | 3 | 5 |
| Peel DSB | Lincoln M. Alexander Secondary School | 4 | 4 |
| Peel DSB | Peel Alternative North ISR | 16 | 10 |
| Peel DSB | Port Credit Secondary School | 2 | 1 |
| Peel DSB | Streetsville Secondary School | 15 | 16 |
| Peel DSB | T. L. Kennedy Secondary School | 2 | 1 |
| Peel DSB | The Woodlands Secondary School | 6 | 3 |
| Peel DSB | West Credit Secondary School | 3 | 1 |
| Halton DSB | Burlington Central High School | 2 | 1 |
| Halton DSB | Gary Allan High School - Burlington | 2 | 1 |
| Halton DSB | Lester B. Pearson High School | 12 | 6 |
| Toronto CDSB | Archbishop Romero Catholic Secondary School | 4 | 2 |
| Toronto CDSB | Bishop Marrocco/Thomas Merton Catholic Secondary S | 6 | 4 |
| Toronto CDSB | Blessed Mother Teresa Catholic Secondary School | 10 | 6 |
| Toronto CDSB | Don Bosco Catholic Secondary School | 1 | 2 |
| Toronto CDSB | Father Henry Carr Catholic Secondary School | 11 | 5 |
| Toronto CDSB | Father John Redmond Catholic Secondary School | 8 | 4 |
| Toronto CDSB | Jean Vanier Catholic Secondary School | 8 | 4 |
| Toronto CDSB | Madonna Catholic Secondary School | 13 | 11 |
| Toronto CDSB | Michael Power/St Joseph High School | 0 | 1 |
| Toronto CDSB | Msgr Fraser-Isabella | 6 | 10 |
| Toronto CDSB | Pope John Paul II Catholic Secondary School | 3 | 7 |
| Toronto CDSB | St Basil The Great College | 1 | 1 |
| Toronto CDSB | St Patrick Catholic Secondary School | 9 | 14 |
| York CDSB | Br André Catholic High School | 0 | 2 |
| York CDSB | Cardinal Carter Catholic Secondary School | 6 | 21 |
| York CDSB | Holy Cross Catholic Academy High School | 7 | 12 |
| York CDSB | Sacred Heart Catholic High School | 2 | 4 |
| York CDSB | St Elizabeth Catholic High School | 10 | 6 |
| York CDSB | St Jean de Brebeuf Catholic High School | 2 | 1 |
| York CDSB | St Joan of Arc Catholic High School | 6 | 3 |
| York CDSB | St Robert Catholic High School | 2 | 1 |
| York CDSB | St Therese of Lisieux Catholic High School | 5 | 3 |
| Dufferin-Peel CDSB | Archbishop Romero Catholic Secondary School | 22 | 48 |
| Dufferin-Peel CDSB | Ascension of Our Lord Secondary School | 2 | 2 |
| Total | | 514 | 641 |

Number of Students Reported Locally and to OnSIS, 08/09 School Year

| Delivery Type | OnSIS Reporting | Local Reporting |
|---|---|---|
| Dual credit: college delivered college course (5) | 533 | 555 |
| Dual credit: college-delivered apprenticeship program (6) | 107 | 120 |
| Dual credit: team-taught college course (3) | 1 | 76 |
| Total | 641 | 751 |

Appendix C – SMART Goals for RPT2

SCWI Regional Planning Team SMART Goals

March / April 2010

RPT # Connecting GTA Teachers RPT

__________________________________________________________

Date of visit:

Dual Credit Programs

1. # of schools involved

Proposed 2010-11 2008-09

84

2. # of programs

2008-09

27

2009-10

2009-10

58

3a. Programs by approach 2008-09

3 4A 4B 5

17

Proposed 2010-11

59

6A

7 3

3b. Programs by approach 2009-10

3 4A 4B 5

30 6

3c. Programs by approach 2010-11

6A

22

5

30

6A

24

3

4

4A

1

4. % from primary target group

4B

2008-09

42.88%

2009-10 Proposed 2010-11

5. % in SHSM

2008-09 2009-10

6a. Participants approved

2008-09 2009-10

Proposed 2010-11

Proposed 2010-11

6B

6B

6B

991

6b. Actual participants

2008-09

751

2113

7a. Overall Retention rate

2009-10

2008-09

86.6%

2009-10

7b. Range of retention rates by program

Program

Accel OYAP - Cook Br2

Accel OYAP- Elect Constr & Maint

AccelOYAP - AutoServTechn

AccelOYAP - Carpenter

AccelOYAP - ECE

AccelOYAP - Plumber

AccelOYAP - Res AC Sys Mech

Alternative Education

Business

Careers in Hospitality & Tourism 2

Cent + Scarborough Area SS

Centennial + D&M Thomson

Centennial + RH King SHSM

Customer Service

Deaf Studies Dual Credit

Discover Tourism

Hospitality & Tourism

Landscaping

Orangeville Project

Paths thru Adv Manuf Tech

Pathways thru Adv Elect Tech

Pathways to Accounting

Prescriptions for Health

SSOWT

STEPS to College

Technology

Transition to College

Total

Students

Start

19

23

18

23

15

14

8

17

12

13

18

16

13

13

51

26

6

15

17

14

37

5

48

163

62

64

21

751

Humber College

Centennial

Seneca

George Brown

Sheridan

7c. Top program by retention rate

Students

End

19

23

18

23

14

14

8

1

7

9

15

16

12

12

41

26

5

14

14

14

36

5

43

125

62

53

21

650

2424

Proposed 2010-11

Proposed 2010-11

Retention

Rates

100.00%

100.00%

100.00%

100.00%

93.33%

100.00%

100.00%

5.88%

58.33%

69.23%

83.33%

100.00%

83.33%

93.33%

82.35%

100.00%

92.31%

92.31%

80.39%

100.00%

97.30%

100.00%

89.58%

76.69%

100.00%

82.81%

100.00%

86.55%

Program

Accel OYAP - Cook Br2

Accel OYAP- Elect Constr & Maint

AccelOYAP - AutoServTechn

AccelOYAP - Carpenter

AccelOYAP - Plumber

AccelOYAP - Res AC Sys Mech

Centennial + D&M Thomson

Discover Tourism

Paths thru Adv Manuf Tech

Pathways to Accounting

STEPS to College

Transition to College

Students

Start

19

23

18

23

14

8

16

14

26

5

62

21

7d. Contributing factors

7e. Most challenging program by retention rate

Alternative Education - 5.88%

7f. Contributing factors

7g. Impact on future planning

8a. Overall Success rate

2008-09 2009-10

81.45%

8b. Range of success rates by program

Program

Accel OYAP - Cook Br2

Accel OYAP- Elect Constr & Maint

AccelOYAP - AutoServTechn

Potential

Credits

57

46

36

Credits

Earned

56

46

36

Students

End

19

23

18

23

14

8

16

14

26

5

62

21

Retention

Rates

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

100.00%

Proposed 2010-11

Success

Rates

98.25%

100.00%

100.00%

AccelOYAP - Carpenter

AccelOYAP - ECE

AccelOYAP - Plumber

AccelOYAP - Res AC Sys Mech

Alternative Education

Business

Careers in Hospitality & Tourism 2

Cent + Scarborough Area SS

Centennial + D&M Thomson

Centennial + RH King SHSM

Customer Service

Deaf Studies Dual Credit

Discover Tourism

Hospitality & Tourism

Landscaping

Orangeville Project

Paths thru Adv Manuf Tech

Pathways thru Adv Elect Tech

Pathways to Accounting

Prescriptions for Health

SSOWT

STEPS to College

Technology

Transition to College

Total

8c. Top program by success rate

1159 944

Program

Accel OYAP- Elect Constr & Maint

AccelOYAP - AutoServTechn

AccelOYAP - Plumber

Paths thru Adv Manuf Tech

Potential

Credits

46

36

28

40

Credits

Earned

46

36

28

40

8d. Contributing factors

8e. Most challenging program by success rates

Alternative Education – 5.88%

8f. Contributing factors

8g. Impact on future planning

6

15

17

28

12

13

18

16

46

30

28

16

17

222

5

48

163

62

64

77

13

13

51

40

5

11

14

26

7

8

12

12

40

25

28

4

1

194

4

43

97

47

53

71

10

12

42

40

Success

Rates

100.00%

100.00%

100.00%

100.00%

86.96%

83.33%

100.00%

25.00%

5.88%

58.33%

61.54%

66.67%

75.00%

83.33%

73.33%

82.35%

92.86%

76.92%

92.31%

82.35%

100.00%

87.39%

80.00%

89.58%

59.51%

75.81%

82.81%

92.21%

81.45%

9a. % dual credit participants who graduated

2008-09 2009-10

9b. % applied to pse

2008-09 2009-10

Proposed 2010-11

Proposed 2010-11

9c. % registered in pse

2008-09 2009-10 Proposed 2010-11

10. What other data are you collecting? How are you using/planning to use it?

11. To what extent is the draft selection criteria being used? How useful is it? What modifications would you suggest?

12. SMART goals for 2010-11 (overall? Program specific?)

a.

b.

c.

13. Challenges / Concerns / Feedback to be shared with the Ministry
