PDD

advertisement

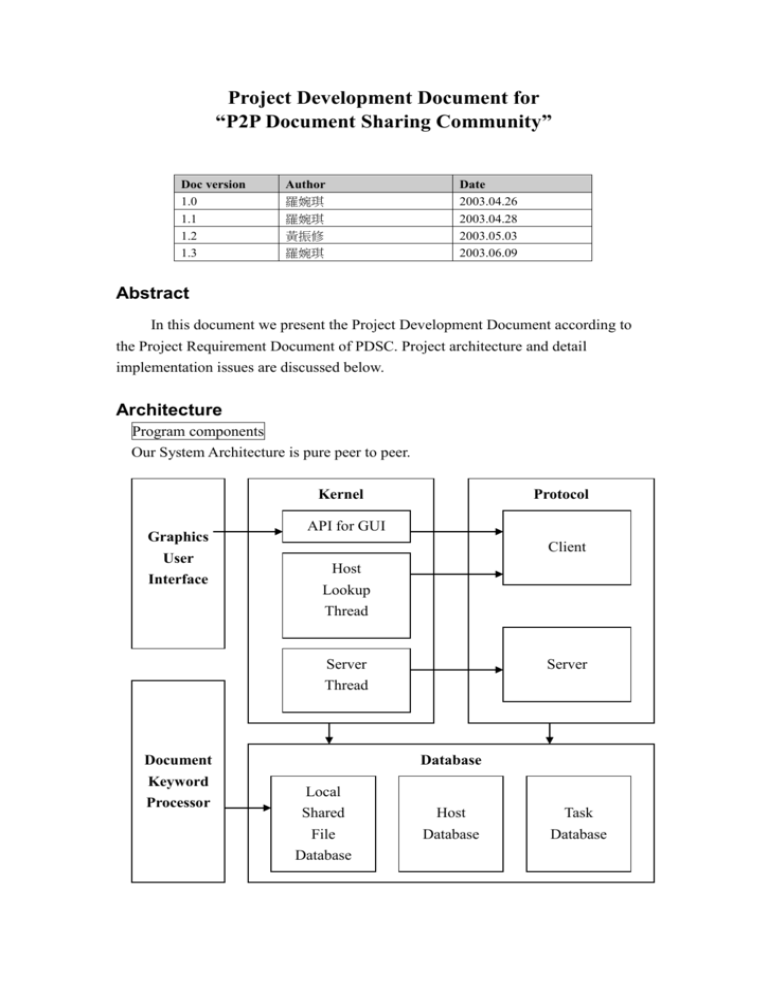

Project Development Document for "P2P Document Sharing Community"

| Doc version | Author | Date |
|---|---|---|
| 1.0 | 羅婉琪 | 2003.04.26 |
| 1.1 | 羅婉琪 | 2003.04.28 |
| 1.2 | 黃振修 | 2003.05.03 |
| 1.3 | 羅婉琪 | 2003.06.09 |

Abstract

This document presents the Project Development Document, which follows from the Project Requirement Document of PDSC. The project architecture and detailed implementation issues are discussed below.

Architecture

Program components

Our system architecture is pure peer to peer. The components are:

Graphic User Interface
Kernel: API for GUI, Host Lookup Thread, Server Thread
Protocol: Client, Server
Document Keyword Processor
Database: Local Shared File Database, Host Database, Task Database

Component description

Graphic User Interface: Passes user requests to the kernel by calling the corresponding APIs. These APIs return immediately, and the kernel reports results by posting messages to the GUI's window handler. Because the GUI must tell these messages apart, every request carries a unique id.

Kernel:

API for GUI: Called by the GUI. To return immediately, each request is handled in its own thread, which uses the client protocol to get what is needed and reports the result by message.

Host Lookup Thread: A thread that picks some hosts from the host database, uses the client protocol to get their host information, and pushes the results back into the host database.

Server Thread: A thread that serves incoming connections on the server socket. It creates a new thread for each connection. The new thread records the client host information, analyzes the request type, and calls the corresponding server protocol. If the protocol completes without error, the client host information is added to the host database.

Protocol:

Client: Protocol implementations for the client. Five functions are currently implemented: get host list, get file list, download, search, and response.
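As an illustration of how a server thread might analyze the request type, here is a minimal sketch. It assumes the first request line has the form `COMMAND hostname ip port\n`, as listed in the Work Flow section of this document. The names `RequestType`, `RequestLine`, `parseCommand`, and `parseRequestLine` are hypothetical and do not come from the project's source code.

```cpp
#include <sstream>
#include <string>

// Hypothetical names; the five commands are those listed in the Work Flow section.
enum class RequestType { GetHostList, GetFileList, Download, Search, Response, Unknown };

struct RequestLine {
    RequestType type = RequestType::Unknown;
    std::string hostname;
    std::string ip;
    int port = 0;
};

// Map the command word to a request type.
RequestType parseCommand(const std::string& word) {
    if (word == "GET_HOST_LIST") return RequestType::GetHostList;
    if (word == "GET_FILE_LIST") return RequestType::GetFileList;
    if (word == "DOWNLOAD") return RequestType::Download;
    if (word == "SEARCH") return RequestType::Search;
    if (word == "RESPONSE") return RequestType::Response;
    return RequestType::Unknown;
}

// Parse "COMMAND hostname ip port\n". Returns false if a field is missing,
// the port is not a number, or the command is not one of the five above.
bool parseRequestLine(const std::string& line, RequestLine& out) {
    std::istringstream in(line);
    std::string command;
    if (!(in >> command >> out.hostname >> out.ip >> out.port)) {
        return false;
    }
    out.type = parseCommand(command);
    return out.type != RequestType::Unknown;
}
```

A server thread could call `parseRequestLine` on the first line it reads and then dispatch on `type` to the matching server protocol function; an unknown or incomplete line would simply close the connection.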
The search function is special: it first forwards the request to some randomly chosen hosts, then searches the local shared file database, and finally sends a response if something is found. To avoid processing duplicate requests, it registers the request in the task database before forwarding; if the request is already registered, the function returns immediately. Forwarding is multi-threaded for speed. The other functions simply connect to the destination host directly.

Server: Protocol implementations for the server. There are five functions, corresponding to those of the client protocol. The search function receives the request and then calls the search function of the client protocol. The response function receives the response and passes the result to the kernel.

Document Keyword Processor: Maintains information about the shared documents. It monitors every file, recomputes the keyword vector when a document is modified, and then updates the local shared file database.

Database:

Local Shared File Database: Stores the keyword vector of each document under the shared directory and provides a search function that returns a file list. The search computes a score between the search keywords and each document's keyword vector and returns the files whose score is higher than a threshold.

Host Database: Stores the hosts in this community and provides basic management operations such as dump, merge, load, and save.

Task Database: Stores the requests received by the server and provides a few operations to check whether a request already exists.

Protocol specification

| Name | Specification |
|---|---|
| hostname | host name. |
| ip | IPv4 network address, in a.b.c.d format. |
| port | service port. |
| time | time of last communication. |
| task | unique id for a search request. |
| key | search keywords. |
| count | total record count. |
| pathname | path name. |
| filename | file name. |
| filetype | file type: 1 for directory, 0 for file. |
| ctime | time of last file status change, in seconds. |
| mtime | time of last data modification, in seconds. |
| lsize | low four bytes of the file size, as an unsigned integer. |
| hsize | high four bytes of the file size, as an unsigned integer. |
| data | file data, transmitted in binary. |
| # | everything after # is a comment. |

Note: 'hsize' is not fully implemented, because not every file system can store files larger than 4GB.

Former edition. System architecture: our system architecture was client-server. Each client program got its information from the server side, and only file downloads and uploads were peer to peer.

Work Flow

In each exchange below, [Client] marks a message sent by the requesting peer (Client A in the diagrams) and [Server] marks a message sent by the responding peer (Client B).

Get host list:
1. GET_HOST_LIST hostname ip port\n [Client]
2. time count\n [Server]
   (repeat step 3 "count" times)
3. hostname ip port time\n [Server]
4. OK\n [Server]

Get file list:
1. GET_FILE_LIST hostname ip port\n [Client]
2. pathname\n [Client]
3. count\n [Server]
   (repeat steps 4 and 5 "count" times)
4. filename\n [Server]
5. filetype hsize lsize ctime mtime\n [Server]
6. OK\n [Server]

Download:
1. DOWNLOAD hostname ip port\n [Client]
2. pathname/filename\n [Client]
3. lsize\n [Server]
4. data [Server]
5. OK\n [Server]

Search:
1. SEARCH hostname ip port\n [Client]
2. hostname ip port task\n [Client]
3. key\n [Client]
4. OK\n [Server]

Response:
1. RESPONSE hostname ip port\n [Client]
2. hostname ip port task\n [Client]
3. count\n [Client]
   (repeat steps 4 and 5 "count" times)
4. pathname/filename\n [Client]
5. filetype hsize lsize ctime mtime\n [Client]
6. OK\n [Client]

Former edition (client-server protocol)

In the exchanges below, → marks a message from Client A and ← marks a reply to Client A.

Register
1. Connect to Server with no UID. [Client]
2. Server sends a UID to Client. [Server]

Protocol 00: connect to Server. Protocol 01: Server sends a UID to Client A.

First connection to the Server; the client does not have an md5 key yet.
→ HELLO "" "Maggie"\n .
← HELLO "efx827ad3" "Maggie"\n .
P.S. The Server adds the account, md5 key, and IP to the Computer List.

Connect
1. User connects to server. [Client]
2. Server checks the UID of this computer. [Server]
3. Server updates some information in the Computer List. [Server]
P.S. The Computer List contains UID, IP, file list, and file key.

Protocol 02: connect to Server. The Server checks the UID of Client A and updates some information.

Not the first connection to the Server; the client already has an md5 key.
→ HELLO "efx827ad3" "Maggie"\n .
← HELLO "efx827ad3" "Maggie"\n .
P.S. The Server updates the IP in the Computer List.

Browse Computer List
1. Connect to Server. [Client]
2. Server sends its Online Computer List. [Server]
3. Client shows the result. [Client]

Protocol 03: connect to Server. Protocol 04: send Online Computer List.

→ BWCOMP\n .
← BWCOMP OK\n
  "Maggie"\n
  "Apple"\n
  "Tony"\n .

Browse File List
1. Client requests one computer from the Online Computer List. [Client]
2. Server sends the file information of the requested computer. [Server]
3. Client shows the result. [Client]

Protocol 05: request one computer. Protocol 06: send file information.

→ BWRES "Maggie"\n .
← BWRES OK\n
  "paper.doc" "2003 年 4 月 28 日" "12KB"\n
  "demo.ppt" "2003 年 4 月 10 日" "220KB"\n
  "3DmodelRetrieval.pdf" "2002 年 11 月 25 日" "5MB"\n
  "presentation0122.ppt" "2003 年 1 月 22 日" "34KB"\n .

Search
1. User enters the keyword; the client sends it to the Server. [Client]
2. Server searches all of its file information for the keyword. [Server]
3. Server sends the search result to Client. [Server]
4. Client shows the result. [Client]

Protocol 07: send keyword. Protocol 08: send search result.

→ SEARCH "Mpeg7" "Maggie"\n .
P.S. "Mpeg7" is the keyword; "Maggie" is the account name, which is optional.
← SEARCH OK\n
  "Maggie" "Mpeg7tutorial.pdf" "2002 年 7 月 24 日" "7MB" "98"\n
  "Apple" "Mpeg7encoder.doc" "2002 年 10 月 8 日" "56KB" "95"\n
  "Maggie" "Mpeg7decoder.doc" "2002 年 11 月 27 日" "110KB" "92"\n
  "Tony" "decoder.doc" "2002 年 11 月 28 日" "110KB" "60"\n .

Download
1. Connect to Server for IP.
2. Server sends IP to Client A.

Protocol 09: connect to Server and ask for IP. Protocol 10: send IP.

→ GETIP "Maggie"\n .
← GETIP OK\n
  "140.112.223.35"\n .

1. Connect to a certain computer (Client B). [Client]
2. Client B sends the file to Client A. [Client]

Protocol 11: connect to Client B. Protocol 12: Client B's reply (the diagram labels this step "Search all file information. Send search result", apparently carried over from the Search diagram).

→ GET "Mpeg7decoder.doc"\n .
← Char(0) [File Length Char] (0) [Binary File Data]
← Char(1)
P.S. Char(0) means the file exists; Char(1) means the file no longer exists.

Upload
1. Connect to a certain computer (Client B). [Client]
2. Client B checks whether it can permit uploading. [Client]
3. Client B sends the result to Client A. [Client]
4. Client A sends the file to Client B, or does not. [Client]

Protocol 13: connect to Client B. Protocol 14: send the result. Protocol 15: send file to Client B.

→ CanSend\n .
← CanSend OK\n .
← CanSend FAIL\n .
If the reply is CanSend OK:
→ Send "Mpeg7decoder.doc" \n .
← Send OK\n .
→ Char(0) [File Length Char] (0) [Binary File Data]
→ Char(1)

Update
1. A hook event is received, which means the shared directory has been modified. [Client]
2. Reprocess the Client shared directory. [Client]
3. Client sends information and file list to server. [Client]
4. Server indexes this information. [Server]
Protocol 16 (former edition): Client A sends the information to the Server, and the Server indexes it.

→ ADDFILE "Maggie" "Mpeg7decoder.doc" "2002 年 11 月 27 日" "110KB" [document vector]\n .
← ADDFILE OK\n .
→ DELFILE "Maggie" "Mpeg7decoder.doc" \n .
← DELFILE OK\n .

Development Tools/Environment

BCB 5.0
Microsoft Visual C++ 6.0
Whole Tomato Software Visual Assist 4.1
CVS server, with WinCvs 1.2 as client

Related Survey

1. Monitoring Windows events (hook)
2. Index Algorithm
3. File Format of PDF

Component Description

Document Digest

Document digest means extracting the representative portion of a shared document for later searching. First we need to convert the supported document formats (Word, PowerPoint, and PDF files) into plain text. We surveyed several open source projects for this kind of conversion:

xlhtml (http://chicago.sourceforge.net/xlhtml/)
word2x (http://www.gnu.org/directory/text/wordproc/word2x.html)
wvWare (http://www.wvware.com/)
antiword (http://www.winfield.demon.nl/)
xpdf (pdftotext) (http://www.foolabs.com/xpdf/download.html)

Of these tools, we decided to use xlhtml for PowerPoint files, antiword for Word files, and pdftotext for PDF files. Because most of these projects originated on the Unix platform, we also need to port them to Win32 and rebuild them as libraries that can be linked with our other modules. In addition, we use the libiconv library to convert the Unicode text in office documents into Big5 encoding. We are not going to implement Big5 searching in the initial version, so Big5 characters are filtered out.

After that, we parse the plain text to get the words for analysis. We selected the 1764 most common English words, which are filtered out during the word frequency analysis, and the Porter Stemming Algorithm is used in this digest process.
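The word frequency step can be sketched as below. This is only an illustration under stated assumptions: the function name `makeDigest` is hypothetical, the stop-word set is passed in by the caller (the project's list of 1764 words is not reproduced), Porter stemming is omitted, and each word's share is assumed to be taken over the words that remain after stop-word filtering. The output pairs each word with its share of the total frequency in percent, in the `word:percent` form used by the digest.

```cpp
#include <algorithm>
#include <cctype>
#include <map>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Build a "word:percent" digest from plain text (hypothetical helper).
// Stop words are skipped; stemming is not performed in this sketch.
std::string makeDigest(const std::string& text, const std::set<std::string>& stopWords) {
    typedef std::pair<std::string, int> Entry;
    std::map<std::string, int> counts;
    int total = 0;
    std::string word;

    // Count lowercase words made of letters, digits, and inner hyphens.
    for (std::size_t i = 0; i <= text.size(); ++i) {
        unsigned char c = (i < text.size()) ? static_cast<unsigned char>(text[i]) : ' ';
        if (std::isalnum(c) || (c == '-' && !word.empty())) {
            word += static_cast<char>(std::tolower(c));
        } else {
            if (!word.empty() && stopWords.count(word) == 0) {
                ++counts[word];
                ++total;
            }
            word.clear();
        }
    }

    // Most frequent words first; ties stay in alphabetical order.
    std::vector<Entry> entries(counts.begin(), counts.end());
    std::stable_sort(entries.begin(), entries.end(),
                     [](const Entry& a, const Entry& b) { return a.second > b.second; });

    std::string digest;
    for (const Entry& e : entries) {
        int percent = (e.second * 100 + total / 2) / total;  // rounded share in percent
        if (!digest.empty()) digest += ' ';
        digest += e.first + ":" + std::to_string(percent);
    }
    return digest;
}
```

For the input "Video coding and video transcoding" with "and" as the only stop word, four words remain, so "video" accounts for 50% and the other two words for 25% each.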
A typical document digest may look like this:

coding:3 transcoding:3 reduction:2 motion:2 video:2 scalable:2 resolution:2 spatial:2 temporal:1 bit-rate:1 dct:1 coefficients:1 vector:1 data:1 drift:1 residual:1 frame:1 architecture:1 vectors:1 blocks:1 ...

We chose the simplest implementation: a word's frequency is taken as its importance. Each parsed word is followed by a number that gives its frequency relative to the sum of all words' frequencies. For example, "coding:3" means that the word "coding" accounts for 3% of the total frequency.

Document Search/Ranking

Next we design a ranking algorithm for searching these document digests. If the keyword is not found in the document digest, the document gets zero points. If the keyword is found, we take its frequency, multiply it by 100, and add a position bonus: 100 minus the location of the match relative to the length of the document digest, expressed as a percentage.

points = frequency * 100 + (100 - position / length * 100)

For example, searching for "transcoding" in the document digest above (here the match is at position 9 and the digest length is 7400) gives:

3 * 100 + (100 - 9 / 7400 * 100) = 400 (approximately)

Multiple keywords are combined with AND logic. Each keyword's points are normalized by the highest points, and the average of the normalized points is then taken. If only one keyword is supplied, no normalization is performed.

The typical calling convention is:

    CDocDigest dg_doc;
    dg_doc.startDiest("transcd_ov.ppt");
    CDocSearch search;
    search.setDigest(dg_doc.getDigest());
    int point = search.Search("mpeg-4 transcoding");
    // points are returned for each document digest, so the server
    // can sort the documents according to their points

Sharing Module

I am in charge of using the Digest / Modify / Share objects and making them work together with the Client / Server. The Digest function filters the common words out of a file and leaves the concentrated, useful words in the file's digest.
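The single-keyword ranking rule from the Document Search/Ranking section can be sketched as follows. This is a rough illustration, not the actual `CDocSearch` implementation: the function name `scoreKeyword` is hypothetical, the digest is assumed to be a space-separated string of `word:frequency` entries, and the position is assumed to be the character offset of the matching entry within that string.

```cpp
#include <string>

// Score one keyword against a digest string such as "coding:3 transcoding:3".
// Returns 0 when the keyword is absent; otherwise
// frequency * 100 + (100 - position / length * 100).
double scoreKeyword(const std::string& digest, const std::string& keyword) {
    std::size_t pos = 0;
    while (pos < digest.size()) {
        std::size_t end = digest.find(' ', pos);
        if (end == std::string::npos) end = digest.size();

        // Each entry is "word:frequency"; compare the whole word, not a substring.
        const std::string token = digest.substr(pos, end - pos);
        const std::size_t colon = token.rfind(':');
        if (colon != std::string::npos && token.substr(0, colon) == keyword) {
            const int frequency = std::stoi(token.substr(colon + 1));
            const double location =
                100.0 * static_cast<double>(pos) / static_cast<double>(digest.size());
            return frequency * 100.0 + (100.0 - location);
        }
        pos = end + 1;
    }
    return 0.0;
}
```

Matching whole entries rather than raw substrings is a design choice of this sketch: it keeps a search for "vector" from being satisfied by the entry "vectors:1". Combining several keywords with AND logic and normalization is left out.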
The Search function uses the keyword typed by the user to search the digest and grades the file with a point value that reflects how often the keyword appears in the file. My job, then, is to find all files under the share root specified by the user and to record their digests and related information in a list, setd. When the user closes the program, the records in the list are saved to a save_file. The next time the user opens the program, the information and digests saved last time are loaded from this save_file into setd. Note that the user may have changed files under the root in the meantime, so every file in the root must be checked against the records in setd immediately.

A user may also delete, create, or modify files in the root while the program is running, so we have to detect any change immediately. To do this, I use FileChangeNotify.cpp to monitor every file in the root against the setd list; if anything has changed, setd is updated immediately.

As for Search: the digest and related information of each file in the root are already recorded, so I simply grade every file by its digest and sort the files by points. We also set a threshold; any file whose points exceed the threshold is sent to the server.

My code is in digest.h / digest.cpp. The following three components were written by Chang-Chiu; I only use them.
FileChangeNotify: monitors a root for any change.
CDocDigest: gets the digest of a file.
CDocSearch: grades a file by its digest.

The functions in digest.cpp are described below.
digest::digest(): Checks whether the file used to save the setd records last time exists.
void digest::downloadfile(): If the save_file exists, loads its records into the setd currently in use.
digest::~digest(): When the user closes the program, writes the records of setd to the save_file and writes 1 to begin_data.txt to show that the setd records were saved successfully.
void digest::setdigestdata(string shared_path, string db_path): Stores the root and the name of the file that holds the records in two variables, shared_path and save_file. Whether to run initialall or compare is decided by whether the save_file exists.
void digest::initialall() / void digest::findfile(string npath, string dipath): Finds all files in the root, computes the digest and related information for each, and saves them in the setd list. Two functions are used because FindFirstFile, the function we use to find files, only searches one directory level. To find files across nested folders we have to recurse, and the second function makes the variables easier to handle. Files other than .ppt / .doc / .pdf are filtered out.
void digest::compare() / void digest::findcomfile(string npath, string dipath): Checks whether anything has changed in the root; if so, finds the changed file and modifies its record in setd.
void digest::searchkey(char* key): Uses the key typed by the user to grade every file and give it points, then sorts the files by points. If a file's points are greater than the threshold we set, the file is sent to the server.
void digest::change_shared_path(string newpath): Handles the case where the user changes the share root while using our program.

UI Design

Both the URL and KeyWord Edit Components can be executed just by pressing "Enter". Because some users may not know this, we still put a "Search" button beside the Edit Component.

The function list changes dynamically according to the component selected in the List. For example, if the component is a "computer" or a "folder", right click only offers "Get File List".
If the component is a "file", right click only offers "Download".

Task Assignment

1. Communication Protocol between Client and Server. - ID5
2. Client. - Maggie
3. Server. - ID5
4. File Format of PDF, Index Algorithm. - 振修
5. Digest, Hook. - 宜儒
6. Test PDF, PPT component. - Slater
7. Test Doc. - 燕君

Technical Issue

1. Peer to Peer, or Client-Server Architecture?

Because we chose to do "Full Text Search", and considering search efficiency, we decided on a peer to peer system architecture.

Former edition. Peer to Peer has a synchronization problem. When a client joins the system for the first time, how can all the other clients learn of it? How do we lock the process that is modifying the Computer List? After discussion, we concluded that a single Server would be easier to manage and the program easier to write. In addition, different Servers can represent different groups.

2. How to solve the problem of non-fixed IP Clients?

After the first handshake with the Server on entering the system, the Server returns a unique key (e.g. an md5 hash). On every later entry into the community, the client presents this key during the handshake, so the Server can identify the client by its key and simply update its IP record. This can be handled entirely inside the protocol, so the user notices nothing. Ready-made libraries exist for md5 hashing.

3. How to update the information in our indexes?

We use a "hook" to monitor the share folder. If the system detects that the share folder has been modified, it signals an event to our application, which then digests those files immediately.

Former edition. The original idea was to check every one or two hours whether the shared directory had been changed and, if so, to rescan it and extract the indexes, but this approach is not timely. A technique called "hook" lets Windows monitor the directory for us: when a modification is detected, an event is raised immediately, and the Client program rescans and extracts the indexes on receiving it. Updates become more timely, and many unnecessary checks are avoided.

4. Protocol for "Update"?

The document index vector should be computed on the client side. On update, then, besides updating the file list, how should the vector be uploaded as well? For now it is tentatively appended directly after the ADDFILE command; we will discuss this again once the Index Algorithm is ready.

Memo

Former edition. P.S. The Server must periodically clean up accounts that have not been used for a long time!

Document ends here