Chapter text

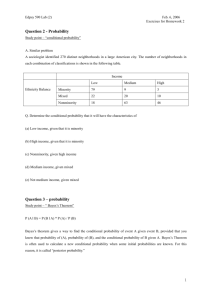

advertisement

Chapter 3

Conditional Probability and Independence

3.1 Introduction

Conditional probability is useful for two reasons:

(1) for computing probabilities when partial information is available;

(2) as a tool for computing other probability values.

3.2 Conditional Probability

Example 3.1 -- In tossing two dice in order, given that the first die shows 3, what is the probability that the sum of the two dice is larger than 7?

Solution 1 (by intuition):

With the 1st die known to be 3, the 2nd die must be 5 or 6 to get a sum larger

than 7.

So, for the second die, the relevant event E = {5, 6}.

The sample space for the second die is S = {1, 2, 3, 4, 5, 6}.

So, the desired probability is P(E) = 2/6 = 1/3 by Fact 2.3 of the last chapter, restated below:

P(E) = (# points in E) / (# points in S).

Solution 2 (by formal reasoning):

The condition C = “the first die is 3.”

The sample space for two tossings without the condition is S = {(1, 1), (1, 2), …, (6, 6)} (with 6 × 6 = 36 samples).

Assume that each sample in S has an equal probability.

Let event F = {1st die = 3} = {(3, 1), (3, 2), …, (3, 6)}

= a new reduced sample space with condition C above satisfied.

Let event E = {sum of two dice > 7} = {(2, 6), (3, 5), (4, 4), (5, 3), (6, 2), (3,

6), (4, 5), (5, 4), (6, 3), (4, 6), (5, 5), (6, 4), (5, 6), (6, 5), (6, 6)}.

The desired event is G = {sum of two dice > 7, given that 1st die = 3}

= {(3, 5), (3, 6)}

= EF, which is the intersection of E and F.

The desired probability is P(G) = 2/6 = 1/3 by Fact 2.3 of Chapter 2, applied as below (because the new sample space F has 6 samples instead of 36):

P(G) = (# points in G) / (# points in F).

However, the above P(G) may also be written as

P(G) = (2/36) / (6/36)

= [(# points in EF) / (# points in S)] / [(# points in F) / (# points in S)]

= P(EF) / P(F).

Some definitions of notations and comments --

E|F denotes “event E occurs given that event F has occurred.”

P(E|F) denotes the “conditional probability that E occurs given that F has occurred.”

From the above discussions, we can formally define the conditional

probability as follows.

Definition of conditional probability --

P(E|F) = P(EF) / P(F), if P(F) > 0. (3.1)

Example 3.2 (Example 3.1 revisited) --In tossing two dice in order, given that the first die is 3, what is the probability

that the sum of the two dice is larger than 7?

Solution (by the definition of conditional probability):

Event E|F = {sum of two dice > 7, given that 1st die = 3}.

Event F = {1st die = 3} = {(3, 1), …, (3, 6)} with 6 samples.

Event EF = {sum > 7 & 1st die = 3} = {(3, 5), (3, 6)} with 2 samples.

P(E|F) = P(EF) / P(F) = (2/36) / (1/6) = 2/6 = 1/3.
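The computation by definition (3.1) can be checked by enumerating the 36 equally likely outcomes. The following is a minimal Python sketch; the helper name `prob` and the variable names are illustrative choices and do not come from the text.

```python
from fractions import Fraction
from itertools import product

# Sample space: all ordered outcomes of two dice, assumed equally likely.
S = list(product(range(1, 7), repeat=2))

def prob(event):
    """P(event) = (# points in event) / (# points in S), as in Fact 2.3."""
    return Fraction(len(event), len(S))

F = [s for s in S if s[0] == 3]           # first die is 3
EF = [s for s in F if s[0] + s[1] > 7]    # sum > 7 and first die is 3

p_E_given_F = prob(EF) / prob(F)          # Eq. (3.1)
print(p_E_given_F)                        # 1/3
```

Exact fractions are used instead of floating-point numbers so that the result can be compared directly with the hand computation.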

Example 3.3 --An urn contains 10 white, 5 yellow, and 10 black marbles. A marble is chosen

randomly from the urn, and it is known that it is not black. What is the probability

that it is yellow?

Solution (by definition):

Sample space S = {10 white, 5 yellow, and 10 black}.

Event Y = {marble is yellow} = {5 yellow}.

Event B = {marble is black} = {10 black}.

Event B^C = {marble is not black} = {10 white, 5 yellow}.

Desired probability

P(Y|B^C) = P(YB^C) / P(B^C)

= P(Y) / P(B^C) = (5/25) / (15/25) = 1/3,

where YB^C = Y because every yellow marble is non-black.

(Note: the complementation operation “C” has the highest priority among all set operations, so YB^C means Y∩(B^C).)

Some comments -- Computation of the conditional probability according to its definition usually

deals with the original sample space.

Sometimes, it is easier to compute the conditional probability by considering

directly the reduced sample space obtained from imposing the condition,

when all outcomes are equally likely to occur, as illustrated by the following

example.

Example 3.4 --In the card game “bridge”, the 52 cards are dealt equally to 4 players --- E, W,

N, S. If N and S have a total of 8 spades, what is the probability that E has 3 of the

remaining 5 spades?

Solution (using reduced sample space):

From the last example, we see that the reduced sample space is just that

formed by the condition “N and S have a total of 8 spades,” i.e., the reduced

sample space is

S = {all possible distributions of remaining 26 cards, including

5 spades, to E & W}

(assume that all distributions are equally likely to occur).

No. of possible distributions (for E & W) in S = C(26, 13)C(13, 13) = C(26, 13).

Desired event E = {all distributions of the remaining 26 cards in which E has

3 spades}

Since E has 3 spades and N & S have 8 of them, the no. of possible distributions to E & W is

[C(5, 3)C(21, 10)] (for E) × C(13, 13) (for W) = C(5, 3)C(21, 10).

By Fact 2.3 of the last chapter, the desired probability is

P(E) = (# points in E) / (# points in S) = [C(5, 3)C(21, 10)] / C(26, 13)

= … (compute by yourself!).
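For readers who wish to check their own arithmetic, the ratio of combinations can be evaluated with Python's standard library. This is only a sketch; the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

# Reduced sample space: ways to choose E's 13 cards from the remaining 26.
points_in_S = comb(26, 13)

# Favorable cases: E gets 3 of the 5 spades and 10 of the 21 non-spades.
points_in_E = comb(5, 3) * comb(21, 10)

p = Fraction(points_in_E, points_in_S)
print(p, float(p))   # exact fraction and its decimal value (about 0.339)
```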

Formula 3.1 --

P(EF) = P(F)P(E|F) (3.2)

Proof: immediate from (3.1).

A comment --Formula 3.1, i.e., Eq. (3.2), is useful for computing the probability of

intersections of events.

Example 3.5 -- Draw two balls without replacement from an urn containing 8 red and 4 white balls. Assuming that all balls are equally likely to be drawn, what is the probability that both drawn balls are red?

Solution 1 (by Formula 3.1):

Let event R1 = {1st ball is red};

event R2 = {2nd ball is red};

event R1R2 = {1st and 2nd balls are red}.

Desired probability P(R1R2) = P(R1)P(R2 |R1) by Formula 3.1 (Eq. (3.2)) above.

P(R1) = 8/12 by Fact 2.3 of Chapter 2.

P(R2 |R1) = 7/11 by Fact 2.3 again (easy to figure out!)

So, desired probability is:

P(R1R2) = P(R1)P(R2 |R1) = (8/12)(7/11) = 14/33.

Solution 2:

Considering no drawing order and by Fact 2.3 of Chapter 2, a second way of

computing P(R1R2) is:

P(R1R2) = C(8, 2) / C(12, 2) = 14/33.

Solution 3:

Considering the drawing order and by Fact 2.3 of Chapter 2, a third way is:

P(R1R2) = [C(8, 1)C(7, 1)] / [C(12, 1)C(11, 1)] = 14/33.

All three results are identical, as they should be.
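The agreement of the three solutions can be confirmed numerically. The sketch below uses exact fractions; the names `p1`, `p2`, and `p3` are illustrative labels for Solutions 1 to 3.

```python
from fractions import Fraction
from math import comb

# Solution 1: multiplication rule, P(R1R2) = P(R1)P(R2|R1), Eq. (3.2).
p1 = Fraction(8, 12) * Fraction(7, 11)

# Solution 2: unordered draws, choose 2 of the 8 red out of 2 of the 12 balls.
p2 = Fraction(comb(8, 2), comb(12, 2))

# Solution 3: ordered draws, 8*7 favorable ordered pairs out of 12*11.
p3 = Fraction(comb(8, 1) * comb(7, 1), comb(12, 1) * comb(11, 1))

print(p1, p2, p3)   # 14/33 14/33 14/33
```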

3.3 Bayes’ Formula

Formula 3.2 --

P(E) = P(E|F)P(F) + P(E|F^C)P(F^C) (3.3)

where E and F are two events in a sample space S.

Proof:

P(E) = P(ES) (ES = E obviously)

= P[E(F∪F^C)] (S = F∪F^C)

= P((EF)∪(EF^C)) (by the distributive law)

= P(EF) + P(EF^C) (by mutual exclusiveness of EF and EF^C, and Fact 2.2)

= P(E|F)P(F) + P(E|F^C)P(F^C) (by Formula 3.1, i.e., Eq. (3.2), above).

Comments --- the above formula is useful for computing P(E) when conditional

probabilities are available.

Bayes’ Formula --

P(D|E) = P(E|D)P(D) / [P(E|D)P(D) + P(E|D^C)P(D^C)] (3.4)

where D and E are two events in the sample space S.

Proof: Easy using Eqs. (3.1) and (3.3) above by considering the sample space to be S = D∪D^C; left as an exercise.

Comments about Bayes’ Formula --

Bayes’ formula is important and serves as a basic principle in pattern recognition, decision making, statistics, and many other fields.

The formula may be used when P(D|E) is not directly available but P(E|D) and the other terms in (3.4) are. This is the case in many application problems.

Example 3.6 use of Bayes’ Formula (1) --In a class of 20 female and 80 male students, 4 of the female students and 8 of

the male wear glasses. Now if a student was observed to wear glasses, what is the

probability that the student is a female?

Solution:

Check what data we already have at first.

Event F = an observed student is female, with probability P(F) =

20/(20+80) = 0.2.

Event M = an observed student is male, with probability P(M) =

80/(20+80) = 0.8.

Event G|F = a student wears glasses given that the student is a female,

with probability P(G|F) = 4/20 = 0.2.

Event G|M = a student wears glasses given that the student is a male,

with probability P(G|M) = 8/80 = 0.1.

Check what we are going to compute.

Event F|G = a student is female given that the student wears glasses,

with probability P(F|G) to be computed.

The probability P(F|G) may be computed according to Bayes’ Formula

(3.4) as:

P(F|G) = P(G|F)P(F) / [P(G|F)P(F) + P(G|F^C)P(F^C)]

= P(G|F)P(F) / [P(G|F)P(F) + P(G|M)P(M)]

= (0.2 × 0.2) / (0.2 × 0.2 + 0.1 × 0.8)

= 0.04/(0.04 + 0.08) = 1/3.

Example 3.7 use of Bayes’ Formula (2) --A blood test is 95% effective in detecting a certain disease when it is, in fact,

present. However, the test also yields a “false positive” result for 1% of the

healthy persons tested. If 0.5% of the population actually has the disease, what is

the probability for a person to have the disease when the test is positive?

Solution: the process parallels that of the last example.

Check what data we already have first.

Event D = a tested person has the disease, with probability P(D) = 0.5%.

Event D^C = a tested person does not have the disease (i.e., is healthy), with probability P(D^C) = 1 − 0.5% = 99.5%.

Event E|D = the disease is detected when the tested person has the disease (i.e., the test result is a true positive), with probability P(E|D) = 95%.

Event E|D^C = the disease is detected when the tested person is healthy (i.e., the test result is a false positive), with probability P(E|D^C) = 1%.

Check what we are going to compute.

Event D|E = a person has the disease given that the test is positive, with

probability P(D|E) to be computed.

The desired probability P(D|E) may be computed by Bayes’ Formula (3.4) as:

P(D|E) = P(E|D)P(D) / [P(E|D)P(D) + P(E|D^C)P(D^C)]

= (0.95 × 0.005) / (0.95 × 0.005 + 0.01 × 0.995)

= 95/294

≈ 0.323.
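Bayes’ Formula (3.4) translates directly into a small function. The sketch below is one possible way to write it; the function name `bayes` and its parameter names are hypothetical, and exact fractions are used so that the results match the hand computations of Examples 3.6 and 3.7.

```python
from fractions import Fraction

def bayes(p_e_given_d, p_d, p_e_given_not_d):
    """Return P(D|E) by Eq. (3.4), given P(E|D), P(D), and P(E|D^C)."""
    numerator = p_e_given_d * p_d
    denominator = numerator + p_e_given_not_d * (1 - p_d)
    return numerator / denominator

# Example 3.7: P(E|D) = 95%, P(D) = 0.5%, P(E|D^C) = 1%.
p_disease = bayes(Fraction(95, 100), Fraction(5, 1000), Fraction(1, 100))

# Example 3.6: P(G|F) = 4/20, P(F) = 20/100, P(G|M) = 8/80.
p_female = bayes(Fraction(4, 20), Fraction(20, 100), Fraction(8, 80))

print(p_disease, float(p_disease))   # 95/294, about 0.323
print(p_female)                      # 1/3
```

Even with a test that detects 95% of true cases, a positive result here indicates the disease in only about one case out of three, because the disease itself is rare.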

Application of Bayes’ Formula --- Bayes decision theory

Bayes’ Formula is useful for decision making, creating the so-called Bayes

decision theory, as discussed in the following.

Bayes decision theory is the basis of pattern recognition.

Example 3.8 (Example 3.6 revisited) -- In a class of 20 female and 80 male students, 4 of the female students and 8 of the male wear glasses. Now if a student was observed to wear glasses, how would you decide the sex of the student?

Solution:

This is a pattern recognition problem with “wearing glasses” being a kind of “feature” used for decision making, and the “female” and the “male” being two “classes of patterns.”

In Example 3.6, the probability P(F|G) for “the student to be a female when

the student was observed to wear glasses” has been computed to be 1/3.

Now, what is the probability P(M|G) for “the student to be a male when the student was observed to wear glasses”? It can be computed to be:

P(M|G) = P(G|M)P(M) / [P(G|M)P(M) + P(G|M^C)P(M^C)]

= P(G|M)P(M) / [P(G|M)P(M) + P(G|F)P(F)]

= (0.1 × 0.8) / (0.1 × 0.8 + 0.2 × 0.2)

= 0.08/(0.08 + 0.04) = 2/3.

Actually, by the concept of Section 3.5 below (i.e., the conditional probability denoted by P(·|G) satisfies all the properties of ordinary probabilities), P(M|G) can be computed simply as

P(M|G) = 1 − P(F|G) = 1 − 1/3 = 2/3

because the events M and F are mutually exclusive and M∪F is just the sample space (also, Axiom 2 is used here).

Now, how should the sex of the student be decided? A natural way is as follows:

if P(M|G) ≥ P(F|G), then decide the student to be male;

otherwise, female.

Since P(M|G) = 2/3 ≥ 1/3 = P(F|G), the decision is: the student is male!

The criterion for decision as above is called Bayes’ decision rule which is

formally described next.

Bayes’ decision rule --

Given two classes of patterns A and B, an unknown pattern described by a feature (vector) X is classified as:

if P(A|X) ≥ P(B|X), then decide X ∈ A; otherwise, X ∈ B.

(Note: the decision for the case of P(A|X) = P(B|X) may alternatively be made arbitrarily.)

For the above example (Example 3.8), we have A = F(female), B = M(male),

X = G(wearing glasses), etc.

The values P(A|X) and P(B|X) are called a posteriori probabilities in pattern recognition theory, with “a posteriori” meaning “derived after the fact.”

They can be computed in terms of the a priori probabilities P(A) and P(B) (also called class probabilities) and the conditional probabilities P(X|A) and P(X|B) using Bayes’ Formula (3.4).

A pattern may be any entity of interest.
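Bayes’ decision rule can be sketched in a few lines of Python, using the data of Example 3.8. The function names `posteriors` and `decide` and the dictionary layout are assumptions made for illustration; ties are resolved by dictionary order, which is one arbitrary choice among those the note above allows.

```python
from fractions import Fraction

def posteriors(priors, likelihoods):
    """Return {class: P(class|X)} from P(class) and P(X|class) via Bayes' Formula."""
    joint = {c: likelihoods[c] * priors[c] for c in priors}   # P(X|class)P(class)
    total = sum(joint.values())                               # P(X)
    return {c: joint[c] / total for c in joint}

def decide(post):
    """Choose the class with the largest a posteriori probability."""
    return max(post, key=post.get)

# Example 3.8: classes F (female) and M (male), feature G (wearing glasses).
priors = {"F": Fraction(20, 100), "M": Fraction(80, 100)}    # a priori P(F), P(M)
likelihoods = {"F": Fraction(4, 20), "M": Fraction(8, 80)}   # P(G|F), P(G|M)

post = posteriors(priors, likelihoods)
print(post["F"], post["M"], decide(post))   # 1/3 2/3 M
```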

Formula 3.3 (a generalized form of Formula 3.2) --

P(E) = ∑_{i=1}^{n} P(E|Fi)P(Fi) (3.5)

where E and Fi, i = 1, 2, …, n, are events in the sample space S with all Fi mutually exclusive such that

S = F1∪F2∪…∪Fn,

i.e., all Fi together constitute the sample space S.

Proof: similar to that of (3.3); left as an exercise.

Generalized Bayes’ Formula --

P(Fj |E) = P(E|Fj)P(Fj) / ∑_{i=1}^{n} P(E|Fi)P(Fi) (3.5a)

where E and Fi with i = 1, 2, …, n are events in the sample space S with all Fi mutually exclusive such that

S = F1∪F2∪…∪Fn,

like those described for Formula 3.3.

Proof: left as an exercise.
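Although the proofs are left as exercises, both (3.5) and (3.5a) can be checked numerically on the two-dice sample space of Example 3.1. In the sketch below the partition is taken to be Fi = {1st die = i}, i = 1, …, 6, and E = {sum > 7}; this choice of partition and the helper names `prob` and `cond` are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product

S = list(product(range(1, 7), repeat=2))   # 36 equally likely outcomes

def prob(event):
    return Fraction(len(event), len(S))

def cond(A, B):
    """P(A|B) by Eq. (3.1)."""
    B_set = set(B)
    AB = [s for s in A if s in B_set]
    return prob(AB) / prob(B)

E = [s for s in S if sum(s) > 7]
F = {i: [s for s in S if s[0] == i] for i in range(1, 7)}   # partition of S

# Eq. (3.5): total probability over the partition.
p_E = sum(cond(E, F[i]) * prob(F[i]) for i in F)

# Eq. (3.5a) with j = 3.
p_F3_given_E = cond(E, F[3]) * prob(F[3]) / p_E

print(p_E, prob(E))                      # 5/12 5/12
print(p_F3_given_E, cond(F[3], E))       # 2/15 2/15
```

In both lines the value obtained from the formula agrees with the value obtained by direct counting.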

3.4 Independent Events

Concept of event independence -- Conceptually, if the knowledge of an event F does not change the probability

that another event E occurs, then we say that the two events are independent.

An example --If two fair dice are tossed in order, obviously the outcome of the first

tossing has no effect on the outcome of the second tossing. Considering the

outcomes as events, then they are said to be independent.

This concept of independence means that

P(E|F) = P(E),

or equivalently, that

P(E|F) = P(EF) / P(F) = P(E).

Therefore, we get

P(EF) = P(E)P(F).

Accordingly, we have the following definition.

Definition of event independence --Two events E and F are said to be independent if

P(EF) = P(E)P(F).

(3.6)

They are said to be dependent if they are not independent.

Example 3.9 --In tossings of two fair dice,

F is the event that the first die equals 4;

E1 is the event that the sum of the dice is 6;

E2 is the event that the sum of the dice is 7,

show that F and E1 are not independent while F and E2 are.

Solution:

The sample space S has 36 samples.

F = {(4, 1), (4, 2), (4, 3), (4, 4), (4, 5), (4, 6)}.

E1 = {(1, 5), (2, 4), (3, 3), (4, 2), (5, 1)}.

E2 = {(1, 6), (2, 5), (3, 4), (4, 3), (5, 2), (6, 1)}.

E1F = {(4, 2)}.

E2F = {(4, 3)}.

P(E1F) = 1/36, P(E1) = 5/36, P(F) = 6/36 = 1/6, so P(E1F) = 1/36 ≠ (5/36)(1/6) = P(E1)P(F), which means that E1 and F are not independent.

P(E2F) = 1/36, P(E2) = 6/36 = 1/6, so P(E2F) = 1/36 = (1/6)(1/6) = P(E2)P(F), which means that E2 and F are independent.
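The independence test of definition (3.6) is easy to automate by enumeration. The following is a minimal sketch; the helper names `prob` and `independent` are illustrative.

```python
from fractions import Fraction
from itertools import product

S = set(product(range(1, 7), repeat=2))   # 36 equally likely outcomes

def prob(event):
    return Fraction(len(event), len(S))

def independent(A, B):
    """Check Eq. (3.6): P(AB) = P(A)P(B)."""
    return prob(A & B) == prob(A) * prob(B)

F = {s for s in S if s[0] == 4}       # first die equals 4
E1 = {s for s in S if sum(s) == 6}    # sum of the dice is 6
E2 = {s for s in S if sum(s) == 7}    # sum of the dice is 7

print(independent(E1, F), independent(E2, F))   # False True
```

Intuitively, a sum of 6 rules out a first die of 6, so knowing the first die changes the chance of E1, whereas a sum of 7 is reachable from every value of the first die with the same probability 1/6.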

Proposition 3.1 -- If E and F are independent, then E and F^C are independent, too.

Proof:

P(E) = P(ES) = P[E(F∪F^C)] = P(EF∪EF^C)

= P(EF) + P(EF^C) = P(E)P(F) + P(EF^C)

= P(E)[1 − P(F^C)] + P(EF^C) = P(E) − P(E)P(F^C) + P(EF^C).

So, we get

P(EF^C) = P(E)P(F^C),

which means that E and F^C are independent by definition.

Definition of Independence of three events --Three events E, F, and G are said to be independent if the following equalities

hold:

P(EFG) = P(E)P(F)P(G);

P(EF) = P(E)P(F);

P(EG) = P(E)P(G);

P(FG) = P(F)P(G).

Generalized definitions of independence of events --

The events E1, E2, ..., En are said to be independent if, for every subset E1′, E2′, ..., Er′, r ≤ n, of these events, the following equality holds:

P(E1′E2′...Er′) = P(E1′)P(E2′)...P(Er′).

(Notes: E1′ does not mean E1; instead it means the 1st event in the r selected

ones and the index 1′ may be any of 1, 2, …, n. The remaining E2′ through Er′

are interpreted similarly. Also, recall E1′E2′...Er′ means E1′∩E2′∩...∩Er′).

An infinite set of events is said to be independent if every finite subset of these events is independent.

Independent subexperiments --In an experiment consisting of performing a sequence of subexperiments, if

(1) Ei is an event whose occurrence is completely determined by the outcome of

the ith subexperiment where i = 1, 2, …, n, …; and

(2) E1, E2, …, En, … are independent,

then the subexperiments are said to be independent.

Definition of a term – “trial” --

If an experiment consists of a sequence of identical subexperiments (i.e., each subexperiment has the same (sub)sample space and the same probability on its events), then each of the subexperiments is called a trial.

Example 3.10 -- In a sequence of two independent tosses of a fair coin with a head (H) and a tail (T), we have:

the experiment = the sequence of tossing the coin for two times;

a trial = a subexperiment = tossing the coin once;

the ith trial yields an event EiH for the outcome of H; or yields an event EiT for

the outcome of T, where i =1, 2;

specifically, E1H = {HT, HH}, E1T = {TT, TH}, E2H = {TH, HH}, E2T = {TT,

HT};

the event of “one H followed by one T” is E1H2T = {HT} which is just equal to

the intersection of E1H = {HT, HH} and E2T = {TT, HT} because E1H∩E2T =

{HT, HH}∩{TT, HT} = {HT};

the sample space S = {TT, TH, HT, HH};

the probability P(E1H2T) = 1/4 = (1/2)(1/2) = P(E1H)P(E2T), i.e., E1H and E2T

are independent by definition. The other cases of independence may also be

checked to be valid.

Example 3.11 -- An infinite sequence of independent identical trials is to be performed. Each trial has a success probability of p and a failure probability of 1 − p. What is the probability for each of the following cases?

(1) At least one success occurs in the first n trials?

(2) Exactly k successes occur in the first n trials?

Solution for (1):

First, compute the probability of the event Ea of no success in the first n trials

(i.e., all n trials fail).

Let Ei denote the event of a failure in the ith trial. Then based on a reasoning

similar to that of the last example, we have Ea = E1E2...En.

By identicalness and independence of all Ei, we have

P(Ea) = P(E1E2...En) = P(E1)P(E2)...P(En) = (1 − p)^n.

So the desired probability of the event E(1) that at least one success occurs in the first n trials, by the second axiom of probability, may be computed to be

P(E(1)) = 1 − (1 − p)^n.

Solution for (2):

First, consider the event Eb of any particular sequence of n trials containing k successes and n − k failures.

By identicalness and independence, P(Eb) = p^k (1 − p)^(n−k) according to a reasoning similar to that used for deriving the solution for (1) above.

The number of such sequences is C(n, k), which results from choosing the k successful trials out of the n trials.

The event E(2) of exactly k successes is just the union of all these C(n, k) mutually exclusive sequences.

So the probability of the event E(2) that exactly k successes occur in the first n trials, by Fact 2.2, is just

P(E(2)) = C(n, k) p^k (1 − p)^(n−k).
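The two results of Example 3.11 can be written as short functions. The sketch below is illustrative only: the function names and the sample values n = 5 and p = 0.5 are hypothetical and do not come from the text.

```python
from math import comb, isclose

def at_least_one_success(n, p):
    """P(E(1)) = 1 - (1 - p)^n."""
    return 1 - (1 - p) ** n

def exactly_k_successes(n, k, p):
    """P(E(2)) = C(n, k) p^k (1 - p)^(n - k)."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)

# Hypothetical values chosen only for illustration.
n, p = 5, 0.5

# The probabilities of 0, 1, ..., n successes must add up to 1.
total = sum(exactly_k_successes(n, k, p) for k in range(n + 1))

print(at_least_one_success(n, p))
print(exactly_k_successes(n, 2, p))
print(isclose(total, 1.0))
```

Note that "at least one success" is the complement of "exactly 0 successes", so `at_least_one_success(n, p)` equals `1 - exactly_k_successes(n, 0, p)`.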

3.5 P( · |F) is a Probability

A note:

Conditional probabilities satisfy all of the properties of ordinary probabilities

as proved below.

***Proposition 3.2 --P(·|F) is a probability function satisfying the three axioms of the probability

for any event F as described below:

(1) 0 ≤ P(E|F) ≤ 1;

(2) P(S|F) = 1;

(3) for any sequence of mutually exclusive events E1, E2, ..., the following equality holds:

P(∪_{i=1}^{∞} Ei |F) = ∑_{i=1}^{∞} P(Ei |F).

Proof: left as an exercise.

Two facts derived from Proposition 3.2 --

Formula 3.4 -- P(E1∪E2 |F) = P(E1 |F) + P(E2 |F) − P(E1E2 |F).

Formula 3.5 -- P(E1 |F) = P(E1 |E2F)P(E2 |F) + P(E1 |E2^C F)P(E2^C |F).

Proof: left as exercises.

Definition of conditional independence --Two events E1 and E2 are said to be conditionally independent given F, if

P(E1 |E2F) = P(E1 |F),

(3.7)

or equivalently, if

P(E1E2 |F) = P(E1 |F)P(E2 |F). (3.8)