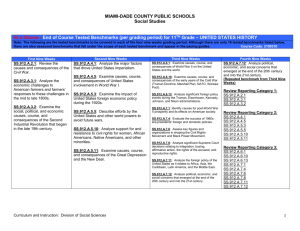

Sources of software benchmarks
