2001-21 - Systems & Information Engineering, University of Virginia


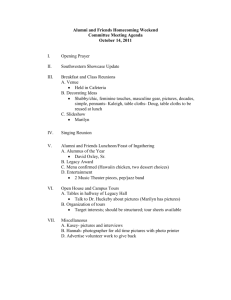

2001 Systems Engineering Capstone Conference • University of Virginia

DEVELOPMENT OF AN INTEGRATION METHODOLOGY FOR LEGACY DATA SYSTEMS

Student Team: Rebecca E. Gonsoulin, Wendy Lee, Donté Parks

Faculty Advisors: Michel King, Department of Systems Engineering

Client Advisors: Stephen Osborne, Lockheed Martin Undersea Systems, Manassas, Virginia. stephen.osborne@lmco.com

KEYWORDS: legacy data integration, methodology, schema.

ABSTRACT

One of the critical problems faced by any large enterprise with significant investments in computer technology is data integration, the aggregation of information from dissimilar sources to provide increased capabilities. Data integration tends to be a very resource-intensive process, in terms of both time and money. The problem with the variety of integration options is that there is no set of best practices that can be applied to a given situation. We researched methodologies of software development and current techniques of data integration and created a methodology for integrating legacy systems for Lockheed Martin Undersea Systems. The Legacy Integration Framework (LIF) consists of five steps: Understand, Develop, Test, Implement, and Maintain. LIF can act as a guiding strategy to ensure that critical integration-specific details remain in the forefront over the course of a data integration project.

INTRODUCTION

One of the critical problems faced by any large enterprise with significant investments in computer technology is data integration, the aggregation of information from dissimilar sources to provide increased capabilities. The approach currently used by Lockheed Martin Undersea Systems (LMUSS) for the US Navy involves development of a federation. In this approach, the systems undergoing integration are left largely autonomous, with only a minimum of changes being made to allow for the increased functionality.
While effective, federation is limited, and new computer systems demand a higher degree of integration in order to exploit their full value. Unfortunately, there is no established set of best practices that can be applied universally to data integration projects, leaving technical staff with no choice but to develop a new approach for every situation. LMUSS asked our Capstone team to create a methodology for use in integrating legacy systems by researching methodologies for software development and current techniques of data integration.

DATA INTEGRATION

Early computing offered the opportunity to store and process large numbers of transactions on mainframes. Because the number of computing resources was small, integration was not necessary. The limited demand for applications meant that they were often written specifically to fit a particular need or requirement (Chuah). With the shift from mainframes to desktops and distributed networks, corporations have come to realize the value of integration, as the leveraging of enterprise knowledge can only occur if the widely available sources of data can be modified to work in a cooperative fashion.

Early integration concentrated on overcoming the differences between systems by translating data into a common schema, the schema being the set of rules that governs the behavior of a database. While schema integration was an effective approach, the process involved was very time-consuming, prone to error, and limiting in terms of flexibility. These difficulties led to the popularity of federation.

Federation involves the development of a global schema, which acts as a common language between databases in the federation (Bouguettaya, 1998). Databases use this global schema to interact with other databases. Instead of attempting full integration, federation allows for different degrees of interoperability. Federation saves a lot of effort, but is still limited in terms of its capabilities, especially in terms of scalability.
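
The role of a global schema as a "common language" can be illustrated with a small sketch. This is not taken from the LMUSS project: the two source systems (`inventory_db`, `logistics_db`), their field names, and the helper functions are all hypothetical, and a real federation would sit on top of actual database interfaces rather than in-memory dictionaries.

```python
# Hypothetical global schema shared by every member of the federation.
GLOBAL_FIELDS = ("part_id", "description", "quantity")

# Hypothetical mappings: each legacy system keeps its own local schema, and
# the federation only records how local field names map to global ones.
LOCAL_TO_GLOBAL = {
    "inventory_db": {"PARTNO": "part_id", "DESC": "description", "QTY": "quantity"},
    "logistics_db": {"item_code": "part_id", "item_name": "description", "on_hand": "quantity"},
}


def to_global(source, record):
    """Translate one local record into the global schema.

    Local fields with no mapping are dropped, and global fields the source
    does not supply are set to None (a partial degree of interoperability).
    """
    mapping = LOCAL_TO_GLOBAL[source]
    translated = {field: None for field in GLOBAL_FIELDS}
    for local_name, value in record.items():
        global_name = mapping.get(local_name)
        if global_name is not None:
            translated[global_name] = value
    return translated


def federated_query(sources, part_id):
    """Return (source, record) pairs whose global part_id matches."""
    results = []
    for source, records in sources.items():
        for record in records:
            unified = to_global(source, record)
            if unified["part_id"] == part_id:
                results.append((source, unified))
    return results


if __name__ == "__main__":
    # Hypothetical sample data; the source systems themselves are unchanged.
    sources = {
        "inventory_db": [{"PARTNO": "A-100", "DESC": "Valve", "QTY": 4, "BIN": "7C"}],
        "logistics_db": [{"item_code": "A-100", "item_name": "Valve", "on_hand": 9}],
    }
    for source, row in federated_query(sources, "A-100"):
        print(source, row)
```

In this sketch the legacy records are never rewritten, which mirrors the autonomy that federation preserves. It also hints at the scalability limit noted above: every additional source needs its own mapping to the global schema, and every query must visit every source.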
Newer approaches have been developed, although many of these also involve manipulation of the schema at some level (Bouguettaya, 1998).

One of the larger hurdles in integration projects is the determination of how communication is going to occur between the different systems. Different technologies have been developed to fill this void. Extensible Markup Language (XML) addresses the messaging between systems. It allows users to develop their own tags to describe their data. A major benefit of XML is that, by separating the representation and semantics of data, it allows for a great deal of flexibility. In addition, its widespread support within the commercial industry has prompted the development of a variety of tools to facilitate XML use.

Another class of software easing the messaging between different systems is middleware. Middleware is used to "glue together" separate, preexisting systems. This software rests on a variety of different platforms, and is typically combined with its own messaging system. In this way, there is a defined standard for the way that the various systems can interact. By hiding the translation and details from the user, these programs increase understandability through the reduction of complexity.

Enterprise Application Integration (EAI) is a method for integrating legacy applications and databases while adding or migrating to a new set of e-commerce applications. This architecture provides a fundamental structural organization for software systems by providing a set of predefined subsystems, by specifying their relationships and by including the rules and guidelines for those relationships (Lutz, 2000). It is an approach based on determining how existing applications fit into new ones and defining ways to efficiently reuse what already exists.

Platform integration provides some connectivity among heterogeneous hardware, operating systems, and application platforms.
It uses technologies such as Object Request Brokers (ORBs) and Remote Procedure Calls (RPCs) (Gold, 1999).

CORBA, the Common Object Request Broker Architecture, is a middleware architecture and specification for creating, distributing, and managing objects in a network. It enables applications to use standards for locating and communicating with each other. The key element of CORBA, the ORB, acts as a “software bus” managing access to and from objects in an application, linking them to other objects, monitoring their function, tracking their location, and managing communications with other ORBs. Using Interface Definition Language (IDL), the ORB can interact with applications written in different languages. IDL provides objects with well-defined interfaces. Objects can be written in any common language including Java, C, C++, and COBOL (“CORBA,” 2000). The main advantages of CORBA are platform, language, and location independence.

Data integration tends to be a very expensive process, in terms of both time and money. Its difficulties stem from the fact that many of the systems undergoing this integration were never designed for such changes. As computing grew in popularity and availability, it became increasingly easy to develop systems for specific purposes on an as-needed basis. With this sporadic approach to systems development, attention was not placed on potential needs for the future. When the need arose for greater functionality, it was therefore difficult to implement, especially in the area of integration. Schema integration, federation, and middleware all serve to fill this need, and each has been explored to determine its underlying rationale, as well as its strengths and weaknesses.

METHODOLOGIES

In the early days of computing and development, there was no formal design or analysis of systems. Organizing, defining, and managing the development of projects is a widely discussed topic in development of products.
Organizations have conflicting needs for a well-defined, well-managed process, and for the quick delivery of the product. Existing methods attempt to provide an orderly, systematic way of development. Current legacy system integration methods rely on the tailoring of software integration methodologies. Lockheed Martin Undersea Systems has such corporate methodologies, but these are proprietary and were unavailable for use in this project. This section describes general approaches for integration projects. Software developers commonly use the spiral and waterfall methods, while the Gibson method is a problem solving method taught in Systems Engineering.

The Waterfall Method

While steps in the waterfall method often vary, the general breakdown is as follows: requirements analysis, specifications, design, implementation, test, maintenance, and iterate. The graphic representation of these phases, shown in Figure 1, resembles a waterfall. The first step, requirements analysis, defines the requirements of the project as stated by the customer. In the specification stage, analysts develop the specifications (e.g., processor speed, disk space needed) for the system. Next, the developers outline and design the system, including the requirements and the specifications from the previous phases. Implementation is the next step, followed by testing and maintenance of the system. Designers can perform iterations of the process in order to make certain they included all requirements and specifications. The waterfall method emphasizes completing each phase of development before proceeding to the next phase [Sorenson, 1995].

[Figure 1 phases, in order: Requirements, Specifications, Design, Implementation, Test, Maintenance, Iterate]

Figure 1: The Waterfall Method. This method emphasizes the completion of one phase before proceeding to the next [Sorenson, 1995].

The Spiral Method

The spiral method contains four major stages: planning, risk analysis, engineering, and customer evaluation [Spiral, 2001]. Figure 2 shows a diagram of the spiral method. Each cycle begins with a planning period, which consists of determining the objectives, and the alternatives and constraints of the project. The planning stage also includes defining the requirements important to the customer. The risk analysis stage analyzes the alternatives and attempts to identify and resolve any risks. The engineering stage consists of prototyping, developing and testing the product. The customer evaluation stage is an assessment by the customer of the products of the engineering stage [Spiral Method, 2001].

The Gibson Method

The Gibson Method, created by John Gibson, is a six-phase approach to systems analysis that provides an organized way to approach a problem objectively and to justify the rationale behind a decision. Figure 3 shows a table of the six steps included in this method.

Determining the goals is critical to the success of a project. If the goals and objectives of the project are not clear, then the outcome of the project will not meet expectations of the customer. The next step, establishing criteria for ranking alternative solutions, involves developing indices of performance, or IPs, which judge the performance of a design option. Possible indices of performance include the impact the solution will have on existing systems, cost, and whether the solution is harmful to the environment. Developing alternative solutions is also an important step in the Gibson method. This allows a system designer to include all possible options for an unbiased selection. Ranking the alternative solutions involves using IPs to choose the best possible solution to use in the project. Iterating through the method allows for a more careful problem analysis. Taking action is the final step in the Gibson method.
This step includes producing the solution and testing the final product. This step should include a formal procedure for achieving the goals stated in the first step [Gibson, 1991].

The Gibson Method for Systems Analysis
1. Determine Goals of System
2. Establish Criteria for Ranking Alternative Candidates
3. Develop Alternative Solutions
4. Rank Alternative Candidates
5. Iterate
6. Action

Figure 3: The Gibson Method. This method for systems analysis is a robust strategy for approaching complex problems [Gibson, 1999]

Figure 2: The Spiral Method. Every cycle goes through each stage of the method, creating several iterations of each stage. [Lutz, 2001]

Any legacy system integration methodology needs to fit into current practices. While current methodologies view legacy system integration through a software engineering lens, an integration methodology cannot be blind to the fact that much of legacy system integration involves software engineering, in addition to traditional engineering. An integration methodology, rather than recommending a specific technology, forms a framework for approaching integration projects.

An integration methodology should be both flexible and extensible, so that it can accommodate different types of integration projects. One of the reasons that legacy system integration is difficult is that many integration projects require different integration technologies. Any methodology should leave room for improvement as well. There will undoubtedly be innovation in the field of legacy system integration. A methodology should allow for incorporation of new ideas.

THE LEGACY INTEGRATION FRAMEWORK

The Legacy Integration Framework (LIF – pronounced LĪF) is an amalgam of the Waterfall, Spiral, and Gibson methods, in addition to incorporating information from client interviews.
LIF is a guiding strategy to ensure that critical integration-specific details remain in the forefront over the course of a data integration project. It is also meant to ensure that legacy integration projects are seen in the context of the lifetime of a system, rather than as a solution to an immediate need.

[Figure: The LĪF Methodology. Steps, in order: Understand, Develop, Test, Implement, Maintain]

Each step of the LIF strategy incorporates ideas from pre-existing methodologies. It is linear in many respects like the Waterfall Method, but incorporates the idea of iteration found in the Spiral Method. The understanding of problem context is largely based on the Gibson Method. The Gibson method, a cornerstone of the University of Virginia’s Systems Engineering curriculum, was developed primarily for decision analysis. Gibson systems analysis is largely based on an understanding of the client’s needs. The understanding phase incorporates the risk analysis approach of the Spiral Method. This step should also allow for revisiting earlier steps, another key feature of the Spiral Method, since client needs and risks should repeatedly be considered.

Understand

Prior to any development on a legacy system, it is important to understand the role that it is intended to play in the final system. This requires understanding the purpose of the system, and how that purpose has changed over its lifetime. An understanding of the role the legacy system is expected to play in the future is needed as well. This understanding should include both technical details and information regarding the users and others affected by any potential changes. The goal of this step is to understand the context of the legacy system, and to prepare the developers for future change. A key portion of this step is to ensure that the contextual information does not stay with the Systems Engineer Work Group (SEWG) that handles the early requirements analysis and design work.
The software engineers need some understanding of the system as well, so they can make informed decisions, and it is imperative that this information be passed. During client interviews, LMUSS employee Steve Mitchell described a project where a member of the SEWG team made presentations at the start of each development phase, giving the software engineers the bigger picture surrounding their work. While this particular solution may not be the only one that will work, knowledge of the legacy, its users, and the role of the integrated system should be held by all members of the integration team. This has the added benefit of providing buy-in for those involved, as each team member will better recognize the importance of their work.

Risk Analysis: A key portion of the Understand phase is risk analysis. The goal of risk analysis is to assess overall project risk by identifying and managing specific risks. Risk analysis addresses and eliminates risk items before they become a threat to the success of the project. It determines the extent of the possible risks, how they relate to each other, and which risks are most important (Buttigieg).

Risk analysis benefits projects in several ways. Developers and analysts can eliminate risks before they become problematic, which allows the development of the product to proceed smoothly. Projects that use risk analysis techniques have more predictable schedules. By identifying and eliminating risks before they become a problem, they encounter fewer surprises. Because risk analysis prevents schedule delays, the project cost is often lower than expected. Although this method introduces risk analysis as part of the first phase, analysts should be aware of potential risks associated with each phase of the project. The process of risk analysis should begin early, but should continue to affect decision-making throughout the project.
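
The paper does not say how risks are scored when deciding which are most important. One widely used heuristic, assumed here purely for illustration, is to rank risks by exposure, the product of estimated probability and estimated impact. The sketch below is not the authors' procedure, and the risk names, probabilities, and impact figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    probability: float  # estimated likelihood, between 0.0 and 1.0
    impact: float       # estimated cost if the risk occurs, e.g. schedule days

    @property
    def exposure(self) -> float:
        """Expected loss: probability multiplied by impact (an assumed metric)."""
        return self.probability * self.impact


def rank_risks(risks):
    """Return the risks sorted from highest to lowest exposure."""
    return sorted(risks, key=lambda risk: risk.exposure, reverse=True)


if __name__ == "__main__":
    # Hypothetical risk register for a legacy integration project.
    register = [
        Risk("Middleware vendor drops platform support", 0.1, 60),
        Risk("Undocumented legacy data format", 0.6, 30),
        Risk("Requirements change mid-project", 0.8, 15),
    ]
    for risk in rank_risks(register):
        print(f"{risk.exposure:5.1f}  {risk.name}")
```

Because risk analysis is meant to continue throughout the project, a register like this would be re-estimated and re-ranked at the start of each phase rather than computed once.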

Defining the Problem: Defining the problem accurately from the beginning is one of the most important parts of the Understand step. If the analysts do not adequately define the problem, the project will most likely fail. Correct phrasing of the problem and placing it properly in context allow both the customer and the analyst to agree on the problem.

Requirements Analysis: Requirements elicitation is an important part of determining the requirements and needs of the customer. It allows the stakeholders to reveal and understand the needs of the system. Identifying the relevant sources of information includes not only talking to the client, but also involving the users, the support staff, and the suppliers (Mehalik, 1999). Each group has something unique to add to the system; the requirements that the managers give to the developers could actually be very different from those of the actual users of the system.

There are several obvious outcomes from a good elicitation process. The most beneficial outcome is an informed client. The client is able to distinguish the wants of the project from the needs. The client understands the procedure for creating the product, including the structure, the constraints, the risks, and the function of the finished product. The project is feasible in terms of time, necessary equipment, money, and available personnel (Mehalik, 1999). This combination leads to a high likelihood of success for the project.

Develop

Once the role of the integration system has been defined, it can be developed. This process is largely mature, as technical difficulty was not mentioned in interviews as a hindrance in integration projects. Rather, the difficulties came in requirements definition, which should be addressed in the previous step. For development, there must be an assessment of project risks. In this step these risks should be officially addressed, although this should also be a continual process through the project. Technical pitfalls should be identified and remedied. In addition, changing requirements should be freely communicated to all members of the team who could be affected.

Architecture Analysis: Perhaps the most distinctive part of this method is Architecture Analysis. The goal of this phase is for the integrator to fully understand the current states and components of the integration system. It is the technical counterpart to the Understand phase. The components of the system are the application platform, operating system, database, and application programming interface (API) and adapters. For each project, the integrator should build an integration architecture that ties all systems together including application and technical components. Unlike common methods, LIF includes an information analysis section where the integrator defines the data format, structure and contents of events that will pass between applications (WebMethods, 2000). Finally, the integrator should identify the characteristics that the integration architecture must have in order to meet goals (Concept Five, 2000). An advantage of the LĪF Strategy is that it can help in determining which products, adapters, connectors, vendors, and technologies best fit in the integration architecture.

Test

Prototyping can be considered a form of testing. In the process of risk mitigation, prototypes should have been built to ensure project feasibility, essentially testing the capabilities of the system. Yet tests should also be conducted to ensure that the different modules are operating as expected. This will not be possible in all cases, especially in projects with parallel development, but each phase of development should include testing of the integrated system. These tests should not be left until actual system delivery. Handling these parallel development situations adequately may require a different approach to testing.

Implement

After development has been completed, the integrated system can be implemented in its intended environment. At this point it is critical to ensure that the implemented solution operates as expected. This step should be simple if in the previous steps engineers have kept the client needs and objectives in mind.

Maintain

The completion of one integration project probably will not mark the end of the changes to a system. The system must then be maintained through later integration with other systems. Design decisions should be made with consideration for future changes.

CONCLUSIONS

Legacy system integration is a pervasive problem in both the public and private sectors. It is made all the more difficult because there is no organized collection of best practices that can be applied to integration situations. This project collected these best practices and developed a strategy called the Legacy Integration Framework that can be used in conjunction with current methods of development to improve the capabilities of the client, Lockheed Martin Undersea Systems.

Any legacy system integration methodology needs to fit into current practices. The Legacy Integration Framework, rather than representing an entirely new strategy, forms more of a framework for approaching integration projects. While not detailing every aspect of the legacy integration process, LIF can act as a guiding strategy to ensure that critical integration-specific details remain in the forefront over the course of the project.

REFERENCES

Bouguettaya, A., et al. Interconnecting Heterogeneous Information Systems. Kluwer Academic Publishers: Boston, 1998.

Chuah, M., Juarez, O., Kolojejchick, J., and Roth, S. “Searching Data-Graphics by Content.” http://www.cs.cmu.Edu/Web/Groups/sage/Papers/SageBook/SageBook.htmlSageBook.

Concept Five Technologies. Strategic Business Applications Through Application Integration. 2000. http://www.concept5.com/docs/wp02_appint.pdf

“Corba or XML; Conflict or Cooperation?” 15 October 2000. http://www.omg.org/news/whitepapers/index.htm

Gibson, John E. How to do a Systems Analysis. Ivy, VA: P.S. Publishing: July 1991.

Glossbrenner, A. and E. Glossbrenner. Search Engines for the WWW. Berkeley: Peachpit Press: 1998.

Gold-Bernstein, Beth. “EAI Market Segmentation.” EAI Journal. July/August 1999.

King, Nelson. “The New Integration Imperative.” Intelligent Enterprise. 5 October 1999:24.

Lutz, J. “EAI Architecture Patterns.” EAI Journal. March 2000.

Sorenson, Reed. “Comparison of Software Development Methodologies.” January 1995. 15 February 2001. http://stsc.hill.af.mil/crosstalk/1995/jan/comparis.asp.

“Spiral Method.” 16 February 2001. www.cstp.umkc.edu/personal/cjweber/spiral.html.

“Spiral Model.” 15 February 2001. http://www.isds.jpl.nasa.gov/cwo/cwo_23/handbook/spiral.htm.

WebMethods, Inc. Overview of the Application Integration Methodology (AIM) Process. August 2000.

BIOGRAPHIES

Rebecca Gonsoulin is a fourth-year Systems Engineering major from Williamsburg, VA, concentrating in Communications. Her focus for the project was in the research of search and retrieval techniques and in software development methodologies. She has accepted a position with Applied Signal Technology, Inc. as a Systems Engineer in Annapolis Junction, MD. She’d tell you more about her job, but then she’d have to kill you.

Wendy Lee is a fourth-year Systems Engineering major concentrating in Management Information Systems from Gaithersburg, MD. Her focus for the project was the research in integration methodologies and development of the checklists for the evaluation of products and middleware. She will be working for PricewaterhouseCoopers as an IT Consultant in the Washington Consulting Practice Division after graduation. We tried to add something funny to this bio, but she wouldn’t let us put it in.

Donté “The Man” Parks is a fourth-year Systems Engineering major from Hampton, VA, completing a self-designed concentration in Internet Information Management. His principal project contribution was research on legacy integration techniques in addition to providing uplifting bits of wit and sarcasm. He has accepted a position with Microsoft, and will begin work there after spending a few (more) months sitting around not doing much besides watching Cartoon Network and playing his Playstation 2.