ICFASCIC_DDExecReport_V4.2

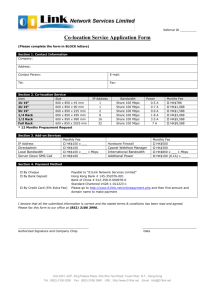