Section 8.2 Markov and Chebyshev Inequalities and the Weak Law of Large Numbers

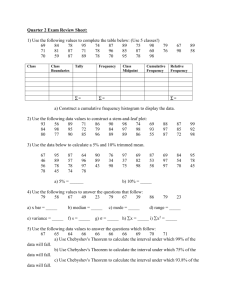

advertisement

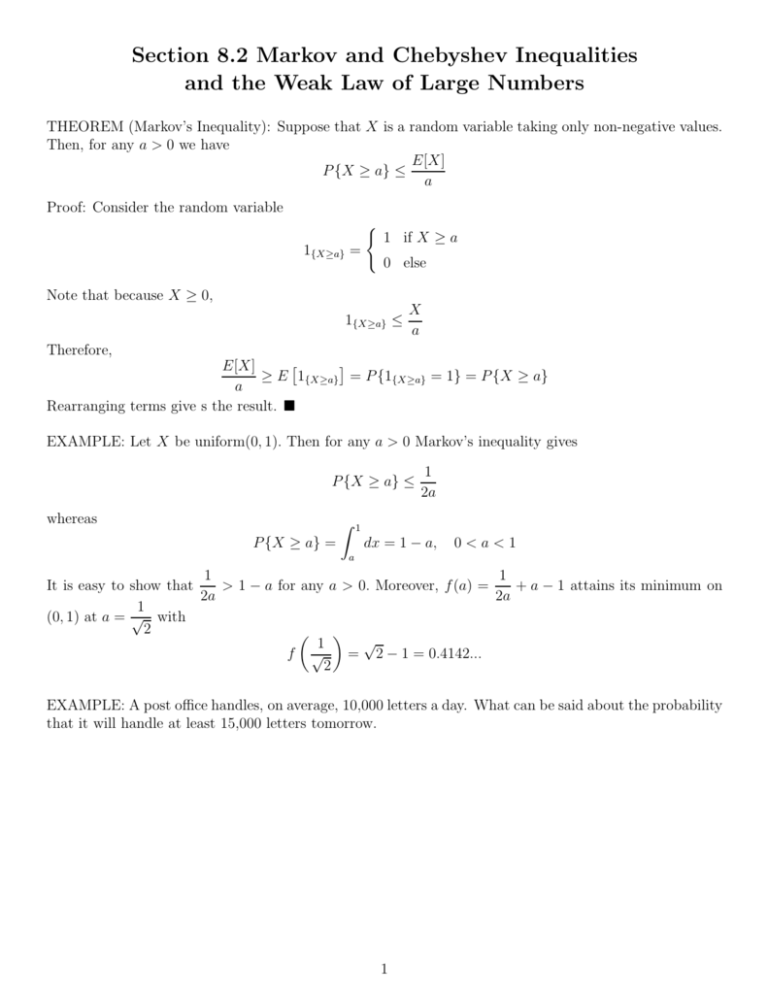

Section 8.2 Markov and Chebyshev Inequalities

and the Weak Law of Large Numbers

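THEOREM (Markov’s Inequality): Suppose that $X$ is a random variable taking only non-negative values. Then, for any $a > 0$ we have

$$P\{X \ge a\} \le \frac{E[X]}{a}$$

Proof: Consider the indicator random variable

$$\mathbf{1}_{\{X \ge a\}} = \begin{cases} 1 & \text{if } X \ge a \\ 0 & \text{else} \end{cases}$$

Note that because $X \ge 0$,

$$\mathbf{1}_{\{X \ge a\}} \le \frac{X}{a}$$

Therefore,

$$\frac{E[X]}{a} \ge E\left[\mathbf{1}_{\{X \ge a\}}\right] = P\{\mathbf{1}_{\{X \ge a\}} = 1\} = P\{X \ge a\}$$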

Rearranging terms gives the result.

EXAMPLE: Let $X$ be uniform$(0, 1)$. Then for any $a > 0$ Markov’s inequality gives

$$P\{X \ge a\} \le \frac{1}{2a}$$

whereas

$$P\{X \ge a\} = \int_a^1 dx = 1 - a, \qquad 0 < a < 1$$

It is easy to show that $\frac{1}{2a} > 1 - a$ for any $a > 0$. Moreover, $f(a) = \frac{1}{2a} + a - 1$ attains its minimum on $(0, 1)$ at $a = \frac{1}{\sqrt{2}}$ with

$$f\left(\frac{1}{\sqrt{2}}\right) = \sqrt{2} - 1 = 0.4142\ldots$$
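The gap between Markov's bound and the exact tail in this example can be checked numerically. The following is a minimal sketch; the helper names `markov_bound`, `exact_tail_uniform`, and `gap` are illustrative and not part of the notes, and the grid search is only a rough stand-in for the calculus argument.

```python
import math

def markov_bound(mean: float, a: float) -> float:
    """Markov's upper bound E[X]/a on P{X >= a} for a non-negative X."""
    return mean / a

def exact_tail_uniform(a: float) -> float:
    """Exact P{X >= a} for X ~ uniform(0, 1), valid for 0 < a < 1."""
    return 1.0 - a

def gap(a: float) -> float:
    """f(a) = 1/(2a) + a - 1: how far the bound sits above the truth."""
    return markov_bound(0.5, a) - exact_tail_uniform(a)

# Scan a grid on (0, 1) and locate the smallest gap numerically.
grid = [i / 10000 for i in range(1, 10000)]
a_star = min(grid, key=gap)

print(f"argmin ~ {a_star:.4f}, 1/sqrt(2) = {1 / math.sqrt(2):.4f}")
print(f"min gap ~ {gap(a_star):.4f}, sqrt(2) - 1 = {math.sqrt(2) - 1:.4f}")
```

Even at its best point the bound overshoots the true probability by about 0.41, which shows how crude Markov's inequality can be when only the mean is used.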

EXAMPLE: A post office handles, on average, 10,000 letters a day. What can be said about the probability that it will handle at least 15,000 letters tomorrow?


Solution: Let $X$ be the number of letters handled tomorrow. Then $E(X) = 10{,}000$. Further, by Markov’s inequality we get

$$P(X \ge 15{,}000) \le \frac{E(X)}{15{,}000} = \frac{10{,}000}{15{,}000} = \frac{2}{3}$$
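The arithmetic of this solution fits in a few lines of code. This is a sketch only: the function name `markov_bound` is hypothetical, and capping the bound at 1 is an added convenience rather than part of the theorem.

```python
from fractions import Fraction

def markov_bound(mean, a):
    """Upper bound E[X]/a on P{X >= a}; requires X >= 0 and a > 0."""
    if a <= 0:
        raise ValueError("a must be positive")
    # A probability never exceeds 1, so cap the bound there.
    return min(Fraction(1), Fraction(mean) / Fraction(a))

bound = markov_bound(10_000, 15_000)
print(bound)  # 2/3
```

Exact fractions are used so that the result reads as 2/3 rather than a rounded decimal.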

THEOREM (Chebyshev’s Inequality): Let $X$ be a random variable with finite mean $\mu$ and variance $\sigma^2$. Then for any $k > 0$ we have

$$P\{|X - \mu| \ge k\} \le \frac{\sigma^2}{k^2}$$


Proof: Note that $(X - \mu)^2 \ge 0$, so we may use Markov’s inequality. Apply it with $a = k^2$ to find that

$$P\{(X - \mu)^2 \ge k^2\} \le \frac{E[(X - \mu)^2]}{k^2} = \frac{\sigma^2}{k^2}$$

But $(X - \mu)^2 \ge k^2$ if and only if $|X - \mu| \ge k$, so

$$P\{|X - \mu| \ge k\} \le \frac{\sigma^2}{k^2}$$

REMARK: Note that if $k = a\sigma$ in Chebyshev’s inequality, then

$$P(|X - \mu| \ge a\sigma) \le \frac{1}{a^2}$$

This says that the probability of being at least $a$ standard deviations away from the expected value decays at least as fast as $1/a^2$. Of course, we also have

$$P(|X - \mu| < a\sigma) \ge 1 - \frac{1}{a^2}$$

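EXAMPLE: Let $X$ be uniform$(0, 1)$. Then for any $a > 0$ Chebyshev’s Inequality gives

$$P\left\{\left|X - \frac{1}{2}\right| < a\sigma\right\} \ge 1 - \frac{1}{a^2}$$

whereas

$$P\left\{\left|X - \frac{1}{2}\right| < a\sigma\right\} = P\left\{-a\sigma < X - \frac{1}{2} < a\sigma\right\} = P\left\{-a\sigma + \frac{1}{2} < X < a\sigma + \frac{1}{2}\right\}$$

$$= \int_{-a\sigma + 1/2}^{a\sigma + 1/2} dx = 2a\sigma = \frac{2a}{\sqrt{12}} = \frac{a}{\sqrt{3}}, \qquad 0 < a \le \sqrt{3}$$

One can show that $\frac{a}{\sqrt{3}} > 1 - \frac{1}{a^2}$ for any $a > 0$. Moreover, $f(a) = \frac{a}{\sqrt{3}} + \frac{1}{a^2} - 1$ attains its minimum on $(0, \infty)$ at $a = \sqrt[6]{12} = 1.513\ldots$ with

$$f\left(\sqrt[6]{12}\right) = 0.3103\ldots$$

REMARK: Note that $P\left\{\left|X - \frac{1}{2}\right| < a\sigma\right\} = 1$ if $a \ge \sqrt{3}$ and $1 - \frac{1}{a^2} \to 1$ as $a \to \infty$.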
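The comparison in the uniform example and the remark can be reproduced numerically. The sketch below uses illustrative names (`exact_prob`, `chebyshev_lower`, `f`) that are not from the notes, and a simple grid search instead of calculus.

```python
import math

SIGMA = 1 / math.sqrt(12)  # standard deviation of uniform(0, 1)

def exact_prob(a: float) -> float:
    """Exact P{|X - 1/2| < a*sigma} for X ~ uniform(0, 1)."""
    return min(1.0, 2 * a * SIGMA)

def chebyshev_lower(a: float) -> float:
    """Chebyshev's lower bound 1 - 1/a^2 on the same probability."""
    return 1.0 - 1.0 / a**2

def f(a: float) -> float:
    """f(a) = a/sqrt(3) + 1/a^2 - 1 from the example."""
    return a / math.sqrt(3) + 1 / a**2 - 1

grid = [i / 10000 for i in range(1, 50001)]  # a in (0, 5]
a_star = min(grid, key=f)
print(f"argmin ~ {a_star:.4f}, 12**(1/6) = {12 ** (1 / 6):.4f}")
print(f"f(argmin) ~ {f(a_star):.4f}")
```

Note that `exact_prob` is capped at 1 for $a \ge \sqrt{3}$, matching the remark, whereas `f` is the uncapped expression minimized in the example.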

EXAMPLE: A post office handles, on average, 10,000 letters per day, with a variance of 2,000. What can be said about the probability that this post office handles between 8,000 and 12,000 letters tomorrow? What about the probability that more than 15,000 letters come in?


Solution: We want

$$P(8{,}000 < X < 12{,}000) = P(-2{,}000 < X - 10{,}000 < 2{,}000)$$

$$= P(|X - 10{,}000| < 2{,}000) = 1 - P(|X - 10{,}000| \ge 2{,}000)$$

By Chebyshev’s inequality we have

$$P(|X - 10{,}000| \ge 2{,}000) \le \frac{\sigma^2}{(2{,}000)^2} = \frac{1}{2{,}000} = 0.0005$$

Thus,

$$P(8{,}000 < X < 12{,}000) \ge 1 - 0.0005 = 0.9995$$

Now to bound the probability that more than 15,000 letters come in. We have

$$P\{X \ge 15{,}000\} = P\{X - 10{,}000 \ge 5{,}000\} \le P\{(X - 10{,}000 \ge 5{,}000) \cup (X - 10{,}000 \le -5{,}000)\}$$

$$= P\{|X - 10{,}000| \ge 5{,}000\} \le \frac{2{,}000}{5{,}000^2} = \frac{1}{12{,}500} = 0.00008$$

Recall that the old bound (no information about variance) was 2/3.
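Both Chebyshev bounds in this solution follow from one formula, which the sketch below evaluates with exact fractions. The name `chebyshev_bound` is hypothetical, and the cap at 1 is an added convenience.

```python
from fractions import Fraction

def chebyshev_bound(variance, k):
    """Upper bound sigma^2 / k^2 on P{|X - mu| >= k}, capped at 1."""
    if k <= 0:
        raise ValueError("k must be positive")
    return min(Fraction(1), Fraction(variance) / Fraction(k) ** 2)

variance = 2_000  # variance from the example

p_outside = chebyshev_bound(variance, 2_000)
print(float(p_outside), float(1 - p_outside))  # 0.0005 0.9995

p_far = chebyshev_bound(variance, 5_000)
print(p_far, float(p_far))  # 1/12500 8e-05
```

Knowing the variance shrinks the bound on the 15,000-letter event from 2/3 to 1/12,500.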

EXAMPLE: Let $X$ be the outcome of a roll of a die. We know that

$$E[X] = 21/6 = 3.5 \quad\text{and}\quad \mathrm{Var}(X) = 35/12$$

Thus, by Markov,

$$0.167 \approx 1/6 = P(X \ge 6) \le \frac{E[X]}{6} = \frac{21/6}{6} = 21/36 \approx 0.583$$

Chebyshev gives

$$P(X \le 2 \text{ or } X \ge 5) = 2/3 = P(|X - 3.5| \ge 1.5) \le \frac{35/12}{1.5^2} \approx 1.296$$

which exceeds 1, so in this case the Chebyshev bound carries no information.
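The die example is small enough to verify by direct enumeration. The following sketch computes the mean, the variance, and both sides of each inequality exactly; all variable names are illustrative.

```python
from fractions import Fraction

faces = range(1, 7)
p = Fraction(1, 6)  # each face of a fair die

mean = sum(p * x for x in faces)               # 7/2
var = sum(p * (x - mean) ** 2 for x in faces)  # 35/12

# Markov: exact P(X >= 6) against E[X]/6.
exact_markov = sum(p for x in faces if x >= 6)
bound_markov = mean / 6

# Chebyshev: exact P(|X - 3.5| >= 1.5) against Var(X)/1.5^2.
k = Fraction(3, 2)
exact_cheb = sum(p for x in faces if abs(x - mean) >= k)
bound_cheb = var / k**2

print(exact_markov, bound_markov)  # 1/6 7/12
print(exact_cheb, bound_cheb)      # 2/3 35/27
```

Here 7/12 equals 21/36, and 35/27 is about 1.296, in agreement with the values in the example.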

THEOREM: If Var(X) = 0, then

P {X = E[X]} = 1

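Proof. We will use Chebyshev’s inequality. Let $E[X] = \mu$. Since $\sigma^2 = 0$, for any $n \ge 1$ Chebyshev’s inequality with $k = 1/n$ gives

$$P\left\{|X - \mu| \ge \frac{1}{n}\right\} \le n^2 \sigma^2 = 0, \quad\text{so}\quad P\left\{|X - \mu| \ge \frac{1}{n}\right\} = 0$$

Let $E_n = \left\{|X - \mu| \ge \frac{1}{n}\right\}$. Note that

$$E_1 \subseteq E_2 \subseteq \ldots \subseteq E_n \subseteq E_{n+1} \subseteq \ldots$$

so this is an increasing sequence of sets. Therefore,

$$0 = \lim_{n \to \infty} P\left\{|X - \mu| \ge \frac{1}{n}\right\} = P\left\{\lim_{n \to \infty} \left\{|X - \mu| \ge \frac{1}{n}\right\}\right\} = P\left\{\bigcup_{n=1}^{\infty} E_n\right\} = P\{X \ne \mu\}$$

Hence,

$$P\{X = \mu\} = 1 - P\{X \ne \mu\} = 1$$

THEOREM (The Weak Law of Large Numbers): Let $X_1, X_2, \ldots$ be a sequence of independent and identically distributed random variables, each having the finite mean $E[X_i] = \mu$. Then for any $\epsilon > 0$ we have

$$P\left\{\left|\frac{X_1 + \ldots + X_n}{n} - \mu\right| \ge \epsilon\right\} \to 0 \quad\text{as } n \to \infty$$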


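Proof: We will also assume that $\sigma^2$ is finite to make the proof easier. We know that

$$E\left[\frac{X_1 + \ldots + X_n}{n}\right] = \mu \quad\text{and}\quad \mathrm{Var}\left(\frac{X_1 + \ldots + X_n}{n}\right) = \frac{\sigma^2}{n}$$

Therefore, a direct application of Chebyshev’s inequality shows that

$$P\left\{\left|\frac{X_1 + \ldots + X_n}{n} - \mu\right| \ge \epsilon\right\} \le \frac{\sigma^2}{n\epsilon^2} \to 0 \quad\text{as } n \to \infty$$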
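The bound in this proof can be watched in action for a concrete distribution. The sketch below assumes fair coin flips ($X_i$ is 0 or 1 with probability 1/2, so $\mu = 1/2$ and $\sigma^2 = 1/4$), a choice made here for illustration only, and compares the exact tail probability with $\sigma^2/(n\epsilon^2)$. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def exact_tail(n: int, eps: Fraction) -> float:
    """Exact P{|S_n/n - 1/2| >= eps} for S_n ~ Binomial(n, 1/2)."""
    half = Fraction(1, 2)
    hits = sum(comb(n, k) for k in range(n + 1)
               if abs(Fraction(k, n) - half) >= eps)
    return hits / 2**n

def chebyshev_bound(n: int, eps: Fraction) -> float:
    """sigma^2 / (n eps^2) with sigma^2 = 1/4, capped at 1."""
    return float(min(Fraction(1), Fraction(1, 4) / (n * eps**2)))

eps = Fraction(1, 10)
for n in (10, 100, 1000):
    print(n, exact_tail(n, eps), chebyshev_bound(n, eps))
```

Both columns shrink as n grows; the exact tail does so much faster than the Chebyshev bound, which is all the proof needs. Fractions are used in the comparison so that boundary cases such as k/n = 0.4 are not lost to floating-point rounding.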

Statistics and Sample Sizes

Suppose that we want to estimate the mean µ of a random variable using a finite number of independent

samples from a population (IQ score, average income, average surface water temperature, etc.).

Major Question: How many samples do we need to be 95% sure our value is within .01 of the correct

value?

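Let $\mu$ be the mean and $\sigma^2$ be the variance and let

$$\bar{X} = \frac{X_1 + \ldots + X_n}{n}$$

be the sample mean. We know that

$$E[\bar{X}] = \mu, \qquad \mathrm{Var}(\bar{X}) = \mathrm{Var}\left(\frac{X_1 + \ldots + X_n}{n}\right) = \frac{\sigma^2}{n}$$

By Chebyshev we have

$$P\{|\bar{X} - \mu| \ge \epsilon\} \le \frac{\sigma^2}{\epsilon^2 n}$$

Now, we want an $n$ for which

$$P\{|\bar{X} - \mu| < .01\} \ge .95 \iff P\{|\bar{X} - \mu| \ge .01\} \le .05$$

Thus, it is sufficient to guarantee that

$$\frac{\sigma^2}{(.01)^2 n} \le .05 \implies n \ge \frac{\sigma^2}{(.01)^2 (.05)} = 200{,}000\,\sigma^2$$

To be 95% sure we are within .1 of the mean we need to satisfy

$$\frac{\sigma^2}{(.1)^2 n} \le .05 \implies n \ge \frac{\sigma^2}{(.1)^2 (.05)} = 2{,}000\,\sigma^2$$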
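The sample-size rule derived above is easy to package as a function. This is a minimal sketch; `chebyshev_sample_size` is a hypothetical name, and decimal inputs are passed as strings so that `Fraction` parses them exactly instead of inheriting binary floating-point error.

```python
import math
from fractions import Fraction

def chebyshev_sample_size(variance, eps, delta):
    """Smallest n with variance / (eps^2 * n) <= delta."""
    v, e, d = Fraction(variance), Fraction(eps), Fraction(delta)
    return math.ceil(v / (e**2 * d))

# With sigma^2 = 1, these reproduce the two coefficients in the text.
print(chebyshev_sample_size(1, "0.01", "0.05"))  # 200000
print(chebyshev_sample_size(1, "0.1", "0.05"))   # 2000
```

Relaxing the tolerance by a factor of 10 cuts the required sample size by a factor of 100, since n scales like $1/\epsilon^2$.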

Section 8.3 The Central Limit Theorem

EXAMPLE: Consider rolling a die 100 times (each $X_i$ is the outcome of one roll) and adding the outcomes. We will get around 350, plus or minus some. If we repeat this experiment 10,000 times and plot the number of times each total occurs, the graph looks like a bell curve.
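The bell shape in the dice example can also be checked without running repeated experiments, by computing the exact distribution of the total through repeated convolution. The sketch below is one way to do that; the function name `sum_distribution` and the interval 330 to 370 are illustrative choices, not part of the notes.

```python
from statistics import NormalDist

def sum_distribution(rolls: int) -> dict[int, float]:
    """Exact pmf of the total of `rolls` fair six-sided dice."""
    pmf = {0: 1.0}
    for _ in range(rolls):
        nxt: dict[int, float] = {}
        for total, prob in pmf.items():
            for face in range(1, 7):
                nxt[total + face] = nxt.get(total + face, 0.0) + prob / 6
        pmf = nxt
    return pmf

pmf = sum_distribution(100)
mean = sum(s * p for s, p in pmf.items())
var = sum((s - mean) ** 2 * p for s, p in pmf.items())
print(f"mean = {mean:.3f}, variance = {var:.3f}")

# Compare P(330 <= total <= 370) with a normal curve of the same mean
# and variance (the 0.5 offsets are a continuity correction).
exact = sum(p for s, p in pmf.items() if 330 <= s <= 370)
normal = NormalDist(mean, var ** 0.5)
approx = normal.cdf(370.5) - normal.cdf(329.5)
print(f"exact = {exact:.4f}, normal = {approx:.4f}")
```

The mean comes out at 350 and the variance at 100 · 35/12, and the exact and normal probabilities agree closely.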

EXAMPLE: Go to a library and go to the stacks. Each row of books is divided into $n \gg 1$ pieces. Let $X_i$ be the number of books on piece $i$. Then $\sum_{i=1}^{n} X_i$ is the number of books on a given row. Do this for all rows of the same length. You will get a plot that looks like a bell curve.

Recall, if $X_1, X_2, \ldots$ are independent and identically distributed random variables with mean $\mu$, then by the weak law of large numbers we have that

$$\frac{1}{n}[X_1 + \ldots + X_n] \to \mu$$

or

$$\frac{1}{n}[X_1 + \ldots + X_n - n\mu] \to 0$$

In a sense, this says that scaling by $n$ was “too much.” Why? Note that the random variable

$$X_1 + X_2 + \ldots + X_n - n\mu$$

has mean zero and variance

$$\mathrm{Var}(X_1 + \ldots + X_n) = n\,\mathrm{Var}(X) = n\sigma^2$$

Therefore,

$$\frac{X_1 + \ldots + X_n - n\mu}{\sigma\sqrt{n}}$$

has mean zero and variance one.

THEOREM (Central Limit Theorem): Let $X_1, X_2, \ldots$ be independent and identically distributed random variables with mean $\mu$ and variance $\sigma^2$. Then the distribution of

$$\frac{X_1 + \ldots + X_n - n\mu}{\sigma\sqrt{n}}$$

tends to the standard normal as $n \to \infty$. That is, for $-\infty < a < \infty$ we have

$$P\left\{\frac{X_1 + \ldots + X_n - n\mu}{\sigma\sqrt{n}} \le a\right\} \to \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{a} e^{-x^2/2}\,dx \quad\text{as } n \to \infty$$

To prove the theorem, we need the following:

LEMMA: Let $Z_1, Z_2, \ldots$ be a sequence of random variables with distribution functions $F_{Z_n}$ and moment generating functions $M_{Z_n}$, $n \ge 1$; and let $Z$ be a random variable with a distribution function $F_Z$ and a moment generating function $M_Z$. If

$$M_{Z_n}(t) \to M_Z(t)$$

for all $t$, then $F_{Z_n}(t) \to F_Z(t)$ for all $t$ at which $F_Z(t)$ is continuous.

REMARK: Note that if $Z$ is a standard normal, then

$$M_Z(t) = e^{t^2/2}$$

and its distribution function $F_Z = \Phi$ is continuous everywhere, so we can ignore that condition in our case. Thus, we now see that if

$$M_{Z_n}(t) \to e^{t^2/2} \quad\text{as } n \to \infty$$

for each $t$, then

$$F_{Z_n}(t) \to \Phi(t)$$

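Proof: Assume that $\mu = 0$ and $\sigma^2 = 1$. Let $M(t)$ denote the moment generating function of the common distribution. The moment generating function of $X_i/\sqrt{n}$ is

$$E\left[e^{tX_i/\sqrt{n}}\right] = M\left(\frac{t}{\sqrt{n}}\right)$$

Therefore, the moment generating function of the sum $\sum_{i=1}^{n} \frac{X_i}{\sqrt{n}}$ is $\left[M\left(\frac{t}{\sqrt{n}}\right)\right]^n$. We need

$$\left[M\left(\frac{t}{\sqrt{n}}\right)\right]^n \to e^{t^2/2} \iff n \ln M\left(\frac{t}{\sqrt{n}}\right) \to \frac{t^2}{2}$$

Let

$$L(t) = \ln M(t)$$

Note that

$$L(0) = \ln M(0) = 0, \qquad L'(0) = \frac{M'(0)}{M(0)} = \mu = 0$$

and

$$L''(0) = \frac{M''(0)M(0) - M'(0)^2}{[M(0)]^2} = E[X^2] - (E[X])^2 = 1$$

Putting pieces together, we get

$$\ln \left[M\left(\frac{t}{\sqrt{n}}\right)\right]^n = n \ln M\left(\frac{t}{\sqrt{n}}\right) = nL\left(\frac{t}{\sqrt{n}}\right)$$

Finally, we obtain

$$\lim_{n \to \infty} \frac{L(t/\sqrt{n})}{n^{-1}} = \lim_{n \to \infty} \frac{-L'(t/\sqrt{n})\,n^{-3/2}\,t}{-2n^{-2}} \qquad \text{(by L’Hopital’s rule)}$$

$$= \lim_{n \to \infty} \frac{L'(t/\sqrt{n})\,t}{2n^{-1/2}}$$

$$= \lim_{n \to \infty} \frac{-L''(t/\sqrt{n})\,t^2\,n^{-3/2}}{-2 \cdot 2 \cdot (1/2) \cdot n^{-3/2}} \qquad \text{(by L’Hopital’s rule)}$$

$$= \lim_{n \to \infty} \frac{L''(t/\sqrt{n})\,t^2}{2} = \frac{t^2}{2}$$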
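The limit just computed can be observed numerically for a specific distribution. The sketch below assumes $X = \pm 1$ with probability 1/2 each (mean 0, variance 1), for which $M(t) = \cosh t$; this distribution is chosen here for illustration and is not singled out in the notes. The function names are hypothetical.

```python
import math

def L(t: float) -> float:
    """L(t) = ln M(t) for X = +1 or -1 with probability 1/2; M(t) = cosh(t)."""
    return math.log(math.cosh(t))

def n_L(t: float, n: int) -> float:
    """n * L(t / sqrt(n)): the log of the mgf of (X_1 + ... + X_n)/sqrt(n)."""
    return n * L(t / math.sqrt(n))

t = 1.5
for n in (1, 10, 100, 10_000, 1_000_000):
    print(n, n_L(t, n), t**2 / 2)
```

The middle column approaches $t^2/2 = 1.125$ as n grows, which is the convergence the L’Hopital computation establishes in general.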

So, we are done if $\mu = 0$ and $\sigma^2 = 1$. For the general case, just consider

$$X_i^* = \frac{X_i - \mu}{\sigma}$$

which has mean zero and variance one. Then,

$$P\left\{\frac{X_1^* + \ldots + X_n^*}{\sqrt{n}} \le t\right\} \to \Phi(t) \implies P\left\{\frac{X_1 + \ldots + X_n - n\mu}{\sigma\sqrt{n}} \le t\right\} \to \Phi(t)$$

which is the general result.

EXAMPLE: An astronomer is measuring the distance to a star. Because of different errors, each measurement will not be precisely correct, but merely an estimate. He will therefore make a series of measurements and use the average as his estimate of the distance. He believes his measurements are independent and identically distributed with mean $d$ and variance 4. How many measurements does he need to make to be 95% sure his estimate is accurate to within ±.5 light-years?

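Solution: We let the observations be denoted by $X_i$. From the central limit theorem we know that

$$Z_n = \frac{\sum_{i=1}^{n} X_i - nd}{2\sqrt{n}}$$

has approximately a standard normal distribution. Therefore, for a fixed $n$ we have

$$P\left\{-.5 \le \frac{1}{n}\sum_{i=1}^{n} X_i - d \le .5\right\} = P\left\{-.5\,\frac{\sqrt{n}}{2} \le Z_n \le .5\,\frac{\sqrt{n}}{2}\right\}$$

$$\approx \Phi\left(\frac{\sqrt{n}}{4}\right) - \Phi\left(-\frac{\sqrt{n}}{4}\right) = 2\Phi\left(\frac{\sqrt{n}}{4}\right) - 1$$

To be 95% certain we want to find $n^*$ so that

$$2\Phi\left(\frac{\sqrt{n^*}}{4}\right) - 1 = .95 \quad\text{or}\quad \Phi\left(\frac{\sqrt{n^*}}{4}\right) = .975$$

Using the chart we see that

$$\frac{\sqrt{n^*}}{4} = 1.96 \quad\text{or}\quad n^* = (7.84)^2 = 61.47$$

Thus, he should make 62 observations.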
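The chart lookup can be replaced by the inverse normal CDF from Python's standard library. This is a sketch under the same normal approximation as the solution; `clt_sample_size` and its parameter names are illustrative.

```python
import math
from statistics import NormalDist

def clt_sample_size(sigma: float, half_width: float, confidence: float) -> int:
    """Smallest n with 2*Phi(half_width*sqrt(n)/sigma) - 1 >= confidence."""
    z = NormalDist().inv_cdf((1 + confidence) / 2)  # about 1.96 for 95%
    return math.ceil((z * sigma / half_width) ** 2)

# Variance 4 means sigma = 2; the target accuracy is +/- 0.5 light-years.
print(clt_sample_size(sigma=2.0, half_width=0.5, confidence=0.95))  # 62
```

The answer depends on how well the normal approximation holds at n = 62, which the central limit theorem by itself does not quantify.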

It’s tricky to know how good this approximation is. However, we could also use Chebyshev’s inequality if we want a guaranteed bound. Since

$$E\left[\frac{1}{n}\sum_{i=1}^{n} X_i\right] = d \quad\text{and}\quad \mathrm{Var}\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) = \frac{4}{n}$$

Chebyshev’s inequality yields

$$P\left\{\left|\frac{1}{n}\sum_{i=1}^{n} X_i - d\right| > .5\right\} \le \frac{4}{n(.5)^2} = \frac{16}{n}$$

Therefore, to guarantee the above probability is less than or equal to .05, we need to solve

$$\frac{16}{n} = .05 \implies n = \frac{16}{.05} = 320$$

That is, if he makes 320 observations, he can be 95% sure his value is within ±.5 light-years of the true

value.

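EXAMPLE: Let $X_1, X_2, \ldots$ be independent and identically distributed random variables with mean $\mu$ and standard deviation $\sigma$. Set $S_n = X_1 + \ldots + X_n$. For a large $n$, what is the approximate probability that $S_n$ is between $E[S_n] - k\sigma_{S_n}$ and $E[S_n] + k\sigma_{S_n}$ (the probability of being within $k$ standard deviations of the mean)?

Solution: We have

$$E[S_n] = E[X_1 + \ldots + X_n] = n\mu \quad\text{and}\quad \sigma_{S_n} = \sqrt{\mathrm{Var}(S_n)} = \sqrt{\mathrm{Var}(X_1 + \ldots + X_n)} = \sigma\sqrt{n}$$

Thus, letting $Z \sim N(0, 1)$, we get

$$P\{E[S_n] - k\sigma_{S_n} \le S_n \le E[S_n] + k\sigma_{S_n}\} = P\{n\mu - k\sigma\sqrt{n} \le S_n \le n\mu + k\sigma\sqrt{n}\}$$

$$= P\{-k\sigma\sqrt{n} \le S_n - n\mu \le k\sigma\sqrt{n}\}$$

$$= P\left\{-k \le \frac{S_n - n\mu}{\sigma\sqrt{n}} \le k\right\}$$

$$\approx P(-k \le Z \le k) = \frac{1}{\sqrt{2\pi}} \int_{-k}^{k} e^{-x^2/2}\,dx = \begin{cases} .6827 & k = 1 \\ .9545 & k = 2 \\ .9973 & k = 3 \\ .9999367 & k = 4 \end{cases}$$

Compare: Chebyshev’s inequality gives

$$P\{|S_n - n\mu| < k\sigma\sqrt{n}\} = 1 - P\{|S_n - n\mu| \ge k\sigma\sqrt{n}\} \ge 1 - \frac{\sigma^2 n}{k^2 \sigma^2 n} = 1 - \frac{1}{k^2} = \begin{cases} 0 & k = 1 \\ .75 & k = 2 \\ .8889 & k = 3 \\ .9375 & k = 4 \end{cases}$$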
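The two columns of values in this comparison can be regenerated from the error function in the standard library, using the identity $P(-k \le Z \le k) = \operatorname{erf}(k/\sqrt{2})$. A short sketch follows, with illustrative function names.

```python
import math

def normal_within(k: float) -> float:
    """P(-k <= Z <= k) for Z ~ N(0, 1), using erf."""
    return math.erf(k / math.sqrt(2))

def chebyshev_within(k: float) -> float:
    """Chebyshev's lower bound 1 - 1/k^2, floored at 0."""
    return max(0.0, 1 - 1 / k**2)

for k in (1, 2, 3, 4):
    print(f"k={k}: normal ~ {normal_within(k):.7f}, "
          f"Chebyshev >= {chebyshev_within(k):.4f}")
```

The normal approximation is far tighter, but only Chebyshev's values are guaranteed for every distribution with finite variance and every n.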

Section 8.4 The Strong Law of Large Numbers

THEOREM (The Strong Law of Large Numbers): Let $X_1, X_2, \ldots$ be a sequence of independent and identically distributed random variables, each having a finite mean $\mu = E[X_i]$ and finite fourth moment $E[X_i^4] = K$. Then, with probability one,

$$\frac{X_1 + \ldots + X_n}{n} \to \mu \quad\text{as } n \to \infty$$

Compare: By the Weak Law we have

$$P\left\{\left|\frac{X_1 + \ldots + X_n}{n} - \mu\right| \ge \epsilon\right\} \to 0 \quad\text{as } n \to \infty$$

Imagine you are rolling a die and you are tracking

$$\bar{X}_n = \frac{X_1 + \ldots + X_n}{n}$$

The Weak Law says that for large $n$, the probability that $\bar{X}_n$ is more than $\epsilon$ away from $\mu$ goes to zero. However, we could still have

$$|\bar{X}_{100} - \mu| > \epsilon, \quad |\bar{X}_{1000} - \mu| > \epsilon, \quad |\bar{X}_{10000} - \mu| > \epsilon, \quad |\bar{X}_{100000} - \mu| > \epsilon, \quad\text{etc.,}$$

it’s just that it happens very rarely.

The Strong Law says that, with probability one, for a given $\epsilon > 0$ there is an $N$ such that for all $n > N$

$$|\bar{X}_n - \mu| \le \epsilon$$

Proof: As we did in the proof of the central limit theorem, we begin by assuming that the mean of the random variables is zero. That is,

$$E[X_i] = 0$$

Now we let

$$S_n = X_1 + \ldots + X_n$$

and consider

$$E[S_n^4] = E[(X_1 + \ldots + X_n)^4]$$

Expanding the quartic results in terms of the form

$$X_i^4, \quad X_i^3 X_j, \quad X_i^2 X_j^2, \quad X_i^2 X_j X_k, \quad X_i X_j X_k X_\ell$$

However, we know that the $X_i$ are independent and have a mean of zero. Therefore, many of these terms have zero expectation:

$$E[X_i^3 X_j] = E[X_i^3]E[X_j] = 0$$

$$E[X_i^2 X_j X_k] = E[X_i^2]E[X_j]E[X_k] = 0$$

$$E[X_i X_j X_k X_\ell] = E[X_i]E[X_j]E[X_k]E[X_\ell] = 0$$

Therefore, the only terms that remain are those of the form

$$X_i^4, \quad X_i^2 X_j^2$$

Obviously, there are exactly $n$ terms of the form $X_i^4$. As for the other, we note that for each pair of $i$ and $j$, there are $\binom{4}{2} = 6$ ways to choose $i$ two times from the four. Thus, each $X_i^2 X_j^2$ will appear 6 times. Also, there are $\binom{n}{2}$ ways to choose 2 objects from $n$, so the expansion is

$$E[S_n^4] = nE[X_i^4] + 6\binom{n}{2}E[X_i^2]E[X_j^2] = nK + 3n(n - 1)(E[X_i^2])^2 \tag{1}$$

We have

$$0 \le \mathrm{Var}(X_i^2) = E[X_i^4] - (E[X_i^2])^2$$

and so

$$(E[X_i^2])^2 \le E[X_i^4] = K$$

Therefore, by (1) we get

$$E[S_n^4] \le nK + 3n(n - 1)K$$

or

$$E\left[\frac{S_n^4}{n^4}\right] \le \frac{K}{n^3} + \frac{3K}{n^2}$$

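Hence,

$$E\left[\sum_{n=1}^{\infty} \frac{S_n^4}{n^4}\right] = \sum_{n=1}^{\infty} E\left[\frac{S_n^4}{n^4}\right] \le \sum_{n=1}^{\infty} \left(\frac{K}{n^3} + \frac{3K}{n^2}\right) < \infty$$

Could

$$\sum_{n=1}^{\infty} \frac{S_n^4}{n^4} = \infty$$

with probability $p > 0$? If so, then the expected value would be infinite, which we know it isn’t. Therefore, with a probability of one we have that

$$\sum_{n=1}^{\infty} \frac{S_n^4}{n^4} < \infty$$

But the terms of a convergent series must tend to zero, so, with a probability of one,

$$\frac{S_n^4}{n^4} \to 0 \quad\text{as } n \to \infty$$

or

$$\frac{S_n}{n} \to 0 \quad\text{as } n \to \infty$$

which is precisely what we wanted to prove.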

In the case when $\mu \ne 0$, we apply the preceding argument to $X_i - \mu$ to show that with a probability of one we have

$$\lim_{n \to \infty} \sum_{i=1}^{n} \frac{X_i - \mu}{n} = 0$$

or, equivalently,

$$\lim_{n \to \infty} \sum_{i=1}^{n} \frac{X_i}{n} = \mu$$

which proves the result.
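Equation (1) in the proof of the Strong Law can be sanity-checked by brute force for a simple mean-zero distribution. The sketch below assumes $X_i = \pm 1$ with probability 1/2 each, so that $K = E[X_i^4] = 1$ and $E[X_i^2] = 1$; this choice and the function names are illustrative only.

```python
from fractions import Fraction
from itertools import product

def fourth_moment_exact(n: int) -> Fraction:
    """E[S_n^4] by enumerating all 2^n equally likely sign patterns."""
    total = sum(sum(signs) ** 4 for signs in product((-1, 1), repeat=n))
    return Fraction(total, 2**n)

def fourth_moment_formula(n: int, K: int = 1, second_moment: int = 1) -> int:
    """n*K + 3n(n-1)(E[X^2])^2 from equation (1)."""
    return n * K + 3 * n * (n - 1) * second_moment**2

for n in range(1, 9):
    print(n, fourth_moment_exact(n), fourth_moment_formula(n))
```

The two columns agree for every n tried, which supports the counting argument (n terms of the form $X_i^4$ and $6\binom{n}{2}$ terms of the form $X_i^2 X_j^2$).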