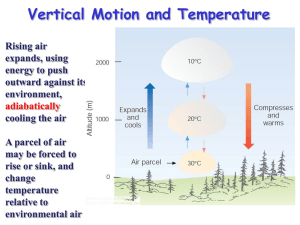

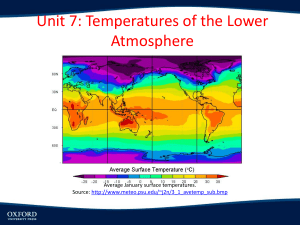

MODELING LAPSE RATES: Investigating the Variables that Drive
