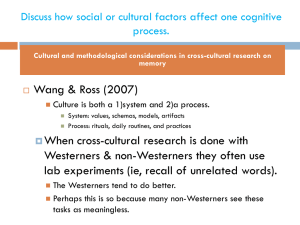

Do better schools lead to more growth? Cognitive skills, economic
