ARTICLE IN PRESS

+

MODEL

Radiography (2008) xx, 1–7

available at www.sciencedirect.com

journal homepage: www.elsevier.com/locate/radi

Radiographic interpretation of the appendicular skeleton: A comparison between casualty officers, nurse practitioners and radiographers

Liz Coleman a,*, Keith Piper b

a Radiology Department, Royal Surrey County Hospital, Egerton Road, Guildford, Surrey GU2 7XX, United Kingdom

b Allied Health Professions Department, Canterbury Christ Church University, Canterbury, Kent CT1 1QU, United Kingdom

Received 25 October 2007; revised 27 November 2007; accepted 5 December 2007

KEYWORDS

Image interpretation;

Appendicular skeleton;

AFROC;

Casualty officer;

Nurse practitioner;

Radiographer

Abstract Aim: To assess how accurately and confidently casualty officers, nurse practitioners and radiographers practising within the emergency department (ED) recognise and describe radiographic trauma within an image test bank of 20 appendicular radiographs.

Method: The participants consisted of 7 casualty officers, 13 nurse practitioners and 18 radiographers. All 20 radiographic examinations selected for the image test bank had been acquired following trauma and included some subtle, yet clinically significant, abnormalities. The test bank score (maximum 40 marks) and sensitivity and specificity percentages were calculated against an agreed radiological diagnosis (reference standard). Alternative Free-response Receiver Operating Characteristic (AFROC) analysis was used to assess the overall diagnostic accuracy of these professional groups. Variation in performance between the groups was measured using analysis of variance (ANOVA) to identify any statistically significant differences in interpretation performance. The relationship between the participants’ perceived image interpretation accuracy during clinical practice and their actual image test bank score was examined using Pearson’s correlation coefficient (r).

Results: The radiographers gained the highest mean test bank score (28.5/40; 71%). This score was significantly higher than the mean test bank scores attained by the participating nurse practitioners (21/40; 53%) and casualty officers (21.5/40; 54%), with p < 0.01 and p = 0.02, respectively. When compared with each other, the scores of these latter groups showed no significant difference (p = 0.91). The mean ‘area under the curve’ (AUC) value achieved by the radiographers was also significantly higher (p < 0.01) than the AUC values of the nurse practitioners and casualty officers, whose results did not differ significantly from each other (p = 0.94). The radiographers’ results demonstrated a moderate positive correlation (r = 0.51) between their clinical practice estimations and their actual image test bank scores (p = 0.02); however, no significant correlation was found for the nurse practitioners (r = 0.41, p = 0.16) or casualty officers (r = 0.07, p = 0.87).

Conclusion: The scores and values achieved by the radiographers were significantly higher than those of the participating nurse practitioners and, for most measures, the casualty officers. The results of this research suggest that radiographers are able to formally use their knowledge of image interpretation by providing the ED with a written comment (initial interpretation) to assist in radiographic diagnosis, and that such comments could therefore replace the ambiguous ‘red dot’ system used to highlight abnormal radiographs.

* Corresponding author. Tel.: +44 1483 571122x4165.

E-mail address: lizcoleman@hotmail.co.uk (L. Coleman).

1078-8174/$ - see front matter © 2007 The College of Radiographers. Published by Elsevier Ltd. All rights reserved.

doi:10.1016/j.radi.2007.12.001

Please cite this article in press as: Coleman L, Piper K, Radiographic interpretation of the appendicular skeleton: A comparison between casualty officers, nurse practitioners and radiographers, Radiography (2008), doi:10.1016/j.radi.2007.12.001

Introduction

The majority of Emergency Department (ED) X-ray departments within the United Kingdom (UK) operate a ‘red dot’ scheme to highlight radiographic trauma to the referring practitioner.1 However, unless the radiographic trauma is obvious, the referring practitioner may need the radiographer to clarify or identify the abnormality indicated by the red dot. As a result, some radiology departments now provide ED practitioners with a written opinion of the radiographic appearances.2,3 This initiative is strongly supported by the Society and College of Radiographers (SCoR, UK), who stated in 2004 (p. 4) that “…the widespread and popular implementation of ‘red dot’ systems should now be encouraged to evolve into an expectation that a first line interpretation (e.g. radiographer comment) should be a standard expectation of all radiographers, incorporated in pre-registration training and become accepted as normal practice”.4

The evolution of the nurse practitioner’s role enables these professionals to hold a more autonomous post that involves the assessment, treatment and discharge of patients, extending the nursing role beyond conventional boundaries.5,6 This has increased the number of professional groups that request and interpret ED radiographs as part of their normal working practice, a role traditionally undertaken by a senior house officer (SHO) or casualty officer. Many nurse practitioners now have an established role within the ED; however, there is at present no requirement for them to gain further qualifications.7 Nor is there a nationally accepted training scheme for SHOs beginning their rotation within the ED.8 Yet the diagnosis and subsequent treatment of the patient often rely upon the accuracy of the ED practitioners’ radiographic interpretation.

Performance studies to date that have compared the image interpretation accuracy of casualty officers and nurse practitioners reveal little, if any, difference in accuracy between these professional groups.9–12 These papers generally regarded the casualty officers as the ‘benchmark comparator’ and appeared to imply that nurse practitioners who achieved comparable accuracy possessed a satisfactory and safe level of practice in image interpretation.1

Over 20 years ago, a study by Berman et al.13 compared the image interpretation ability of casualty officers and radiographers. The casualty officers achieved a mean accuracy of 88.9%, similar to that achieved by the radiographers (87.4%). Since then, most of the published research appears to report on the image interpretation accuracy of radiographers alone or in comparison with radiologists.14,15 A more recent study by Piper and Paterson16 compared the image interpretation ability of radiographers and nurses (working in either the ED or a Minor Injuries Unit (MIU)), yet to date no published study has compared the performance of casualty officers, nurse practitioners and radiographers.

Aims

To investigate:

1. whether there was any significant difference in performance between the casualty officers, nurse practitioners and radiographers when interpreting a bank of 20 appendicular skeleton (trauma) images, in terms of:
(a) the image test bank score, sensitivity and specificity;
(b) the area under the curve (AUC, or A1) generated following Alternative Free-response Receiver Operating Characteristic (AFROC) analysis;

2. whether there was any correlation between confidence and performance in each of the three professional groups, in terms of their perceived level of accuracy during normal working practice and the image test bank score demonstrated during this study.

Methodology

Ethical approval

Appropriate approval for this research to be undertaken was obtained from the Research and Development (R&D) department within the NHS Trust and from the Local Research Ethics Committee (LREC).

Radiograph selection

The 20 skeletal examinations selected for the test bank (Table 1) were similar to those used in two previous studies.10,16 The cases were drawn from an image library within the radiology department of an NHS trust in South East England. The original anonymised reports were retained for all selected images, and three further reports were obtained from a consultant radiologist with many years of skeletal reporting experience, a senior radiology registrar and an advanced practitioner radiographer with five years of plain film reporting experience. A consensus diagnosis was reached for every image, and an expected answer (reference standard) was generated for each case. A case was defined as abnormal if a clinically significant radiographic finding was apparent, as outlined in Table 1. Cases 6, 8, 9, 12, 19 and 20 were classified as normal. All of these decisions were agreed before the images were viewed by the study participants.

Table 1
Radiographic test bank

1. Salter-Harris I fracture of distal radius
2. Minimally displaced fracture at the base of second proximal phalanx
3. Comminuted fracture at the base of fifth metacarpal with displaced fractures at the base of fourth and fifth distal phalanges
4. Dislocation of calcaneocuboid joint
5. Perilunate dislocation and scaphoid fracture
6. Normal thumb
7. Calcaneal fracture
8. Normal elbow
9. Normal hand
10. Elbow with elevated fat pads, no fracture (lateral view)
11. Minimally displaced Colles’ fracture of distal radius
12. Normal ankle of 14-year-old
13. Buckle fracture of distal radius
14. Undisplaced posterior malleolar fracture
15. Lipohaemarthrosis on knee film (lateral view only)
16. Fracture of third metatarsal
17. Fracture of neck of fourth metacarpal
18. Dislocation of first carpometacarpal joint
19. Normal foot of 14-year-old
20. Normal shoulder

The examinations were acquired following trauma and comprised radiographs of the arm, distal to and inclusive of the shoulder, and of the leg, distal to and inclusive of the knee. The test bank included images that demonstrated radiographic trauma outside the primary area of interest, as well as images with more than one radiographic injury, in order to test for observers’ ‘satisfaction of search’ and ‘failure to search’ errors.17,18 To be consistent with normal clinical practice, participants were informed that some of the images were normal.

Observer groups

A total of 38 participants, all based in the same district general hospital, completed the image test bank: 18 radiographers (Bands 5 and 6), 13 nurse practitioners and one cohort of 7 casualty officers. All the radiographers rotate within the ED X-ray department and participate in the ‘red dot’ scheme. All the nurse practitioners in the study request and interpret radiographs. The sample demographics showed that 67% of the radiographers had five or more years of radiography experience, compared with 31% of the nurse practitioners who had held their current position for the equivalent length of time.

Data collection

All participants completed the image test bank independently. Each participant was issued with a numbered answer booklet and an additional information sheet. Patient details were removed from each image; the images were numbered, and each was accompanied by a brief clinical history under the corresponding number in the answer booklet. The clinical history was based on that provided at the time of the original referral and identified the symptomatic anatomical area on the corresponding image.

Participants were asked to specify whether each radiographic examination was normal or abnormal and to give the certainty of their decision on a five-point scale (definitely normal, probably normal, possibly abnormal, probably abnormal or definitely abnormal). They were also instructed that all answers other than ‘definitely normal’ or ‘probably normal’ were to be supported by text identifying the nature and location of the abnormality.

Before the image interpretation began, participants were asked to state their present position, title and grade and then to rate, on a scale of 1–10, how accurate they perceived their image interpretation skills to be during normal working practice. The answer booklets were randomised before marking and the additional information sheets were withheld at this stage, ensuring that the scorers were blind to the profession of individual participants. All answer booklets were marked by the first author (LC), an experienced radiographer specialising in plain film reporting, and the first 10% of the answer booklets were also marked independently by a second scorer (KP), an experienced academic radiographer and the director of established postgraduate radiography programmes. Application of the scoring system produced clear agreement between the scorers; any discrepancies or queries that arose were discussed and resolved to maintain consistency.

Data analysis

Test bank scores

The films were marked using a scoring system employed in similar studies.10,16,19 A maximum of two marks was available for each question. Full marks were awarded if the image was correctly classified as normal or abnormal and, for abnormal images, if both the location and the description of the radiographic abnormality or abnormalities were correct. One mark was awarded when the answer was partially correct.16 No marks were deducted for poor spelling or abbreviations. A maximum total of 40 marks per participant was therefore possible.
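The marking scheme above amounts to a simple sum. The sketch below is illustrative only: the function names and the per-case mark list are hypothetical and are not taken from the study, whose answer booklets were marked by hand.

```python
# Hypothetical sketch of the test bank marking scheme: each of the 20 cases
# earns 2 marks (fully correct), 1 mark (partially correct) or 0 marks.
N_CASES = 20
MARKS_PER_CASE = 2
MAX_SCORE = N_CASES * MARKS_PER_CASE  # 40 marks


def total_marks(case_marks):
    """Sum the marks awarded to one participant across all test bank cases."""
    if len(case_marks) != N_CASES:
        raise ValueError(f"expected {N_CASES} cases, got {len(case_marks)}")
    for mark in case_marks:
        if mark not in (0, 1, 2):
            raise ValueError(f"invalid mark {mark!r}; must be 0, 1 or 2")
    return sum(case_marks)


def score_percentage(score):
    """Express a score (possibly a fractional group mean) as a % of 40."""
    return 100 * score / MAX_SCORE


if __name__ == "__main__":
    marks = [2] * 12 + [1] * 5 + [0] * 3  # invented example participant
    score = total_marks(marks)
    print(score, score_percentage(score))  # 29 72.5
```

For example, 12 fully correct, 5 partially correct and 3 incorrect answers give 29/40 (72.5%); a group mean of 28.5/40 corresponds to 71.25%, which is reported as 71%.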

Comparison of estimated and actual accuracy

The relationship between the participants’ perceived accuracy during clinical practice and the actual accuracy of their image test bank score was examined using Pearson’s correlation coefficient (r), and its significance was calculated.20
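As an illustration of how r and its significance can be obtained, the following sketch computes Pearson’s r and a two-tailed p value from the statistic t = r·sqrt(n − 2)/sqrt(1 − r²) with n − 2 degrees of freedom. The study does not state which software it used for this step, so this is a sketch under assumptions: the helper names are hypothetical, and the Student t tail area is approximated by Simpson’s rule integration rather than taken from a statistics library.

```python
import math


def pearson_r(x, y):
    """Pearson's product-moment correlation coefficient for paired samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when a sample has zero variance")
    return sxy / math.sqrt(sxx * syy)


def t_pdf(t, df):
    """Student t probability density, using log-gamma to avoid overflow."""
    log_coef = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_coef) * (1 + t * t / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p = 1 - 2 * (area under the t density on [0, |t|]).

    The area is approximated with Simpson's rule; steps must be even.
    """
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return min(1.0, max(0.0, 1.0 - 2.0 * area))


def p_value_from_r(r, n):
    """Significance of r via t = r * sqrt(n - 2) / sqrt(1 - r^2)."""
    if abs(r) >= 1.0:
        return 0.0
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r * r)
    return two_tailed_p(t, df)


if __name__ == "__main__":
    # Nurse practitioners in this study: r = 0.41 with n = 13 participants.
    print(round(p_value_from_r(0.41, 13), 2))  # approximately 0.16
```

With the nurse practitioners’ values (r = 0.41, n = 13, hence 11 degrees of freedom) the sketch gives p of about 0.16, in line with the value reported for that group.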


Sensitivity and specificity

The accuracy of a diagnostic test is commonly described in terms of its sensitivity and specificity.21 Sensitivity is the proportion of positive (abnormal) cases correctly identified as abnormal; conversely, specificity is the proportion of negative (normal) cases correctly identified as normal.22 Accuracy is defined as the number of true positives plus true negatives divided by the total number of results.23 To enable these calculations, each response was classified as a true positive (TP), true negative (TN), false positive (FP) or false negative (FN).18 Partially correct answers were awarded fractional classifications (for instance, 1/2 TP and 1/2 FN).19 This occurred when a radiographic abnormality was detected or described but the observer failed to identify all the key elements agreed in the expected answer (reference standard). Where partial marks were awarded, the constituent partial marks amounted to one full mark.
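The fractional classification described above can be sketched as follows. This is a hypothetical illustration rather than the study’s own tool: the `Tally` class and the example counts are invented, although the split of 14 abnormal and 6 normal cases follows Table 1.

```python
from dataclasses import dataclass


@dataclass
class Tally:
    """Hypothetical running totals of (possibly fractional) TP/TN/FP/FN."""
    tp: float = 0.0
    tn: float = 0.0
    fp: float = 0.0
    fn: float = 0.0

    def add(self, tp=0.0, tn=0.0, fp=0.0, fn=0.0):
        """Record one response; its parts must add up to one full mark."""
        if abs((tp + tn + fp + fn) - 1.0) > 1e-9:
            raise ValueError("the parts of one response must sum to 1")
        self.tp += tp
        self.tn += tn
        self.fp += fp
        self.fn += fn

    def sensitivity(self):
        return self.tp / (self.tp + self.fn)

    def specificity(self):
        return self.tn / (self.tn + self.fp)

    def accuracy(self):
        return (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn)


if __name__ == "__main__":
    tally = Tally()
    # 14 abnormal cases: 8 fully correct, 2 partially correct, 4 missed.
    for _ in range(8):
        tally.add(tp=1)
    for _ in range(2):
        tally.add(tp=0.5, fn=0.5)
    for _ in range(4):
        tally.add(fn=1)
    # 6 normal cases: 5 correctly called normal, 1 wrongly called abnormal.
    for _ in range(5):
        tally.add(tn=1)
    tally.add(fp=1)
    print(tally.sensitivity(), tally.specificity(), tally.accuracy())
```

In this invented example the observer accumulates TP = 9 and FN = 5, giving a sensitivity of 9/14 (about 64%), a specificity of 5/6 (about 83%) and an accuracy of 14/20 (70%).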

AFROC performance

Alternative Free-response Receiver Operating Characteristic (AFROC) analysis was used to assess the correct localisation of true positive events. AFROC methodology allows for multiple abnormalities on a film, and therefore for more than one observer response per image.24 The data collated from the image interpretation results were coded and processed using a web-based calculator.25 This produced an AFROC curve enabling direct visual comparison of the accuracy of the three professional groups. The measure of accuracy, the area under the curve (AUC or A1), can range from 0.0 to 1.0.21
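The study used a web-based calculator for the AFROC analysis, so the sketch below is not that tool. It is a simplified, hypothetical illustration of how an empirical AFROC curve and its trapezoidal area (A1) can be built from confidence ratings. It assumes that the five-point scale is coded 1 (definitely normal) to 5 (definitely abnormal), that each normal image contributes the rating the observer gave it (counted as a false positive at any threshold that rating reaches), and that each lesion contributes the image rating only if it was correctly localised, otherwise `None`.

```python
def afroc_points(normal_ratings, lesion_ratings, thresholds=(5, 4, 3, 2, 1)):
    """Empirical AFROC operating points, from strictest to most lenient.

    normal_ratings: one rating (1-5) per normal image (assumed FP source).
    lesion_ratings: one entry per lesion; the rating if the lesion was
        correctly localised, otherwise None (never counted as found).
    Returns (false positive fraction, lesion localisation fraction) pairs.
    """
    if not normal_ratings or not lesion_ratings:
        raise ValueError("need at least one normal image and one lesion")
    points = [(0.0, 0.0)]
    for thr in sorted(thresholds, reverse=True):
        fpf = sum(r >= thr for r in normal_ratings) / len(normal_ratings)
        found = sum(r is not None and r >= thr for r in lesion_ratings)
        points.append((fpf, found / len(lesion_ratings)))
    points.append((1.0, 1.0))  # conventional straight-line end point
    return points


def trapezoid_area(points):
    """Area under a piecewise-linear curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


if __name__ == "__main__":
    normals = [1, 2, 5]     # invented ratings for three normal images
    lesions = [5, 4, None]  # the third lesion was not correctly localised
    print(trapezoid_area(afroc_points(normals, lesions)))  # approximately 0.5
```

For the invented data in the code the area is 0.5, whereas an observer who rates every normal image 1 and correctly localises every lesion at rating 5 obtains 1.0, matching the 0.0 to 1.0 range described above.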

Statistical analysis of values

The variation in performance between the groups, in terms of image test bank score, sensitivity, specificity and AUC value, was assessed using analysis of variance (ANOVA) and a web-based calculator,26,27 with pairwise comparisons by Tukey’s multiple comparison test (Table 3).
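As a hedged illustration of the ANOVA calculation (not the web-based calculator the study used), the sketch below computes the F statistic and its degrees of freedom from raw group scores, assuming a one-way design with professional group as the single factor. The function name and example data are hypothetical, and neither the p value nor Tukey’s post hoc comparisons are reproduced.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on raw scores."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if n_total <= k:
        raise ValueError("need more observations than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of group means around grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Within-group sum of squares: spread of scores around their group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group spread")
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    print(one_way_anova_f([[1, 2, 3], [4, 5, 6]]))  # (13.5, 1, 4)
```

For the toy groups [1, 2, 3] and [4, 5, 6], the between-group mean square is 13.5 and the within-group mean square is 1.0, so F = 13.5 with 1 and 4 degrees of freedom.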

Results

The results shown in Table 2 reveal that the radiographers

gained the highest mean test bank score (28.5/40; 71%).

Table 3 compares the performance of the three groups

and demonstrates that this score was statistically higher

than the mean test bank scores attained by the participating nurse practitioners (21/40; 53%) and casualty officers

(21.5/40; 54%), with p < 0.01 and p Z 0.02, respectively.

When compared with each other, the scores from these latter groups showed no significant difference (p Z 0.91).

The specificity values follow a similar pattern. The

radiographers’ mean value (80.5%) was significantly higher

than those achieved by the nurse practitioners (54%,

p < 0.01) and the casualty officers (57%, p Z 0.04), whose

values were not significantly different from each other

(p Z 0.99).

A significant difference (p Z 0.03) was demonstrated, by

ANOVA testing (Table 3), between the mean sensitivity

values achieved by the three groups. The radiographers’

L. Coleman, K. Piper

sensitivity (67%) was significantly higher (p Z 0.02) in comparison to the mean sensitivity demonstrated by the nurse

practitioners (49%) and approached statistical significance

(p Z 0.06) when compared to the sensitivity achieved by

the casualty officers (51%), whose results when compared

to the nurse practitioners showed no significant difference

(p Z 0.86), as seen in Table 3.

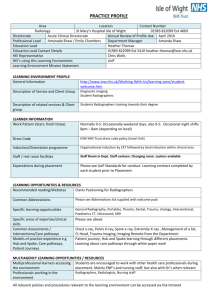

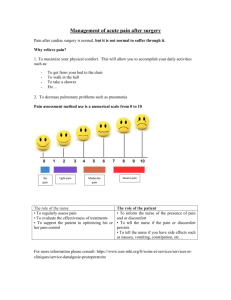

The mean AUC value of 0.75 achieved by the radiographers was found to be significantly more accurate (p < 0.01)

in comparison to the AUC values of 0.58 and 0.54 achieved

by the nurse practitioners and casualty officers, respectively. The closer the AUC to 1.0, the more accurate the

classification, therefore, a curve lying above and to the

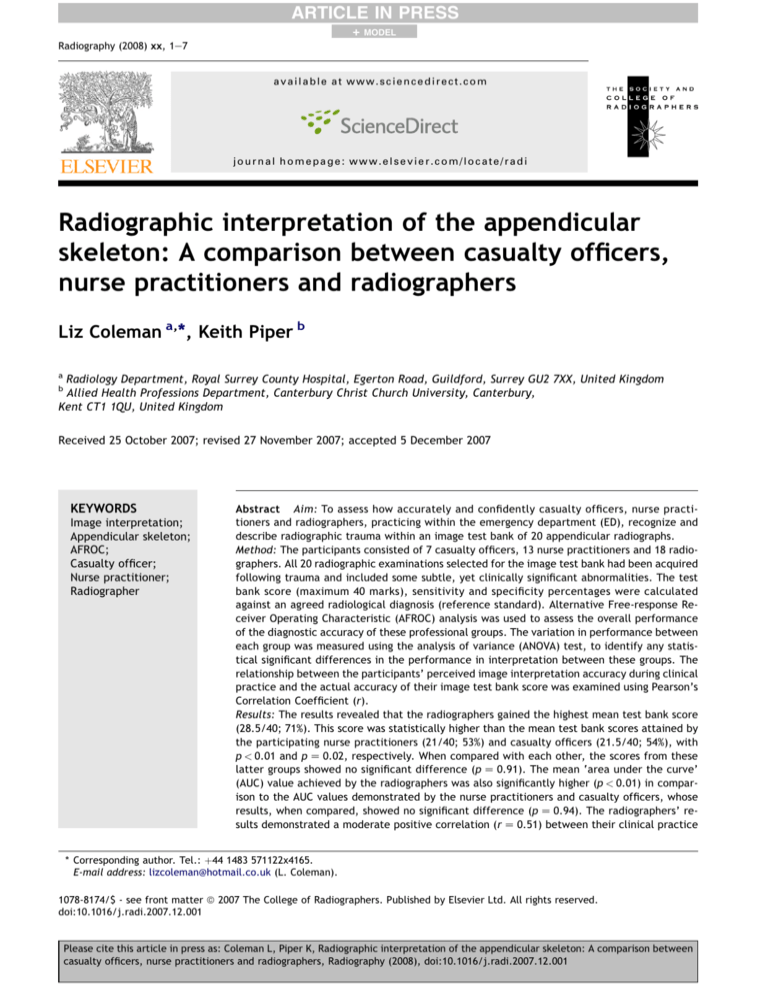

left of another shows greater accuracy in the related classification system.28 Typical AFROC curves that represent the

accuracy achieved by all three groups are shown in Fig. 1.

There is no significant difference between the mean AUC

values achieved by the nurse practitioners and casualty officers; therefore one illustrative AFROC curve is included

to represent the accuracy achieved by these two groups.

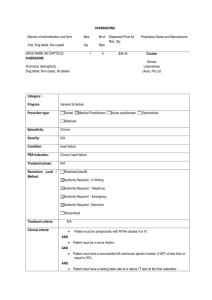

The radiographers’ results demonstrated a moderate

positive correlation (r Z 0.51) between their clinical practice estimations and their actual test bank scores

(p Z 0.02); however, no significant correlation was found

between the nurse practitioners’ (r Z 0.41, p Z 0.16) and

casualty officers’ (r Z 0.07, p Z 0.87) clinical practice estimations when compared to their actual test bank scores, as

can be seen in Table 4.

Discussion

The test bank scores achieved by the casualty officers and nurse practitioners in this research are comparable to those achieved in similar studies.10,16 The nurse practitioners in this research achieved a mean test bank score of 53%, which compares reasonably well with the nurse practitioners in the study by Meek et al.,10 who gained a marginally higher mean score of 57%. The nurse practitioners in the study by Piper and Paterson16 achieved similar results, with pre- and post-training mean scores of 53% and 64%, respectively. The casualty officers in this research and in the study by Meek et al.10 also followed this trend: the inexperienced casualty officers in the study by Meek et al.10 achieved a mean test bank score of 50%, close to the mean of 54% gained by the casualty officers in this research.

This research and the studies by Meek et al.10 and Piper and Paterson16 shared many common features: all used the same scoring system; all images were of the appendicular skeleton and were supplied with a brief clinical history; all participants could achieve a maximum test bank score of 40 marks; and several of the images used in this research replicated those used in the studies by Meek et al.10 and Piper and Paterson.16 These similarities may account for the similarity of the scores achieved.

Although the mean test bank scores of the casualty officers and nurse practitioners in this research are similar to those reported for these professionals in other studies,10,16 both were significantly lower (p = 0.02 and p < 0.01, respectively) than the mean test bank score of 71% achieved by the radiographers in this research, the casualty officers scoring 54% and the nurse practitioners 53%. The radiographers’ mean test bank score is also similar to the post-training mean of 73% achieved by the radiographers in the study by Piper and Paterson,16 who likewise found the radiographers’ results to be significantly higher than the post-training mean of 64% achieved by the nurse practitioners.

Table 2
Test bank scores, sensitivity, specificity and AUC values

Group | Statistic | Test bank score (max. 40) | Sensitivity (%) | Specificity (%) | AUC
Radiographers | Mean | 28.5 | 67 | 80.5 | 0.75
Radiographers | SD | 4.5 | 13.4 | 16.2 | 0.1
Radiographers | 95% CI | 26.4–30.8 | 60.3–73.7 | 72.5–88.6 | 0.7–0.8
Nurse practitioners | Mean | 21 | 49 | 54 | 0.58
Nurse practitioners | SD | 4.5 | 11.7 | 20.0 | 0.1
Nurse practitioners | 95% CI | 18.1–23.6 | 42.2–56.4 | 41.9–66.1 | 0.5–0.7
Casualty officers | Mean | 21.5 | 51 | 57 | 0.54
Casualty officers | SD | 5.4 | 11.9 | 30.6 | 0.1
Casualty officers | 95% CI | 16.5–26.5 | 40.0–62.0 | 28.8–85.4 | 0.5–0.6

The ‘area under the curve’ (AUC, or A1 as denoted in AFROC analysis) values achieved in this research were very similar to those identified in the study by Piper and Paterson.16 However, a marked difference was seen in comparison with the higher AUC values reported in a previous study by Overton-Brown and Anthony.29 When the assessment methods of these studies were compared, the standard Receiver Operating Characteristic (ROC) observer performance approach used by Overton-Brown and Anthony29 was found to be less rigorous than Alternative Free-response Receiver Operating Characteristic (AFROC) analysis, which requires the abnormality to be correctly localised. The participants in the study by Overton-Brown and Anthony29 may have identified an abnormality that was not the true abnormality. That study29 also excluded films of the shoulder, elbow and knee. These exclusions and the simpler ROC methodology may well account for the higher values it reported. Research has shown that the ability to indicate a radiographic abnormality differs considerably from the ability to write a comment conveying that appearance.30

The mean specificity achieved by the radiographers in this research was greater than, or very similar to, that achieved by radiographers in several published studies.16,30–32 The mean sensitivity of the radiographers in this research was relatively low in comparison with the values reported for these professionals in similar studies.31–33 However, much of the literature found that sensitivity increased immediately after a period of training.16,30–33 The sample demographics showed that none of the radiographers in this study had received any in-house image interpretation teaching in the previous six months. Other research found that radiographers’ ability to identify abnormalities accurately had declined six months after training was completed,32 suggesting that ongoing training is essential if radiographers are to increase their ability to identify abnormalities correctly on positive films.

Table 3
Statistical comparison of performance measures

Measure | Radiographers (Group A) | Nurse practitioners (Group B) | Casualty officers (Group C) | ANOVA F | ANOVA p | Tukey multiple comparison test (p value)
Test bank score (max. 40) | 28.5 | 21 | 21.5 | 6.6 | <0.01 | A vs B: p < 0.01; A vs C: p = 0.02; B vs C: p = 0.91
Sensitivity (%) | 67 | 49 | 51 | 4.2 | 0.03 | A vs B: p = 0.02; A vs C: p = 0.06; B vs C: p = 0.86
Specificity (%) | 80.5 | 54 | 57 | 4.2 | 0.03 | A vs B: p < 0.01; A vs C: p = 0.04; B vs C: p = 0.99
AUC (A1) | 0.75 | 0.58 | 0.54 | 7.3 | <0.01 | A vs B: p < 0.01; A vs C: p < 0.01; B vs C: p = 0.94


Figure 1 Illustrative AFROC curves (true positive fraction plotted against false positive fraction, both from 0 to 1). Radiographers: illustrative curve with AUC = 0.77 (group mean AUC 0.75). Nurse practitioners/casualty officers: illustrative curve with AUC = 0.58 (group mean AUC 0.58 and 0.54, respectively).

There appears to be limited research reporting the sensitivity and specificity of casualty officers and nurse practitioners in image interpretation. The study by Freij et al.,9 which does report these measures, found that these professionals achieved similar sensitivity and specificity; however, their values were markedly higher than those achieved by the nurse practitioners and casualty officers in this research. On closer inspection of the methodology used by Freij et al.,9 the casualty officers and nurse practitioners in that study were assessed by audit during normal clinical practice, rather than by interpretation of an image test bank. Although the results of this research were based on a small sample of images that was not representative of clinical practice, the test bank was intended to be sufficiently discriminatory to assess the performance of the professional groups adequately. The results of this research may therefore appear low in comparison with the findings of Freij et al.,9 but this may be misleading: a study by Brown,34 which used both audit and test bank methodologies, found a correlation between apparently low test bank scores (pre: 63.5%; post: 69.8%) and audit accuracy (pre: 82.9%; post: 90%), before and after training (pre-test: r = 0.82, p = 0.04; post-test: r = 0.77, p = 0.08), for a small group of radiographers.

‘Failure to search’ and ‘satisfaction of search’ errors were common to all three groups in this research. All participants identified the fracture at the base of the fourth metacarpal; however, the majority of the radiographers (67%) and nurse practitioners (85%), and all of the casualty officers, failed to note the fractures at the base of the fourth and fifth distal phalanges.

This research found that the casualty officers’ and nurse practitioners’ ability to interpret radiographs was not as accurate as they perceived it to be (Table 4). A moderate positive correlation was found between the radiographers’ estimates of their image interpretation ability during normal working practice and their test bank scores (p = 0.02). However, no correlation was found for the casualty officers or nurse practitioners (p = 0.87 and p = 0.16, respectively). This suggests that radiographers may be more realistic about their abilities than casualty officers and nurse practitioners, who appear to overrate their ability in image interpretation, which could potentially lead to misdiagnosis. The lack of correlation between confidence in, and accuracy of, clinical prediction has been noted previously in the field of medical decision making,35 although the reasons for this phenomenon are complex.36

Table 4
Comparison of mean estimated and actual accuracy

Group | Statistic | Clinical practice estimation (% accuracy) | Actual test bank score (% accuracy) | Pearson’s correlation coefficient (r) | p value
Radiographers | Mean | 67.8 | 71.5 | 0.51 | 0.02
Radiographers | SD | 12.6 | 11.2 | |
Radiographers | 95% CI | 61.5–74.1 | 65.9–77.0 | |
Nurse practitioners | Mean | 63.9 | 52.1 | 0.41 | 0.16
Nurse practitioners | SD | 12.6 | 11.2 | |
Nurse practitioners | 95% CI | 56.2–71.4 | 45.3–58.9 | |
Casualty officers | Mean | 64.2 | 53.8 | 0.07 | 0.87
Casualty officers | SD | 14.0 | 13.4 | |
Casualty officers | 95% CI | 51.4–77.2 | 41.4–66.2 | |

Conclusion

The radiographers in this research produced significantly higher scores in the image interpretation test than both the casualty officers and the nurse practitioners who participated in this study. This may not be surprising, as radiographers generally have more experience in viewing radiographic images than either nurse practitioners or casualty officers. Interpretation of radiographic images is only one aspect of the role undertaken by casualty officers and nurse practitioners, whereas understanding, performing and interpreting plain film images is fundamental to radiography. However, in normal clinical practice, it is the casualty officers and nurse practitioners who routinely interpret images and document their findings as part of their role in diagnosing and treating ED patients.

The results of this research suggest that radiographers are able to formally use their knowledge of image interpretation by providing the ED with a written comment (initial interpretation) to assist in radiographic diagnosis, and that such comments could therefore replace the ambiguous ‘red dot’ system used to highlight abnormal radiographs. It is suggested that the comment form should comprise a succinct range of tick-box options, with space for additional comments. This would allow radiographers to develop their vocabulary and comment structure as their knowledge and confidence build.

Maximising the role of the radiographer could improve job satisfaction and provide a stimulus for further education and development, by recognising the value of the radiographer as a vital member of the ED team. For this to succeed, it is crucial that radiographers feel supported in this role and that continuous training and audit are undertaken.

The results of this research, although similar to previous findings, are based on a relatively small sample of data that may not be representative of the image interpretation abilities of these professionals in clinical practice; however, the image test bank was designed to assess the performance and knowledge of these three groups in image interpretation. The performance of these professional groups may differ across the UK, so caution should be applied when generalising these findings.

References

1. Hardy M, Barrett C. Interpretation of trauma radiography by radiographers and nurses in the UK: a comparative study. Br J Radiol 2004;77:657–61.

2. Keane D. Radiographer reporting. Paper presented at the European Congress of Radiology; 2005.

3. Snaith B. Are trusts replacing the red dot? Br J Radiol 2003;(UKRC Suppl.):46–7.

4. Evans R. UK council meeting report. Synergy News; June 2004:4.

5. McLaren P. The evolution of the nurse practitioner. Evidence-Based Healthc Public Health 2005;9:265–6.

6. Read S, Jones N, Williams B. Nurse practitioners in accident and emergency departments: what do they really do? BMJ 1992;305:1466–70.

7. Mason S, Fletcher A, McCormick S, Perrin J, Rigby A. Developing assessment of emergency nurse practitioner competence – a pilot study. J Adv Nurs 2005;50(4):425–32.

8. Morris F, Cope A, Hawes S. Training in accident and emergency: views of senior house officers. BMJ 1990;300:165–6.

9. Freij RM, Duffy T, Hackett D, Cunningham D, Fothergill J. Radiographic interpretation by nurse practitioners in a minor injuries unit. J Accid Emerg Med 1996;13:41–3.

10. Meek S, Kendall J, Porter J, Freij R. Can accident and emergency nurse practitioners interpret radiographs? A multicentre study. J Accid Emerg Med 1998;15:105–7.

11. Sakr M, Angus J, Perrin J, Nixon C, Nicholl J, Wardrope J. Care of minor injuries by emergency nurse practitioners or junior doctors: a randomised controlled trial. Lancet 1999;354:1321–6.

12. Tachakra S, Deboo P. Comparing performance of ENP’s and SHO’s. Emerg Nurse 2001;9(7):36–9.

13. Berman L, deLacey G, Twomey E, Twomey B, Welch T, Eban R. Reducing errors in the accident department: a simple method using radiographers. BMJ 1985;290:421–2.


14. Brealey S, Scally A, Hahn S, Thomas N, Godfrey C, Crane S. Accuracy of radiographers red dot or triage of accident and emergency radiographs in clinical practice: a systematic review. Clin Radiol 2006;61(7):604–15.

15. Brealey S, Scally A, Hahn S, Thomas N, Godfrey C, Coomarasamy A. Accuracy of radiographer plain film reporting in clinical practice: a meta-analysis. Clin Radiol 2005;60(2):233–41.

16. Piper K, Paterson A. Initial image interpretation of appendicular skeletal radiographs: a comparison between nurses and radiographers. Radiography, in press. doi:10.1016/j.radi.2007.10.006.

17. Berbaum KS. Satisfaction of search in diagnostic radiology. Invest Radiol 1990;25(2):133–40.

18. Renfrew DL, Franken EA, Berbaum KS, Weigelt FH, Abu-Yousef MM. Error in radiology: classification and lessons in 182 cases presented at a problem case conference. Radiology 1992;183:145–50.

19. Piper KJ, Paterson AM, Godfrey RC. Accuracy of radiographers’ reports in the interpretation of radiographic examinations of the skeletal system: a review of 6796 cases. Radiography 2005;11:27–34.

20. Miller S. Experimental design and statistics. 2nd ed. London: Methuen & Co Ltd; 1984.

21. Obuchowski NA. Receiver operating characteristic curves and their use in radiology. Radiology 2003;229(1):3–8.

22. Robinson PJA. Radiology’s Achilles heel: error and variation in the interpretation of accident and emergency radiographs. Br J Radiol 1997;70:1085–98.

23. Goddard P, Leslie A, Jones A, Wakeley C, Kabala J. Error in radiology. Br J Radiol 2001;74:949–51.

24. Chakraborty D. Statistical power in observer-performance studies: comparison of the receiver operating characteristic and free-response methods in tasks involving localization. Acad Radiol 2002;9(2):147–56.

25. Eng J. ROC analysis: web-based calculator for ROC curves. Baltimore: Johns Hopkins University [updated 2006 May 24]. Available from: <www.jrocfit.org> [accessed 22.06.06].

26. Rorden C. EZANOVA: web-based calculator for analysis of variance (version 0.97). Available from: <www.mricro.com> [accessed 22.06.06].

27. Bland M. An introduction to medical statistics. 3rd ed. Oxford: Oxford University Press; 2000.

28. Anthony D. Receiver operating characteristic analysis: an overview. Nurse Res 1996;4(2):75–88.

29. Overton-Brown P, Anthony D. Towards a partnership in care: nurses’ and doctors’ interpretation of extremity trauma radiology. J Adv Nurs 1998;27(5):890–6.

30. Hardy M, Culpan G. Accident and emergency radiography: a comparison of radiographer commenting and ‘red dotting’. Radiography 2007;13:65–71.

31. McConnell JR, Webster AJ. Improving radiographer highlighting of trauma films in the accident and emergency department with a short course of study – an evaluation. Br J Radiol 2000;73:608–12.

32. Mackay S. The impact of a short course of study on the performance of radiographers when highlighting fractures on trauma radiographs: “the red dot system”. Br J Radiol 2006;79:468–72.

33. Hargreaves J, Mackay S. The accuracy of the red dot system: can it improve with training? Radiography 2003;9:283–9.

34. Brown V. An investigation of radiographer’s abnormality detection skills focusing on the appendicular skeleton in a trauma department of a district general hospital. MSc thesis. Canterbury Christ Church University; 2003.

35. Kee F. Confidence limits and the limits of confidence. QJM 2000;93:121–4.

36. Dowie J, Elstein A. Professional judgment: a reader in clinical decision making. Cambridge University Press; 1994.
