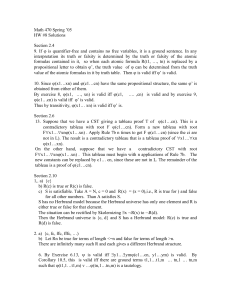

Semantics Boot Camp: Predicate Calculus & Formal Language


Semantics Boot Camp

Elizabeth Coppock

Compilation of handouts from NASSLLI 2012, Austin Texas

(revised December 15, 2012)

Contents

1 Semantics
2 Predicate calculus
  2.1 Syntax of Predicate Calculus
    2.1.1 Atomic symbols
    2.1.2 Syntactic composition rules
  2.2 Semantics of Predicate Calculus
    2.2.1 Sets
    2.2.2 Ordered pairs and relations
    2.2.3 Functions
    2.2.4 Set theory as meta-language
    2.2.5 Models and interpretation functions
    2.2.6 Interpretation rules
3 English as a formal language
  3.1 A fragment of English with set denotations
    3.1.1 Models and the Lexicon
    3.1.2 Composition rules
  3.2 A fragment of English satisfying Frege’s conjecture
  3.3 Transitive and ditransitive verbs
4 Fun with Functional Application
  4.1 Rick Perry is conservative
  4.2 Rick Perry is in Texas
  4.3 Rick Perry is proud of Texas
  4.4 Rick Perry is a Republican
5 Predicate Modification
  5.1 Rick Perry is a conservative Republican
  5.2 Austin is a city in Texas
6 The definite article
  6.1 The negative square root of 4
  6.2 Top-down evaluation
  6.3 Bottom-up style
7 Predicate calculus: now with variables!
8 Relative clauses
9 Quantifiers
  9.1 Type e?
  9.2 Solution: Generalized quantifiers
10 The problem of quantifiers in object position
  10.1 The problem
  10.2 An in situ approach
  10.3 A Quantifier Raising approach
  10.4 Arguments in favor of the movement approach
11 Free and Bound Variable Pronouns
  11.1 Toward a unified theory of anaphora
  11.2 Assignments as part of the context
12 Our fragment of English so far
  12.1 Composition Rules
  12.2 Additional principles
  12.3 Lexical items

1 Semantics

Semantics: The study of meaning. What is meaning? How can you tell whether

somebody or something understands? Does Google understand language?

An argument that it does not: Google can’t do inferences:

(1) Everyone who smokes gets cancer → No heavy smoker avoids cancer

(cf. “No alleged smoker avoids cancer”...)

A hallmark of a system or agent that understands language / grasps meaning is that

it can do these kinds of inferences.

Another way to put it: A good theory of meaning should be able to account for the

conditions under which one sentence implies another sentence.

Some different kinds of inferences:

1. Entailment (domain of semantics): A entails B if and only if whenever A is

true, B is true too. E.g. Obama was born in 1961 entails Obama was born in

the 1960s.

2. Presupposition (semantics/pragmatics): A presupposes B if and only if an

utterance of A takes B for granted. E.g. Sue has finally stopped smoking

presupposes that Sue smoked in the recent past.

3. Conversational Implicature (pragmatics): A conversationally implicates B

if and only if a hearer can infer B from an utterance of A by making use of the

assumption that the speaker is being cooperative. E.g. Some of the students

passed conversationally implicates that not all of the students passed.

The primary responsibility for a theory of semantics is to account for the conditions

under which one sentence entails another sentence.

Other nice things a theory of semantics could do: Account for presuppositions,

contradictions, equivalences, semantic ill-formedness, distribution patterns.

Strategy: Assign truth conditions to sentences. The truth conditions are the conditions under which the sentence is true. Knowing the meaning of a sentence does

not require knowing whether the sentence is in fact true; it only requires being able

to discriminate between situations in which the sentence is true and situations in

which the sentence is false.

(Cf. Heim & Kratzer’s bold first sentence: “To know the meaning of a sentence is

to know its truth conditions.”)

The strategy of assigning truth conditions will allow us to account for entailments.

If the circumstances under which A is true are included in the circumstances under which B is true, then A entails B.
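One way to make the definition of entailment concrete, as a minimal sketch: model the truth conditions of a sentence as the set of situations in which it is true, so that entailment becomes set inclusion. The toy situation space below (one situation per possible birth year) and the name `entails` are illustrative assumptions, not part of the handout.

```python
# Toy situation space (an assumption for illustration): each situation
# fixes the year in which Obama was born.
situations = set(range(1950, 1980))

# Truth conditions, modeled as the set of situations in which a sentence is true.
born_in_1961 = {s for s in situations if s == 1961}
born_in_1960s = {s for s in situations if 1960 <= s <= 1969}


def entails(a, b):
    """A entails B iff every situation that makes A true also makes B true."""
    return a <= b


print(entails(born_in_1961, born_in_1960s))  # True
print(entails(born_in_1960s, born_in_1961))  # False
```

The asymmetry in the two calls mirrors the example from the list of inference types: being born in 1961 guarantees being born in the 1960s, but not the other way around.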


How to assign truth conditions to sentences of natural languages like English?

Montague’s idea: Let’s pretend that English is a formal language.

I reject the contention that an important theoretical difference exists between formal and natural languages. ... In the present paper

I shall accordingly present a precise treatment, culminating in a theory of truth, of a formal language that I believe may reasonably be

regarded as a fragment of ordinary English. ... The treatment given

here will be found to resemble the usual syntax and model theory (or

semantics) [due to Tarski] of the predicate calculus, but leans rather

heavily on the intuitive aspects of certain recent developments in intensional logic [due to Montague himself]. (Montague 1970b, p.188

in Montague 1974)

2 Predicate calculus

Predicate calculus is a logic. Logics are formal languages, and as such they have

syntax and semantics.

Syntax: specifies which expressions of the logic are well-formed, and what their

syntactic categories are.

Semantics: specifies which objects the expressions correspond to, and what their semantic categories are.

2.1 Syntax of Predicate Calculus

2.1.1 Atomic symbols

Formulas are built up from atomic symbols drawn from the following syntactic

categories:

• individual constants: JOHN, MARY, TEXAS, 4, ...
• variables: x, y, z, x′, y′, z′, ...
• predicate constants
  – unary predicate constants: EVEN, ODD, SLEEPY, ...
  – binary predicate constants: LOVE, OWN, >, ...
  – ...
• function constants:
  – unary function constants: MOTHER, ABSOLUTE VALUE, ...
  – binary function constants: DISTANCE, +, −, ...
  – ...
• logical connectives: ∧, ∨, →, ↔, ¬
• quantifiers: ∀, ∃

I write constants in SMALL CAPS and variables in italics. But I will ignore quantifiers and variables for now (they will be discussed later).

2.1.2 Syntactic composition rules

How to build complex expressions (Greek letters are metalanguage variables):

• If π is an n-ary predicate and α1, ..., αn are terms, then π(α1, ..., αn) is an atomic formula.
  – If π is a unary predicate and α is a term, then π(α) is an atomic formula.
  – If π is a binary predicate and α1 and α2 are terms, then π(α1, α2) is an atomic formula.
• If α1, ..., αn are terms, and γ is a function constant with arity n, then γ(α1, ..., αn) is a term.
• If φ is a formula, then ¬φ is a formula.
• If φ is a formula and ψ is a formula, then [φ ∧ ψ] is a formula, and so are [φ ∨ ψ], [φ → ψ], and [φ ↔ ψ].

Expression            Syntactic category
JOHN, MARY            (individual) constant
x                     variable
HAPPY, EVEN           unary predicate constant
LOVE, >               binary predicate constant
LOVE(JOHN, MARY)      (atomic) formula
HAPPY(x)              (atomic) formula
x > 1                 (atomic) formula
MOTHER                unary function constant
MOTHER(JOHN)          term

2.2 Semantics of Predicate Calculus

Each expression belongs to a certain semantic type. The types of our predicate

calculus are: individuals, sets, relations, functions, and truth values.

2.2.1 Sets

Set. An abstract collection of distinct objects which are called the members or

elements of that set. Elements may be concrete (like the beige 1992 Toyota Corolla

I sold in 2008, David Beaver, or your computer) or abstract (like the number 2, the

English phoneme /p/, or the set of all Swedish soccer players). The elements of a

set are not ordered, and there may be infinitely many of them or none at all.

You can specify the members of a set in two ways:

1. By listing the elements, e.g.:

{Marge, Homer, Bart, Lisa, Maggie}

2. By description, e.g.: {x ∣ x is a human member of the Simpson family}

Element. We write ‘is a member of’ with ∈.

Empty set. The empty set, written ∅ or {}, is the set containing no elements.

Subset. A is a subset of B, written A ⊆ B, if and only if every member of A is a

member of B.

A ⊆ B iff for all x: if x ∈ A then x ∈ B.

Proper subset. A is a proper subset of B, written A ⊂ B, if and only if A is a

subset of B and A is not equal to B.

A ⊂ B iff (i) for all x: if x ∈ A then x ∈ B and (ii) A ≠ B.

Powerset.

The powerset of A, written ℘(A), is the set of all subsets of A.

℘(A) = {S∣S ⊆ A}

Set Union. The union of A and B, written A ∪ B, is the set of all entities x such

that x is a member of A or x is a member of B.

A ∪ B = {x∣x ∈ A or x ∈ B}


Set Intersection. The intersection of A and B, written A ∩ B, is the set of all

entities x such that x is a member of A and x is a member of B.

A ∩ B = {x∣x ∈ A and x ∈ B}
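The set-theoretic definitions above map closely onto Python's built-in `set` type. The following is a minimal sketch; the helper names `is_subset`, `is_proper_subset`, and `powerset` are chosen here for illustration (Python's own `<=`, `<`, `|`, and `&` operators already cover subset, proper subset, union, and intersection).

```python
from itertools import chain, combinations

simpsons = {"Marge", "Homer", "Bart", "Lisa", "Maggie"}
kids = {"Bart", "Lisa", "Maggie"}


def is_subset(a, b):
    """A ⊆ B iff for all x: if x ∈ A then x ∈ B."""
    return all(x in b for x in a)


def is_proper_subset(a, b):
    """A ⊂ B iff A ⊆ B and A ≠ B."""
    return is_subset(a, b) and a != b


def powerset(a):
    """℘(A): the set of all subsets of A (frozensets, so they can be set members)."""
    items = list(a)
    subsets = chain.from_iterable(
        combinations(items, n) for n in range(len(items) + 1)
    )
    return {frozenset(s) for s in subsets}


print(is_subset(kids, simpsons))         # True
print(is_proper_subset(kids, kids))      # False
print(len(powerset(kids)))               # 8
print(sorted(kids | {"Marge"}))          # union: ['Bart', 'Lisa', 'Maggie', 'Marge']
print(sorted(kids & {"Lisa", "Marge"}))  # intersection: ['Lisa']
```

Note that the empty set and `kids` itself are both members of `powerset(kids)`, since each is a subset of `kids`.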

2.2.2 Ordered pairs and relations

Ordered pair.

Sets are not ordered.

{Bart, Lisa} = {Lisa, Bart}

But the elements of an ordered pair written ⟨a, b⟩ are ordered. Here, a is the first

member and b is the second member.

⟨Bart, Lisa⟩ ≠ ⟨Lisa, Bart⟩

We can also have ordered triples.

{Bart, Lisa, Maggie} = {Maggie, Lisa, Bart}

⟨Bart, Lisa, Maggie⟩ ≠ ⟨Maggie, Lisa, Bart⟩

Relation. A binary relation is a set of ordered pairs. In general, a relation is a set

of ordered tuples. For example, the ‘older-than’ relation among Simpsons kids:

{⟨Bart, Lisa⟩, ⟨Lisa, Maggie⟩, ⟨Bart, Maggie⟩}

Note that this is a set. How many elements does it have?

Domain. The domain of a relation is the set of entities that are the first member

of some ordered pair in the relation.

Range. The range of a relation is the set of entities that are the second member

of some ordered pair in the relation.

2.2.3 Functions

Function. A function is a special kind of relation. A relation R from A to B is a

function if and only if it meets both of the following conditions:

1. Each element in the domain is paired with just one element in the range.

2. The domain of R is equal to A.

A function gives a single output for a given input.

Are these relations functions?

f1 = {⟨Bart, Lisa⟩, ⟨Lisa, Maggie⟩, ⟨Bart, Maggie⟩}

f2 = {⟨Bart, Lisa⟩, ⟨Lisa, Maggie⟩}

Easier to see when you notate them like this:

f1 = [ Bart → Lisa
            → Maggie
       Lisa → Maggie ]

f2 = [ Bart → Lisa
       Lisa → Maggie ]

Function notation. Just as sets can be specified either by listing the elements or

by description, functions can be described either by listing the ordered pairs that

are members of the relation or by description. Example:

[x ↦ x + 1]

• x is the argument variable

• x + 1 is the value description

Function application. F(a) denotes ‘the result of applying function F to argument a’, or ‘F of a’, or ‘F applied to a’. If F is a function that contains the ordered pair ⟨a, b⟩, then:

F (a) = b

This means that given a as input, F gives b as output.

The result of applying a function specified descriptively to its argument can normally be written as the value description part, with the argument substituted for the

argument variable.

[x ↦ x + 1](4) = 4 + 1 = 5
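The two conditions on functions, and the two ways of specifying a function, can be checked mechanically. This is a sketch only: relations are encoded as Python sets of pairs, and the helper names (`domain`, `range_of`, `is_function`, `apply`) are assumptions made for this example.

```python
def domain(rel):
    """The set of first members of pairs in the relation."""
    return {a for (a, _) in rel}


def range_of(rel):
    """The set of second members of pairs in the relation."""
    return {b for (_, b) in rel}


def is_function(rel, source):
    """A relation from `source` is a function iff each element of its domain is
    paired with just one value, and its domain equals `source`."""
    paired_once = all(
        len({b for (a, b) in rel if a == x}) == 1 for x in domain(rel)
    )
    return paired_once and domain(rel) == set(source)


def apply(rel, arg):
    """F(a): the unique b such that <a, b> is in F (raises ValueError otherwise)."""
    (value,) = {b for (a, b) in rel if a == arg}
    return value


f1 = {("Bart", "Lisa"), ("Lisa", "Maggie"), ("Bart", "Maggie")}
f2 = {("Bart", "Lisa"), ("Lisa", "Maggie")}

print(is_function(f1, {"Bart", "Lisa"}))  # False: Bart is paired with two values
print(is_function(f2, {"Bart", "Lisa"}))  # True
print(apply(f2, "Bart"))                  # Lisa

# A function specified by description, [x ↦ x + 1], applied to 4:
add_one = lambda x: x + 1
print(add_one(4))                         # 5
```

Listing the pairs (as in `f2`) and giving a value description (as in `add_one`) are two encodings of the same kind of object; the second is the only option when the domain is infinite.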

2.2.4 Set theory as meta-language

All these set-theoretic symbols are formal symbols, but they are not part of the

language for which we are giving a semantics. They are being used to characterize

the values that expressions of predicate calculus will have.

In that sense, we are using the language of set theory as a meta-language.

2.2.5 Models and interpretation functions

Interpretation with respect to a model. Expressions of predicate calculus are

interpreted in models. Models consist of a domain of individuals D and an interpretation function I which assigns values to all the constants:

M = ⟨D, I⟩

A valuation function [[·]]M, built up recursively on the basis of the basic interpretation function I, assigns to every expression α of the language (not just the constants) a semantic value [[α]]M.

Here are two models, Mr and Mf (r for “real”, and f for “fantasy”/“fiction”/“fake”):

Mr = ⟨D, Ir⟩
Mf = ⟨D, If⟩

They share the same domain:

D = {Maggie, Bart, Lisa}

In Mr, Bart is happy, but Maggie and Lisa are not:

Ir(HAPPY) = {Bart}

In Mf, everybody is happy:

If(HAPPY) = {Maggie, Bart, Lisa}

Both interpretation functions map the constant MAGGIE to the individual Maggie:

Ir(MAGGIE) = Maggie
If(MAGGIE) = Maggie

What is the semantic value of HAPPY(MAGGIE)? It should come out as false in Mr, and true in Mf. So what we want to get is:

[[HAPPY(MAGGIE)]]Mr = 0
[[HAPPY(MAGGIE)]]Mf = 1

What tells us this? We need rules for determining the values of complex expressions from the values of their parts. So far all we have is rules for determining the

values of constants.

2.2.6 Interpretation rules

• Constants
  If M = ⟨D, I⟩ and α is a constant, then [[α]]M = I(α).
• Complex terms
  If α1, ..., αn are terms and γ is a function constant with arity n, then [[γ(α1, ..., αn)]]M is [[γ]]M([[α1]]M, ..., [[αn]]M).
• Atomic formulae
  If π is an n-ary predicate and α1, ..., αn are terms, then [[π(α1, ..., αn)]]M = 1 iff ⟨[[α1]]M, ..., [[αn]]M⟩ ∈ [[π]]M.
  If π is a unary predicate and α is a term, then [[π(α)]]M = 1 iff [[α]]M ∈ [[π]]M.
• Negation
  [[¬φ]]M = 1 if [[φ]]M = 0; otherwise [[¬φ]]M = 0.
• Connectives
  [[φ ∧ ψ]]M = 1 if [[φ]]M = 1 and [[ψ]]M = 1; 0 otherwise. Similarly for [[φ ∨ ψ]]M, [[φ → ψ]]M, and [[φ ↔ ψ]]M.

Example. Because HAPPY is a unary predicate and MAGGIE is a term, we can use the rule for atomic formulae to figure out the value of HAPPY(MAGGIE).

[[HAPPY(MAGGIE)]]Mf = 1 iff [[MAGGIE]]Mf ∈ [[HAPPY]]Mf, i.e. iff Maggie ∈ {Maggie, Bart, Lisa}.

[[HAPPY(MAGGIE)]]Mr = 1 iff [[MAGGIE]]Mr ∈ [[HAPPY]]Mr, i.e. iff Maggie ∈ {Bart}.

How about: ¬HAPPY(BART) ∨ LOVE(BART, MAGGIE)?
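The interpretation rules can be turned into a small recursive evaluator. The sketch below makes several assumptions that are not in the handout: formulas are encoded as nested tuples such as `("HAPPY", "MAGGIE")` or `("not", φ)`, the connective names are spelled out as strings, the entry for `BART` is added for illustration, and complex terms built from function constants are left out for brevity.

```python
D = {"Maggie", "Bart", "Lisa"}

# Interpretation functions: constants -> values. Only HAPPY and MAGGIE are
# given in the text; the entry for BART is an assumption for illustration.
I_r = {"MAGGIE": "Maggie", "BART": "Bart", "HAPPY": {"Bart"}}
I_f = {"MAGGIE": "Maggie", "BART": "Bart", "HAPPY": {"Maggie", "Bart", "Lisa"}}
M_r, M_f = (D, I_r), (D, I_f)

# Truth tables for the binary connectives, over the truth values 0 and 1.
CONNECTIVES = {
    "and": lambda p, q: min(p, q),
    "or": lambda p, q: max(p, q),
    "implies": lambda p, q: max(1 - p, q),
    "iff": lambda p, q: int(p == q),
}


def value(expr, model):
    """Semantic value of expr in model, for constants, atomic formulas,
    negation, and the binary connectives."""
    _, I = model
    if isinstance(expr, str):            # constant: look it up in I
        return I[expr]
    head, *args = expr
    if head == "not":
        return 1 - value(args[0], model)
    if head in CONNECTIVES:
        left, right = (value(a, model) for a in args)
        return CONNECTIVES[head](left, right)
    # Atomic formula: head is a predicate constant, args are terms.
    terms = tuple(value(a, model) for a in args)
    member = terms[0] if len(terms) == 1 else terms
    return int(member in value(head, model))


print(value(("HAPPY", "MAGGIE"), M_r))           # 0
print(value(("HAPPY", "MAGGIE"), M_f))           # 1
print(value(("not", ("HAPPY", "MAGGIE")), M_r))  # 1
```

Evaluating a formula that mentions LOVE would additionally require an entry for `LOVE` in the interpretation function (a set of ordered pairs), which the text does not specify for these models.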

3 English as a formal language

Montague: “I reject the contention that an important theoretical difference exists

between formal and natural languages.”

So we will put (parsed) English inside the denotation brackets, instead of logic.

Example:

(2) [[[S [NP [N Barack ] ] [VP [V smokes ] ] ]]]M

(Wrong: [[Barack smokes]]M)

Following Heim & Kratzer, I use bold face for object language inside denotation

brackets here, but often I am lazy about this.

3.1 A fragment of English with set denotations

3.1.1 Models and the Lexicon

Again, a model M = ⟨D, I⟩ is a pair consisting of a domain D and an interpretation

function I. For simplicity, let us assume that all of our models have the same

domain D. There are two important subsets of D:

• De, the set of individuals
• Dt, the set of truth values, 0 and 1

As in predicate calculus, models come with an interpretation function that specifies

the values of all the constants.

For concreteness, assume:

(3) De = {Barack Obama, Angela Merkel, Hugh Grant}

The constants of natural language are the lexical items: the proper names, the intransitive verbs, the adjectives, etc. Thus the interpretation function I will map

lexical items to their values in a model.

For example, let M1 = ⟨D, I1 ⟩, and let I1 be defined such that:

(4) a. I1(Barack) = Barack Obama
    b. I1(Angela) = Angela Merkel
    c. I1(Hugh) = Hugh Grant
    d. I1(smokes) = {Barack Obama, Angela Merkel}
    e. I1(drinks) = {Barack Obama, Hugh Grant}
    f. I1(likes) = {⟨Barack Obama, Hugh Grant⟩, ⟨Hugh Grant, Barack Obama⟩, ⟨Hugh Grant, Hugh Grant⟩}

Similarly, let M2 = ⟨D, I2⟩, and let I2 be defined such that:

(5) a. I2(Barack) = Barack Obama
    b. I2(Angela) = Angela Merkel
    c. I2(Hugh) = Hugh Grant
    d. I2(smokes) = {Angela Merkel}
    e. I2(drinks) = {}
    f. I2(likes) = {⟨Hugh Grant, Hugh Grant⟩}

Here, as in predicate calculus, we are defining the semantic values of intransitive

verbs as sets, and we are defining the semantic values of transitive verbs as relations. But stay tuned; later we will interpret intransitive verbs as the characteristic

functions of sets instead, in order to explore Frege’s conjecture, that all semantic

composition can be done with one single rule.

Now we want to define a valuation function that assigns semantic values to all

expressions of the language, not just constants. For constants, it’s easy; just use the

interpretation function. For example:

(6) [[smokes]]M1 = I1(smokes) = {Barack Obama, Angela Merkel}

In general, if α is a constant and M = ⟨D, I⟩ is a model, then:

(7) Interpretation Rule: Lexical Terminals (LT)
    [[α]]M = I(α)

But what about complex expressions like the tree in (10)?

3.1.2 Composition rules

For non-branching nodes, we use the semantic value of the daughter:

(8) Interpretation Rule: Non-branching Nodes
    If α has the form [γ β], then [[α]]M = [[β]]M.

A sentence is true iff the value of the subject NP is an element of the set denoted by the VP:

(9) Interpretation Rule: Sentences
    If α has the form [S β γ], then [[α]]M = 1 iff [[β]]M ∈ [[γ]]M.

So it will turn out that:

(10) [[[S [NP [N Barack ] ] [VP [V smokes ] ] ]]]M1 = 1

because Barack Obama is an element of [[smokes]]M1.

But what do we do about transitive verbs?

(11) Interpretation Rule: Transitive VPs
     If α has the form [VP β γ], then [[α]]M = ???

We have said that the verb denotes a relation, but what does the verb combined with the object denote? Intuitively, it is a relation that is incompletely saturated. We can solve this problem by treating transitive verbs as functions that, when applied to an individual (the one denoted by the object NP), yield something corresponding to a set of individuals. Generalizing this idea, we will also treat VPs as functions. When applied to their subject NP, they will give us a truth value. So intransitive verbs will denote functions from individuals to truth values, and transitive verbs will denote functions from individuals to functions from individuals to truth values.

3.2 A fragment of English satisfying Frege’s conjecture

Scientific question: What semantic interpretation rules do we need in order to

calculate the values of complex expressions from the values of their parts?

Frege’s conjecture:

We only need one composition rule: Functional application.

This will help us with our VP problem, but we have to change how verbs are

interpreted a little bit. Now we will have interpretations like the following. Recall

that before we said that I1 (smokes) = {Barack Obama, Angela Merkel}. Now we

will treat smokes as the characteristic function of that set, with domain D.

(12) f is the characteristic function of a set S iff for all x in the relevant domain,

f (x) = 1 if x ∈ S, and f (x) = 0 otherwise.

(13) I1(smokes) = {⟨Barack Obama, 1⟩, ⟨Angela Merkel, 1⟩, ⟨Hugh Grant, 0⟩}

                = [ Barack Obama  → 1
                    Angela Merkel → 1
                    Hugh Grant    → 0 ]

Hence, by the Lexical Terminals rule given in (7):

(14) [[smokes]]M1 = [ Barack Obama  → 1
                      Angela Merkel → 1
                      Hugh Grant    → 0 ]

Now we have to change our interpretation rule for sentences:

(15) Interpretation Rule: Sentences
     If α has the form [S β γ], then [[α]]M = [[γ]]M([[β]]M).

And we can add an interpretation rule for VPs:

(16) Interpretation Rule: VPs
     If α has the form [VP β γ], then [[α]]M = [[β]]M([[γ]]M).

Instead of treating transitive verbs as relations, we will say that the semantic value of the transitive verb likes is a function from individuals to functions from individuals to truth values. For example:

(17) I1(likes) =

     [ Barack Obama  → [ Barack Obama  → 0
                         Angela Merkel → 0
                         Hugh Grant    → 1 ]

       Angela Merkel → [ Barack Obama  → 0
                         Angela Merkel → 0
                         Hugh Grant    → 0 ]

       Hugh Grant    → [ Barack Obama  → 1
                         Angela Merkel → 0
                         Hugh Grant    → 1 ] ]

Notice that (16) and (15) are very similar. Both say that the value of a tree whose

top node is a branching node is the value of one daughter – the one that denotes a

function – applied to the value of the other daughter, which works out if it denotes

something in the domain of the function. So we can combine these two rules into

one single rule.1

(18) Interpretation Rule: Functional Application (FA)

If α is a branching node, {β, γ} is the set of α’s daughters, and [[β]]M is a

function whose domain contains [[γ]]M , then [[α]]M = [[β]]M ([[γ]]M ).

Since FA does not make any reference to the syntactic categories of the nodes in

the phrase to be interpreted, and only makes reference to the semantic type of those

phrases, it is a type-driven interpretation rule.
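To see how little machinery the three rules need, here is a minimal sketch of an interpreter for model M1. The encoding is an assumption made for this example: trees are nested tuples `(label, daughter, ...)`, functions are listed as Python dicts (in the spirit of (13) and (17)), and the names `char_fn`, `applies`, and `interpret` are hypothetical. Note that `interpret` never inspects the syntactic label, which is what makes the rule type-driven.

```python
D_e = ["Barack Obama", "Angela Merkel", "Hugh Grant"]


def char_fn(s):
    """Characteristic function of the set s over D_e, listed as a dict."""
    return {x: int(x in s) for x in D_e}


# The relation given earlier for I1(likes), read here as <liker, liked> pairs
# (an assumption; the relation happens to be symmetric, so nothing hinges on it).
likes_pairs = {
    ("Barack Obama", "Hugh Grant"),
    ("Hugh Grant", "Barack Obama"),
    ("Hugh Grant", "Hugh Grant"),
}

I1 = {
    "Barack": "Barack Obama",
    "Angela": "Angela Merkel",
    "Hugh": "Hugh Grant",
    "smokes": char_fn({"Barack Obama", "Angela Merkel"}),
    # Object first, then subject, as in (17).
    "likes": {
        obj: char_fn({s for (s, o) in likes_pairs if o == obj}) for obj in D_e
    },
}


def applies(f, x):
    """True if f is a (dict-encoded) function whose domain contains x."""
    return isinstance(f, dict) and not isinstance(x, dict) and x in f


def interpret(tree):
    if isinstance(tree, str):        # Lexical Terminals
        return I1[tree]
    _label, *daughters = tree
    values = [interpret(d) for d in daughters]
    if len(values) == 1:             # Non-branching Nodes
        return values[0]
    beta, gamma = values             # Functional Application
    if applies(beta, gamma):
        return beta[gamma]
    if applies(gamma, beta):
        return gamma[beta]
    raise TypeError("neither daughter denotes a function that applies to the other")


barack_smokes = ("S", ("NP", ("N", "Barack")), ("VP", ("V", "smokes")))
hugh_likes_barack = (
    "S",
    ("NP", ("N", "Hugh")),
    ("VP", ("V", "likes"), ("NP", ("N", "Barack"))),
)
print(interpret(barack_smokes))      # 1
print(interpret(hugh_likes_barack))  # 1
```

Because the subject comes first in the S node but the verb comes first in the VP node, `interpret` tries both orders, mirroring the phrasing of (18) in terms of the set {β, γ} of daughters.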

1 The phrase function(al) application has two meanings: the process of applying a function to an argument, and the composition rule that allows us to compute the semantic value of a phrase given the semantic values of its parts. I will refer to the composition rule using title capitalization (‘Functional Application’) and the process of applying a function to its argument using lowercase letters (‘function(al) application’).

Types. All of our denotations are individuals, truth values, or functions. Individuals have type e and they are in the domain of individuals. Put more formally:

(19) Barack Obama ∈ De

The truth values 0 and 1 have type t and they are elements of Dt .

A function from individuals to truth values is type ⟨e, t⟩. By (14), [[smokes]]M1 is

of type ⟨e, t⟩ because its domain is the set of individuals, and its range is the set of

truth values. Hence:

(20) [[smokes]]M1 ∈ D⟨e,t⟩

[[likes]] will have type ⟨e, ⟨e, t⟩⟩ because its domain is the set of individuals and its

range is D⟨e,t⟩ . So:

(21) [[likes]]M1 ∈ D⟨e,⟨e,t⟩⟩

Inventory of types. We will only need the following types for the meanings of

expressions in natural language:

• e, the type of individuals

• t, the type of truth values

• ⟨σ, τ⟩, where σ and τ are types (the type of functions from Dσ to Dτ)

Function notation

For the purposes of talking about the composition rules that are necessary for building up the meanings of sentences from the meanings of their parts, it is not necessary to think about which individuals are actually mapped to 1 or 0 in which actual models. So we make our lives easier and skip all that by writing:

(22) [[smokes]]M =[x ↦ 1 iff x smokes in M ]

where M is an arbitrary model, and the natural language sentence ‘x smokes’

means that the individual x is designated as a smoker according to the interpretation function I in M .

In fact, we can just leave off M entirely for the present purposes:

(23) [[smokes]] = [x ↦ 1 iff x smokes]


The domain of the function denoted by smokes is the set of individuals. Generally,

we use ‘x’ as a variable over individuals, so the domain of the function is clear from

our choice of variable. But sometimes it is useful to be able to state conditions on

the domain. Taking inspiration from Heim and Kratzer, we will specify domain

restrictions with a colon, using expressions of the form:

[α ∶ φ ↦ γ]

where

• α is the argument variable, as before (a letter that stands for an arbitrary

argument of the function we are defining)

• φ is the domain condition (places a condition on possible values for α)

• γ is the value description (specifies the value that our function assigns to α)

So the more expanded notation would be:2

(24) [[smokes]] = [x : x ∈ De ↦ 1 iff x smokes]

We will sometimes abbreviate this as:

(25) [[smokes]] = [x ∈ De ↦ 1 iff x smokes]

And we will leave off the domain condition entirely when we use the variables x,

y, and z.

The lexical entry for a transitive verb looks like this:

(26) [[likes]] = [x ↦ [ y ↦ 1 iff y likes x]]

(Notice that it’s ‘y likes x’, not ‘x likes y’, because the verb combines first with its

object, and then with its subject.)

To express the result of applying the function to an argument, we write just the

value description, with the argument substituting for all instances of the argument variable.

(27) [x ↦ [ y ↦ 1 iff y likes x]](Barack Obama)

= [y ↦ 1 iff y likes Barack Obama]
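The descriptive function notation corresponds closely to anonymous functions in a programming language. The sketch below is illustrative only: the facts in `likes_facts` and `smokers` are made up, and the helper `restricted`, which mimics the [α : φ ↦ γ] format, is a hypothetical name introduced here.

```python
D_e = {"Barack Obama", "Angela Merkel", "Hugh Grant"}

# Made-up facts, for illustration only: <liker, liked> pairs and a set of smokers.
likes_facts = {("Hugh Grant", "Barack Obama")}
smokers = {"Barack Obama", "Angela Merkel"}

# [x ↦ [y ↦ 1 iff y likes x]]
likes = lambda x: (lambda y: int((y, x) in likes_facts))

# Applying the function to its first argument (the object) substitutes it for x:
likes_barack = likes("Barack Obama")      # [y ↦ 1 iff y likes Barack Obama]
print(likes_barack("Hugh Grant"))         # 1
print(likes_barack("Angela Merkel"))      # 0


def restricted(condition, value_description):
    """[α : φ ↦ γ]: defined only for arguments that satisfy the domain condition."""
    def f(arg):
        if not condition(arg):
            raise ValueError(f"{arg!r} is outside the domain of this function")
        return value_description(arg)
    return f


# [x : x ∈ De ↦ 1 iff x smokes]
smokes = restricted(lambda x: x in D_e, lambda x: int(x in smokers))
print(smokes("Hugh Grant"))               # 0
```

Calling `smokes` on something outside `D_e` raises an error rather than returning 0, which is the difference between being mapped to false and being outside the domain.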

2 In Heim and Kratzer’s notation, the same function would be written: ‘λx : x ∈ De . x smokes’. We avoid λ notation in the meta-language here, because it is more customary in mathematics to specify functions by description using the ↦ symbol, and it avoids confusion over whether our metalanguage expressions are supposed to be treated as object language expressions of some variant of Church’s lambda calculus.

Note: Two kinds of formal stuff. Notice that (lambdified) English, along with

set-theory talk, is the meta-language, the language with which we describe the semantic values that object language expressions may have.

So there’s two kinds of formal stuff:

• meta-language (↦, symbols of set theory)

• object language: when we were giving a syntax and semantics for predicate

calculus, symbols of predicate calculus were part of the object language.

Now that we are giving a semantics for natural language directly, logic is no

longer part of the picture.

Example. Overview of how the compositional derivation of the truth conditions

for Barack smokes will go:

(28) S: t (FA)
       NP: e (NN)
         N: e (NN)
           Barack: e (TN)
       VP: ⟨e, t⟩ (NN)
         V: ⟨e, t⟩ (NN)
           smokes: ⟨e, t⟩ (TN)

Compositional derivation of the truth conditions:

[[[S [NP [N Barack ] ] [VP [V smokes ] ] ]]]
= [[[VP [V smokes ] ]]]([[[NP [N Barack ] ]]])    by Functional Application
= [[[V smokes ]]]([[[NP [N Barack ] ]]])          by Non-branching Nodes
= [[smokes]]([[Barack]])                          by Non-branching Nodes (× 3)
= [x ↦ 1 iff x smokes]([[Barack]])                by Terminal Nodes
= [x ↦ 1 iff x smokes](Barack Obama)              by Terminal Nodes
= 1 iff Barack Obama smokes.                      by function application

Note: The last step does not involve any composition rules; we’re done breaking

down the tree and it’s just a matter of simplifying the expression at this point.

3.3 Transitive and ditransitive verbs

Semantics for transitive verbs.

Example:

[S [NP [N Angela ] ] [VP [V likes ] [NP [N Barack ] ] ] ]

Exercise: Derive the truth conditions for this using FA for all the branching nodes. Start by decorating the tree with the semantic types of its nodes; the VP should be a function from individuals to truth values (type ⟨e, t⟩).

Ditransitive verbs. Suppose we have a phrase structure rule for ditransitive verbs

like tell, that generates sentences like X told Y about Z. It could generate trees like

this for example:

(29) [S [NP [N Barack Obama ] ] [VP [V told ] [NP [N Angela Merkel ] ] [PP [P about ] [NP [N Barack Obama ] ] ] ] ]

What type should tell be? What order should the arguments come in? This is where

linking theory comes in.

4 Fun with Functional Application

Composition rules

For branching nodes:

(30) Functional Application (FA)

If α is a branching node and {β, γ} the set of its daughters, then, for any

assignment a, if [[β]] is a function whose domain contains [[γ]], then [[α]] =

[[β]]([[γ]]).

For non-branching nodes:

(31) Non-branching Nodes (NN)

If α is a non-branching node and β its daughter, then, for any assignment a,

[[α]]=[[β]].

(32) Terminal Nodes (TN)

If α is a terminal node occupied by a lexical item, then [[α]] is specified in

the lexicon.

4.1 Rick Perry is conservative

(33) [[Rick Perry]] = Rick Perry

(34) [[conservative]] = [x ↦ 1 iff x is conservative]


What to do with is? How about just an identity function:

(35) [[is]] = [f ∈ D⟨e,t⟩ ↦ f]

(36) S: t
       NP: e
         N: e
           Rick Perry
       VP: ⟨e, t⟩
         V: ⟨⟨e, t⟩, ⟨e, t⟩⟩
           is
         A: ⟨e, t⟩
           conservative

[[[S [NP [N Rick Perry ] ] [VP [V is ] [A conservative ] ] ]]]
= [[[VP [V is ] [A conservative ] ]]]([[[NP [N Rick Perry ] ]]])    FA
= [[[V is ]]]([[[A conservative ]]])([[[NP [N Rick Perry ] ]]])    FA
= [[is]]([[conservative]])([[Rick Perry]])    NN
= [f ∈ D⟨e,t⟩ ↦ f]([x ↦ 1 iff x is conservative])(Rick Perry)    TN
= [x ↦ 1 iff x is conservative](Rick Perry)    function application
= 1 iff Rick Perry is conservative    function application

4.2 Rick Perry is in Texas

(37) [[in]] = [y ↦ [x ↦ 1 iff x is in y]]

(38) [[Texas]] = Texas

S: t
  NP: e
    N: e
      Rick Perry
  VP: ⟨e, t⟩
    V: ⟨⟨e, t⟩, ⟨e, t⟩⟩
      is
    PP: ⟨e, t⟩
      P: ⟨e, ⟨e, t⟩⟩
        in
      NP: e
        N: e
          Texas

4.3 Rick Perry is proud of Texas

(39) [[proud]] = [y ↦ [x ↦ 1 iff x is proud of y]]

(40) [[of]] = [x ↦ x]

S: t
  NP: e
    N: e
      Rick Perry
  VP: ⟨e, t⟩
    V: ⟨⟨e, t⟩, ⟨e, t⟩⟩
      is
    AP: ⟨e, t⟩
      A: ⟨e, ⟨e, t⟩⟩
        proud
      PP: e
        P: ⟨e, e⟩
          of
        NP: e
          N: e
            Texas
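The lexical entries (33) to (40) can be tried out directly as higher-order functions. This is a sketch under stated assumptions: the three fact sets below are toy data invented for the example, and the trailing underscores in `is_` and `in_` only avoid clashes with Python keywords.

```python
# Toy facts, assumed for illustration only.
conservatives = {"Rick Perry"}
located_in = {("Rick Perry", "Texas")}
proud_of = {("Rick Perry", "Texas")}

rick_perry, texas = "Rick Perry", "Texas"               # (33), (38)
conservative = lambda x: int(x in conservatives)         # (34)
is_ = lambda f: f                                        # (35): identity on type <e,t>
in_ = lambda y: (lambda x: int((x, y) in located_in))    # (37)
proud = lambda y: (lambda x: int((x, y) in proud_of))    # (39)
of = lambda x: x                                         # (40): identity on type e

# Each sentence is computed purely by function application, bottom-up:
print(is_(conservative)(rick_perry))      # Rick Perry is conservative    -> 1
print(is_(in_(texas))(rick_perry))        # Rick Perry is in Texas        -> 1
print(is_(proud(of(texas)))(rick_perry))  # Rick Perry is proud of Texas  -> 1
print(is_(in_(texas))(texas))             # Texas is in Texas             -> 0
```

The nesting of the calls mirrors the trees: `proud(of(texas))` is the AP, `is_(...)` is the VP, and the final application to `rick_perry` is the S node.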

4.4 Rick Perry is a Republican

(41) [[Republican]] = [x ↦ 1 iff x is a Republican]

Hey, let’s make the indefinite article vacuous too:

(42) [[a]] = [f ∈ D⟨e,t⟩ ↦ f]

(43) S: t
       NP: e
         N: e
           Rick Perry
       VP: ⟨e, t⟩
         V: ⟨⟨e, t⟩, ⟨e, t⟩⟩
           is
         NP: ⟨e, t⟩
           D: ⟨⟨e, t⟩, ⟨e, t⟩⟩
             a
           N′: ⟨e, t⟩
             Republican

5 Predicate Modification

5.1 Rick Perry is a conservative Republican

(44) S: t
       NP: e
         N: e
           Rick Perry
       VP: ⟨e, t⟩
         V: ⟨⟨e, t⟩, ⟨e, t⟩⟩
           is
         NP: ⟨e, t⟩
           D: ⟨⟨e, t⟩, ⟨e, t⟩⟩
             a
           N′: ⟨e, t⟩
             A: ⟨e, t⟩ or ⟨⟨e, t⟩, ⟨e, t⟩⟩
               conservative
             N′: ⟨e, t⟩
               Republican

Our lexical entry for conservative from above is type ⟨e, t⟩:

(45) [[conservative1]] = [x ↦ 1 iff x is conservative]

If we want to use Functional Application here, we need conservative to be a function of type ⟨⟨e, t⟩, ⟨e, t⟩⟩.

(46) [[conservative2]] = [f ∈ D⟨e,t⟩ ↦ [x ↦ 1 iff f(x) = 1 and x is conservative]]

Now, [[[N′ [A conservative2 ] [N Republican ] ]]]
= [[[A conservative2 ]]]([[[N Republican ]]])    FA
= [[conservative2]]([[Republican]])    NN
= [f ∈ D⟨e,t⟩ ↦ [x ↦ 1 iff f(x) = 1 and x is conservative]]([x ↦ 1 iff x is a Republican])    TN
= [x ↦ 1 iff x is a Republican and x is conservative]    function application

This is Montague’s strategy. All adjectives are ⟨⟨e, t⟩, ⟨e, t⟩⟩ for him, and in predicate position they combine with a silent noun, despite the ungrammaticality of:

*Rick Perry is conservative Republican.

Alternative strategy: Use another composition rule.

(47) Predicate Modification (PM)

If α is a branching node, {β, γ} is the set of α’s daughters, and [[β]] and [[γ]] are both in D⟨e,t⟩ , then [[α]] = [x ↦ 1 iff [[β]](x) = 1 and [[γ]](x) = 1]

Now, [[[N′ [A conservative1 ] [N Republican ] ]]]
= [x ↦ 1 iff [[[A conservative1 ]]](x) = [[[N Republican ]]](x) = 1]    (PM)
= [x ↦ 1 iff [[conservative1 ]](x) = [[Republican]](x) = 1]    (NN)
= [x ↦ 1 iff x is conservative and x is a Republican]    (function application)
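The two strategies can be compared in a small Python sketch. Everything here is an assumption made for illustration: the sets REPUBLICAN and CONSERVATIVE, the individuals Alice and Bob, and the helper pm are hypothetical, and booleans stand in for 1 and 0.

```python
# Toy facts, invented for illustration only.
REPUBLICAN = {"Rick Perry", "Alice"}
CONSERVATIVE = {"Rick Perry", "Bob"}
DOMAIN = ["Rick Perry", "Alice", "Bob"]

republican = lambda x: x in REPUBLICAN                 # type <e,t>
conservative1 = lambda x: x in CONSERVATIVE            # type <e,t>, as in (45)
# Higher-typed entry, as in (46): takes the noun meaning as its argument.
conservative2 = lambda f: lambda x: f(x) and x in CONSERVATIVE

def pm(f, g):
    """Predicate Modification: pointwise conjunction of two <e,t> meanings."""
    return lambda x: f(x) and g(x)

via_fa = conservative2(republican)       # Functional Application strategy
via_pm = pm(conservative1, republican)   # Predicate Modification strategy

# Both strategies characterize the same set in this toy model.
agree = all(via_fa(x) == via_pm(x) for x in DOMAIN)
print(agree)
```

The design difference is where the conjunction lives: in the lexical entry (conservative2) or in the composition rule (pm).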

5.2 Austin is a city in Texas

We can also use Predicate Modification with PP modifiers:


(48)
[S: t
  [NP: e [N: e Austin ] ]
  [VP: ⟨e, t⟩
    [V: ⟨⟨e, t⟩, ⟨e, t⟩⟩ is ]
    [NP: ⟨e, t⟩
      [D: ⟨⟨e, t⟩, ⟨e, t⟩⟩ a ]
      [N′: ⟨e, t⟩
        [N′: ⟨e, t⟩ city ]
        [PP: ⟨e, t⟩
          [P: ⟨e, ⟨e, t⟩⟩ in ]
          [NP: e Texas ] ] ] ] ] ]

6 The definite article

What if we have the governor of Texas instead of Rick Perry?

6.1 The negative square root of 4

Regarding the negative square root of 4, Frege says, “We have here a case in which

out of a concept-expression [i.e., an expression whose meaning is of type ⟨e, t⟩] a

compound proper name is formed [that is to say, the entire expression is of type e]

with the help of the definite article in the singular, which is at any rate permissible

when one and only one object falls under the concept.”

“Permissible”: the denotes a function of type ⟨⟨e, t⟩, e⟩ that is only defined for

input predicates that characterize one single entity. In other words, the presupposes

existence and uniqueness. This can be implemented as a restriction on the domain

(highlighted with boldface type).

(49) [[the]] = [f ∈ D⟨e,t⟩ : there is exactly one x such that f(x) = 1 ↦ the unique

y such that f(y) = 1]

So the is not a total function from D⟨e,t⟩ to De ; it is a partial function from D⟨e,t⟩ to De . But we can still give it the type ⟨⟨e, t⟩, e⟩ if we interpret this to allow partial functions.

To flesh out Frege’s analysis of this example further, Heim and Kratzer suggest that

square root is a “transitive noun”, with a meaning of type ⟨e, ⟨e, t⟩⟩, and that “of is

vacuous, [[square root]] applies to 4 via Functional Application, and the result of

that composes with [[negative]] under predicate modification.”

(50) [[negative]] = [x ↦ 1 iff x is negative]

(51) [[square root]] = [y ↦ [x ↦ 1 iff x is a square root of y]]

(52) [[of]] = [x ∈ De ↦ x]

(53) [[four]] = 4

So the constituents will have denotations of the following types:

[NP: e
  [D: ⟨⟨e, t⟩, e⟩ the ]
  [N: ⟨e, t⟩
    [A: ⟨e, t⟩ negative ]
    [N: ⟨e, t⟩
      [N: ⟨e, ⟨e, t⟩⟩ square root ]
      [PP: e
        [P: ⟨e, e⟩ of ]
        [NP: e [N: e four ] ] ] ] ] ]

6.2 Top-down evaluation

To compute the value “top-down”, we put the whole tree in one big old pair of

denotation brackets, and use composition rules to break down the tree. Here I am

putting the name of the rule I used as a subscript on the equals sign, because I don’t

have enough room to put them off to the right.

[[ [NP [D the ] [N [A negative ] [N [N square root ] [PP [P of ] [NP [N four ] ] ] ] ] ] ]]

=FA [[ [D the ] ]]([[ [N [A negative ] [N [N square root ] [PP [P of ] [NP [N four ] ] ] ] ] ]])

=NN [[the]]([[ [N [A negative ] [N [N square root ] [PP [P of ] [NP [N four ] ] ] ] ] ]])

=PM [[the]]([x ↦ 1 iff [[ [A negative ] ]](x) = [[ [N [N square root ] [PP [P of ] [NP [N four ] ] ] ] ]](x) = 1])

=NN,FA [[the]]([x ↦ 1 iff [[negative]](x) = [[square root]]([[ [PP [P of ] [NP [N four ] ] ] ]])(x) = 1])

=FA [[the]]([x ↦ 1 iff [[negative]](x) = [[square root]]([[ [P of ] ]]([[ [NP [N four ] ] ]]))(x) = 1])

=NN [[the]]([x ↦ 1 iff [[negative]](x) = [[square root]]([[of]]([[four]]))(x) = 1])

Now we are done breaking down the tree. No more composition rules.

[[the]]([x ↦ 1 iff [[negative]](x) = [[square root ]]([[of]]([[four]]))(x) = 1])

= [[the]]([x ↦ 1 iff [[negative]](x) = [[square root ]]([[of]](4))(x) = 1])

= [[the]]([x ↦ 1 iff [[negative]](x) = [[square root ]]([x ↦ x](4))(x) = 1])

= [[the]]([x ↦ 1 iff [[negative]](x) = [[square root ]](4)(x) = 1])

= [[the]]([x ↦ 1 iff [[negative]](x) = [y ↦ [z ↦ 1 iff z is a square root of y]](4)(x) = 1])

= [[the]]([x ↦ 1 iff [[negative]](x) = [z ↦ 1 iff z is a square root of 4](x) = 1])

= [[the]]([x ↦ 1 iff [[negative]](x) = 1 and x is a square root of 4])

= [[the]]([x ↦ 1 iff [z ↦ 1 iff z is negative](x) = 1 and x is a square root of 4])

= [[the]]([x ↦ 1 iff x is negative and x is a square root of 4])

= [f ∈ D⟨e,t⟩ : there is exactly one x such that f(x) = 1 ↦ the unique y such that f(y) = 1]([x ↦ 1 iff x is negative and x is a square root of 4])

= the unique y such that y is negative and y is a square root of 4

= -2

6.3 Bottom-up style

[NP: e
  [D: ⟨⟨e, t⟩, e⟩ the ]
  [N: ⟨e, t⟩
    [A: ⟨e, t⟩ negative ]
    [N: ⟨e, t⟩
      [N: ⟨e, ⟨e, t⟩⟩ square root ]
      [PP: e
        [P: ⟨e, e⟩ of ]
        [NP: e [N: e four ] ] ] ] ] ]

We need to compute a semantic value for every subtree/node. For convenience, we

can group the nodes according to the string of words that they dominate, and start

from the most deeply embedded part of the tree.

Note: I reserve the right to abbreviate e.g. [PP [P of ] [NP [N four ] ] ] as [PP of four],

omitting all but the outermost brackets.

Bottom-up derivation

four
• [[four]] = 4    (TN)
• [[[NP [N four] ]]] = [[[N four]]] = [[four]] = 4    (NN)

of
• [[[P of ]]] = [[of]] = [x ↦ x]    (TN, NN)

of four
• [[[PP of four]]] = [[[P of ]]]([[[NP four]]])    (FA)
  = [x ↦ x](4) = 4

square root
• [[[N square root]]] = [[square root]]    (NN)
  = [y ↦ [x ↦ 1 iff x is a square root of y]]    (TN)

square root of four
• [[[N square root of four]]] = [[[N square root]]]([[[PP of four]]])    (FA)
  = [y ↦ [x ↦ 1 iff x is a square root of y]](4)
  = [x ↦ 1 iff x is a square root of 4]
  = [x ↦ 1 iff x ∈ {2, -2}]

negative
• [[[A negative]]] = [[negative]] = [x ↦ 1 iff x is negative]    (TN, NN)

negative square root of four
• [[[N negative square root of four]]]
  = [x ↦ 1 iff [[[A negative]]](x) = [[[N square root of four]]](x) = 1]    (PM)
  = [x ↦ 1 iff x is a square root of 4 and x is negative]
  = [x ↦ 1 iff x ∈ {-2}]

the
• [[[D the ]]] = [[the]]    (TN, NN)
  = [f ∈ D⟨e,t⟩ : there is exactly one x such that f(x) = 1 ↦ the unique y such that f(y) = 1]

the negative square root of four
• [[[NP the negative square root of four]]]
  = [[[D the ]]]([[[N negative square root of four]]])    (FA)
  = [f ∈ D⟨e,t⟩ : there is exactly one x such that f(x) = 1 ↦ the unique y such that f(y) = 1]([x ↦ 1 iff x ∈ {-2}])
  = the unique y such that y ∈ {-2}
  = -2
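The bottom-up computation can be mirrored in a short Python sketch. The finite DOMAIN is an assumption standing in for De (here, the integers from -10 to 10), and the helper names pm and the are hypothetical. The partiality of the is modelled by raising an error when the uniqueness presupposition fails.

```python
DOMAIN = range(-10, 11)   # assumed finite stand-in for the domain of entities

four = 4                                        # type e
of = lambda x: x                                # type <e,e>, vacuous
square_root = lambda y: lambda x: x * x == y    # type <e,<e,t>>
negative = lambda x: x < 0                      # type <e,t>

def pm(f, g):
    """Predicate Modification: pointwise conjunction."""
    return lambda x: f(x) and g(x)

def the(f):
    """Partial function: defined only if exactly one entity satisfies f."""
    witnesses = [x for x in DOMAIN if f(x)]
    if len(witnesses) != 1:
        raise ValueError("presupposition failure: need exactly one witness")
    return witnesses[0]

pp = of(four)                   # [[of four]] = 4
inner_n = square_root(pp)       # [[square root of four]]
outer_n = pm(negative, inner_n) # [[negative square root of four]]
result = the(outer_n)           # [[the negative square root of four]]
print(result)
```

Calling the(inner_n) instead raises ValueError in this sketch, because both 2 and -2 satisfy the predicate.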

Note that we used the same composition rules as we did using the top-down style!

This style is a little bit simpler and cleaner. But it is not as convenient when you

have to manipulate variable assignments... which we will cover next!

7 Predicate calculus: now with variables!

Previously, I ignored formulas of predicate calculus with quantifiers and variables

like these:

∀x HAPPY (x)    ‘for all x, x is happy’

∃x ¬HAPPY (x)    ‘there exists an x such that it is not the case that x is happy’

In order to interpret formulas with variables, we need to make interpretation relative to a model and an assignment function:

[[φ]]M,g

An assignment function assigns individuals to variables. Examples:

g1 = [ x → Maggie, y → Bart, z → Bart ]

g2 = [ x → Bart, y → Bart, z → Bart ]

Informally, ∀x HAPPY (x) is true iff: no matter which individual we assign to x, HAPPY (x) is true. In other words, for every element d of the domain, d ∈ [[HAPPY ]].

Informally, ∃x HAPPY (x) is true iff: we can find some individual to assign to x

such that HAPPY (x) is true. In other words, there is some element in the domain d

such that d ∈ [[HAPPY ]].

The assignment function determines what x is assigned to. Formally:

[[x]]M,g = g(x)

This in turn influences the value of a formula containing x in which x is not bound

by a quantifier (a formula in which x is free).

Let’s interpret HAPPY (x) using the reality model Mr and the assignment functions

g1 and g2 .

[[HAPPY (x)]]Mr ,g1 = 1

iff [[x]]Mr ,g1 ∈ [[HAPPY ]]Mr ,g1


iff g1 (x) ∈ Ir (HAPPY )

iff Maggie ∈ {Bart}.

Maggie /∈ {Bart} so [[HAPPY (x)]]Mr ,g1 = 0.

[[HAPPY (x)]]Mr ,g2 = 1

iff [[x]]Mr ,g2 ∈ [[HAPPY ]]Mr ,g2

iff g2 (x) ∈ Ir (HAPPY )

iff Bart ∈ Ir (HAPPY ).

Bart ∈ {Bart} so [[HAPPY (x)]]Mr ,g2 = 1.

Intuitively, this means that ∃x HAPPY (x) is true, but ∀x HAPPY (x) is false in Mr .

New interpretation rules:

• Constants

If α is a constant, then [[α]]M,g = I(α).

• Variables – all new!

If α is a variable, then [[α]]M,g = g(α).

• Atomic formulae

If π is an n-ary predicate and α1 , ...αn are terms, then [[π(α1 , ..., αn )]]M,g =

1 iff

⟨[[α1 ]]M,g , ..., [[αn ]]M,g ⟩ ∈ [[π]]M,g

If π is a unary predicate and α is a term, then [[π(α)]]M,g = 1 iff [[α]]M,g ∈

[[π]]M,g .

• Negation

[[¬φ]]M,g = 1 if [[φ]]M,g = 0; otherwise [[¬φ]]M,g = 0.

• Connectives

[[φ ∧ ψ]]M,g = 1 if [[φ]]M,g = 1 and [[ψ]]M,g = 1; 0 otherwise. Similarly for

[[φ ∨ ψ]]M,g , [[φ → ψ]]M,g , and [[φ ↔ ψ]]M,g .

• Universal quantification – all new!
[[∀vφ]]M,g = 1 iff for all d ∈ D, [[φ]]M,g′ = 1, where g′ is an assignment function exactly like g except that g′ (v) = d.

• Existential quantification – all new!
[[∃vφ]]M,g = 1 iff there is a d ∈ D such that [[φ]]M,g′ = 1, where g′ is an assignment function exactly like g except that g′ (v) = d.

[[∀x HAPPY (x)]]Mr ,g1 = 1
iff for all d ∈ D, [[HAPPY (x)]]Mr ,g′ = 1, where g′ is an assignment function exactly like g1 except that g′ (x) = d.

This can be falsified by setting d equal to Maggie, so g′ (x) = Maggie. [[HAPPY (x)]]Mr ,g′ = 0 in this case.

But there is a d ∈ D such that [[HAPPY (x)]]Mr ,g′ = 1, where g′ is an assignment function exactly like g1 except that g′ (x) = d. As shown above, there is such a d: Bart. So [[∃x HAPPY (x)]]Mr ,g1 = 1.
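The interpretation rules above can be sketched as a small recursive evaluator in Python. This is a hedged illustration: the tuple encoding of formulas and the function name evaluate are hypothetical, and the domain D is assumed to contain just Bart and Maggie, with I(HAPPY) = {Bart} as in the worked example.

```python
# Assumed toy model: only the facts used in the worked example.
D = {"Bart", "Maggie"}
I = {"HAPPY": {"Bart"}}

g1 = {"x": "Maggie", "y": "Bart", "z": "Bart"}
g2 = {"x": "Bart", "y": "Bart", "z": "Bart"}

def evaluate(phi, g):
    """Return the truth value of formula phi relative to assignment g."""
    op = phi[0]
    if op == "pred":                 # ("pred", "HAPPY", "x")
        _, name, var = phi
        return g[var] in I[name]
    if op == "not":                  # ("not", body)
        return not evaluate(phi[1], g)
    if op == "forall":               # ("forall", "x", body)
        _, v, body = phi
        # g' is exactly like g except that g'(v) = d
        return all(evaluate(body, {**g, v: d}) for d in D)
    if op == "exists":               # ("exists", "x", body)
        _, v, body = phi
        return any(evaluate(body, {**g, v: d}) for d in D)
    raise ValueError(f"unknown operator: {op}")

happy_x = ("pred", "HAPPY", "x")
print(evaluate(happy_x, g1), evaluate(happy_x, g2))
print(evaluate(("forall", "x", happy_x), g1))
print(evaluate(("exists", "x", happy_x), g1))
```

The modified assignment g′ is built with {**g, v: d}, which copies g and overrides only the value for v, so the original assignment is never mutated.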

8 Relative clauses

Heim and Kratzer use assignment functions for the interpretation of relative clauses

such as the following:

(54) The car that Joe bought is very fancy.

(55) The woman who admires Joe is very lovely.

Semantically, relative clauses are just like adjectives:

(56) The red car is very fancy.

(57) The Swedish woman is very lovely.

They are type ⟨e, t⟩ and combine via Predicate Modification.

(58) [NP: ⟨e, t⟩ [NP: ⟨e, t⟩ car ] [CP: ⟨e, t⟩ that Joe bought ] ]

CP stands for “Complementizer Phrase” and Heim and Kratzer assume the following syntax for relative clause CPs:

(59) [CP which_i [C′ [C that ] [S [DP Joe ] [VP [V bought ] [DP t_i ] ] ] ] ]

(60) [CP who_i [C′ [C that ] [S [DP t_i ] [VP [V likes ] [DP Joe ] ] ] ] ]

The text that is struck out like so is deleted. Heim and Kratzer assume that either

the relative pronoun which or who or the complementizer that is deleted.

Interpretation of variables

(61) Traces Rule (TR)

If αi is a trace and g is an assignment, [[αi ]]g = g(i)

g1 = [ 1 → Maggie, 2 → Bart, 3 → Maggie ]

g2 = [ 1 → Lisa, 2 → Bart, 3 → Maggie ]

[[t1 ]]g1 = Maggie

[[t1 ]]g2 = Lisa

So now we interpret everything with respect to an assignment.


(62) [[ [VP [V abandoned ] [DP t1 ] ]]]g = x ↦ 1 iff x abandoned g(1)

But there are assignment-independent denotations too.

(63) Bridge to assignment-independence (BI)

For any tree α, α is in the domain of [[]] iff for all assignments g and g′ , [[α]]g = [[α]]g′ .

If α is in the domain of [[]], then for all assignments g, [[α]] = [[α]]g .

So we can still have assignment-independent lexical entries like:

(64) [[laugh]] = x ↦ 1 iff x laughs

and then by (63), we have:

(65) [[laugh]]g1 = x ↦ 1 iff x laughs

(66) [[laugh]]g2 = x ↦ 1 iff x laughs

We need to redo the composition rules now too:

(67) Lexical Terminals (LT)

If α is a terminal node occupied by a lexical item, then [[α]] is specified in

the lexicon.

(68) Non-branching Nodes (NN)

If α is a non-branching node and β its daughter, then, for any assignment g,

[[α]]g =[[β]]g .

(69) Functional Application (FA)

If α is a branching node and {β, γ} the set of its daughters, then, for any

assignment g, if [[β]]g is a function whose domain contains [[γ]]g , then [[α]]g

= [[β]]g ([[γ]]g ).

(70) Predicate Modification (PM)

If α is a branching node and {β, γ} the set of its daughters, then, for any

assignment g, if [[β]]g and [[γ]]g are both functions of type ⟨e, t⟩, then [[α]]g

= x ↦ 1 iff [[β]]g (x) = [[γ]]g (x) = 1.

Predicate abstraction. The S in a relative clause is type t. How do we get the CP to have type ⟨e, t⟩?

(71)
[CP: ⟨e, t⟩
  which1
  [C′
    [C that ]
    [S: t
      [DP: e Joe ]
      [VP: ⟨e, t⟩
        [V: ⟨e, ⟨e, t⟩⟩ bought ]
        [DP: e t1 ] ] ] ] ]

Heim and Kratzer:

• The complementizer that is vacuous: [[that S]] = [[S]], or equivalently [[that]] = [p ∈ Dt ↦ p]

• The relative pronoun is vacuous too, but it triggers a special rule called Predicate Abstraction

(72) Predicate Abstraction (PA)

If α is a branching node whose daughters are a relative pronoun indexed i and β, then [[α]]g = [x ↦ [[β]]g^{x/i}].

g^{x/i} is an assignment that is just like g except that x is assigned to i.

Note that x is a variable that is part of the meta-language, bound by the metalanguage operator λ, ranging over objects in the domain.

So [[(71)]] = x ↦ 1 iff Joe bought x.

In case you don’t believe me:

[[[CP which1 [C′ [C that ] [S [DP Joe ] [VP [V bought ] [DP t1 ] ] ] ] ]]]
= [[[CP which1 [C′ [C that ] [S [DP Joe ] [VP [V bought ] [DP t1 ] ] ] ] ]]]g (any g)    (BI)
= [x ↦ [[[C′ [C that ] [S [DP Joe ] [VP [V bought ] [DP t1 ] ] ] ]]]g^{x/1}]    (PA)
= [x ↦ [[[C that ]]]g^{x/1}([[[S [DP Joe ] [VP [V bought ] [DP t1 ] ] ]]]g^{x/1})]    (FA)
= [x ↦ [[[C that ]]]([[[S [DP Joe ] [VP [V bought ] [DP t1 ] ] ]]]g^{x/1})]    (BI)
= [x ↦ [p ∈ Dt ↦ p]([[[S [DP Joe ] [VP [V bought ] [DP t1 ] ] ]]]g^{x/1})]    (LT)
= [x ↦ [[[S [DP Joe ] [VP [V bought ] [DP t1 ] ] ]]]g^{x/1}]    (function application)
= [x ↦ [[[VP [V bought ] [DP t1 ] ]]]g^{x/1}([[[DP Joe ]]]g^{x/1})]    (FA)
= [x ↦ [[[VP [V bought ] [DP t1 ] ]]]g^{x/1}([[[DP Joe ]]])]    (BI)
= [x ↦ [[[VP [V bought ] [DP t1 ] ]]]g^{x/1}(Joe)]    (NN, LT)
= [x ↦ [[[V bought ]]]g^{x/1}([[[DP t1 ]]]g^{x/1})(Joe)]    (FA)
= [x ↦ [[[V bought ]]]([[[DP t1 ]]]g^{x/1})(Joe)]    (BI)
= [x ↦ [z ↦ [y ↦ 1 iff y bought z]]([[[DP t1 ]]]g^{x/1})(Joe)]    (LT, NN)
= [x ↦ [z ↦ [y ↦ 1 iff y bought z]]([[t1 ]]g^{x/1})(Joe)]    (NN)
= [x ↦ [z ↦ [y ↦ 1 iff y bought z]](x)(Joe)]    (TR)
= [x ↦ [y ↦ 1 iff y bought x](Joe)]    (function application)
= [x ↦ 1 iff Joe bought x]    (function application)
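The same derivation can be sketched in Python by treating every denotation as a function from assignments to values. This is an illustration under stated assumptions: assignments are dictionaries from indices to entities, the set BOUGHT and the entity names are invented, and the helpers lex, trace, fa and pa are hypothetical names for the lexical-terminal, Traces, Functional Application and Predicate Abstraction rules.

```python
# Toy facts, invented for illustration: (buyer, thing bought).
BOUGHT = {("Joe", "car1")}

def lex(value):
    """Assignment-independent lexical meaning: ignores the assignment."""
    return lambda g: value

def trace(i):
    """Traces Rule: [[t_i]]^g = g(i)."""
    return lambda g: g[i]

def fa(beta, gamma):
    """Functional Application relative to an assignment."""
    return lambda g: beta(g)(gamma(g))

def pa(i, beta):
    """Predicate Abstraction: [[alpha]]^g = [x -> [[beta]]^(g with i mapped to x)]."""
    return lambda g: (lambda x: beta({**g, i: x}))

bought = lex(lambda z: lambda y: (y, z) in BOUGHT)   # [z -> [y -> y bought z]]
joe = lex("Joe")
that = lex(lambda p: p)                              # vacuous complementizer

vp = fa(bought, trace(1))    # [[bought t1]]^g depends on g(1)
s = fa(vp, joe)              # [[Joe bought t1]]^g
c_bar = fa(that, s)
cp = pa(1, c_bar)            # [[which1 that Joe bought t1]]^g

relative_clause = cp({})     # works even with the empty assignment
print(relative_clause("car1"), relative_clause("car2"))
```

Because pa overwrites index 1 before the trace is evaluated, the result does not depend on the starting assignment, which is what lets the CP have an assignment-independent denotation.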

9 Quantifiers

How do we analyze sentences like the following:

(73) Somebody is happy.

(74) Everybody is happy.

(75) Nobody is happy.

(76) {Some, every, at least one, at most one, no} linguist is happy.

(77) {Few, some, several, many, most, more than two} linguists are happy.

[S: t [NP: ? ... ] [VP: ⟨e, t⟩ is happy ] ]

9.1 Type e?

Most of the DPs we have seen so far have been of type e:

• Proper names: Mary, John, Rick Perry, 4, Texas

• Definite descriptions: the governor of Texas, the square root of 4

• Pronouns and traces: it, t

Exception: indefinites like a Republican after is.

Should words and phrases like Nobody and At least one person be treated as type

e? How can we tell?

Predictions of the type e analysis:

• They should validate subset-to-superset inferences

• They should validate the law of contradiction

• They should validate the law of the excluded middle

Subset-to-superset inferences

(78) John came yesterday morning.

Therefore, John came yesterday.

This is a valid inference if John is type e. Proof: [[came yesterday morning]] ⊆

[[came yesterday]] (everything that came yesterday morning came yesterday), and

if the subject denotes an individual, then the sentence means that the subject is an

element of the set denoted by the VP. If the first sentence is true, then the subject

is an element of the set denoted by the VP, which means that the second sentence

must be true. QED.

(79) At most one letter came yesterday morning.

Therefore, at most one letter came yesterday.

This inference is not valid, so at most one letter must not be type e.

The law of contradiction (¬[P ∧ ¬P ])

This sentence is contradictory:

(80) Mount Rainier is on this side of the border, and Mount Rainier is on the

other side of the border.

The fact that it is contradictory follows from these assumptions:

• [[Mount Rainier]] ∈ De

• [[is on this side of the border]] ∩ [[is on the other side of the border]] = ∅

(Nothing is both on this side of the border and on the other side of the border)

• When the subject is type e, the sentence means that it is in the set denoted

by the VP

• standard analysis of and

This sentence is not contradictory:

(81) More than two mountains are on this side of the border, and more than two

mountains are on the other side of the border.

So more than two mountains must not be type e.

The law of the excluded middle (P ∨ ¬P )

(82) I am over 30 years old, or I am under 40 years old.

This is a tautology. That follows from the following assumptions:

• [[I]] ∈ De

• [[over 30 years old]] ∪ [[under 40 years old]] = D (everything is either over 30

years old or under 40 years old)

• When the subject is type e, the sentence means that it is in the set denoted

by the VP

• standard analysis of or

This sentence is not a tautology:

(83) Every woman in this room is over 30 years old, or every woman in this room

is under 40 years old.

So every woman must not be of type e.

9.2 Solution: Generalized quantifiers

(84) [[nothing]] = f ∈ D⟨e,t⟩ ↦ 1 iff there is no x ∈ De such that f(x) = 1

(85) [[everything]] = f ∈ D⟨e,t⟩ ↦ 1 iff for all x ∈ De , f(x) = 1

(86) [[something]] = f ∈ D⟨e,t⟩ ↦ 1 iff there is some x ∈ De such that f(x) = 1

(87)
[S: t [DP: ⟨⟨e, t⟩, t⟩ everything ] [VP: ⟨e, t⟩ [V: ⟨e, t⟩ vanished ] ] ]

vs.

[S: t [DP: e Mary ] [VP: ⟨e, t⟩ [V: ⟨e, t⟩ vanished ] ] ]

(88) [[every]] = f ∈ D⟨e,t⟩ ↦ [g ∈ D⟨e,t⟩ ↦ 1 iff for all x ∈ De such that f(x) = 1,

g(x)=1 ]

(89) [[no]] = f ∈ D⟨e,t⟩ ↦ [g ∈ D⟨e,t⟩ ↦ 1 iff there is no x ∈ De such that f(x) = 1

and g(x)=1 ]

(90) [[some]] = f ∈ D⟨e,t⟩ ↦ [g ∈ D⟨e,t⟩ ↦ 1 iff there is some x ∈ De such that f(x)

= 1 and g(x)=1 ]
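The determiner meanings in (88) to (90) can be sketched as curried Python functions over a finite domain. The domain of three letters, the facts about when they came, and the extra determiner at_most_one are assumptions added for illustration; at_most_one is included only to show why the subset-to-superset inference fails for it.

```python
DOMAIN = ["l1", "l2", "l3"]   # hypothetical entities (three letters)

def every(f):
    return lambda g: all(g(x) for x in DOMAIN if f(x))

def no(f):
    return lambda g: not any(f(x) and g(x) for x in DOMAIN)

def some(f):
    return lambda g: any(f(x) and g(x) for x in DOMAIN)

def at_most_one(f):
    # Not in the lexical entries above; assumed here for the inference test.
    return lambda g: sum(1 for x in DOMAIN if f(x) and g(x)) <= 1

letter = lambda x: True
# Invented facts: l1 came in the morning, l2 came later the same day.
came_yesterday_morning = lambda x: x in {"l1"}
came_yesterday = lambda x: x in {"l1", "l2"}

premise = at_most_one(letter)(came_yesterday_morning)
conclusion = at_most_one(letter)(came_yesterday)
print(premise, conclusion)
```

In this toy model the premise holds and the conclusion does not, even though everything that came yesterday morning came yesterday.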

(91)
[S: t
  [DP: ⟨⟨e, t⟩, t⟩
    [D: ⟨⟨e, t⟩, ⟨⟨e, t⟩, t⟩⟩ every ]
    [NP: ⟨e, t⟩ thing ] ]
  [VP: ⟨e, t⟩ [V: ⟨e, t⟩ vanished ] ] ]

10 The problem of quantifiers in object position

10.1 The problem

(92)
[S: t
  [DP: ⟨⟨e, t⟩, t⟩
    [D: ⟨⟨e, t⟩, ⟨⟨e, t⟩, t⟩⟩ every ]
    [NP: ⟨e, t⟩ linguist ] ]
  [VP: ⟨e, t⟩
    [V: ⟨e, ⟨e, t⟩⟩ offended ]
    [DP: e John ] ] ]

(93)
[S: ?????????
  [DP: e John ]
  [VP: ???????????
    [V: ⟨e, ⟨e, t⟩⟩ offended ]
    [DP: ⟨⟨e, t⟩, t⟩
      [D: ⟨⟨e, t⟩, ⟨⟨e, t⟩, t⟩⟩ every ]
      [NP: ⟨e, t⟩ linguist ] ] ] ]

Two types of approaches to the problem:

1. Move the quantifier phrase to a higher position in the tree (via Quantifier

Raising), leaving a DP trace of type e in object position. (Or simulate movement via Cooper Storage, as in Head-Driven Phrase Structure Grammar.)

2. Interpret the quantifier phrase in situ. In this case one can apply a type-shifting operation to change its type.

10.2 An in situ approach

Multiple versions of lexical items:

[[everybody1 ]] = f ∈ D⟨e,t⟩ ↦ 1 iff for all persons x ∈ D, f(x) = 1

[[everybody2 ]] = f ∈ D⟨e,⟨e,t⟩⟩ ↦ [x ∈ De ↦ 1 iff for all persons y ∈ De , f(y)(x) = 1 ]

[[somebody1 ]] = f ∈ D⟨e,t⟩ ↦ 1 iff there is some person x ∈ De such that f(x) = 1


[[somebody2 ]] = f ∈ D⟨e,⟨e,t⟩⟩ ↦ [x ∈ De ↦ 1 iff there is some person y ∈ De such

that f(y)(x) = 1 ]

(94)
[S: t
  [DP: e John ]
  [VP: ⟨e, t⟩
    [V: ⟨e, ⟨e, t⟩⟩ offended ]
    [DP: ⟨⟨e, ⟨e, t⟩⟩, ⟨e, t⟩⟩ everybody2 ] ] ]

(95)
[S: t
  [DP: ⟨⟨e, t⟩, t⟩ Everybody ]
  [VP: ⟨e, t⟩
    [V: ⟨e, ⟨e, t⟩⟩ offended ]
    [DP: ⟨⟨e, ⟨e, t⟩⟩, ⟨e, t⟩⟩ somebody2 ] ] ]

Note: This only gets one of the readings.

We need a new everybody for ternary relations:

(96) [S [DP Ann ] [VP [V′ [V introduced ] [DP everybody ] ] [PP [P to ] [DP Maria ] ] ] ]

What type are the determiners (note: et = ⟨e, t⟩)?

(97)
[S: t
  [DP: e John ]
  [VP: et
    [V: ⟨e, et⟩ offended ]
    [DP: ⟨⟨e, et⟩, et⟩
      [D: ⟨et, ⟨⟨e, et⟩, et⟩⟩ every ]
      [NP: et linguist ] ] ] ]

How do we get this every from our normal ⟨et, ⟨et, t⟩⟩ every? A lexical rule.

(98) For every lexical item δ1 with a meaning of type ⟨et, ⟨et, t⟩⟩, there is a (homophonous and syntactically identical) item δ2 with the following meaning

of type ⟨et, ⟨⟨e, et⟩, et⟩⟩:

[[δ2 ]] = f ∈ D⟨e,t⟩ ↦ [g ∈ D⟨e,et⟩ ↦ [x ∈ De ↦ [[δ1 ]](f)(z ∈ De ↦ g(z)(x)) ] ]
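The lexical rule in (98) can be sketched as a higher-order Python function that turns a subject-position determiner into an object-position one. The names shift and every2, the finite DOMAIN, and the facts in OFFENDED are all assumptions made for illustration.

```python
DOMAIN = ["John", "Mary", "a", "b"]      # hypothetical entities
LINGUIST = {"a", "b"}
# Invented facts: (offender, offended).
OFFENDED = {("John", "a"), ("John", "b"), ("Mary", "a")}

def every(f):
    """Ordinary determiner of type <et,<et,t>>."""
    return lambda g: all(g(x) for x in DOMAIN if f(x))

def shift(delta1):
    """Lexical rule (98): map a <et,<et,t>> meaning to type <et,<<e,et>,et>>."""
    return lambda f: lambda g: lambda x: delta1(f)(lambda z: g(z)(x))

linguist = lambda x: x in LINGUIST
offended = lambda y: lambda x: (x, y) in OFFENDED   # [y -> [x -> x offended y]]

every2 = shift(every)
vp = every2(linguist)(offended)    # [[offended every linguist]], type <e,t>
print(vp("John"), vp("Mary"))
```

The inner lambda z: g(z)(x) fixes the subject x and abstracts over the object position, which is the predicate the original determiner needs as its second argument.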

10.3 A Quantifier Raising approach

Several levels of representation:

• Deep Structure (DS): Where the derivation begins

• Surface Structure (SS): Where the order of the words is what we see

• Phonological Form (PF): Where the words are realized as sounds

• Logical Form (LF): The input to semantic interpretation

Transformations map from DS to SS, and from SS to PF and LF. (Since the transformations from SS to LF happen “after” the order of the words is determined, we

do not see the output of these transformations. These movement operations are in

this sense covert.)

A transformation called QR (Quantifier Raising) maps the SS structure in (99a) to

something like the LF structure in (99b)

(99) a. [S [DP John ] [VP [V offended ] [DP [D every ] [NP linguist ] ] ] ]

b. [S [DP_i [D every ] [NP linguist ] ] [S [DP John ] [VP [V offended ] [DP t_i ] ] ] ]

Actually, Heim and Kratzer propose the following, so that they can make it work

with Predicate Abstraction:

(100) [S [DP [D every ] [NP linguist ] ] [ 1 [S [DP John ] [VP [V offended ] [DP t1 ] ] ] ] ]

(101) Predicate Abstraction (PA) (revised)

Let α be a branching node with daughters β and γ, where β dominates only a numerical index i. Then for any variable assignment g, [[α]]g = [x ∈ De ↦ [[γ]]g^{x/i}].

Example. Let’s give every node of the tree a unique category label so we can

refer to the denotation of the tree rooted at that node using the category label.

(102) [S1 [DP1 [D every ] [NP linguist ] ] [? 1 [S2 [DP2 John ] [VP [V offended ] [DP3 t1 ] ] ] ] ]

The task is to analyze the truth conditions of S1 (or, to be more precise, the tree

rooted at the node labelled S1 ). The basic idea is straightforward – Predicate Abstraction at the mystery-category node (labelled ‘?’ here), Pronouns and Traces rule

at the trace, and Functional Application everywhere else – but it is a bit tricky to

go between assignment-dependent and assignment-independent denotations. The

trick is to start with the Bridge to Independence.

[[S1 ]]
= [[S1 ]]g , for all g    (BI)
= [[DP1 ]]g ([[?]]g )    (FA)
= [[DP1 ]]g ([x ↦ [[S2 ]]g^{x/1}])    (PA)
= [[DP1 ]]g ([x ↦ [[VP]]g^{x/1}([[DP2 ]]g^{x/1})])    (FA)
= [[DP1 ]]g ([x ↦ [[V]]g^{x/1}([[DP3 ]]g^{x/1})([[DP2 ]]g^{x/1})])    (FA)
= [[DP1 ]]g ([x ↦ [[offended]]g^{x/1}([[t1 ]]g^{x/1})([[John]]g^{x/1})])    (NN, TN)
= [[DP1 ]]g ([x ↦ [[offended]]g^{x/1}(x)([[John]]g^{x/1})])    (TR)
= [[DP1 ]]g ([x ↦ [[offended]](x)([[John]])])    (BI)
= [[DP1 ]]g ([x ↦ [y ↦ [z ↦ 1 iff z offended y]](x)(John)])    (TN)
= [[DP1 ]]g ([x ↦ 1 iff John offended x])    (β-R)
= [[D]]g ([[NP]]g )([x ↦ 1 iff John offended x])    (FA)
= [[every]]g ([[linguist]]g )([x ↦ 1 iff John offended x])    (NN)
= [[every]]([[linguist]])([x ↦ 1 iff John offended x])    (BI)
= [f ∈ D⟨e,t⟩ ↦ [g ∈ D⟨e,t⟩ ↦ 1 iff for all y, if f(y) = 1 then g(y) = 1]]([[linguist]])([x ↦ 1 iff John offended x])    (TN)
= [g ∈ D⟨e,t⟩ ↦ 1 iff for all y, if y is a linguist then g(y) = 1]([x ↦ 1 iff John offended x])    (TN, β-R)
= 1 iff for all y, if y is a linguist then John offended y    (β-R)

10.4 Arguments in favor of the movement approach

Argument #1: Scope ambiguities. In order to get both readings of Everybody loves somebody in situ, we would have to introduce even more complicated types. Scope ambiguities are trivially derived under the movement approach.

Argument #2: Inverse linking. There is one class of examples that cannot be

generated under an in situ approach:

(103) One apple in every basket is rotten.

This does not mean: ‘One apple that is in every basket is rotten’. That is the only

reading that an in situ analysis can give us.

QR analysis:

(104) [S [DP every basket ] [ 1 [S [DP [D one ] [NP [N apple ] [PP [P in ] t1 ] ] ] [VP is rotten ] ] ] ]

Argument #3: Antecedent-contained deletion

(105) I read every novel that you did.

Like regular VP ellipsis:

(106) I read War and Peace before you did.

except that the elided VP is contained in the antecedent VP!

To create an appropriate antecedent, you have to QR the object.


Argument #4: Quantifiers that bind pronouns

(107) a. Mary blamed herself.
b. Mary blamed Mary.

(108) a. Every woman blamed herself.
b. Every woman blamed every woman.

(109) No man noticed the snake next to him.

Treat pronouns as variables and use QR ⇒ no problem.

(110) Traces and Pronouns Rule (TP) (p. 116)

If α is a pronoun or trace and g is an assignment and i is in the domain of g,

[[αi ]]g = g(i)

(111) [S [DP [D every ] [NP woman ] ] [ 1 [S [DP t1 ] [VP [V blamed ] [DP herself1 ] ] ] ] ]

But how do we get the truth conditions on the in-situ approach?

(112) [[ [VP [V blamed ] [DP herself1 ] ] ]]g = x ↦ 1 iff x blamed g(1)

How do we combine this with every woman? We cannot get an assignment-independent denotation.

11 Free and Bound Variable Pronouns

11.1 Toward a unified theory of anaphora

A deictic use of a pronoun:

(113) [after a certain man has left the room:]

I am glad he is gone.


An anaphoric use of a pronoun:

(114) I don’t think anybody here is interested in Smith’s work. He should not be

invited.

“Anaphoric and deictic uses seem to be special cases of the same phenomenon: the

pronoun refers to an individual which, for whatever reason, is highly salient at the

moment when the pronoun is processed.” (Heim and Kratzer 1998, p. 240)

Hypothesis 1: All pronouns refer to whichever individual is most salient at the

moment when the pronoun is processed.

It can’t be that simple for all pronouns:

(115) the book such1 that Mary reviewed it1

(116) No1 woman blamed herself1 .

So not all pronouns are referential.3

Hypothesis 2:

All pronouns are bound variables.

Then in (114) we would have to QR Smith to a position where it binds He in the second sentence somehow.

Plus, the strict/sloppy ambiguity exemplified in (117) can be explained by saying

that on one reading, we have a bound pronoun, and on another reading, we have a

referential pronoun.

In the movie Ghostbusters, there is a scene in which the three Ghostbusters, Dr. Peter Venkman, Dr. Raymond Stantz, and Dr. Egon Spengler (played by Bill Murray, Dan Aykroyd, and Harold Ramis, respectively), are in an elevator. They have just started up their Ghostbusters business and received their very first call, from a fancy hotel in which a ghost has been making disturbances. They have their proton packs on their backs and they realize that the packs have never been tested.

(117) Dr. Ray Stantz: You know, it just occurred to me that we really haven’t had a successful test of this equipment.
Dr. Egon Spengler: I blame myself.
Dr. Peter Venkman: So do I.

Strict reading: Peter blames Egon.

Sloppy reading: Peter blames himself.

Footnote 3: Sometimes it is said that No woman and herself are “coreferential” in (116), but this is strictly speaking a misuse of the term “coreferential”, because, as Heim and Kratzer point out, “coreference implies reference.”

LF of antecedent for sloppy reading:

(118) [S [DP I ] [ 1 [S [DP t1 ] [VP [V blame ] [DP myself1 ] ] ] ] ]

LF of antecedent for strict reading:

(119) [S [DP I1 ] [VP [V blame ] [DP myself1 ] ] ]

Heim and Kratzer’s hypothesis: All pronouns are variables, and bound pronouns are interpreted as bound variables, and referential pronouns are interpreted

as free variables.

What does it mean for a variable to be bound or free?

• The formal definition (p. 118): Let αn be an occurrence of a variable α in

a tree β. Then αn is free in β if no subtree γ of β meets the following two

conditions: (i) γ contains αn , and (ii) there are assignments g such that α is

not in the domain of [[]]g , but γ is.

• More intuitively: A variable is free in a tree β if the value of [[β]]g depends

on what g assigns to the variable’s index.


• With the Predicate Abstraction rule, we make semantic values independent

of assignments, so we can use the following shortcut to determine whether a

variable is bound or free: A variable is bound if there is a node that meets the

structural description for Predicate Abstraction dominating it and its index;

otherwise it is free.

Examples:

(120) [S [DP [feminine] [DP She1 ] ] [VP [V is ] [A nice ] ] ]

(121) [S [DP Every boy ] [ 1 [S [DP t1 ] [VP [V loves ] [DP [D [masculine] [D his1 ] ] [NP father ] ] ] ] ] ]

(122) [S [DP John ] [VP [V hates ] [DP [D [masculine] [D his1 ] ] [NP father ] ] ] ]

(123) [S [DP John ] [ 1 [S [DP t1 ] [VP [V hates ] [DP [D [masculine] [D his1 ] ] [NP father ] ] ] ] ] ]

11.2 Assignments as part of the context

A consequence: “Treating referring pronouns as free variables implies a new way

of looking at the role of variable assignments. Until now we have assumed that an

LF whose truth-value varied from one assignment to the next could ipso facto not

represent a felicitous, complete utterance. We will no longer make this assumption.

Instead, let us think of assignments as representing the contribution of the utterance