Scalable Earthquake Simulation on Petascale Supercomputers

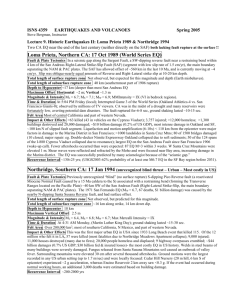
