
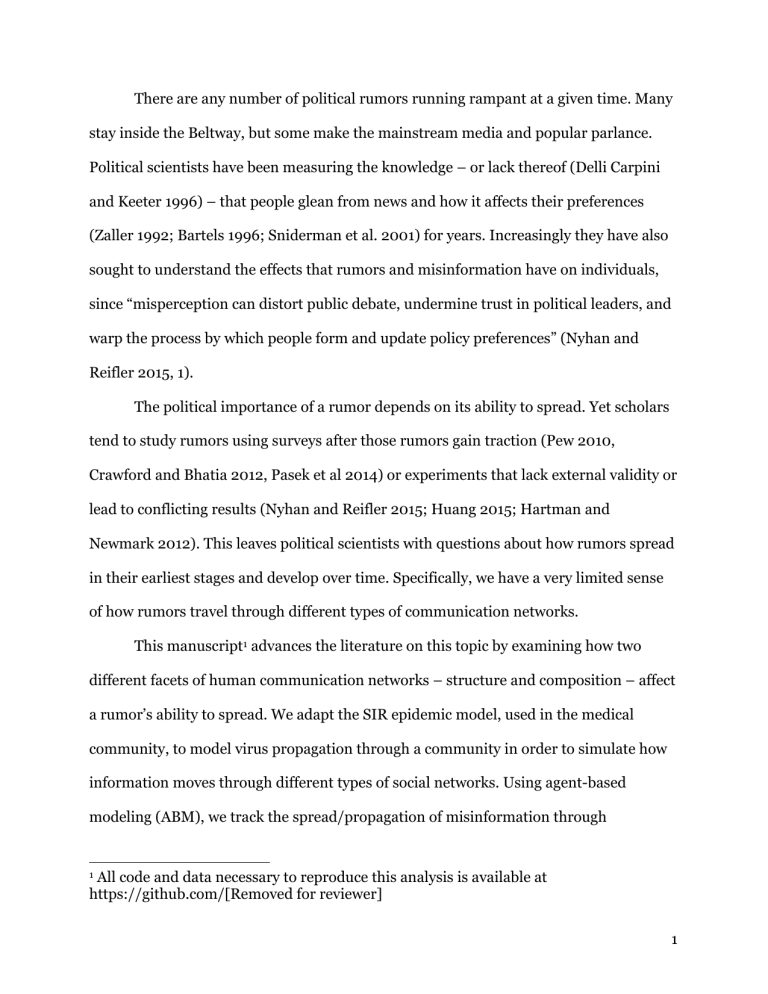

There are any number of political rumors running rampant at a given time. Many stay inside the Beltway, but some make the mainstream media and popular parlance.

Political scientists have for years measured the knowledge – or lack thereof (Delli Carpini and Keeter 1996) – that people glean from news and how it affects their preferences (Zaller 1992; Bartels 1996; Sniderman et al. 2001). Increasingly they have also sought to understand the effects that rumors and misinformation have on individuals, since “misperception can distort public debate, undermine trust in political leaders, and warp the process by which people form and update policy preferences” (Nyhan and Reifler 2015, 1).

The political importance of a rumor depends on its ability to spread. Yet scholars tend to study rumors using surveys after those rumors gain traction (Pew 2010; Crawford and Bhatia 2012; Pasek et al 2014) or experiments that lack external validity or lead to conflicting results (Nyhan and Reifler 2015; Huang 2015; Hartman and Newmark 2012). This leaves political scientists with questions about how rumors spread in their earliest stages and develop over time. Specifically, we have a very limited sense of how rumors travel through different types of communication networks.

This manuscript 1 advances the literature on this topic by examining how two different facets of human communication networks – structure and composition – affect a rumor’s ability to spread. We adapt the SIR epidemic model, used in the medical community to model virus propagation through a community, to simulate how information moves through different types of social networks. Using agent-based modeling (ABM), we track the spread of misinformation through populations of connected individuals. Our findings indicate that certain conditions appear most amenable to widespread rumor exposure and acceptance. In particular, networks composed of densely clustered individuals who trust one another yield faster rumor penetration, dovetailing with what is known about Balkanization and self-selection among contemporaries (Frey 1995). But in networks in which a few centrally important people connect otherwise disconnected communities, Twitter for example, the probability of rumor spread depends entirely on the beliefs of those in central hubs. Those importantly positioned individuals can make or break a rumor. Populations without substantial numbers of “rumor Rejectors” – or without Rejectors in opinion leader or crux (node) positions – appear most vulnerable to widespread rumor acceptance.

1 All code and data necessary to reproduce this analysis are available at https://github.com/[Removed for reviewer]

Examinations of the effects of social media often draw conclusions from the survey responses of users. This method, however, involves serious limitations. People may not be fully aware of, remember, or be honest about how they use social media, particularly concerning controversial issues. In using agent-based modeling (ABM) to examine how rumors spread through different types of social networks, we provide another way of exploring rumor dissemination through social media. While ABM has its own limitations, in combination with other more commonly used methodologies it tells us how information disseminates through networks in a way that survey research and experiments cannot.

Political scientists have long expressed fears that new media would lead to increasing polarization (Sunstein 2009). The results presented here suggest that networks in which the like-minded deal closely and only with each other provide optimal conditions for rumors to spread rapidly and widely, unless individuals who will reject the rumors disperse themselves evenly throughout these new media networks, which we expect is unlikely. In other networks, stopping the spread of erroneous information depends on factually informed individuals hearing that misinformation early enough to put the brakes on it. These side-by-side findings suggest that we may be entering a new political era partly characterized by the widespread existence and acceptance of rumors among subsets of the population.

The life and times of rumors

Rumors are “claims of fact – about people, groups, events, and institutions – that have not been shown to be true, but that move from one person to another and hence have credibility not because direct evidence is known to support them, but because other people seem to believe them” (Sunstein 2009, 6). Decades of political science scholarship have examined who possesses political knowledge, how people acquire that knowledge, and how they process and store it. Political scientists know that information dissemination is a complex process that occurs at two levels: the individual level and the societal level. One person believing a questionable claim or story is merely misinformed. What characterizes misinformation as rumor is the social transmission mechanism by which a claim gains additional believers (Bhavnani, Findley and Kuklinski 2009). Political rumors most easily become prevalent in a population when they come from a trusted source of information and when they affirm the political views of those listening (Berinsky 2012, 11).

A number of individual characteristics influence whether an individual exposed to a piece of information accepts it as true and might then use it later to evaluate a political object. Institutional trust reflects an individual’s general feelings about the probability that those in positions of institutional power will do what is right (Hetherington 2005, Stimson 1991). Some individuals predisposed to accepting rumors, particularly those likely to subscribe to conspiracy theories, exhibit tendencies to believe that individuals in positions of power construct “facts” to hide their actual activities (Barkun 2003). That individuals tend to trust members of the opposing political party less than their own may also underlie people’s tendencies to believe rumors about members of the opposing party more than rumors about members of their own party (Berinsky 2012, 18). Some evidence suggests that trust in government actually trumps party identification in its association with rumors (Lupton and Hare 2015). For instance, individuals of both parties exhibit a greater tendency to believe that vaccines cause autism when they have lower levels of institutional trust (ibid).

The fact that individuals exist within complex social networks further complicates the process of information acceptance. Social networks shape how citizens receive and interpret political information (Huckfeldt and Sprague 1987; McClurg 2006; Mutz 2006). Interactions between individuals in a community determine the speed by which a rumor is transmitted through the group, as well as the scope for its acceptance.

Weak ties may spread information (Granovetter 1973), but belief is a function of trust, and individuals often receive politically relevant information from acquaintances in their social network. If a person is unable or unwilling to form an opinion, that person may look to those that she trusts in a particular domain for information and interpretation. Thus, interpersonal trust, or the “expectancy of positive (or nonnegative) outcomes that one can receive based on the expected action of another party in an interaction characterized by uncertainty” (Bhattacharya, Devinney and Pillutla 1998, 462) 2, between individuals within a social network becomes an important factor in whether one accepts, uses and/or passes on political information. In addition, “the distribution of [trust] is skewed … which is logical in the social context. Users choose the people with whom they form social connections, and it is reasonable that people are more likely to connect with people they trust highly than people for whom they have little trust” (Golbeck 2005, 76-77). Put another way, if one person chooses to associate with another, then she likely trusts that person highly, not slightly. For example, “rumors e-mailed to friends/family are more likely to be believed and shared with others” (Garrett 2011, 255). The process of information or misinformation dissemination, then, seems at least partly based on perceptions of a friend or colleague’s expertise and trustworthiness in a particular area. 3

Research in multiple disciplines has further shown that personal trust and political trust differ greatly and cannot be considered along the same dimension (Ruscio 1999, 641). Nor does trust correlate with socioeconomic or demographic markers (Delhey and Newton 2003, 112). It is instead a distinct component of what ultimately makes up an attitude or opinion.

Word-of-mouth was the primary mechanism for transmission in the pre-digital age (Allport and Postman 1947, cited in Berinsky 2012), thereby also limiting the recipient pool to relatively close acquaintances. However, the digital age has fundamentally reshaped the channels through which rumors emerge, increasing both the speed at which misinformation is disseminated and its potential audience (Delli Carpini 2000). In the analog era, a person could incur significant personal and social costs related to gossip (Piazza and Bering 2008), which may have led to hesitation when repeating a rumor to someone less familiar (O’Kane and Hargie 2007, 24). A lack of face-to-face interactions (or at least an immediate physical presence) seems to increase a person’s willingness to disseminate information to more tenuous connections in her network. Put another way, the removal of the human filter seems to make people’s comments less grounded and more extreme. 4

2 The authors actually denote six separate elements of trust: (1) “Trust exists in an uncertain and risky environment” (461); (2) “Trust reflects an aspect of predictability -- that is, expectancy” (461); (3) “Any definition of trust must account for the strength … of trust” (462); (4) “Any definition of trust must account for … the importance of trust” (462); (5) “Trust exists in an environment of mutuality -- that is, it is situation and person specific” (462); and (6) “Trust is ‘good’” (462).

3 In looking at corruption rumors, Weitz-Shapiro and Winters note that “source credibility is defined by the relationship between a source and the information it disseminates … In expectation, then, a credible source is more likely to be accurate than a non-credible source. The link between credibility and accuracy means that understanding when citizens discern source credibility is crucial for understanding political accountability.” (2015, 1-2). In other words, people relate the accuracy of information heard to the trustworthiness of the speaker.

Since most of the research outlined above emerges from surveys and experiments, its findings only partly explain the dissemination of rumors and misinformation. Researchers must be able to incorporate inferences about the interaction of individual and social characteristics to create better models in which information (rumor) dissemination can be studied. Such models open new avenues of inquiry, such as studying how information travels between people, not just what happens to the information when one person hears it.

New models require innovative tools, and agent-based modeling (ABM) gives scholars a tool with which the interaction of individual- and social-level characteristics can be modeled. In this paper, we explore the conditions under which rumors will most likely spread, focusing on how interpersonal trust and institutional trust interact within an individual as well as within a group of people.

4 Ruiz et al find that online commentaries on news beget a “dialogue of the deaf” rife with malicious comments in some situations (2011, 480).


Modeling the Spread of Rumors

Rumors constitute a specific type of misinformation whose growth, spread, and evolution might be tracked. As discussed above, rumor emergence, or the state in which the majority of a group comes to believe in an unsubstantiated claim, is a social phenomenon by nature (Bhavnani Findley and Kuklinski 2009, 878). While individual-level characteristics define whether a person comes to believe in a rumor and will pass it forward, group-level properties constrain the opportunities and the audience to which the believer has access.

Rumors, like factual information, rely on two mechanisms to ensure emergence and survival: transmission and updating (Banerjee 1993; Kosfeld 2005). “Transmission requires that two agents interact with each other, with one conveying a belief to the other,” while updating occurs only when the receiver of the transmitted belief replaces her existing (presumably different) belief with the new information (Bhavnani Findley and Kuklinski 2009, 878). Thus, the spread of any rumor is dependent on both the speaker and the receptor. While transmission can happen without updating, the same is usually not true of the reverse. 5

Berinsky argues that updating, or the acceptance of rumor, is a function of both the “generalized propensity to adopt a conspiratorial orientation and of the content of the rumor vis-a-vis the political beliefs of a given individual” (2015, 8). We represent updating in our model as an additive function of a person’s trust of political institutions and her trust of the person who passed on the rumor. A person’s mistrust of political institutions, the inverse of her trust, is highly correlated with her propensity to adopt a conspiratorial orientation 6 as well as with negative political beliefs (Barkun 2003). The higher the mistrust, the more likely that she will be receptive to the contents of the rumor, which we assume in the paper to be negative. The second component, a person’s trust of the speaker who tells her the rumor, is our way of adapting a Downsian (1957) heuristic to this model. Assuming people to be uninterested in politics and/or cognitively lazy (Kahneman 2011) and/or choosing to associate with others like themselves, if a speaker (a rumor transmitter) is trusted, then the receptor should be more likely, for any number of reasons, to believe the rumor.

5 Not unless the person updating in isolation is the instigator of the rumor.

By contrast, transmission is largely automatic, with believers eager by nature to disseminate rumors to their social connections. Berinsky’s (2012) analysis finds that a majority of people (65%) will believe at least one rumor, with a smaller percentage (25%) ambivalent and small contingents who will always accept (5%) or reject (5%) a rumor. We follow this typology to predict that an individual’s tendency to disseminate the rumor to someone else is a function of her receptivity to the information contained within. Rejectors and those who have not yet heard the rumor will not transmit a rumor, while everyone else who has heard it (including Ambivalent hearers who will never believe or reject a rumor) 7 will always pass this information forward.

We use agent-based modeling (ABM) to build our model, as the method “embod[ies] elaborate and interlaced feedback relationships, leading to nonlinear, path-dependent dynamics, where small changes in individual heuristics, characteristics, or interaction patterns generate large changes in collective outcomes, a hallmark in complex adaptive systems” (Bhavnani, Findley, and Kuklinski 2009, 881). Further, it is “especially valuable when the variables and assumptions built into it represent conditions that empirical observation has already identified as crucial” (ibid). Our goal is to study the individual and group preconditions that are most conducive to rumor emergence 8 and, conversely, to note those factors that are most effective at stymieing any rumor’s propagation.

6 Berinsky examines dogmatism and political disengagement as operationalizations of his proposed process, since psychologists and political scientists have found that those personality traits incline people “toward conspiratorial anti-government thinking” (2012, 8).

7 We hold that ambivalence in belief does not lessen the (usually) salacious content of a rumor, making it an appealing topic of conversation, especially in the digital age. Content analysis has also shown that negative news is more likely to be retweeted and go viral (Hansen et al 2011). Therefore, disinterested or ambivalent listeners are nonetheless presumed to pass on information, even if they will never believe it.

Most of the research on political misinformation has employed surveys or experiments to examine dispersion and subsequent effects. This provides insight into the phenomenon at the individual and aggregate levels, but treats each level independently of the other.

Agent-based modeling supplements this research by studying how the interaction between these two levels affects the spread of misinformation. Such models allow scholars to explore information as a social phenomenon, providing a way to methodologically capture a reality of human behavior – the fact that individuals operate within communities (Bourdieu 1979; Krippendorf 2005).

The Model

Our framework is a modified SIR (susceptible-infected-recovered) epidemic model with a carrier state, used to simulate disease propagation in a community (Anderson and May 1979; Kermack and McKendrick 1927). Its goal is to predict the number of patients infected with a contagious disease in a closed population over time.

8 This term is used in complexity literature to mean the spread or dissemination of rumors, not their initial start point.


In the basic model, agents are assigned to one of four groups: (1) susceptible (never infected with the disease, able to become sick, and not currently infectious); (2) infected (currently sick and infectious); (3) removed (recovered from the disease or immunized against it; neither type can be infected again); or (4) carrier (recovered but still infectious to others) (Kemper 1978). After initial assignment, the population is assumed to be closed, with no agents created or destroyed during the simulation. During model setup, each agent is randomly assigned membership in one of the four groups, with the probability of assignment determined by previously collected data. Each disease is associated with aggregate-level characteristics such as the infectiousness probability $\beta$, the average sickness duration $t_s$, and the probability $\beta_c$ of becoming a carrier.

In a typical run, sick individuals expose their $x$ neighbors to the disease. Immune and removed individuals cannot become infected, while $\beta \cdot x$ susceptible neighbors become infected for time $t_s$. At time $t_s + 1$, $x$ infected agents recover to become either $(1 - \beta_c) \cdot x$ removed agents or $\beta_c \cdot x$ carrier agents.
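The bookkeeping of a typical run can be sketched in a few lines of Python. This is only an illustration of the expected-value arithmetic described above, not the authors' implementation; the function names and the numeric values are assumptions chosen for the example.

```python
def expected_new_infections(x_neighbors: int, beta: float) -> float:
    """Expected number of susceptible neighbors infected: beta * x."""
    return beta * x_neighbors


def expected_recovery_split(x_infected: int, beta_c: float) -> tuple[float, float]:
    """Split x recovering agents into expected (removed, carrier) counts."""
    removed = (1 - beta_c) * x_infected
    carriers = beta_c * x_infected
    return removed, carriers


# Hypothetical values, chosen only for illustration.
new_cases = expected_new_infections(x_neighbors=20, beta=0.25)
removed, carriers = expected_recovery_split(x_infected=10, beta_c=0.2)
print(new_cases, removed, carriers)
```

Because the population is closed, the removed and carrier counts always sum to the number of agents who recovered.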

Speaking abstractly, viruses (as well as other diseases) are nothing more than long strands of information seeking to reproduce to ensure survival. A rumor would then seem to be a virus without a capsid, or protective protein coating. To stretch the metaphor further, a rumor seeks to survive by infecting as many people as possible with belief in its truth, but some people have more immunity (skepticism) than others to it. In this view, the SIR model seems strangely apt to model rumor emergence as well.

In our adaptation, group membership defines an agent’s propensity to believe the rumor as well as its willingness to pass on the rumor to someone else. Our framework adapts the virus propagation method as follows: (1) susceptible persons who have not yet heard the rumor; (2) infected persons who begin the run believing the rumor or believe it by the end of the run; (3) removed persons who possess sufficient factual information or have other reasons to never believe a rumor; and (4) ambivalent carriers who do not believe the rumor but nonetheless pass it on. Because not everyone is equally likely to accept a rumor, we define the probability that an agent will come to believe a political rumor as a function of both its trust in the source agent and its trust in political institutions, with lower trust in the institutions equating to more openness to believing a rumor. In other words, if an agent distrusts the government, that agent has a higher probability of believing any (negative) rumor. Because minds are very difficult to change once made up, even when presented with corrective information (Gaines et al 2007; Nyhan and Reifler 2015), we specify no recovery time $t_s$. Once an agent believes a rumor, said agent remains permanently persuaded. 9

[Table 1 about here]

During initial setup for a model run, we specify global parameters as seen in Table 1. Set A controls the propensity of individuals to believe in a particular rumor. Continued analytical work on the World Values Survey has shown that people exhibit very different levels of trust in each other as well as in their government across borders (Inglehart and Welzel 2005). Thus, to mirror these findings, we set an average group trust parameter as well as an average trust of political institutions parameter, generating individual levels of both from a normal distribution centered on the specified levels. We set the third parameter, the threshold for believing the rumor, to specify the stringency of the criteria that a person applies when deciding whether to accept a rumor.

9 We acknowledge that the brain may have barriers to accepting information, much like any person may have T-cells that impede falling sick after virus transmission. We contend that such individual variation is built into our model, in this case for example with variation in who recognizes the erroneous facts in any given rumor.
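As a rough illustration of how individual trust levels might be generated from the Set A averages, the sketch below draws each agent's value from a normal distribution centered on the group mean. The standard deviation, the clamping to a 0 to 1 scale, and the specific means are assumptions made for this example; the text specifies only that the draws are normal and centered on the user-set averages.

```python
import random


def draw_trust_levels(mean, sd, n_agents, rng):
    """Draw one trust value per agent from Normal(mean, sd).

    Clamping to [0, 1] is an assumption of this sketch, not something
    the paper states.
    """
    levels = []
    for _ in range(n_agents):
        value = rng.gauss(mean, sd)
        levels.append(min(1.0, max(0.0, value)))
    return levels


rng = random.Random(42)  # fixed seed so the example is repeatable
group_trust = draw_trust_levels(mean=0.8, sd=0.1, n_agents=500, rng=rng)
institutional_trust = draw_trust_levels(mean=0.3, sd=0.1, n_agents=500, rng=rng)
print(sum(group_trust) / len(group_trust))
print(sum(institutional_trust) / len(institutional_trust))
```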

Set B defines parameters for the “outbreak” and transmission of the rumor. The “outbreak” starts when $N_b$ agents hear the rumor and believe it. 10 Following belief, agents who believe will make only $y$ attempts at telling other agents before giving up.

Set C controls the size of the community affected and the size of the initial rumor “outbreak”. We derive the range of agents possible from Dunbar’s (1993) work on sustainable social group size, from 100 (lower bound of very cohesive groups) to 1500 (upper limit on acquaintances to whom one person can put faces to names) 11.

Network type sets the rules by which agents create links to each other, which consequently controls how quickly and easily information can be disseminated throughout a network. We examine rumor emergence in two idealized network types: Small World and Scale-Free 12.

Small World networks have been immortalized in popular culture as the “Six Degrees of Kevin Bacon,” which claims that any actor can be connected to Kevin Bacon through, at most, six acquaintance links. Small World networks have high clustering coefficients (meaning that friends of any agent tend also to be friends with each other) and short average path-lengths (meaning that an agent requires very few hops to reach another agent). Social networks are often described as being Small World networks, with friends of a person often being friends with each other and strangers being linked together by a small number of mutual acquaintances.

10 In this version of the model, the initial spreaders hear the rumor from outside the community, possibly via media or some other source. We then examine its dissemination through the spreaders’ acquaintances.

11 See Konnikova (2014) for an explanation of Dunbar’s number in modern society.

12 Several other ideal network types exist, including Star, Wheel and Random. Random models are particularly computationally intensive.

Scale-Free networks, also known as Preferential Attachment networks, follow a power law function with regard to the distribution of links. Specifically, the configuration attempts to reproduce the observation that the number of links per agent is not normally distributed in a group. During formation, agents are more likely to form connections with agents who already have connections; thus most agents have a small number of links while a few have many. In this type of network, friends of friends are usually not friends, and path lengths vary greatly depending on one’s connectedness in the network. Academic collaborations among American mathematicians, for example, have been shown to be a scale-free network (Clauset, Shalizi and Newman 2009). 13, 14
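The structural contrast between the two network types can be illustrated with a short, self-contained Python sketch. The generators below are common textbook constructions (a rewired ring lattice in the style of Watts and Strogatz, and preferential attachment in the style of Barabási and Albert); they are assumptions made for illustration, since the text does not state which algorithms the model uses. All function names and parameter values are hypothetical.

```python
import random
from itertools import combinations


def small_world(n, k, p, rng):
    """Ring lattice where each node links to its k nearest neighbors,
    then each lattice edge is rewired with probability p."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(1, k // 2 + 1):
            adj[i].add((i + j) % n)
            adj[(i + j) % n].add(i)
    for i in range(n):
        for j in range(1, k // 2 + 1):
            old = (i + j) % n
            if rng.random() < p and old in adj[i]:
                candidates = [v for v in range(n) if v != i and v not in adj[i]]
                if candidates:
                    new = rng.choice(candidates)
                    adj[i].discard(old)
                    adj[old].discard(i)
                    adj[i].add(new)
                    adj[new].add(i)
    return adj


def scale_free(n, m, rng):
    """Each new node links to m existing nodes, chosen with probability
    proportional to their current number of links."""
    adj = {i: set() for i in range(n)}
    for a, b in combinations(range(m + 1), 2):  # small fully connected core
        adj[a].add(b)
        adj[b].add(a)
    # Each node appears in `stubs` once per link end it owns.
    stubs = [v for v in range(m + 1) for _ in range(m)]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        for t in targets:
            adj[new].add(t)
            adj[t].add(new)
            stubs.extend([new, t])
    return adj


def average_clustering(adj):
    """Mean share of a node's neighbor pairs that are themselves linked."""
    total = 0.0
    for nbrs in adj.values():
        k = len(nbrs)
        if k < 2:
            continue
        links = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
        total += 2 * links / (k * (k - 1))
    return total / len(adj)


rng = random.Random(0)
sw = small_world(n=200, k=6, p=0.05, rng=rng)
sf = scale_free(n=200, m=3, rng=rng)
print("clustering:", average_clustering(sw), average_clustering(sf))
print("max links:", max(map(len, sw.values())), max(map(len, sf.values())))
```

Under these assumed settings, the rewired lattice should show much higher clustering, while the preferential-attachment graph should contain a few hubs with far more links than any node in the lattice.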

Running the Model

We adopt Berinsky’s (2012) typology of rumor susceptibility as well as his experimental findings about the frequency of occurrence when assigning agents to groups. Susceptible agents (eponymous in the original model) are not yet exposed to a rumor but are capable of believing a rumor. Believers (infected) have already incorporated the rumor into their beliefs and will always pass it on. A subset of Susceptible agents, the Gullible, are not yet exposed to the rumor but will always believe it upon exposure. Once the agents above have adopted a belief (infection), they will never change their minds. Skeptics (removed) will never believe a rumor and will never pass it on. Lastly, individuals who cannot make up their minds, called Ambivalents (carrier), will always pass on a rumor, even if they cannot decide whether to believe it themselves. This innovation to the model allows us to more accurately simulate how rumors reportedly move in real life, especially in the social media environment (Hansen et al 2011). Agents are initially distributed to approximate Berinsky’s experimental findings: 65% Susceptible, 5% Gullible, 5% Skeptical, and 25% Ambivalent. Each agent keeps track of whether it has been exposed to the rumor and whether it believes it.

13 An example of a scale-free network in politics might be legislation writing. Many people might be involved in the writing of a bill, but the legislation is ultimately dependent on the actions and information of a few in positions of power (Koniaris, Anagnostopoulos and Vassiliou 2015).

14 There is one more type of common network formation in ABM literature that we considered including: the star network, consisting of a central speaker with many connections who are not connected to each other. We suspect this is much how rumor transmission through media might work. However, selective exposure literature would suggest that readers/listeners/viewers of any outlet would likely trust that outlet and be prone to believe the (center of the star) speaker, leaving little variation in the model for us to consider.

At initial setup, all agents are assigned a group membership and are then randomly scattered across a digital landscape. $N_b$ non-Skeptic agents are randomly designated as having heard and, in the case of Susceptible and Gullible agents, believed the rumor. All agents then randomly make undirected connections to each other according to the rules of the network type, as previously set by the user.
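The setup step can be sketched as follows. This is a minimal illustration under stated assumptions: group sizes are fixed to the 65/5/5/25 split by rounding (the text says only that the distribution approximates these shares), and the function names `assign_types` and `seed_rumor` are hypothetical.

```python
import random

# Shares taken from the distribution described in the text.
SHARES = {"Susceptible": 0.65, "Gullible": 0.05, "Skeptic": 0.05, "Ambivalent": 0.25}


def assign_types(n_agents, rng):
    """Return a shuffled list of agent types matching the target shares."""
    counts = {name: round(n_agents * share) for name, share in SHARES.items()}
    # Absorb any rounding drift in the largest group (an assumption).
    counts["Susceptible"] += n_agents - sum(counts.values())
    types = [name for name, count in counts.items() for _ in range(count)]
    rng.shuffle(types)
    return types


def seed_rumor(types, n_b, rng):
    """Pick n_b non-Skeptic agents who start out having heard the rumor.

    Susceptible and Gullible seeds also start as believers; Ambivalent
    seeds have heard the rumor but do not believe it.
    """
    eligible = [i for i, t in enumerate(types) if t != "Skeptic"]
    heard = set(rng.sample(eligible, n_b))
    believers = {i for i in heard if types[i] in ("Susceptible", "Gullible")}
    return heard, believers


rng = random.Random(7)
types = assign_types(n_agents=100, rng=rng)
heard, believers = seed_rumor(types, n_b=5, rng=rng)
print({name: types.count(name) for name in SHARES})
print(len(heard), len(believers))
```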

Each agent then determines its distrust in government and calculates its trust of its connected neighbors. Each agent calculates $TP_i$, its trust in political institutions, drawn from a normal distribution with $\mu = TP$, where $TP$ is preset by the user. 15 The trust of a neighbor is a function of the social distance between two agents, represented by distance $d_{ij}$, and of the average interpersonal trust value of the group, $TG$. Closer agents are usually family or close friends, and those further away are increasingly tenuous (but still familiar) acquaintances. We restrict our study to those networks with high average interpersonal trust, as we aim to study circumstances that are optimal for maximal rumor penetration (Golbeck 2005). 16 In real-world networks in which people are connected tenuously to others with less trust, rumors should propagate less than in this simulation.

15 The model presented tests institutional trust at low, medium, and high levels.

During one iteration or “tick” of the model, Susceptible, Gullible and Ambivalent agents who have already heard the rumor randomly select one of their undecided neighbors to tell. Each agent updates whether it has been exposed to the rumor. If the recipient is Gullible, then it believes the rumor without any deliberation. If the recipient is Susceptible, then it determines acceptance of the rumor based on its trust of the teller and trust of political institutions, rendered mathematically as $A_i = TG_i + TP_i$. If $A_i \geq A$, where $A$ is the previously set threshold of acceptance, then the agent believes the rumor.
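The acceptance rule can be written compactly. The sketch below assumes that $TG_i$ and $TP_i$ are already expressed on a common numeric scale; the text does not give that scale or the exact mapping from distance $d_{ij}$ to $TG_i$, so the argument values are hypothetical and the function name `believes_after_hearing` is illustrative only.

```python
def believes_after_hearing(agent_type, tg_i, tp_i, threshold):
    """Apply the acceptance rule A_i = TG_i + TP_i >= A.

    agent_type: "Gullible", "Susceptible", "Skeptic" or "Ambivalent"
    tg_i:       the listener's trust of the teller
    tp_i:       the listener's institutional-trust term
    threshold:  the preset acceptance threshold A
    """
    if agent_type == "Gullible":
        return True  # believes without deliberation
    if agent_type == "Susceptible":
        return tg_i + tp_i >= threshold
    return False  # Skeptics and Ambivalents never come to believe


# Hypothetical values for illustration.
print(believes_after_hearing("Susceptible", tg_i=0.6, tp_i=0.5, threshold=1.0))
print(believes_after_hearing("Susceptible", tg_i=0.3, tp_i=0.4, threshold=1.0))
print(believes_after_hearing("Ambivalent", tg_i=1.0, tp_i=1.0, threshold=1.0))
```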

A model run ends when all agents have heard the rumor, but this may not occur due to the randomized configurations of agents and connections. Thus, in repeated simulation, we specify that the model will end regardless of its state after 500 ticks. We generate 30 models for each parameter configuration, for a total of 14,580 runs (486 configurations).
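A single run with its stopping rules can be sketched as below. This is a simplified illustration rather than the authors' code: it reads “undecided neighbors” as neighbors who have not yet heard the rumor, omits the limit of $y$ telling attempts, and takes the acceptance rule as a caller-supplied function. The names `run_model` and `accepts` are hypothetical.

```python
import random

MAX_TICKS = 500  # tick cap stated in the text


def run_model(adj, types, initial_heard, accepts, rng, max_ticks=MAX_TICKS):
    """Run until every agent has heard the rumor or max_ticks is reached.

    adj:     dict mapping each agent to the set of its neighbors
    types:   dict mapping each agent to its type name
    accepts: function (teller, listener) -> bool, used for Susceptible listeners
    Returns (ticks, heard, believers).
    """
    heard = set(initial_heard)
    believers = {v for v in heard if types[v] in ("Susceptible", "Gullible")}
    ticks = 0
    while ticks < max_ticks and len(heard) < len(adj):
        ticks += 1
        # Everyone who has heard the rumor, except Skeptics, passes it on.
        tellers = sorted(v for v in heard if types[v] != "Skeptic")
        for teller in tellers:
            unexposed = sorted(u for u in adj[teller] if u not in heard)
            if not unexposed:
                continue
            listener = rng.choice(unexposed)
            heard.add(listener)
            if types[listener] == "Gullible" or (
                types[listener] == "Susceptible" and accepts(teller, listener)
            ):
                believers.add(listener)
    return ticks, heard, believers


# A four-agent chain 0-1-2-3 with a Skeptic in position 1 (hypothetical).
chain = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
kinds = {0: "Gullible", 1: "Skeptic", 2: "Gullible", 3: "Gullible"}
result = run_model(chain, kinds, {0}, lambda t, l: True, random.Random(0), max_ticks=10)
print(result)
```

In the chain example the Skeptic hears the rumor but never repeats it, so agents 2 and 3 are never reached and the run stops only at the tick cap. This mirrors, in miniature, how a critically placed Rejector can block a rumor.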

Findings

The extent to which rumors penetrate social groups varies based on the type and composition of networks (Tables 2 and 3). 17 Rumors spread quickly through Small World networks but struggle to penetrate Scale-Free configurations. As important, the number and location of Rejectors greatly affect rumor penetration. The speed at which rumors penetrate varies based on network type and composition as well. These findings suggest a great deal of complexity underlying rumor emergence.

16 To draw an analogy to survey research, this limits the range to what is considered reasonable or probable. We narrow our model to when trust would be a valid assumption.

17 Percentages are calculated exempting Rejectors and the Ambivalent, as our model specifies these agents will never believe rumors.

[Tables 2 and 3 about here]

Rumors spread particularly well in communities in which acceptance thresholds are low. Rumors spread most widely in communities that exhibit two types of network characteristics: first, those communities that are very tightly knit and trusting 18 ; and, second, those communities with few Rejectors or where Rejectors are spread unevenly throughout the population.

In communities characterized as tightly knit and trusting, individuals demonstrate a willingness to believe information as a result of their bonds with other individuals within their networks. In such a Small World configuration, dense clustering of agents facilitates rumor penetration, generally within approximately twenty ticks of the model (Figure 1a). This holds true even in very large acquaintance networks, with an upper limit at approximately 1500 people. By contrast, Scale-Free models generally time out before rumor saturation is reached. 19 Even given equal settings for trust and the likelihood of rumor acceptance, Tables 2 and 3 show that the variation between the two model types is notable. Assuming equal starting parameters, we find an approximately 23 percent difference in rumor acceptance across the nine scenarios.

[Figures 1a and 1b about here]

The unequal distribution of connections among agents in the Scale-Free network means that it takes much longer for information to move through it, as information penetration depends heavily on the position of the rumor spreader in the network. If she is “important” and has many connections, then she can get out the information faster. Information only spreads widely in a Scale-Free network if it starts from one of these important central points. Conversely, depending on their position in the network, some agents will never hear the rumor no matter how long they wait, as that same information will not spread if Rejectors are critically placed.

18 Varying the aggregate acceptance threshold could be a function of individual trust, institutional trust or a mix of both at the individual level.

19 Note the difference in Y-axis scale, as it indicates how much more slowly information moves in a Scale-Free environment.

In communities with few Rejectors or those with Rejectors unevenly distributed through the population, rumor penetration increases as well. The position of Rejectors within the network is critical in determining whether a rumor successfully penetrates it.

This may be the most notable finding. In a Scale-Free network, a clear negative relationship exists between the percentage of agents believing a rumor and Rejector connectedness (Figure 2a). 20 The more central or connected Rejectors are, the less a rumor spreads. This is most visible graphically in the small Scale-Free network at the lowest acceptance thresholds. 21 In the Small World network, though (Figure 2b), all simulations tended to cluster around a similar number of connections, with model size barely affecting rumor spread. All that really matters in a Small World network is the established acceptance threshold, not Rejector connectedness. 22
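Rejector connectedness can be made concrete with a small sketch. The Python code below is a simplified illustration, not the simulation code behind these results. It assumes that connectedness means an agent's number of links, stands in for the Small World network with an unrewired ring lattice, and grows the Scale-Free network by a basic preferential-attachment rule. All function names and parameter values (200 agents, 10 Rejectors) are hypothetical.

```python
import random


def ring_lattice(n, k):
    """Each agent is linked to its k nearest neighbors on either side."""
    return {i: {(i + d) % n for d in range(-k, k + 1) if d != 0}
            for i in range(n)}


def preferential_attachment(n, m, rng):
    """Grow a network in which newcomers favor well-connected agents."""
    graph = {i: set() for i in range(m + 1)}
    for i in range(m + 1):              # start from a small, fully linked core
        for j in range(i + 1, m + 1):
            graph[i].add(j)
            graph[j].add(i)
    # Each agent appears in `stubs` once per link, so a uniform draw from it
    # selects agents with probability proportional to their connectedness.
    stubs = [i for i in graph for _ in graph[i]]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        graph[new] = set(targets)
        for t in targets:
            graph[t].add(new)
            stubs.extend([t, new])
    return graph


def mean_connectedness(graph, agents):
    """Average number of links held by the given agents (e.g. Rejectors)."""
    agents = list(agents)
    return sum(len(graph[a]) for a in agents) / len(agents)


rng = random.Random(0)                  # fixed seed for repeatability
scale_free = preferential_attachment(200, 2, rng)
lattice = ring_lattice(200, 2)

ranked = sorted(scale_free, key=lambda a: len(scale_free[a]))
print("Lattice, any 10 Rejectors:    ", mean_connectedness(lattice, range(10)))
print("Scale-Free, 10 hub Rejectors: ", mean_connectedness(scale_free, ranked[-10:]))
print("Scale-Free, 10 peripheral:    ", mean_connectedness(scale_free, ranked[:10]))
```

In the lattice every placement of Rejectors yields the same average connectedness, whereas in the preferential-attachment network the average depends on whether Rejectors sit at hubs or at the periphery. That is the kind of variation against which rumor belief is plotted in Figure 2a.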

20 Personal trust levels were held constant – and placed high – to show the best-case scenario graphically. Lower levels of personal trust would elicit even less rumor spread.

21 The pink dots in the left-hand column of Figure 2.

22 In terms of the difference in average Rejector connectedness between the models, as networks acquire more agents (and hence more Rejectors), the variation in connectedness flattens until it converges on some value. Small World agents tend to be well and equally connected, and clustering is a symptom of that. Conversely, that also explains why Scale-Free Rejectors tend to have a smaller number of links, and Rejectors are, on average, very sparsely connected. Thus, even small perturbations in average connectedness exert relatively larger effects on how well information flows. This narrows the range of variation over time and also makes the average converge to a smaller number of links than is observed in a Small World network.


[Figures 2a and 2b about here]

The position of Rejectors is so critical, in fact, that rumor penetration is a rare state for a Scale-Free network. Most models, even smaller ones, time out before rumors penetrate. In Scale-Free networks, rumors are more likely to gain traction when Rejector connectedness is very low (in other words, when there are few well-connected Rejectors that can intercept the rumor) or when the network is small. Interestingly, the connectedness of Susceptible agents does not matter in either type of network (Figures 3a, 3b and 3c). It is only the positioning of the Rejectors that affects the rumor’s strength. 23

[Figures 3a, 3b and 3c about here]

To give an example that might make the findings of these simulations more tangible – again using the virus mechanism – Scale-Free networks are analogous to the hub-and-spoke airport system, with central nodes such as O’Hare or Heathrow. A virus released at a major airport will easily spread not only to other hubs, but also to smaller airports that rely on those hubs. But a virus released at a small airport, for example in Rochester or Cape Cod, must spread first to the hub to have any hope of reaching further. If that virus is contained, in other words stopped at the hub, it will not spread. A Rejector in a hub position, then, can stop a rumor from spreading, whilst a rumor spreader in a hub position will quickly get a rumor out to a given population.
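The airport analogy can be sketched in a few lines of Python. The code below is a toy illustration rather than the model used for the simulations: it assumes that every agent who hears the rumor believes and relays it unless she is a Rejector, and it builds a hypothetical hub-and-spoke network of three interlinked hubs with five spokes each. The function and node names are invented for the example.

```python
from collections import deque


def hub_and_spoke(n_hubs, spokes_per_hub):
    """Fully interlinked hubs, each serving its own set of spokes."""
    hubs = [f"hub{i}" for i in range(n_hubs)]
    graph = {h: {other for other in hubs if other != h} for h in hubs}
    for h in hubs:
        for j in range(spokes_per_hub):
            spoke = f"{h}-spoke{j}"
            graph[spoke] = {h}          # a spoke connects only to its hub
            graph[h].add(spoke)
    return graph


def reach(graph, seed, rejectors):
    """Set of agents who end up believing; Rejectors never relay the rumor."""
    believers = {seed}
    queue = deque([seed])
    while queue:
        agent = queue.popleft()
        for neighbor in graph[agent]:
            if neighbor not in believers and neighbor not in rejectors:
                believers.add(neighbor)
                queue.append(neighbor)
    return believers


network = hub_and_spoke(3, 5)           # 18 agents in total
start = "hub0-spoke0"                   # rumor begins at a small "airport"

print(len(reach(network, start, {"hub0"})))         # 1: blocked at the hub
print(len(reach(network, start, {"hub1-spoke0"})))  # 17: peripheral Rejector
print(len(reach(network, "hub0", set())))           # 18: starts at a hub
```

A single Rejector at the seed's hub confines the rumor to its originator, while the same Rejector placed at a spoke removes only herself from the audience.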

23 A cursory look at Figures 3a and 3b may lead a reader to assume a strong correlation between connectedness and speed of rumor penetration in a Small World network. This is, in fact, untrue. When the model parameters are broken out, these characteristics show no correlation. The average level of connectedness increases simply because the number of nodes increases.

The speed of rumor penetration also varies. For both model types, as is intuitive, increasing the number of initial rumor spreaders increases the speed at which the rumor penetrates the network (Figures 4a, 4b and 4c). But in the case of a Scale-Free network, only with a small network can we actually tease out the number of iterations a rumor might take to spread. With a medium- or large-sized network, the simulation runs out of ticks before a rumor has fully spread (Figure 4b). 24 In other words, even when thinking about time, not just saturation, a rumor only truly penetrates in a Small World situation. In a Scale-Free environment of medium or large size, a rumor may never penetrate.
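The interaction between the number of initial spreaders and the tick limit can be illustrated with a deliberately simple sketch. The Python code below assumes that every agent who hears the rumor believes it and passes it to all of her neighbors on the next tick, and it uses a plain ring of agents rather than either network type studied here. Only the 500-tick stopping point is taken from the text; the function names are hypothetical.

```python
MAX_TICKS = 500  # stopping point of a simulation run, as described in the text


def ring(n):
    """Illustrative network: agent i is linked to agents i-1 and i+1."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def ticks_to_saturation(graph, seeds, max_ticks=MAX_TICKS):
    """Ticks until every agent believes, or None if the run times out."""
    believers = set(seeds)
    tick = 0
    while len(believers) < len(graph):
        if tick == max_ticks:
            return None                 # ran out of ticks before saturation
        # Every current believer tells all of her neighbors this tick.
        believers |= {nb for agent in believers for nb in graph[agent]}
        tick += 1
    return tick


print(ticks_to_saturation(ring(100), [0]))       # 50
print(ticks_to_saturation(ring(100), [0, 50]))   # 25
print(ticks_to_saturation(ring(1200), [0]))      # None (time-out)
```

Doubling the number of well-spaced initial spreaders halves the time to saturation in this toy setting, and a sufficiently large or slow network hits the tick limit before the rumor reaches everyone, which is what the clustering at 500 ticks in a plot of run lengths would reflect.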

[Figures 4a, 4b and 4c about here]

Conclusion

The research presented above suggests that ABM offers scholars insight into how rumors spread. Political scientists studying rumor dissemination need to understand how rumors spread outside the controlled conditions of the laboratory setting and how they exist at the community, not atomized, level. Context matters for rumors or information of any kind to gain traction and become the modal belief in a population. By incorporating recent experimental and survey findings with large-scale simulations, we gain leverage in explaining the life cycle of a rumor, making a unique contribution to both the Political Science and Complexity literatures. We believe that, by using Agent-Based Modeling, scholars may be able to better approximate the complex process through which human beings, immersed within their social networks, receive and spread true or false information.

24 Note the clustering at the top of the graph, at 500 ticks of the model, when the program was coded to stop.

The model results discussed above, though limited in that they represent ideal types, indicate the importance of the position of Rejectors in slowing down or stopping the dissemination of rumors in any given community. To the extent that some individuals in this group reject rumors because they possess enough political information to recognize them as blatantly untrue, this finding has substantial implications for normative concerns in the democratic theory literature. Empirical research demonstrates that who possesses political information differs across demographic groups (Delli Carpini and Keeter 1996). Bluntly stated, some groups tend to be more politically knowledgeable than others. Since Rejectors will not be equally distributed across groups, our findings suggest that rumor dissemination will be widest or most probable among particular sociodemographic groups. The result may be a further distortion of the preferences of members of these disadvantaged groups, as they then must form political preferences with insufficient and inaccurate information.

How far a rumor will spread depends not only on the types of networks in which it is introduced, but also on the connectedness of information disseminators. This suggests that the presence of social media, which allows for far more expansive social networks than those based on face-to-face interaction, provides an important environmental stimulus for rumor spread, particularly if individuals sharing misinformation are trusted within their networks. Such trusted individuals likely make up at least part of individuals’ social networks on platforms like Twitter. Sixty-three percent of American Twitter users get political news via Twitter (Barthel et al 2015). Contrary to popular assumptions, this group includes both older (over 35) and younger (under 35) Americans (ibid).


For example, Donald Trump’s retweeting of slander against Jeb Bush’s Mexican wife spread that message to Trump’s 3.3 million followers 25, at least some of whom presumably trusted Trump and wanted to believe negative information about his opponent for the Republican presidential nomination. Trump’s later deleting the tweet, however, would not undo or put the brakes on the spreading rumor. Given that “incorrect information travels farther [and] faster on Twitter than corrections” do (Silverman 2014), as Twitter continues to grow, it provides an ideal forum for rumor dissemination and acceptance, in addition to the churches, social groups, workplaces and other small worlds in which rumors already spread. Only when a highly connected and broadly trusted individual shuts down a rumor very early in its life cycle might this increasingly important news forum stop a rumor from spreading. 26

The normative importance of these findings should not be underestimated. Rumors in Scale-Free (hubbed) situations have a limited lifespan, given a Rejector in a central position early in the rumor’s spread. But factual information against any rumor will matter very little in a tightly knit community, particularly if highly connected individuals or other opinion leaders initially believe an inaccurate rumor. Nearly all that matters in a Small World is trust; and nearly all that matters in a Scale-Free environment is what the hubbed agents believe.

25 See Koffler 2015 for details about Trump’s retweet.

26 There are some developers working on platforms to track the veracity of rumors, essentially a big data version of PolitiFact (Garber 2014). But they acknowledge there is little commercial incentive to do so.

Human networks remain incredibly complex. In actuality, one type of network might be embedded within or otherwise connected to another type of network. To use the analogy of Google Maps, we can zoom in or out on any given place. When we do so, we change the level of detail depicted to the eye, but the pictures nonetheless represent part of the same spatial reality. In this paper, we have argued that political scientists have zoomed in on networks when studying rumor dissemination, capturing this phenomenon at a very local level. ABM promises to provide scholars with insight as to what rumor dissemination looks like at higher levels, improving our overall understanding of how rumors move through populations.

Rumors and misinformation are not new to American politics. Politicians have long contended with the spread of rumors. John and Abigail Adams frequently complained about rumors linking John Adams to support for re-establishing a monarchy in the U.S. (Ellis 2010). Andrew Jackson blamed rumors about his marriage being bigamous for the death of his wife (Meacham 2009). Yet what politicians have been complaining about for almost three hundred years, political scientists are only beginning to study systematically. The results presented here suggest that we may be entering a new political era, in which rumors play a more central role in politics, spreading to more individuals and becoming increasingly difficult to halt when inaccurate. To the extent that we accept that good political decisions rest on the possession of accurate information, this poses a substantial problem for American democracy.


References

Allport, Gordon W. and Leo Postman. 1947. The Psychology of Rumor. New York: Henry Holt & Co.

Anderson, Roy M. and Robert M. May. 1979. “Population Biology of Infectious Diseases: Part I.” Nature 280: 361-367. DOI: 10.1038/280361a0

Banerjee, Abhijit. 1993. “The Economics of Rumours.” Review of Economic Studies 60(2): 309–27.

Barkun, Michael. 2003. A Culture of Conspiracy: Apocalyptic Visions in Contemporary America. Berkeley: University of California Press.

Bartels, Lawrence M. 1996. “Uninformed votes: Information effects in presidential elections.” American Journal of Political Science 40(1): 194-230.

--- 2008. Unequal Democracy: The political economy of the new Gilded Age. Princeton: Princeton University Press.

Barthel, Michael, Elisa Shearer, Jeffrey Gottfried and Amy Mitchell. 2015. “The Evolving Role of News on Twitter and Facebook.” Pew Research Center’s Project for Excellence in Journalism. (July 14). http://www.journalism.org/2015/07/14/the-evolving-role-of-news-on-twitter-and-facebook/ (July 23, 2015).

Berinsky, Adam J. 2012. “Rumors, Truths and Reality: A study of political misinformation.” http://web.mit.edu/berinsky/www/files/rumor.pdf (January 21, 2015).

--- 2015. “Rumors and Health Care Reform: Experiments in political misinformation.” British Journal of Political Science. Forthcoming.

Bhattacharya, Rajeev, Timothy M. Devinney and Madan M. Pillutla. 1998. “A Formal Model of Trust Based on Outcomes.” Academy of Management Review 23(3): 459-472.

Bhavnani, Ravi, Michael Findley and James Kuklinski. 2009. “Rumor Dynamics in Ethnic Violence.” Journal of Politics 71(3): 876-892. DOI: 10.1017/S002238160909077X

Bourdieu, Pierre. 1993. “Public Opinion Does Not Exist.” In Sociology in Question, ed. Pierre Bourdieu. London: SAGE, 149-158.

Cappella, Joseph N. and Kathleen Hall Jamieson. 1997. Spiral of Cynicism: The press and the public good. New York: Oxford University Press.

Clauset, Aaron, Cosma Rohilla Shalizi and M. E. J. Newman. 2009. “Power-Law Distributions in Empirical Data.” SIAM Review 51(4): 661-703.

Coronel, Jason C., Kara D. Federmeier and Brian D. Gonsalves. 2014. “Event-related potential evidence suggesting voters remember political events that never happened.” Social Cognitive and Affective Neuroscience 9(3): 358-366. DOI: 10.1093/scan/nss143

Coronel, Jason C. and James H. Kuklinski. 2012. “Political Psychology at Stony Brook: A retrospective.” Critical Review 24(2): 185-198. DOI: 10.1080/08913811.2012.711021

Crawford, Jarret T. and Anuschka Bhatia. 2012. “Birther Nation: Political Conservatism is Associated with Explicit and Implicit Beliefs that President Barack Obama is Foreign.” Analyses of Social Issues and Public Policy 12(1): 364-376. DOI: 10.1111/j.1530-2415.2011.01279.x


Delhey, Jan and Kenneth Newton. 2003. “Who Trusts? The origins of social trust in seven societies.” European Societies 5(2): 93–137. DOI: 10.1080/1461669032000072256

Delli Carpini, Michael. 2000. “Gen.com: Youth, Civic Engagement, and the New Information Environment.” Political Communication 17(4): 341-349. DOI: 10.1080/10584600050178942

--- and Scott Keeter. 1996. What Americans Know about Politics and Why It Matters. New Haven, Conn.: Yale University Press.

Downs, Anthony. 1957. An Economic Theory of Democracy. New York: Harper and Row.

Dunbar, R. I. M. 1993. “Coevolution of Neocortical Size, Group Size and Language in Humans.” Behavioral and Brain Sciences 16: 681-735. DOI: 10.1017/S0140525X00032325

Ellis, Joseph J. 2010. First Family: Abigail and John Adams. New York: Knopf.

Frey, William H. 1995. “The New Geography of Population Shifts: Trends Toward Balkanization.” In State of the Union: America in the 1990s, Vol. 2, ed. Reynolds Farley. New York: Russell Sage, 271–336.

Gaines, Brian J., James H. Kuklinski, Paul J. Quirk, Buddy Peyton and Jay Verkuilen. 2007. “Same Facts, Different Interpretations: Partisan Motivation and Opinion on Iraq.” Journal of Politics 69(4): 957–974. DOI: 10.1111/j.1468-2508.2007.00601.x

Gaines, Brian J., James H. Kuklinski and Paul J. Quirk. 2007. “The Logic of the Survey Experiment Reexamined.” Political Analysis 15(1): 1-20. DOI: 10.1093/pan/mpl008

Garber, Megan. 2014. “How to Stop a Rumor Online (Before the Rumor Becomes a Lie).” The Atlantic (September 26). http://www.theatlantic.com/technology/archive/2014/09/how-to-stop-a-rumor-online-before-the-rumor-becomes-a-lie/380778/ (July 23, 2015).

Garrett, R. Kelly. 2011. “Troubling Consequences of Online Political Rumoring.” Human Communication Research 37(2): 255–274. DOI: 10.1111/j.1468-2958.2010.01401.x

Golbeck, Jennifer Ann. 2005. “Computing and Applying Trust in Web-Based Social Networks.” Ph.D. diss. University of Maryland.

Granovetter, Mark S. 1973. “The Strength of Weak Ties.” American Journal of Sociology 78(6): 1360–1380.

Hansen, Lars Kai, Adam Arvidsson, Finn Aarup Nielsen, Elanor Colleoni and Michael Etter. 2011. “Good Friends, Bad News: Affect and Virality in Twitter.” In Future Information Technology, Communications in Computer and Information Science Volume 185, eds. James J. Park, Laurence T. Yang and Changhoon Lee. New York: Springer, 34-43. http://arxiv.org/pdf/1101.0510.pdf (July 23, 2015).

Hartman, Todd K. and Adam J. Newmark. 2012. “Motivated Reasoning, Political Sophistication, and Associations between President Obama and Islam.” PS 45(3): 449-455. DOI: http://dx.doi.org/10.1017/S1049096512000327

Hetherington, Marc J. 2005. Why Trust Matters: Declining Political Trust and the Demise of American Liberalism. Princeton: Princeton University Press.

Huang, Haifeng. 2015. “A War of (Mis)Information: The Political Effects of Rumors and Rumor Rebuttals in an Authoritarian Country.” British Journal of Political Science. Forthcoming. http://faculty.ucmerced.edu/sites/default/files/hhuang24/files/rumors_and_rebuttals_ssrn.pdf (July 23, 2015).

Huckfeldt, Robert and John Sprague. 1987. “Networks in Context: The Social Flow of Political Information.” American Political Science Review 81(4): 1197-1216. DOI: 10.2307/1962585

Inglehart, Ronald and Christian Welzel. 2005. Modernization, Cultural Change and Democracy: The human development sequence. New York: Cambridge University Press.

Kahneman, Daniel. 2011. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux.

Kemper, John T. 1978. “The effects of asymptomatic attacks on the spread of infectious disease: A deterministic model.” Bulletin of Mathematical Biology 40: 707–718.

Kermack, William Ogilvy and Anderson Gray McKendrick. 1927. “A Contribution to the Mathematical Theory of Epidemics.” Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 115(772): 700-721.

Koffler, Jacob. 2015. “Donald Trump Tweets Racially Charged Jab at Jeb Bush’s Wife.” Time (July 6). http://ti.me/1Cl0Hut (July 23, 2015).

Koniaris, Marios, Ioannis Anagnostopoulos and Yannis Vassiliou. 2015. “Network Analysis in the Legal Domain: A complex model for European Union legal sources.” (January 21). http://arxiv.org/pdf/1501.05237.pdf (April 11, 2015).

Konnikova, Maria. 2014. “The Limits of Friendship.” The New Yorker (October 7). http://www.newyorker.com/science/maria-konnikova/social-media-affect-math-dunbar-number-friendships (April 5, 2015).

Kosfeld, Michael. 2005. “Rumours and Markets.” Journal of Mathematical Economics 41: 646–64. DOI: 10.1016/j.jmateco.2004.05.001

Krippendorff, Klaus. 2005. The Social Construction of Public Opinion. Departmental Papers (ASC), 75.

Kuklinski, James H., Paul J. Quirk, Jennifer Jerit, David Schwieder and Robert F. Rich. 2000. “Misinformation and the Currency of Democratic Citizenship.” The Journal of Politics 62(3): 790-816. DOI: 10.1111/0022-3816.00033

Lippmann, Walter. 1922. Public opinion. New York: Free Press.

Lupton, Robert and Christopher Hare. 2015. “Conservatives are more likely to believe that vaccines cause autism.” WashingtonPost.com (March 1). http://www.washingtonpost.com/blogs/monkey-cage/wp/2015/03/01/conservatives-are-more-likely-to-believe-that-vaccines-cause-autism/ (May 6, 2015).

Meacham, Jon. 2009. American Lion: Andrew Jackson in the White House. New York: Random House.

--- 2013. Thomas Jefferson: The Art of Power. New York: Random House.

McClurg, Scott D. 2006. “Political disagreement in context: The conditional effect of neighborhood context, disagreement and political talk on electoral participation.” Political Behavior 28(4): 349-366. DOI: 10.1007/s11109-006-9015-4

Mutz, Diana. 2006. Hearing the Other Side: Deliberative versus Participatory Democracy. New York: Cambridge University Press.

Nyhan, Brendan and Jason Reifler. 2015. “When Corrections Fail: The persistence of political misperceptions.” Political Behavior. Forthcoming. https://www.dartmouth.edu/~nyhan/nyhan-reifler.pdf (March 18, 2015).


O’Kane, Paula and Owen Hargie. 2007. “Intentional and unintentional consequences of substituting face-to-face interaction with e-mail: An employee-based perspective.” Interacting with Computers 19(1): 20–31. DOI: 10.1016/j.intcom.2006.07.008

Pasek, Josh, Tobias H. Stark, Jon A. Krosnick and Trevor Tompson. 2014. “What motivates a conspiracy theory? Birther beliefs, partisanship, liberal-conservative ideology, and anti-Black attitudes.” Electoral Studies. Forthcoming. DOI: 10.1016/j.electstud.2014.09.009

Pew Center for Excellence in Journalism. 2010. “Growing Number of Americans Say Obama is a Muslim.” (August 18). http://www.pewforum.org/2010/08/18/growing-number-of-americans-say-obama-is-a-muslim/ (July 23, 2015).

--- 2014. “Public Trust in Government: 1958-2014.” (November 13). http://www.people-press.org/2014/11/13/public-trust-in-government/ (March 18, 2015).

Piazza, Jared and Jesse M. Bering. 2008. “Concerns about reputation via gossip promote generous allocations in an economic game.” Evolution and Human Behavior 29: 172–178. DOI: 10.1016/j.evolhumbehav.2007.12.002

Putnam, Robert D. 2015. Our Kids: The American dream in crisis. New York: Simon & Schuster.

Ruiz, Carlos, David Domingo, Josep Lluís Micó, Javier Díaz-Noci, Koldo Meso and Pere Masip. 2011. “Public Sphere 2.0? The Democratic Qualities of Citizen Debates in Online Newspapers.” International Journal of Press/Politics 16(4): 463–487. DOI: 10.1177/1940161211415849

Ruscio, Kenneth P. 1999. “Jay’s Pirouette, or Why Political Trust is Not the Same as Personal Trust.” Administration & Society 31(5): 639-657. DOI: 10.1177/00953999922019274

Silverman, Craig. 2014. “Visualized: Incorrect information travels farther, faster on Twitter than corrections.” Poynter.org (November 25). http://www.poynter.org/news/mediawire/165654/visualized-incorrect-information-travels-farther-faster-on-twitter-than-corrections/ (July 23, 2015).

Sniderman, Paul M., Philip E. Tetlock and Laurel Elms. 2001. “Public Opinion and Democratic Politics: The problem of non-attitudes and the social construction of political judgment.” In Citizens and politics: Perspectives for citizens and politics, ed. J. H. Kuklinski. New York: Cambridge University Press, 254-288.

Stimson, James A. 1991. Public opinion in America: Moods, cycles, and swings. Boulder: Westview Press.

Sunstein, Cass R. 2009. On Rumors: How falsehoods spread, why we believe them, what can be done. Princeton: Princeton University Press.

Weitz-Shapiro, Rebecca and Matthew S. Winters. 2015. “Can Citizens Discern? Information Credibility, Political Sophistication, and the Punishment of Corruption in Brazil.” Brown University.

Zaller, John. 1992. The nature and origins of mass opinion. Cambridge: Cambridge University Press.


Table 1: Model Parameters

Set A: Individual Parameters
1. Average interpersonal trust (TG)
2. Average trust of political institutions (TP)
3. Threshold for believing the rumor (A)

Set B: Rumor Parameters
1. Number of initial believers (N_b)

Set C: Community Parameters
1. Number of agents (N)
2. Type of network

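Table 2: Percent Rumor Belief in a Small World Network as a Function of Network Size and Rumor Acceptance Threshold

| Network Size | Rumor Acceptance Threshold | Minimum | Average | Maximum |
|---|---|---|---|---|
| N = 125 | Low | 57.14 | 95.18 | 100 |
| N = 125 | Medium | 8.75 | 64.27 | 100 |
| N = 125 | High | 4.88 | 21.35 | 77.78 |
| N = 500 | Low | 66.77 | 95.73 | 100 |
| N = 500 | Medium | 90.65 | 65.25 | 100 |
| N = 500 | High | 3.38 | 20.71 | 76.23 |
| N = 1500 | Low | 69.49 | 95.79 | 100 |
| N = 1500 | Medium | 7.55 | 65.03 | 99.90 |
| N = 1500 | High | 4.53 | 20.79 | 75.47 |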


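Table 3: Percent Rumor Belief in a Scale-Free Network as a Function of Network Size and Rumor Acceptance Threshold

| Network Size | Rumor Acceptance Threshold | Minimum | Average | Maximum |
|---|---|---|---|---|
| N = 125 | Low | 0 | 67.33 | 100 |
| N = 125 | Medium | 0 | 37.84 | 97.67 |
| N = 125 | High | 0 | 12.55 | 55.56 |
| N = 500 | Low | 0 | 65.14 | 99.44 |
| N = 500 | Medium | 0 | 39.30 | 93.75 |
| N = 500 | High | 0 | 10.34 | 45.86 |
| N = 1500 | Low | 0 | 62.96 | 97.91 |
| N = 1500 | Medium | 0 | 31.21 | 94.57 |
| N = 1500 | High | 0 | 9.33 | 45.17 |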


Figure 1a: Speed of Rumor Penetration in a Small World Network

Figure 1b: Speed of Rumor Penetration in a Scale-Free Network

Figure 2a: Proportion of Believers as a Function of Rejector Connectedness in a Scale-Free Network

Figure 2b: Proportion of Believers as a Function of Rejector Connectedness in a Small World Network

Figure 3a: Speed of Rumor Penetration as a Function of Susceptible Agent Connectedness in a Small World Network

Figure 3b: Speed of Rumor Penetration as a Function of Susceptible Agent Connectedness in a Scale-Free Network

Figure 3c: Speed of Rumor Penetration as a Function of Susceptible Agent Connectedness in a Scale-Free Network (Enlarged to show detail)

Figure 4a: Speed of Rumor Penetration in a Small World Network

Figure 4b: Speed of Rumor Penetration in a Scale-Free Network

Figure 4c: Speed of Rumor Penetration in a Scale-Free Network (Enlarged to show detail)