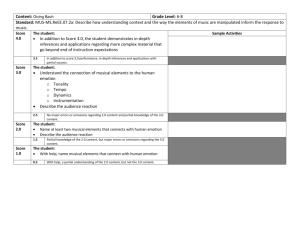

Emotional responses to music - NEMCOG
