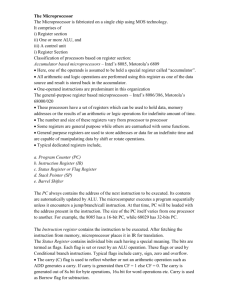

Central Processing Unit

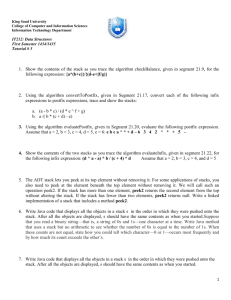
