Parallel Extensions to the Matrix Template Library*



Andrew Lumsdaine†

Brian C. McCandless†

Abstract

We present the preliminary design for a C++ template library to enable the compositional

construction of matrix classes suitable for high performance numerical linear algebra computations. The library based on our interface definition — the Matrix Template Library (MTL) — is

written in C++ and consists of a small number of template classes that can be composed to represent commonly used matrix formats (both sparse and dense) and parallel data distributions. A

comprehensive set of generic algorithms provides high performance for all MTL matrix objects

without requiring specific functionality for particular types of matrices. We present performance data to demonstrate that there is little or no performance penalty caused by the powerful

MTL abstractions.

1 Introduction

There is a common perception in scientific computing that abstraction is the enemy of performance.

Although there is continual interest in using languages such as C or C++ and the powerful

data abstractions that those languages provide, the conventional wisdom is that data abstractions

inherently carry with them a (perhaps severe) performance penalty.

Our thesis is that this is not necessarily the case and that, in fact, abstraction can be an effective

tool in enabling high performance — but one must choose the right abstractions.

The misperception about abstraction springs from numerous examples of C++ libraries that

provide a very nice user interface through polymorphism, operator overloading, and so forth so

that the user can implement an algorithm or a library in a “natural” way (see, e.g., SparseLib++

and IML++ [8]). Such an approach will (by design) hide computational costs from the user and

degrade performance. One approach to providing performance and abstraction is through the use

of lazy evaluation (see, e.g., [2]), but this approach can have other performance penalties as well as

implementation difficulties.

One of the most important concerns in obtaining high performance on modern workstations

is proper exploitation of the memory hierarchy. That is, a high-performance algorithm must be

cognizant of the costs of memory accesses and must be structured to maximize use of registers

and cache and to minimize cache misses, pipeline stalls, etc. Most importantly, data abstractions

can be made that explicitly account for hierarchical memory and which enable a programmer to

readily exploit it. The particular set of abstractions that we present here to bridge the performance-abstraction gap is the Matrix Template Library (MTL), written in C++. In the following sections,

we describe the basic design of MTL, discuss parallel extensions to MTL, and present experimental

results showing that MTL provides performance competitive with (or better than) traditional

mathematical libraries.

* To appear in Proc. 8th SIAM Conference on Parallel Processing for Scientific Computing. This work was supported by NSF cooperative grant ASC94-22380. Computational resources provided to the University of Notre Dame under the auspices of the IBM SUR program.

† Department of Computer Science and Engineering, University of Notre Dame, Notre Dame, IN 46556; {Lumsdaine.1,McCandless.1}@nd.edu; http://www.cse.nd.edu/~lsc/research/mtl/.

We remark that this work is decidedly not an attempt to “prove” that a particular language (in

our case, C++) offers higher performance than another language (e.g., Fortran). Such arguments

are, ultimately, pointless. Any language with a mature compiler can offer high performance (the

PhiPAC effort definitively settles this question [4]). Software development, even scientific software

development, is about more than just performance and, except for academic situations, one must

necessarily be concerned with the costs of software over its entire life-cycle. Thus, we contend

that the only relevant discussion to have about languages is how particular languages enable the

robust construction, maintenance, and evolution of complex software systems. In that light, modern

high-level languages have a distinct advantage — most of them were developed specifically for

the development of complex software systems and the more widely-used ones have survived only

because they are able to meet the needs of software developers.

2 The Standard Template Library

2.1 A New Paradigm, Not Just a New Library

The idea for the Matrix Template Library was inspired to a large extent by the Standard Template

Library (STL) for C++ [12]. STL has become extremely popular because of its elegance, richness,

and versatility. The original motivation for STL, however, was not to provide yet another library of

standard components, but rather to introduce a new programming paradigm [14, 15].

This new paradigm was based on the observation that many algorithms can be abstracted away

from the particular representations of the data structures upon which they operate. As long as the

data structures provide a standard interface for algorithms to use, algorithms and data structures can

be freely mixed and matched. Moreover, this paradigm realizes that this process of abstraction can

be done without sacrificing performance.

To realize an implementation of an algorithm which is independent of data structure representation requires language support, however. In particular, a language must allow algorithms (and data)

to be parameterized not only by the values of the formal parameters (the arguments), but also by the

type of the data. Few languages offer this capability, and it has only (relatively) lately become part

of C++. In C++, functions and object classes are parameterized through the use of templates [19],

hence the realization of a generic algorithm library in C++ as the Standard Template Library.

2.2 The Structure of STL

STL provides the following sets of components: Containers, Generic Algorithms, Iterators,

Adapters, Function Objects (“Functors”), and Allocators. Since these types of components also

form the framework for MTL, we discuss them briefly here.

Containers are objects that contain other objects (e.g., a list of elements). STL uses templates

to parameterize what is contained. Thus, the same template list code can be used to implement a

list of integers, or a list of doubles, or a list of lists, etc.

The generic algorithms defined by STL are a set of data-format independent algorithms and

are parameterized by the type of their arguments. The particular algorithms provided by the STL

specification are general computer-science type algorithms (e.g., sorting, searching, etc.).

Iterators are objects that generalize access to other objects (iterators are sometimes called

“generalized pointers”). The definition of the iterator classes in STL provides the uniform interface

between algorithms and containers necessary to enable genericity. That is, each container class has

certain iterators which can be used to access and perhaps manipulate its contents. STL algorithms

are in turn written solely in terms of iterators.


The remaining components are perhaps less pre-eminent in STL. Adapter classes are used

to provide new interfaces to other components. Just as an iterator generalizes and makes more

powerful the concept of a pointer, a function object generalizes and makes more powerful the

concept of a function pointer. Finally, allocators are classes that manage the use of memory.
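As a small illustration of the function-object idea, the following sketch defines a functor that carries its own state (a scale factor) and hands it to a standard algorithm. The names scale_by and scale_in_place are hypothetical and chosen only for this example; std::transform is the standard library algorithm.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical function object: unlike a plain function pointer, it can
// carry state (here, the scale factor) and its call operator is visible to
// the compiler at the point of use, which makes inlining straightforward.
struct scale_by {
    double factor;
    explicit scale_by(double f) : factor(f) {}
    double operator()(double x) const { return factor * x; }
};

// Scale every element of v in place using the standard transform algorithm.
inline void scale_in_place(std::vector<double>& v, double factor) {
    std::transform(v.begin(), v.end(), v.begin(), scale_by(factor));
}
```

The algorithm itself knows nothing about scaling; the behavior is supplied entirely by the function object passed to it.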

2.3 Example

The following code fragment demonstrates the use of the generic inner_product() algorithm.

Note that the inner-product can be taken between containers of arbitrary type.

int            x1[100];
vector<double> x2(100);
list<double>   x3;

// ... initialize data ...

// Compute inner product of x1 and x2 -- an array and a vector
double result = inner_product(&x1[0], &x1[100], x2.begin(), 0.0);

// Compute inner product of x2 and x3 -- a vector and a list
result = inner_product(x2.begin(), x2.end(), x3.begin(), 0.0);
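To make the role of iterators concrete, the sketch below shows one way such a generic algorithm could be written. It is an illustrative re-implementation under the name generic_inner_product (a hypothetical name), not the library's own source; the standard library already provides std::inner_product in the header numeric.

```cpp
#include <cassert>
#include <list>

// Illustrative re-implementation. The body uses only iterator operations
// (comparison, dereference, increment), so it works for any pair of
// containers whose iterators support them.
template <class InputIt1, class InputIt2, class T>
T generic_inner_product(InputIt1 first1, InputIt1 last1, InputIt2 first2, T init) {
    for (; first1 != last1; ++first1, ++first2) {
        init = init + (*first1) * (*first2);
    }
    return init;
}

// Example use: a built-in array of int against a list of double.
inline double array_times_list() {
    int a[3] = {1, 2, 3};
    std::list<double> b;
    b.push_back(0.5);
    b.push_back(1.5);
    b.push_back(2.0);
    return generic_inner_product(a, a + 3, b.begin(), 0.0);
}
```

As with the standard algorithm, the second range must contain at least as many elements as the first; the caller is responsible for that.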

3 The Matrix Template Library

MTL is by no means the first attempt to bring abstraction to scientific programming [3], nor is

it the first attempt at a mathematical library in C++. Other notable efforts include HPC++ [20],

LAPACK++ [9], SparseLib++/IML++ [8], and the Template Numerical Toolkit [16]. MTL is

unique, however, in its general underlying philosophy (see below) and in its particular commitment

to self-contained high performance. Other libraries, if they are concerned about performance at all,

attain high performance by making (mixed-language) calls to BLAS subroutines. The higher-level

C++ code merely provides a syntactically pleasing means for gluing high-performance subroutines

together, but does not provide flexible means for obtaining high performance (as MTL does).

3.1 The MTL Philosophy

The goal of MTL is to introduce the philosophy of STL to a particular application domain, namely

high-performance numerical linear algebra. The underlying ideas remain the same: to provide

a framework in which algorithms and data structures are separated, to provide a classification of

standard components, to define a set of interfaces for those components, and to provide a reference

implementation of a conforming library.

The basic architectural design of MTL (from the bottom up) begins with a fundamental

arithmetic type — this organizes bytes into (say) doubles. Next, we collect groups of the arithmetic

types into one-dimensional containers. Finally, we use a two-dimensional container to organize the

one-dimensional containers. Note what we have not done to this point. We have not indicated

how this two-dimensional container of arithmetic types corresponds to a mathematical matrix

of elements. With the two-dimensional container, however, we do explicitly know how data is

arranged in memory and hence where opportunities for high performance will be greatest. The

transformation of one- and two-dimensional containers into (respectively) vectors and matrices is

accomplished through the use of adapter classes.


3.2 The Design of MTL

MTL provides the following sets of components: one-dimensional containers, two-dimensional

containers, orientation, shape, generic algorithms, allocators, iterators, and a matrix adapter.

Because of space limitations, we can only describe each of them briefly. These components are

described more fully in the MTL specification [13].

One-Dimensional Containers As with containers in STL, one-dimensional containers in MTL

are objects that contain other objects. We distinguish these containers as being “one-dimensional”

because elements within such containers can be accessed using a single index. The declarations for

MTL's one-dimensional containers are as follows:

namespace mtl {
template <class T, class Allocator = allocator> class vector;
template <class T, class Allocator = allocator> class pair_vector;
template <class T, class Allocator = allocator> class compressed;
template <class T, class Allocator = allocator> class list;
template <class T, class Allocator = allocator> class map;
};

These declarations may seem somewhat formidable to the C++ novice.¹ The statement

template <class T, class Allocator = allocator>

simply indicates that the following class is parameterized by class T and by class

Allocator. The class T may be either an object class or a fundamental C++ type, such

as int or double, and parameterizes the type of object contained by the container class. The

class Allocator template argument parameterizes the class that the container uses to allocate

memory. In this case, the Allocator class has a default value; containers that are declared with

a single template argument (T) will use the default allocator class for Allocator.
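The effect of a default template argument can be seen in the following minimal sketch. The class simple_vector is hypothetical and is not the MTL vector class; it uses std::allocator<T> from the standard library as the default, whereas the declarations above write the default simply as allocator.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <type_traits>
#include <vector>

// Hypothetical container: T is the element type; Allocator falls back to
// std::allocator<T> when the user supplies only T.
template <class T, class Allocator = std::allocator<T> >
class simple_vector {
public:
    typedef Allocator allocator_type;
    explicit simple_vector(std::size_t n) : data_(n) {}
    std::size_t size() const { return data_.size(); }
    T& operator[](std::size_t i) {
        assert(i < data_.size());
        return data_[i];
    }
private:
    std::vector<T, Allocator> data_;  // storage delegated to the standard vector
};

// Declaring the container with one argument selects the default allocator.
static_assert(std::is_same<simple_vector<double>::allocator_type,
                           std::allocator<double> >::value,
              "single-argument form uses the default allocator");
```

A user who needs different memory management would name a second template argument explicitly; everyone else writes simple_vector<double> and never sees the allocator.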

The MTL vector class is similar to the STL vector class (which is similar to a C++ array)

with an interface tailored to MTL requirements, and provides the basis for dense (mathematical)

vectors and matrices. The remaining container classes are associative containers (they explicitly

store index-value pairs) and form the basis for different types of sparse matrices. The different

container classes have different computational complexity (computational complexity of particular

container operations is part of the formal MTL specification), allowing users to choose a format

that is most effective for particular applications.
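The idea of a one-dimensional container that stores index-value pairs can be sketched as follows. The type sparse_entries and the function sparse_dense_dot are hypothetical names for this example and do not reflect the actual layout or interface of the MTL sparse containers.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical sparse one-dimensional container: only nonzero entries are
// stored, each as an (index, value) pair.
typedef std::vector<std::pair<std::size_t, double> > sparse_entries;

// Dot product of a sparse container with a dense one. The cost is
// proportional to the number of stored entries, not to the vector length.
inline double sparse_dense_dot(const sparse_entries& x, const std::vector<double>& y) {
    double sum = 0.0;
    for (std::size_t k = 0; k < x.size(); ++k) {
        assert(x[k].first < y.size());  // stored index must be valid for y
        sum += x[k].second * y[x[k].first];
    }
    return sum;
}
```

The choice of underlying storage (a flat vector of pairs here; a list or a map in other formats) is what changes the cost of operations such as insertion and lookup.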

Two-Dimensional Containers Two-dimensional containers in MTL are containers of other

containers. We distinguish these containers as being “two-dimensional” because elements within

such containers must be accessed with a pair of indices. MTL provides the following two-dimensional containers:

namespace mtl {

template <class OneD, class Allocator = allocator> class vector_of;

template <class OneD, class Allocator = allocator> class pointers_to;

};

The two-dimensional container classes have as a template argument a one-dimensional container and provide a linear arrangement of those containers. The fundamental difference between

these two classes is in the complexity required to interchange one-dimensional containers — the

vector_of class requires linear time whereas the pointers_to class requires constant time.
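The difference in interchange cost can be illustrated with plain standard-library storage. The sketch below is one reading of the two strategies, not the MTL implementation: the first function models rows stored side by side in a single buffer, the second models an outer container of pointers to rows. Both function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// "vector_of"-style storage (assumed): rows live side by side in one
// buffer, so exchanging two rows exchanges every element (linear time).
inline void swap_rows_contiguous(std::vector<double>& buf, std::size_t ncols,
                                 std::size_t r1, std::size_t r2) {
    assert((r1 + 1) * ncols <= buf.size() && (r2 + 1) * ncols <= buf.size());
    for (std::size_t j = 0; j < ncols; ++j) {
        std::swap(buf[r1 * ncols + j], buf[r2 * ncols + j]);
    }
}

// "pointers_to"-style storage (assumed): the outer container holds
// pointers to rows, so exchanging two rows is one pointer swap.
inline void swap_rows_by_pointer(std::vector<double*>& rows,
                                 std::size_t r1, std::size_t r2) {
    assert(r1 < rows.size() && r2 < rows.size());
    std::swap(rows[r1], rows[r2]);
}
```

Cheap interchange matters for operations such as row pivoting, where whole one-dimensional containers are exchanged repeatedly.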

¹ We remark that we are somewhat forward-looking in our definition of MTL in that we use features, such as namespace and default template arguments, that are new to C++ and not yet widely supported by available compilers.


Orientation and Shape MTL provides two types of components to map from two-dimensional

containers to matrix structure — orientation and shape. The shape class describes the

large-scale non-zero structure of the matrix. MTL provides the following shape classes (with the

obvious interpretations):

namespace mtl {

class general;

class upper_triangle;

class lower_triangle;

class unit_upper_triangle;

class unit_lower_triangle;

class banded;

};

The orientation class maps matrix indices to two-dimensional container indices. MTL

provides the following orientation classes:

namespace mtl {

class row;

class col;

class diagonal;

class anti_diagonal;

};

The row, col, diagonal, and anti_diagonal classes align the one-dimensional container along (i.e., the one-dimensional container iterators vary fastest along) the matrix row, column,

diagonal, and anti-diagonal, respectively.
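The index mapping performed by an orientation can be sketched for the row and column cases. The types row_oriented and col_oriented and the function element are hypothetical stand-ins; the actual interface of the MTL orientation classes is defined in the MTL specification [13] and may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical orientation types. Each maps a matrix index pair (i, j) to a
// container index pair (outer, inner): "outer" selects a one-dimensional
// container and "inner" selects an element within it.
struct row_oriented {
    static std::pair<std::size_t, std::size_t> map(std::size_t i, std::size_t j) {
        return std::make_pair(i, j);  // one container per matrix row
    }
};

struct col_oriented {
    static std::pair<std::size_t, std::size_t> map(std::size_t i, std::size_t j) {
        return std::make_pair(j, i);  // one container per matrix column
    }
};

// Element access written once for any orientation and any two-dimensional
// container that supports c[outer][inner].
template <class Orientation, class TwoD>
double& element(TwoD& c, std::size_t i, std::size_t j) {
    std::pair<std::size_t, std::size_t> p = Orientation::map(i, j);
    assert(p.first < c.size() && p.second < c[p.first].size());
    return c[p.first][p.second];
}
```

Because the orientation is a template argument, the mapping is resolved at compile time and the same stored data can be viewed as a matrix or as its transpose without copying.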

Generic Algorithms MTL provides a number of high-performance generic algorithms for

numerical linear algebra. These algorithms can be generally classified as vector arithmetic, operator

application, and operator update operations and they supply a superset of the functionality provided

in level-1, level-2, and level-3 BLAS [11, 7, 6]. It is important to understand here at what

level these algorithms are generic, however. To obtain high performance on a modern high-performance computer, an algorithm must exploit and manage the memory layout of its data. Thus,

MTL provides generic algorithm interfaces at the matrix level, but the algorithms themselves are

implemented generically at the (one-dimensional or two-dimensional) container level. Note that

the dispatch from matrix-level to container-level is done at compile-time; there is no run-time

performance penalty.
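One common C++ technique for this kind of compile-time dispatch is overloading on an empty tag type. The sketch below applies it to a matrix-vector product; it is an assumption about how such dispatch could be done, not a description of MTL's internals, and the names row_tag, col_tag, mult_impl, and mult are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct row_tag {};  // inner containers are matrix rows
struct col_tag {};  // inner containers are matrix columns

typedef std::vector<std::vector<double> > two_d;

// Container-level kernel for row storage: y += A*x as a sequence of dot products.
inline void mult_impl(const two_d& a, const std::vector<double>& x,
                      std::vector<double>& y, row_tag) {
    assert(y.size() == a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
        for (std::size_t j = 0; j < a[i].size(); ++j)
            y[i] += a[i][j] * x[j];
}

// Container-level kernel for column storage: y += A*x as a sequence of
// scaled column additions, so the inner loop still walks memory contiguously.
inline void mult_impl(const two_d& a, const std::vector<double>& x,
                      std::vector<double>& y, col_tag) {
    assert(x.size() == a.size());
    for (std::size_t j = 0; j < a.size(); ++j)
        for (std::size_t i = 0; i < a[j].size(); ++i)
            y[i] += a[j][i] * x[j];
}

// Matrix-level interface: the overload is selected by the compiler from the
// Orientation type, so no run-time branch is involved.
template <class Orientation>
void mult(const two_d& a, const std::vector<double>& x, std::vector<double>& y) {
    mult_impl(a, x, y, Orientation());
}
```

Both kernels compute the same mathematical result; each simply traverses the data in the order in which it is laid out.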

Allocators As with STL, MTL allocators are used to manage memory. The default MTL allocator

class allows the elements of the two-dimensional matrix to be laid out contiguously in memory.

This is an important feature for interfacing MTL to external libraries (such as LAPACK [1]), which

expect data to be laid out in one-dimensional fashion.

Iterators In addition to the native container iterators, MTL one-dimensional containers provide

a value iterator for accessing container values, and an index iterator for accessing

container indices. Moreover, a block iterator component is provided at the two-dimensional

container level to allow iteration over two-dimensional regions. The structure of MTL is thus

compatible with (and is in fact an early test vehicle for) the notion of a “lite” BLAS [18].
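To indicate why iteration over two-dimensional regions is useful, the following sketch performs a matrix-matrix product block by block so that each block can be reused while it is resident in cache. It uses plain index loops rather than MTL's block iterator, whose interface is not reproduced here; the function name blocked_multiply, the column-major layout, and the block size parameter bs are assumptions made for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// C += A*B for n-by-n column-major matrices, visiting the operands in
// bs-by-bs blocks. Element (i, j) of a matrix m is stored at m[i + j * n].
inline void blocked_multiply(const std::vector<double>& a,
                             const std::vector<double>& b,
                             std::vector<double>& c,
                             std::size_t n, std::size_t bs) {
    assert(bs > 0 && a.size() == n * n && b.size() == n * n && c.size() == n * n);
    for (std::size_t jj = 0; jj < n; jj += bs)
        for (std::size_t kk = 0; kk < n; kk += bs)
            for (std::size_t ii = 0; ii < n; ii += bs)
                // Multiply block (ii, kk) of A by block (kk, jj) of B.
                for (std::size_t j = jj; j < std::min(jj + bs, n); ++j)
                    for (std::size_t k = kk; k < std::min(kk + bs, n); ++k)
                        for (std::size_t i = ii; i < std::min(ii + bs, n); ++i)
                            c[i + j * n] += a[i + k * n] * b[k + j * n];
}
```

The best block size depends on the cache and register structure of the target machine and would normally be determined experimentally.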


Matrix Adapter The matrix adapter class provides a uniform linear algebra interface to MTL

classes by wrapping up a two-dimensional container class, an orientation class, and a shape class.

namespace mtl {

template <class TwoD, class Orientation, class Shape = general> class matrix;

};

3.3 Example

The following MTL code fragment computes the product between a compressed-row sparse matrix

and a column-oriented dense matrix:

using namespace mtl;

double alpha, beta;

matrix<pointers_to<compressed<double> >, row, general> A;

matrix<pointers_to<vector<double> >, col, general> B, C;

// ... initialize scalars and matrices ...

multiply(C, A, B, alpha, beta);  // C <- alpha*A*B + beta*C
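For reference, the operation C <- alpha*A*B + beta*C with a row-oriented sparse A and column-oriented dense B and C can be written out with standard containers as below. This is only a statement of the semantics under assumed storage (one vector of (column, value) pairs per row of A, one vector per column of B and C); it is not the MTL multiply algorithm, and the name reference_multiply is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// A: sparse, one vector of (column index, value) pairs per matrix row.
typedef std::vector<std::vector<std::pair<std::size_t, double> > > sparse_rows;
// B, C: dense, one vector per matrix column.
typedef std::vector<std::vector<double> > dense_cols;

// Reference semantics of C <- alpha*A*B + beta*C.
inline void reference_multiply(dense_cols& c, const sparse_rows& a,
                               const dense_cols& b, double alpha, double beta) {
    assert(b.size() == c.size());
    for (std::size_t j = 0; j < c.size(); ++j) {       // each column of C
        assert(c[j].size() == a.size());
        for (std::size_t i = 0; i < a.size(); ++i) {   // each row of A
            double dot = 0.0;
            for (std::size_t k = 0; k < a[i].size(); ++k) {  // stored nonzeros only
                assert(a[i][k].first < b[j].size());
                dot += a[i][k].second * b[j][a[i][k].first];
            }
            c[j][i] = alpha * dot + beta * c[j][i];
        }
    }
}
```

Each entry of C is a sparse dot product between one row of A and one column of B, so the work is proportional to the number of stored nonzeros in A times the number of columns of B.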

4 Parallel Extensions to MTL

To extend MTL for parallel programming, we introduce four new components: distribution,

processor map, distributed vector, and distributed matrix.

Distribution An MTL distribution class maps global indices to local indices and is thus analogous

in function to an orientation class. Presently, MTL includes the following distribution:

namespace mtl {

template <class ProcMap> class block_cyclic;

};

The distribution class is parameterized by the type of underlying processor topology. Note

that although MTL presently only provides this single distribution, the block cyclic distribution is

general enough to subsume many other types of distributions [17].
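The global-to-local mapping of a one-dimensional block cyclic distribution can be sketched as follows. This is the textbook formula, not the interface of the MTL block_cyclic class; the names local_position and block_cyclic_map, and the parameters nb (block size) and nprocs (number of processors), are introduced here for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Owner processor and local index of one global index.
struct local_position {
    std::size_t proc;   // processor that owns the element
    std::size_t index;  // position of the element in that processor's local storage
};

// Blocks of nb consecutive global indices are dealt out to the processors
// in round-robin order.
inline local_position block_cyclic_map(std::size_t g, std::size_t nb,
                                       std::size_t nprocs) {
    assert(nb > 0 && nprocs > 0);
    std::size_t block = g / nb;                // which global block g falls in
    local_position p;
    p.proc = block % nprocs;                   // round-robin owner of that block
    p.index = (block / nprocs) * nb + g % nb;  // local block offset plus offset within block
    return p;
}
```

Two familiar special cases follow directly from the formula: nb = 1 gives a purely cyclic distribution, and a block size large enough that each processor receives a single block gives a purely blocked one.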

Processor Map The MTL processor map component provides a topological structure to the

processors of a parallel computer. MTL includes the following processor maps:

namespace mtl {

namespace pmap {

class one_d;

class two_d;

class three_d;

};

};

These maps provide one, two, and three-dimensional Cartesian topologies, respectively.
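A two-dimensional Cartesian topology amounts to a conversion between a linear processor rank and a pair of grid coordinates. The sketch below assumes row-major rank ordering; the type two_d_grid and its members are hypothetical and are not the interface of mtl::pmap::two_d.

```cpp
#include <cassert>
#include <cstddef>

// Coordinates of a processor in a two-dimensional grid.
struct grid_coords {
    std::size_t row;
    std::size_t col;
};

// Hypothetical nrows-by-ncols processor grid with row-major rank ordering.
struct two_d_grid {
    std::size_t nrows;
    std::size_t ncols;

    // Linear rank -> grid coordinates.
    grid_coords coords_of(std::size_t rank) const {
        assert(rank < nrows * ncols);
        grid_coords c;
        c.row = rank / ncols;
        c.col = rank % ncols;
        return c;
    }

    // Grid coordinates -> linear rank.
    std::size_t rank_of(std::size_t row, std::size_t col) const {
        assert(row < nrows && col < ncols);
        return row * ncols + col;
    }
};
```

A distribution parameterized by such a map can then assign blocks of matrix rows to grid rows and blocks of matrix columns to grid columns.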

Distributed Vector and Distributed Matrix The distributed vector and distributed matrix

components are adapter classes that respectively wrap up sequential MTL one-dimensional and two-dimensional containers together with a distribution. The MTL distributed vector and distributed

matrix classes are declared as follows:


namespace mtl {

template <class Dist, class SeqOneD> class dist_vector;

template <class Dist, class SeqTwoD> class dist_matrix;

};

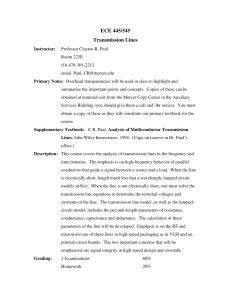

5 Performance Results

To demonstrate the performance of MTL, we present performance results from multiplying two

N × N column-oriented dense matrices. Sequential results were obtained on an IBM RS/6000

model 590 workstation; parallel results were obtained on a thin-node IBM SP-2 (which has slightly

lower single-node performance than the 590). All modules were compiled with the highest available

level of optimization for the particular language of the module.

Table 1 shows a comparison of IBM's (non-ESSL) version of DGEMM, the “DMR” version of

DGEMM obtained from netlib [10], the PhiPAC version of DGEMM, and MTL. In the sequential

case, MTL consistently provided the highest performance (except in one instance where it lagged

DMR by an insignificant amount). In the parallel case, MTL showed very good scalability as the

number of processors was increased.
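Scalability can be quantified from the rates in Table 1 as speedup, rate(p)/rate(1), and parallel efficiency, rate(p)/(p * rate(1)). The helper functions below are a hypothetical sketch of that arithmetic and are not part of MTL. With the MTL(8) and MTL(1) entries for N = 2048 (1388.8 and 218.1 Mflops), they give a speedup of about 6.4 and an efficiency of about 0.80; for N = 128 (301.7 and 202.6 Mflops) the efficiency is about 0.19, reflecting the smaller amount of work per node.

```cpp
#include <cassert>

// Speedup of a p-node run relative to the single-node run, computed from
// measured Mflop rates.
inline double parallel_speedup(double rate_p, double rate_1) {
    assert(rate_1 > 0.0);
    return rate_p / rate_1;
}

// Parallel efficiency: the fraction of ideal (linear) speedup achieved.
inline double parallel_efficiency(double rate_p, double rate_1, unsigned nodes) {
    assert(nodes > 0);
    return parallel_speedup(rate_p, rate_1) / nodes;
}
```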

The blocking parameters for the PhiPAC DGEMM were the “optimal” parameters for the

RS/6000 590, as obtained from the PhiPAC web page. Unfortunately, we were not able to reproduce

the near-peak performance of PhiPAC as reported on the web page. Evidently, the parameters

reported produce near-peak performance for certain matrix sizes, but that performance can fall off

dramatically for other matrix sizes. We did not attempt to find blocking parameters with more

consistently high performance, but we are fairly certain that such parameters exist and that they

would make PhiPAC competitive with the MTL results. Similar exploration of the blocking design

space would presumably also allow MTL to eke out a few more Mflops. The point here is not that

MTL has the fastest matrix-matrix product, but that it can be made as fast as other subroutines —

all within a framework that is more conducive to modern software engineering practice.


6 Conclusion

In this paper we have presented a (very brief) description of MTL and its parallel extensions. It

should be clear from the discussion and from the performance results that abstractions are not

barriers to high performance. Although not shown here due to space limitations, results from sparse

matrix computations showed MTL to have superior performance to standard sparse libraries (i.e.,

the NIST sparse BLAS [5]).

               Sequential Mflop rate                Parallel Mflop rate
    N     DGEMM     DMR   PhiPAC      MTL    MTL(1)  MTL(2)  MTL(4)  MTL(8)
  128    158.56  215.18   209.52   215.04     202.6   167.8   246.7   301.7
  256    159.62  218.80   207.34   221.63     215.5   243.1   398.3   552.8
  512    161.25  220.92    47.58   225.61     218.8   314.0   553.2   885.9
 1024    162.71  216.77    47.47   226.30     219.8   363.3   681.7  1173.5
 2048    162.44  218.94    47.41   226.14     218.1     N/A   759.2  1388.8

TABLE 1
Comparison of Mflop rates for dense matrix-matrix product. Sequential results are shown for DGEMM, the “DMR” version of DGEMM, the PhiPAC version of DGEMM, and MTL. Parallel results are shown for MTL on 1, 2, 4, and 8 SP-2 nodes. Results were not obtained for N = 2048 on 2 nodes because of memory limits.


Present work focuses on the development of specific mathematical libraries using (sequential

and distributed) MTL: a direct sparse solver library and a preconditioned iterative sparse solver

library. Future work will include the complete specification of a low-level “lite” BLAS level in

C++ to provide the foundation for MTL generic algorithms.

The current release of MTL (documentation and reference implementation) can be found at

http://www.cse.nd.edu/lsc/research/mtl/.

References

[1] E. Anderson, Z. Bai, C. Bischoff, J. Demmel, J. Dongarra, J. Du Croz, A. Greenbaum, S. Hammarling,

A. McKenney, and D. Sorensen, LAPACK: A portable linear algebra package for high-performance

computers, in Proc. Supercomputing '90, IEEE Press, 1990, pp. 1–10.

[2] S. Atlas et al., POOMA: A high performance distributed simulation environment for scientific

applications, in Proc. Supercomputing '95, 1995.

[3] S. Balay, W. D. Gropp, L. C. McInnes, and B. F. Smith, Efficient management of parallelism in object-oriented numerical software libraries, in Modern Software Tools in Scientific Computing, E. Arge,

A. M. Bruaset, and H. P. Langtangen, eds., Birkhauser, 1997.

[4] J. Bilmes, K. Asanovic, J. Demmel, D. Lam, and C.-W. Chin, Optimizing matrix multiply using

PHiPAC: A portable, high-performance, ANSI C coding methodology, Tech. Rep. CS-96-326, University of Tennessee, May 1996. Also available as LAPACK working note 111.

[5] S. Carney et al., A revised proposal for a sparse BLAS toolkit, Preprint 94-034, AHPCRC, 1994.

SPARKER working note #3.

[6] J. Dongarra, J. Du Croz, I. Duff, and S. Hammarling, A set of level 3 basic linear algebra subprograms,

ACM Transactions on Mathematical Software, 16 (1990), pp. 1–17.

[7] J. Dongarra, J. Du Croz, S. Hammarling, and R. Hanson, Algorithm 656: An extended set of basic linear

algebra subprograms: Model implementations and test programs, ACM Transactions on Mathematical

Software, 14 (1988), pp. 18–32.

[8] J. Dongarra, A. Lumsdaine, X. Niu, R. Pozo, and K. Remington, A sparse matrix library in C++ for

high performance architectures, in Proc. Object Oriented Numerics Conference, Sun River, OR, 1994.

[9] J. Dongarra, R. Pozo, and D. Walker, LAPACK++: A design overview of object-oriented extensions for

high performance linear algebra, in Proc. Supercomputing '93, IEEE Press, 1993, pp. 162–171.

[10] J. J. Dongarra, P. Mayes, and G. R. di Brozolo, The IBM RISC System/6000 and linear algebra

operations, Computer Science Technical Report CS-90-122, University of Tennessee, 1990.

[11] C. Lawson, R. Hanson, D. Kincaid, and F. Krogh, Basic linear algebra subprograms for Fortran usage,

ACM Transactions on Mathematical Software, 5 (1979), pp. 308–323.

[12] M. Lee and A. Stepanov, The standard template library, tech. rep., HP Laboratories, February 1995.

[13] A. Lumsdaine and B. C. McCandless, The matrix template library, BLAIS Working Note #2, University

of Notre Dame, 1996.

[14] D. R. Musser and A. A. Stepanov, Generic programming, in Lecture Notes in Computer Science 358,

Springer-Verlag, 1989, pp. 13–25.

[15] D. R. Musser and A. A. Stepanov, Algorithm-oriented generic libraries, Software–Practice and Experience, 24 (1994), pp. 623–642.

[16] R. Pozo, Template numerical toolkit for linear algebra: high performance programming with C++ and

the standard template library, in Proc. ETPSC III, August 1996.

[17] M. Sidani and B. Harrod, Parallel matrix distributions: Have we been doing it all right?, Tech. Rep.

CS-96-340, University of Tennessee, November 1996. LAPACK working note #116.

[18] A. Skjellum and A. Lumsdaine, Software engineering issues for linear algebra standards, BLAIS

Working Note #1, Mississippi State University and University of Notre Dame, 1995.

[19] B. Stroustrup, The C++ Programming Language, Addison-Wesley, Reading, Massachusetts, second ed., 1991.

[20] The HPC++ Working Group, HPC++ white papers, tech. rep., Center for Research on Parallel

Computation, 1995.