Speech Coding II.


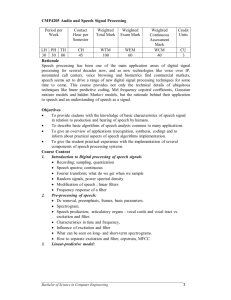


Jan Černocký, Valentina Hubeika

{cernocky|ihubeika}@fit.vutbr.cz

DCGM FIT BUT Brno

Speech Coding II

Jan Černocký, Valentina Hubeika, DCGM FIT BUT Brno


Agenda

• Tricks used in coding – long-term predictor, analysis by synthesis, perceptual filter.

• GSM full rate – RPE-LTP

• CELP

• GSM enhanced full rate – ACELP


EXCITATION CODING

• LPC reaches a low bit rate, but the quality is very poor (“robot” sound).

• This is caused by the primitive coding of the excitation (only voiced/unvoiced), whereas in human speech both components (excitation and modification) vary over time.

• In modern (hybrid) coders, a lot of attention is paid to coding the excitation.

• The following slides present techniques used in excitation coding.


Long-Term Predictor (LTP)

The LPC error signal behaves like noise, but only within short time intervals. For voiced sounds over a longer time span, the signal is correlated at multiples of the period of the fundamental frequency:

[Figure: two waveforms plotted over 160 samples (x-axis: samples), showing the periodicity at the pitch period.]

⇒ long-term predictor (LTP) with the transfer function $-bz^{-L}$. It predicts the sample s(n) from the earlier sample s(n − L), where L is the pitch period in samples (the lag).

Error signal:

$$e(n) = s(n) - \hat{s}(n) = s(n) - [-b\,s(n-L)] = s(n) + b\,s(n-L),$$

thus $B(z) = 1 + bz^{-L}$.

[Block diagram: s(n) → A(z) → B(z) → e(n) → Q → Q⁻¹ → ê(n) → 1/B(z) → 1/A(z) → ŝ(n)]
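The long-term predictor above can be illustrated with a minimal sketch, assuming the lag L and gain b are chosen to minimize the energy of e(n) = s(n) + b s(n − L) over a range of candidate lags. The function names `estimate_ltp` and `ltp_residual`, the lag range and the toy pulse-train signal are illustrative assumptions, not the procedure of any particular standard.

```python
def estimate_ltp(s, min_lag, max_lag):
    """Return (lag, b) minimizing sum_n (s[n] + b * s[n - lag])**2.

    The sums run over n >= max_lag so that every candidate lag is
    evaluated on the same number of samples.
    """
    start = max_lag
    best = None  # (error energy, lag, b)
    for lag in range(min_lag, max_lag + 1):
        num = sum(s[n] * s[n - lag] for n in range(start, len(s)))
        den = sum(s[n - lag] ** 2 for n in range(start, len(s)))
        if den == 0.0:
            continue  # delayed segment is silent, no gain can be estimated
        b = -num / den  # zero of the derivative of the error energy w.r.t. b
        err = sum((s[n] + b * s[n - lag]) ** 2 for n in range(start, len(s)))
        if best is None or err < best[0]:
            best = (err, lag, b)
    if best is None:
        raise ValueError("signal is silent over all candidate lags")
    return best[1], best[2]


def ltp_residual(s, lag, b):
    """e(n) = s(n) + b * s(n - lag), assuming s(n) = 0 for n < 0."""
    return [s[n] + (b * s[n - lag] if n >= lag else 0.0) for n in range(len(s))]


# Toy "voiced" signal: a pulse train with a pitch period of 40 samples.
s = [1.0 if n % 40 == 0 else 0.0 for n in range(160)]
lag, b = estimate_ltp(s, min_lag=20, max_lag=60)
e = ltp_residual(s, lag, b)
```

On this toy signal the search recovers the period as the lag, and the residual keeps only the first pulse, which the predictor cannot explain from earlier samples.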

Analysis by Synthesis

In most coders, the optimal excitation (after removing short-term and long-term correlations) cannot be found analytically. Therefore, all possible excitations have to be generated, the output (decoded) speech signal produced for each of them and compared to the original input signal. The excitation producing the minimal error wins. This method is called closed-loop. To distinguish between the excitation e(n) and the error between the generated output and the input signal, the latter (the error signal) is denoted ch(n).

[Block diagram: excitation encoding → e(n) → gain G → 1/B(z) → 1/A(z) → ŝ(n); ŝ(n) is subtracted from s(n) to give ch(n), which passes through W(z) to the energy computation and the minimum search that controls the excitation encoding.]
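The closed-loop idea can be sketched as a brute-force loop. This is a simplified illustration under stated assumptions: the long-term filter 1/B(z), the gain G and the perceptual filter W(z) are omitted, A(z) is given as a coefficient list with a leading 1, and the names `all_pole_filter` and `analysis_by_synthesis` are hypothetical.

```python
def all_pole_filter(a, x):
    """Filter x through 1/A(z), A(z) = a[0] + a[1] z^-1 + ..., a[0] = 1.

    Zero initial state is assumed.
    """
    y = []
    for n in range(len(x)):
        acc = x[n]
        for k in range(1, len(a)):
            if n - k >= 0:
                acc -= a[k] * y[n - k]
        y.append(acc)
    return y


def analysis_by_synthesis(s, candidates, a):
    """Synthesize every candidate excitation and keep the one whose
    output is closest (in error energy) to the input s."""
    best_index, best_energy = None, float("inf")
    for index, excitation in enumerate(candidates):
        s_hat = all_pole_filter(a, excitation)
        energy = sum((sn - shn) ** 2 for sn, shn in zip(s, s_hat))
        if energy < best_energy:
            best_index, best_energy = index, energy
    return best_index, best_energy


a = [1.0, -0.9]                       # toy first-order A(z)
candidates = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
s = all_pole_filter(a, candidates[1])  # input produced by candidate 1
winner, error_energy = analysis_by_synthesis(s, candidates, a)
```

The cost grows with the number of candidates times the frame length, which is why the later slides look for ways to reduce the work per candidate.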

Perceptual Filter W(z)

In the closed-loop method, the synthesized signal is compared to the input signal. The perceptual filter (PF) modifies the error signal according to the properties of human hearing.

Comparison of the error signal spectrum to the smoothed speech spectrum:

[Figure: left panel, log-spectra S(f) and CH(f) versus frequency (0–4000 Hz); right panel, log-frequency response of W(z) versus frequency (0–4000 Hz).]

In formant regions, speech is much “stronger” than the error ⇒ the error is masked. In the “valleys” between formants, the situation is reversed: for example, in the 1500–2000 Hz band the error is stronger than the speech, which leads to audible artifacts.

⇒ We need a filter that attenuates the error signal in the (unimportant) formant regions and amplifies it in the “sensitive” regions.

$$W(z) = \frac{A(z)}{A(z/\gamma)}, \quad \text{where } \gamma \in [0.8, 0.9] \tag{1}$$

How does it work?

• The numerator A(z) (which is actually an “inverse LPC filter”) has a characteristic inverse to the smoothed speech spectrum.

• The filter 1/A(z/γ) has a characteristic similar to 1/A(z) (the smoothed speech spectrum), but because its poles lie closer to the origin (further inside the unit circle), the peaks of the filter are less sharp.

• Multiplying these two components gives the transfer function W(f) with the required characteristic.

[Figure: left panel, log-frequency responses of A(z) and 1/A(z/γ) versus frequency (0–4000 Hz); right panel, log-frequency response of W(z) versus frequency (0–4000 Hz).]

Poles of the transfer functions 1/A(z) and 1/A(z/γ):

[Figure: polar plot of the poles of 1/A(z) and 1/A(z/γ) inside the unit circle.]
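A small numerical sketch may help here. Replacing z by z/γ multiplies the k-th coefficient of A(z) by γ^k, which moves every pole p of 1/A(z) to γp. The code below checks this on a toy second-order A(z) with a single "formant"; the names `bandwidth_expand` and `freq_response`, the pole radius 0.95 and γ = 0.85 are illustrative assumptions.

```python
import cmath
import math


def bandwidth_expand(a, gamma):
    """Coefficients of A(z/gamma), given A(z) = sum_k a[k] z^-k with a[0] = 1."""
    return [ak * gamma ** k for k, ak in enumerate(a)]


def freq_response(b, a, omega):
    """H(e^{j omega}) for H(z) = B(z)/A(z), coefficients in powers of z^-1."""
    z_inv = cmath.exp(-1j * omega)
    num = sum(bk * z_inv ** k for k, bk in enumerate(b))
    den = sum(ak * z_inv ** k for k, ak in enumerate(a))
    return num / den


# Toy A(z): conjugate pole pair of 1/A(z) at radius r and angle theta.
r, theta, gamma = 0.95, math.pi / 4, 0.85
a = [1.0, -2.0 * r * math.cos(theta), r * r]
a_gamma = bandwidth_expand(a, gamma)

# For a conjugate pair, the product of the poles equals the last coefficient,
# so the pole radius of 1/A(z/gamma) is sqrt(a_gamma[2]).
pole_radius = math.sqrt(a_gamma[2])

# W(z) = A(z) / A(z/gamma): numerator a, denominator a_gamma.
w_at_formant = abs(freq_response(a, a_gamma, theta))
w_in_valley = abs(freq_response(a, a_gamma, math.pi))
```

In this example W attenuates the error at the formant frequency and amplifies it far from the formant, which is the behaviour described above.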

Excitation Coding in Shorter Frames

While LP analysis is done over a frame with a “standard” length (usually 20 ms – 160

samples for Fs = 8 kHz), excitation is often coded in shorter frames – typically 40

samples.


ENCODER I: RPE-LTP

[Block diagram of the RPE-LTP encoder. Input: input signal. Blocks: preprocessing, short-term LPC analysis, short-term analysis filter, LTP analysis, long-term analysis filter, RPE grid selection and coding, RPE grid decoding and positioning. Outputs to the radio subsystem: reflection coefficients coded as log-area ratios (36 bits/20 ms), LTP parameters (9 bits/5 ms), RPE parameters (47 bits/5 ms).]

Signals marked in the diagram:

(1) Short-term residual

(2) Long-term residual (40 samples)

(3) Short-term residual estimate (40 samples)

(4) Reconstructed short-term residual (40 samples)

(5) Quantized long-term residual (40 samples)

• Regular-Pulse Excitation, Long-Term Prediction: GSM full-rate, ETSI 06.10.

• Short-term analysis (20 ms frames of 160 samples); the coefficients of an LPC filter are converted to 8 LARs.

• Long-term prediction (LTP, in frames of 5 ms = 40 samples): lag and gain.

• Excitation is coded in frames of 40 samples, where the error signal is down-sampled by a factor of 3 (14, 13, 13), and only the position of the first impulse is quantized (0, 1, 2, 3 (!)).

• The amplitudes of the individual impulses are quantized using APCM.

[Figure: regular-pulse excitation ê(n) plotted over n, with pulses e(1) … e(6) placed on a regular grid.]

• The result is a frame of 260 bits; 260 bits × 50 frames/s = 13 kbit/s.

• For more information, see the standard 06.10, available for download at http://pda.etsi.org

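Two points from the list above can be sketched in a few lines. The bit budget follows directly from the rates shown in the encoder diagram (36 bits per 20 ms frame, plus 47 + 9 bits for each of the four 5 ms sub-frames). The grid selection is only an illustration: it assumes that each of the four candidate grids holds 13 pulses and that the grid with the highest energy is chosen; the name `select_rpe_grid` and its parameters are hypothetical, and the weighting filter and APCM quantization are left out.

```python
def select_rpe_grid(residual, step=3, num_offsets=4, pulses=13):
    """Pick the grid offset whose decimated sub-sequence has the highest energy.

    Assumption for this sketch: every candidate grid holds `pulses` samples
    taken at positions offset, offset + step, offset + 2 * step, ...
    """
    best_offset, best_energy, best_pulses = 0, -1.0, []
    for offset in range(num_offsets):
        sub = [residual[offset + step * i] for i in range(pulses)
               if offset + step * i < len(residual)]
        energy = sum(v * v for v in sub)
        if energy > best_energy:
            best_offset, best_energy, best_pulses = offset, energy, sub
    return best_offset, best_pulses


# Toy long-term residual of one 40-sample sub-frame.
residual = [0.0] * 40
residual[4], residual[7] = 5.0, -3.0
offset, kept = select_rpe_grid(residual)

# Bit budget of one 20 ms frame, using the rates from the encoder diagram.
LAR_BITS, RPE_BITS, LTP_BITS, SUBFRAMES = 36, 47, 9, 4
bits_per_frame = LAR_BITS + SUBFRAMES * (RPE_BITS + LTP_BITS)
bit_rate = bits_per_frame * 50  # 50 frames per second
```

The two nonzero samples sit at positions 4 and 7, so only the grid starting at offset 1 captures them.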

Decoder RPE-LTP

[Block diagram of the RPE-LTP decoder. Inputs from the radio subsystem: RPE parameters (47 bits/5 ms), LTP parameters (9 bits/5 ms), reflection coefficients coded as log-area ratios (36 bits/20 ms). Blocks: RPE grid decoding and positioning, long-term synthesis filter, short-term synthesis filter, postprocessing. Output: output signal.]

Figure 1.2: Simplified block diagram of the RPE-LTP decoder

CELP – Codebook-Excited Linear Prediction

Excitation is coded using code-books.

Basic structure with a perceptual filter:

[Block diagram: excitation codebook → gain G → 1/B(z) → 1/A(z) → ŝ(n); ŝ(n) is subtracted from s(n) to give q(n), which is filtered by W(z) and passed to the energy computation and the minimum search that selects the codebook entry.]

Each tested signal has to be filtered by $W(z) = \frac{A(z)}{A^\star(z)}$, with $A^\star(z) = A(z/\gamma)$ as in Eq. 1, which is too much work!

Perceptual Filter on the Input

Filtering is a linear operation, so W(z) can be moved to both branches: to the input and to the signal after the cascade of 1/B(z) and 1/A(z). In the synthesis branch, the cascade of 1/A(z) and W(z) simplifies to

$$\frac{1}{A(z)} \cdot \frac{A(z)}{A^\star(z)} = \frac{1}{A^\star(z)},$$

which is a new filter that has to be used after 1/B(z).

[Block diagram: s(n) → W(z) → p(n); excitation codebook → gain G → x(n) → 1/B(z) → e(n) → 1/A⋆(z) → p̂(n); p̂(n) is subtracted from p(n), followed by the energy computation and the minimum search.]
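The simplification can be checked numerically. The sketch below, which assumes zero initial filter states and a toy first-order A(z), filters an excitation through 1/A(z) followed by W(z) = A(z)/A⋆(z) and compares the result with filtering through 1/A⋆(z) alone. The helper names `fir_filter` and `all_pole_filter` are hypothetical.

```python
def fir_filter(b, x):
    """y(n) = sum_k b[k] x(n - k), zero initial state."""
    return [sum(b[k] * x[n - k] for k in range(len(b)) if n - k >= 0)
            for n in range(len(x))]


def all_pole_filter(a, x):
    """Filter x through 1/A(z) with a[0] = 1, zero initial state."""
    y = []
    for n in range(len(x)):
        acc = x[n]
        for k in range(1, len(a)):
            if n - k >= 0:
                acc -= a[k] * y[n - k]
        y.append(acc)
    return y


gamma = 0.8
a = [1.0, -0.9]                                       # toy A(z)
a_star = [ak * gamma ** k for k, ak in enumerate(a)]  # A*(z) = A(z/gamma)

e = [1.0, 0.0, -0.5, 0.25, 0.0, 0.0, 1.0, 0.0]

# Original structure: synthesis filter 1/A(z), then W(z) = A(z) / A*(z).
s_hat = all_pole_filter(a, e)
weighted = all_pole_filter(a_star, fir_filter(a, s_hat))

# Simplified structure: a single filter 1/A*(z).
direct = all_pole_filter(a_star, e)

max_difference = max(abs(u - v) for u, v in zip(weighted, direct))
```

The two outputs agree up to rounding error, so each candidate excitation needs one all-pole filter instead of three filtering passes.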

Null Input Response Reduction

The filter 1/A⋆(z) responds not only to the current excitation; it also has memory (delayed samples). Denote its impulse response as h(i):

$$\hat{p}(n) = \underbrace{\sum_{i=0}^{n-1} h(i)\,e(n-i)}_{\text{this frame}} + \underbrace{\sum_{i=n}^{\infty} h(i)\,e(n-i)}_{\text{past frames: } \hat{p}_0(n)} \tag{2}$$

The response to the past samples does not depend on the current input. It can therefore be computed only once and subtracted from the target signal (the input filtered by W(z)), which results in:

$$\hat{p}(n) - \hat{p}_0(n) = \sum_{i=0}^{n-1} h(i)\,e(n-i) \tag{3}$$
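As a minimal sketch of this decomposition, the code below filters one frame through an all-pole filter that still carries memory from the previous frame, and checks that the output equals the zero-input response p̂0(n) plus the zero-state response to the current excitation. The function `all_pole_filter`, its `past_y` argument and the toy first-order coefficients are assumptions made for illustration.

```python
def all_pole_filter(a, x, past_y=()):
    """Filter x through 1/A(z) with a[0] = 1.

    past_y holds the previous outputs y(-1), y(-2), ... (most recent first);
    missing values are taken as zero.
    """
    order = len(a) - 1
    hist = (list(past_y) + [0.0] * order)[:order]  # hist[k-1] = y(n-k)
    y = []
    for xn in x:
        yn = xn - sum(a[k] * hist[k - 1] for k in range(1, order + 1))
        hist = ([yn] + hist)[:order]
        y.append(yn)
    return y


a_star = [1.0, -0.72]   # toy A*(z)
memory = [0.5]          # y(-1) left over from the previous frame
e = [1.0, 0.0, -0.5, 0.0]

full = all_pole_filter(a_star, e, memory)                     # p_hat(n)
zero_input = all_pole_filter(a_star, [0.0] * len(e), memory)  # p_hat_0(n)
zero_state = all_pole_filter(a_star, e)                       # sum h(i) e(n-i)

# p_hat_0(n) is computed once per frame and removed from the target,
# so every candidate excitation only needs the zero-state response.
max_difference = max(abs(f - (zi + zs))
                     for f, zi, zs in zip(full, zero_input, zero_state))
```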

Next step: consider the long-term predictor:

$$\frac{1}{B(z)} = \frac{1}{1 - bz^{-M}}, \tag{4}$$

where M is the optimal lag. e(n) can be defined as

$$e(n) = x(n) + b\,e(n-M), \tag{5}$$

so in the filter equation, instead of e(n − i), we get:

$$\hat{p}(n) - \hat{p}_0(n) = \sum_{i=0}^{n-1} h(i)\,[x(n-i) + b\,e(n-M-i)]. \tag{6}$$

Then expand:

$$\hat{p}(n) - \hat{p}_0(n) = \sum_{i=0}^{n-1} h(i)\,x(n-i) + b \sum_{i=0}^{n-1} h(i)\,e(n-M-i), \tag{7}$$

because filtering is a linear operation. The second term represents the past excitation filtered by 1/A⋆(z) and multiplied by the LTP gain b. The LTP in the CELP coder can therefore be interpreted as another code-book, one that contains the past excitation. Here, it is treated as an actual code-book with rows e(n − M) (or $e^{(M)}$ in vector notation). In real applications, it is just a delayed excitation signal.


CELP Equations

$$\hat{p}(n) - \hat{p}_0(n) = \sum_{i=0}^{n-1} h(i)\,e(n-i) = \sum_{i=0}^{n-1} h(i)\left[g^{(j)} u^{(j)}(n-i) + b\,e(n-i-M)\right] = \hat{p}_2(n) + \hat{p}_1(n), \tag{8}$$

The task is to find:

• the gain g for the stochastic code-book,

• the best vector $u^{(j)}$ from the stochastic code-book,

• the gain b of the adaptive code-book,

• the best vector $e^{(M)}$ from the adaptive code-book.

In theory, all combinations would have to be tested, which is not feasible. A suboptimal procedure is used instead:

• First find p̂1(n) by searching the adaptive code-book. The result is the lag M and the gain b.

• Subtract p̂1(n) from p1(n) to get “an error signal of the second generation”, p2(n), which should be provided by the stochastic code-book.

• Find p̂2(n) in the stochastic code-book. The result is the index j and the gain g.

• As the last step, the gains are usually optimized (contributions from both code-books).


Final Structure of CELP:

[Block diagram: s(n) → W(z); subtracting p̂0 gives the target p1. Adaptive CB (entries e(n−M)) → gain b → 1/A⋆(z) → p̂1, selected by a minimum search; p1 − p̂1 gives p2. Stochastic CB (entries u(n, j)) → gain g → 1/A⋆(z) → p̂2, selected by a minimum search on p2 − p̂2.]

CELP equations (optional)

Searching the adaptive codebook

We want to minimize the error E1, which for one frame is:

$$E_1 = \sum_{n=0}^{N} \left(p_1(n) - \hat{p}_1(n)\right)^2. \tag{9}$$

We can rewrite this in more convenient vector notation and expand p̂1 into the gain b and the signal q(n), where q(n) is the filtered version of e(n − M):

$$q(n) = \sum_{i=0}^{n-1} h(i)\,e(n-i-M) \tag{10}$$

Multiplying this signal by the gain b gives

$$\hat{p}_1(n) = b\,q(n). \tag{11}$$

The goal is to find the minimum energy:

$$E_1 = \|p_1 - \hat{p}_1\|^2 = \|p_1 - b\,q^{(M)}\|^2, \tag{12}$$

which we can rewrite using inner products of the vectors involved:

$$E_1 = \langle p_1 - b\,q^{(M)},\, p_1 - b\,q^{(M)} \rangle = \langle p_1, p_1 \rangle - 2b\,\langle p_1, q^{(M)} \rangle + b^2 \langle q^{(M)}, q^{(M)} \rangle. \tag{13}$$

We begin by finding the optimal gain for each M by differentiating with respect to b (the derivative must be zero at the energy minimum):

$$\frac{\partial}{\partial b}\left[\langle p_1, p_1 \rangle - 2b\,\langle p_1, q^{(M)} \rangle + b^2 \langle q^{(M)}, q^{(M)} \rangle\right] = 0 \tag{14}$$

From here, we can directly write the result for lag M:

$$b^{(M)} = \frac{\langle p_1, q^{(M)} \rangle}{\langle q^{(M)}, q^{(M)} \rangle} \tag{15}$$

We now need to determine the optimal lag. Substituting $b^{(M)}$ from Eq. 15 into Eq. 13 leads to the minimization of

$$\min\left[\langle p_1, p_1 \rangle - \frac{2\,\langle p_1, q^{(M)} \rangle \langle p_1, q^{(M)} \rangle}{\langle q^{(M)}, q^{(M)} \rangle} + \frac{\langle p_1, q^{(M)} \rangle^2 \langle q^{(M)}, q^{(M)} \rangle}{\langle q^{(M)}, q^{(M)} \rangle^2}\right]. \tag{16}$$

The last two terms combine into $-\langle p_1, q^{(M)} \rangle^2 / \langle q^{(M)}, q^{(M)} \rangle$. We can drop the first term, as it does not influence the minimization, and convert the minimization into the maximization of:

$$M = \arg\max_M \frac{\langle p_1, q^{(M)} \rangle^2}{\langle q^{(M)}, q^{(M)} \rangle} \tag{17}$$
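Equations 15 and 17 can be turned into a short search loop. The following is a sketch under stated assumptions, not the procedure of a specific coder: the past excitation is kept in a list `past_e` (most recent sample last), only lags no shorter than the sub-frame are considered so that e(n − M) is fully known, and filtering is done as a truncated convolution with the impulse response h. All function names are hypothetical.

```python
def dot(u, v):
    """Inner product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def zero_state_filter(h, x):
    """Truncated convolution y(n) = sum_{i=0..n} h(i) x(n - i)."""
    return [sum(h[i] * x[n - i] for i in range(min(n, len(h) - 1) + 1))
            for n in range(len(x))]


def search_adaptive_codebook(p1, past_e, h, min_lag, max_lag):
    """Return (M, b): the lag maximizing <p1,q>^2 / <q,q> and its optimal gain."""
    n_sub = len(p1)
    if min_lag < n_sub or max_lag > len(past_e):
        raise ValueError("this sketch needs n_sub <= lag <= len(past_e)")
    best = None  # (score, lag, gain)
    for lag in range(min_lag, max_lag + 1):
        start = len(past_e) - lag
        e_m = past_e[start:start + n_sub]   # code-book row e^(M)
        q = zero_state_filter(h, e_m)       # q^(M)
        energy = dot(q, q)
        if energy == 0.0:
            continue
        corr = dot(p1, q)
        score = corr * corr / energy        # Eq. 17
        if best is None or score > best[0]:
            best = (score, lag, corr / energy)  # gain from Eq. 15
    if best is None:
        raise ValueError("all candidate vectors are silent")
    return best[1], best[2]


h = [1.0, 0.5, 0.25]
past_e = [0.3, -1.2, 0.7, 0.1, -0.4, 1.5, -0.9, 0.2, 0.6, -1.1, 0.8, -0.3]
# Target built from the excitation 7 samples back, scaled by 0.8.
p1 = [0.8 * v for v in zero_state_filter(h, past_e[5:9])]
lag, gain = search_adaptive_codebook(p1, past_e, h, min_lag=4, max_lag=12)
```

Because the target was constructed from lag 7 with gain 0.8, the search should return exactly that pair.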

Searching the stochastic codebook

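First, all codebook vectors $u^{(j)}(n)$ must be filtered by the filter $\frac{1}{A^\star(z)}$, giving $q^{(j)}$. Then we have to find the p̂2(n) that minimizes the error energy:

$$E_2 = \|p_2 - \hat{p}_2\|^2 = \|p_2 - g\,q^{(j)}\|^2$$

Solution:

• The error must be orthogonal to $q^{(j)}$: $\langle p_2 - g\,q^{(j)},\, q^{(j)} \rangle = 0$, which gives

$$g = \frac{\langle p_2, q^{(j)} \rangle}{\langle q^{(j)}, q^{(j)} \rangle}.$$

• The minimum error energy is

$$\min E_2 = \min \langle p_2 - g\,q^{(j)},\, p_2 \rangle = \|p_2\|^2 - \frac{\langle p_2, q^{(j)} \rangle^2}{\langle q^{(j)}, q^{(j)} \rangle},$$

so the best index is

$$j = \arg\max_j \frac{\langle p_2, q^{(j)} \rangle^2}{\langle q^{(j)}, q^{(j)} \rangle}.$$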
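The stochastic search follows the same pattern as the adaptive one. The sketch below assumes a tiny hand-made codebook and a truncated-convolution filter with impulse response h; it returns the index j, the gain g and the remaining error energy ‖p2‖² − ⟨p2, q⟩²/⟨q, q⟩. The function names and the codebook contents are illustrative assumptions.

```python
def dot(u, v):
    """Inner product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def zero_state_filter(h, x):
    """Truncated convolution y(n) = sum_{i=0..n} h(i) x(n - i)."""
    return [sum(h[i] * x[n - i] for i in range(min(n, len(h) - 1) + 1))
            for n in range(len(x))]


def search_stochastic_codebook(p2, codebook, h):
    """Return (j, g, error_energy) for the best filtered code vector."""
    best = None  # (score, index, gain)
    for j, u in enumerate(codebook):
        q = zero_state_filter(h, u)     # q^(j)
        energy = dot(q, q)
        if energy == 0.0:
            continue
        corr = dot(p2, q)
        score = corr * corr / energy    # quantity maximized over j
        if best is None or score > best[0]:
            best = (score, j, corr / energy)
    if best is None:
        raise ValueError("codebook contains only silent vectors")
    score, j, g = best
    return j, g, dot(p2, p2) - score


h = [1.0, 0.5, 0.25]
codebook = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, -1.0],
    [1.0, -1.0, 1.0, -1.0],
]
# Second-stage target built from code vector 2 with gain -0.5.
p2 = [-0.5 * v for v in zero_state_filter(h, codebook[2])]
j, g, error_energy = search_stochastic_codebook(p2, codebook, h)
```

The score uses the squared correlation, so a code vector with a negative optimal gain is found just as well as one with a positive gain.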

Example of a CELP encoder: ACELP – GSM EFR

• Classical CELP with an “intelligent code-book”.

• Algebraic Codebook Excited Linear Prediction, GSM Enhanced Full-rate, ETSI 06.60.

[Block diagram of the GSM EFR (ACELP) encoder; the labels below are recovered from the diagram.

Frame level:

• Preprocessing: preprocessing of s(n).

• LPC analysis (twice per frame): windowing and autocorrelation R[ ], Levinson-Durbin R[ ] → A(z), A(z) → LSP, LSP quantization (LSP indices), interpolation for the subframes, LSP → Â(z).

Subframe level:

• Open-loop pitch search (twice per frame): interpolation for the subframes, LSP → A(z), compute weighted speech, find open-loop pitch T_o.

• Adaptive codebook search: compute target for adaptive codebook x(n), compute impulse response h(n), find best delay and gain (pitch index), quantize LTP gain (LTP gain index), compute adaptive codebook contribution.

• Innovative codebook search: compute target for innovation, find best innovation (code index).

• Filter memory update: compute excitation, fixed codebook gain quantization (fixed codebook gain index), update filter memories for next subframe.]

• Again frames of 20 ms (160 samples).

• Short-term predictor: 10 coefficients a_i, computed twice per frame, converted to line-spectral pairs and jointly quantized using split-matrix quantization (SMQ).

• Excitation: 4 sub-frames of 40 samples (5 ms).

• Lag estimation: first open-loop, then closed-loop refinement around the rough estimate; fractional pitch with a resolution of 1/6 of a sample.

• Stochastic codebook: algebraic code-book. A code vector contains only 10 nonzero impulses, each either +1 or −1 ⇒ fast search (fast correlation: only summation, no multiplication), etc.

• 244 bits per frame × 50 frames/s = 12.2 kbit/s.

• For more information, see the standard 06.60, available for download at http://pda.etsi.org

• The decoder is shown next.


[Block diagram of the GSM EFR (ACELP) decoder; the labels below are recovered from the diagram.

Frame level: LSP indices → decode LSP → interpolation of LSP for the subframes → LSP → Â(z).

Subframe level: pitch index → decode adaptive codebook; gains indices → decode gains; code index → decode innovative codebook; construct excitation → synthesis filter → ŝ(n).

Post-processing: post filter applied to ŝ(n).]

Additional Reading

• Andreas Spanias (Arizona State University):

http://www.eas.asu.edu/~spanias

The section Publications/Tutorial Papers provides a good outline: “Speech Coding: A Tutorial Review”, part of which was printed in Proceedings of the IEEE, Oct. 1994.

• On the same page, the section Software/Tools/Demo - Matlab Speech Coding Simulations offers software for FS1015, FS1016, RPE-LTP and more. Fun to experiment with.

• ETSI standards for cell phones are available for free download from:

http://pda.etsi.org/pda/queryform.asp

As keywords, enter for instance “gsm half rate speech”. Many standards are provided with the source code of the coders in C.
