Information Retrieval Based Analysis of Software Artifacts

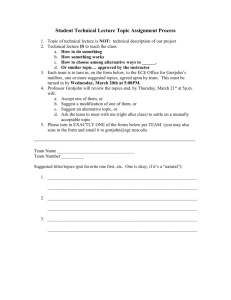

advertisement

Advanced Research In Software Engineering (ARiSE)

ECE, UT Austin


Ripon K. Saha, Lingming Zhang, Sarfraz Khurshid, Dewayne E Perry

ARiSE – UT Austin

© 2005, Dewayne E Perry

S5 2014


Introduction

Threats to Safety, Security, Reliability

Poor software engineering

Software faults

Inherent because of complexity

Even with the best SE teams using the best SE practices

Software artifacts

Source code, software fault reports, test cases, etc.

Text based

Have structure

Semantics-infused names and words


Exciting Results

Significant improvements gained using well-understood and standard IR techniques

Scalable approaches that are

Text based

Lightweight

Language independent

In structured retrieval, we do exploit language structure

Independent of specially built structures

Computationally efficient

We will look at two types of fault-related analyses:

Bug Localization

Test case prioritization


Bug Localization Approaches

Dynamic

+ precise detection

- needs information such as test coverage, execution traces

- computationally expensive

Static

+ computationally efficient

+ fewer requirements, e.g., source code and/or bug reports

- generally less accurate than dynamic approaches

- high-level detection

IR-based approach

Input:

Document collection: source code files

Query: bug reports

Output: ranked list of source code files
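To make the IR formulation above concrete, here is a minimal sketch of ranking source files against a bug report. It assumes that the files and the report have already been preprocessed into term lists. It uses Okapi BM25 weighting, the retrieval model this deck names later, with the common default parameters k1 = 1.2 and b = 0.75; both parameter values are assumptions. `Bm25Ranker` is a hypothetical name, and this is not BLUiR's or Indri's implementation.

```java
import java.util.*;

/** Minimal Okapi BM25 ranker: documents are source files, the query is a bug report. */
final class Bm25Ranker {
    private final Map<String, List<String>> docs;                 // file name -> preprocessed terms
    private final Map<String, Integer> docFreq = new HashMap<>();  // term -> number of files containing it
    private final double avgLen;                                  // average file length, in terms
    private final double k1;                                      // term-frequency saturation
    private final double b;                                       // strength of length normalization

    Bm25Ranker(Map<String, List<String>> docs, double k1, double b) {
        this.docs = docs;
        this.k1 = k1;
        this.b = b;
        long totalLen = 0;
        for (List<String> terms : docs.values()) {
            totalLen += terms.size();
            for (String t : new HashSet<>(terms)) {
                docFreq.merge(t, 1, Integer::sum);
            }
        }
        // Guard against division by zero for an empty or all-empty collection.
        this.avgLen = docs.isEmpty() ? 1.0 : Math.max(1.0, (double) totalLen / docs.size());
    }

    /** BM25 score of one file (given as its term list) for the query terms. */
    double score(List<String> query, List<String> doc) {
        Map<String, Integer> tf = new HashMap<>();
        for (String t : doc) {
            tf.merge(t, 1, Integer::sum);
        }
        int n = docs.size();
        double s = 0.0;
        for (String q : query) {
            int f = tf.getOrDefault(q, 0);
            if (f == 0) {
                continue; // a term absent from this file contributes nothing
            }
            int df = docFreq.getOrDefault(q, 0);
            double idf = Math.log(1.0 + (n - df + 0.5) / (df + 0.5));
            double lengthNorm = k1 * (1.0 - b + b * doc.size() / avgLen);
            s += idf * f * (k1 + 1.0) / (f + lengthNorm);
        }
        return s;
    }

    /** File names ordered from most to least relevant to the query. */
    List<String> rank(List<String> query) {
        List<String> names = new ArrayList<>(docs.keySet());
        names.sort(Comparator.comparingDouble((String name) -> score(query, docs.get(name))).reversed());
        return names;
    }
}
```

For example, `new Bm25Ranker(files, 1.2, 0.75).rank(reportTerms)` returns file names from most to least relevant. Files that share no term with the report score 0 and end up at the bottom.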


A Bug Report and Corresponding Fix

Bug ID: 80720

Summary: Pinned console does not remain on top

Description: Open two console views, ... Pin one console. Launch another program that produces output. Both consoles display the last launch. The pinned console should remain pinned.

Source code file: ConsoleView.java

    public class ConsoleView extends PageBookView
            implements IConsoleView, IConsoleListener { ...
        public void display(IConsole console) {
            if (fPinned && fActiveConsole != null) { return; }
        } ...
        public void pin(IConsole console) {
            if (console == null) {
                setPinned(false);
            } else {
                if (isPinned()) {
                    setPinned(false);
                }
                display(console);
                setPinned(true);
            }
        }
    }

Figure: A real bug report from Eclipse Project and the corresponding fixed source code [taken from Zhou et al., ICSE 2012]


Steps in IR-based Bug Localization

Preprocessing source code and bug report

Extract program constructs

Class name, method name, variable name, comments

Bug report summary, bug report description

Tokenization

Removing stop words

Stemming

Indexing preprocessed source code

Used Lemur/Indri toolkit for indexing

Indexed both CamelCase identifiers as-is and tokenized identifiers

Retrieving source code based on bug report

TF.IDF based on BM25 term weighting
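The preprocessing steps above might look roughly like the following sketch. The tiny stop-word list and the suffix-stripping `stem` method are illustrative assumptions. They stand in for a full stop list and a real stemmer such as Porter's. `Preprocessor` and its methods are hypothetical names. Following the indexing note above, a CamelCase identifier is kept whole (lowercased here) in addition to its split parts.

```java
import java.util.*;

/** Sketch of the preprocessing steps: tokenization, CamelCase splitting, stop-word removal, stemming. */
final class Preprocessor {
    // Illustrative stop-word list (assumption); real lists are much longer.
    private static final Set<String> STOP_WORDS = new HashSet<>(Arrays.asList(
            "a", "an", "and", "are", "does", "in", "is", "not", "of", "on", "or", "the", "to"));

    /** Splits an identifier such as "fActiveConsole" into lowercase parts [f, active, console]. */
    static List<String> splitCamelCase(String identifier) {
        List<String> parts = new ArrayList<>();
        // Split at lower/digit-to-upper boundaries, inside acronyms ("IConsole" -> I, Console), and at underscores.
        for (String p : identifier.split("(?<=[a-z0-9])(?=[A-Z])|(?<=[A-Z])(?=[A-Z][a-z])|_")) {
            if (!p.isEmpty()) {
                parts.add(p.toLowerCase(Locale.ROOT));
            }
        }
        return parts;
    }

    /** Crude suffix stripper standing in for a real stemmer (assumption). */
    static String stem(String w) {
        if (w.endsWith("ing") && w.length() > 5) {
            return w.substring(0, w.length() - 3);
        }
        if (w.endsWith("s") && !w.endsWith("ss") && w.length() > 3) {
            return w.substring(0, w.length() - 1);
        }
        return w;
    }

    /** Index terms for a piece of code or bug-report text. */
    static List<String> terms(String text) {
        List<String> out = new ArrayList<>();
        for (String token : text.split("[^A-Za-z0-9_]+")) {
            if (token.isEmpty()) {
                continue;
            }
            List<String> parts = splitCamelCase(token);
            if (parts.size() > 1) {
                out.add(token.toLowerCase(Locale.ROOT)); // keep the whole identifier as well
            }
            for (String p : parts) {
                if (!STOP_WORDS.contains(p)) {
                    out.add(stem(p));
                }
            }
        }
        return out;
    }
}
```

For instance, `terms("isPinned consoles")` keeps `ispinned` as a whole term, drops the stop word `is`, and reduces `consoles` to `console`.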


BLUiR: Architecture


Case Study 1 - Java

Data Set

Eclipse: 3075 bug reports, 12863 Java files

AspectJ: 286 bug reports, 6485 Java files

Used as the training set

SWT: 98 bug reports, 484 Java files

ZXing: 20 bug reports, 391 Java files

Evaluation Metrics

Recall in Top 1

Recall in Top 5

Recall in Top 10

Mean Average Precision (MAP)

Mean Reciprocal Rank (MRR)

Threats to validity

Structure of OOP

Quality of bug reports and source code

Open source code & system code bias
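The evaluation metrics can be computed per bug report and then averaged over all reports. MAP is the mean of the per-report average precision values, and MRR is the mean of the per-report reciprocal ranks. This sketch assumes, as is common in this line of work, that "Recall in Top k" counts a report as a hit when at least one of its fixed files appears among the top k results. `RankMetrics` is a hypothetical name.

```java
import java.util.*;

/** Per-query retrieval metrics; average them over all bug reports to get Recall@k, MAP, and MRR. */
final class RankMetrics {
    /** 1 if any relevant (fixed) file appears in the top k results, else 0. */
    static int hitAtK(List<String> ranked, Set<String> relevant, int k) {
        for (int i = 0; i < Math.min(k, ranked.size()); i++) {
            if (relevant.contains(ranked.get(i))) {
                return 1;
            }
        }
        return 0;
    }

    /** Reciprocal of the 1-based rank of the first relevant file (0 if none is retrieved). */
    static double reciprocalRank(List<String> ranked, Set<String> relevant) {
        for (int i = 0; i < ranked.size(); i++) {
            if (relevant.contains(ranked.get(i))) {
                return 1.0 / (i + 1);
            }
        }
        return 0.0;
    }

    /** Mean of the precision values measured at the rank of each relevant file. */
    static double averagePrecision(List<String> ranked, Set<String> relevant) {
        if (relevant.isEmpty()) {
            return 0.0;
        }
        int hits = 0;
        double sum = 0.0;
        for (int i = 0; i < ranked.size(); i++) {
            if (relevant.contains(ranked.get(i))) {
                hits++;
                sum += (double) hits / (i + 1); // precision at this rank
            }
        }
        return sum / relevant.size();
    }
}
```

For a ranking A, B, C, D with fixed files B and D, the reciprocal rank is 1/2 and the average precision is (1/2 + 2/4) / 2 = 0.5.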


RQ: Does indexing the exact identifier names improve bug localization?

Figure: Recall in Top 1, Top 5, and Top 10, MAP, and MRR (scale 0 to 1) for SWT, Eclipse, AspectJ, and ZXing. Each panel compares indexing tokenized identifiers only (Token) with indexing both exact and tokenized identifiers (Both).


RQ: Does modeling source code structure help improve accuracy?

Figure: Recall in Top 1, Top 5, and Top 10, MAP, and MRR (scale 0 to 1) for Eclipse, SWT, AspectJ, and ZXing, without (No) and with (Yes) modeling of source code structure.


RQ: Does BLUiR outperform other bug localization tools and models?

Figure: Recall in Top 1, Top 5, and Top 10, MAP, and MRR (scale 0 to 1) for Eclipse, SWT, AspectJ, and ZXing. Each panel compares BugLocator and BLUiR in the configurations labeled No and SB.


RQ: Does BLUiR outperform other bug localization tools and models?

Figure: Query-wise comparison for SWT, showing the rank improvement (from -20 to 50) for each query, by query number.


Case Study 2 – C

With Julia Lawall

Same metrics used

Two data sets used:

For comparison with Java, the previous data set (excluding ZXing)

For analysis of C code, the following data set:

| System | SLOC | Bugs (file level) | Files | Bugs (function level) | Functions |
|---|---|---|---|---|---|
| Python 3.4.0 | 380k | 3407 | 488 | | |
| GDB 7.7 | 1982k | 195 | 2655 | 177 | 41298 |
| WineHQ | 2340k | 2350 | 2815 | 2218 | 89430 |
| GCC 4.9.0 | 2571k | 216 | 22678 | 193 | 75746 |
| GCC NT | 2062k | 216 | 2473 | 193 | 40684 |
| Linux Kernel 3.14 | 11829k | 1548 | 19853 | 1178 | 347057 |


RQ: IR-related Properties of C & Java


RQ: IR-related Properties of C & Java

Term Type

Python

GDB

WineHQ

GCC

GCC NT

Linux

SWT

AspectJ

Eclipse


Function/Method-Terms*

Actual%

Unique%

66%

67%

72%

71%

76%

59%

44%

32%

20%

30%

46%

20%

95%

92%

97%

85%

67%

75%


Identifier-Terms

Actual%

Unique%

67%

59%

59%

69%

72%

59%

33%

18%

12%

21%

25%

10%

84%

91%

94%

48%

52%

48%


RQ: IR-related Properties of C & Java

C files are larger

The median C file size indicates that file size is highly skewed

The number of terms in C files is considerably larger than in Java classes

The use of English words is considerably higher in Java code than in C code

C and Java are quite different

Different language paradigms

Different naming styles (which affect IR)


RQ: Accuracy of Language Independent Retrieval

| Project | Top 1 | Top 5 | Top 10 | MAP | MRR |
|---|---|---|---|---|---|
| Python | 45% | 68% | 76% | 0.508 | 0.555 |
| GDB | 26% | 41% | 48% | 0.249 | 0.341 |
| WineHQ | 20% | 40% | 50% | 0.273 | 0.301 |
| GCC | 31% | 52% | 61% | 0.314 | 0.406 |
| Linux | 25% | 44% | 53% | 0.307 | 0.343 |
| SWT | 38% | 72% | 86% | 0.465 | 0.539 |
| AspectJ | 26% | 47% | 54% | 0.200 | 0.360 |
| Eclipse | 16% | 35% | 44% | 0.190 | 0.253 |


RQ: Accuracy of Language Independent Retrieval

The best results come from the smallest projects

Recall at Top 1, MAP, and MRR are consistently higher for C

However, Java gains a larger improvement in Recall at Top 10 than C

High correlation between the use of English words and accuracy of retrieval

Hence, greater use of English words in C programs leads to higher accuracy in localizing bugs


RQ: Accuracy of Flat-Text Retrieval

Relative change with respect to language-independent retrieval in parentheses.

| Project | Top 1 | Top 5 | Top 10 | MAP | MRR |
|---|---|---|---|---|---|
| Python | 47% (+4%) | 72% (+6%) | 80% (+5%) | 0.532 (+5%) | 0.581 (+5%) |
| GDB | 22% (-15%) | 42% (+2%) | 49% (+2%) | 0.231 (-7%) | 0.315 (-8%) |
| WineHQ | 26% (+30%) | 47% (+18%) | 55% (+10%) | 0.322 (+18%) | 0.358 (+19%) |
| GCC | 29% (-6%) | 52% (0%) | 61% (0%) | 0.300 (-4%) | 0.401 (-1%) |
| Linux | 23% (-8%) | 44% (0%) | 53% (0%) | 0.299 (-3%) | 0.333 (-3%) |
| SWT | 38% (0%) | 72% (0%) | 86% (0%) | 0.465 (0%) | 0.539 (0%) |
| AspectJ | 30% (+15%) | 51% (+9%) | 61% (+13%) | 0.221 (+10%) | 0.407 (+13%) |
| Eclipse | 24% (+50%) | 45% (+29%) | 54% (+23%) | 0.265 (+39%) | 0.340 (+34%) |
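The percentages in parentheses above are relative changes with respect to the language-independent results. For example, Eclipse's Recall at Top 1 goes from 16% to 24%, and (24 - 16) / 16 = +50%. A one-method helper reproduces such figures; the name `RelativeChange` is hypothetical.

```java
/** Relative change of a metric with respect to a baseline value, in percent. */
final class RelativeChange {
    static double percent(double baseline, double value) {
        if (baseline == 0.0) {
            throw new IllegalArgumentException("baseline must be non-zero");
        }
        return (value - baseline) / baseline * 100.0;
    }
}
```

For example, `RelativeChange.percent(0.190, 0.265)` gives about 39.5, which matches the +39% MAP change reported for Eclipse. `RelativeChange.percent(26, 22)` gives about -15.4, which matches GDB's -15%.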


RQ: Accuracy of Flat-Text Retrieval

Parse source code and extract only

class/file names

method/function names

identifiers

comment words

Discard language keywords and tokens

Again, small projects have the highest recall, MAP, and MRR

But removing keywords does not bring much improvement to C code retrieval
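The slide describes parsing the source code. As a rougher illustration, the following sketch approximates flat-text extraction for C lexically. It keeps identifier-like words, which covers names and comment words, and drops the 32 C89 keywords along with all punctuation and operators. It does not use a real C parser and does not treat preprocessor directives or macros specially. `FlatTextExtractor` is a hypothetical name.

```java
import java.util.*;
import java.util.regex.*;

/** Lexical approximation of flat-text extraction for C: keep identifier-like words, drop keywords. */
final class FlatTextExtractor {
    // The 32 keywords of C89/C90.
    private static final Set<String> C_KEYWORDS = new HashSet<>(Arrays.asList(
            "auto", "break", "case", "char", "const", "continue", "default", "do",
            "double", "else", "enum", "extern", "float", "for", "goto", "if",
            "int", "long", "register", "return", "short", "signed", "sizeof", "static",
            "struct", "switch", "typedef", "union", "unsigned", "void", "volatile", "while"));

    // Identifier-like words; operators, punctuation, and most literals never match.
    private static final Pattern WORD = Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    static List<String> extract(String source) {
        List<String> words = new ArrayList<>();
        Matcher m = WORD.matcher(source);
        while (m.find()) {
            String w = m.group();
            if (!C_KEYWORDS.contains(w)) {
                words.add(w);
            }
        }
        return words;
    }
}
```

Applied to `static int count_pins(struct console *c) { return c->pinned; }`, this keeps `count_pins`, `console`, `c`, `c`, and `pinned`, and drops `static`, `int`, `struct`, and `return`.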


RQ: Accuracy of Structured Retrieval

| Project | Top 1 | Top 5 | Top 10 | MAP | MRR |
|---|---|---|---|---|---|
| Python | 53% (+13%) | 77% (+7%) | 85% (+6%) | 0.586 (+10%) | 0.640 (+10%) |
| GDB | 28% (+27%) | 46% (+10%) | 55% (+15%) | 0.261 (+13%) | 0.366 (+16%) |
| WineHQ | 25% (-4%) | 47% (+0%) | 56% (+4%) | 0.325 (+1%) | 0.360 (+1%) |
| GCC | 34% (+17%) | 55% (+6%) | 63% (+3%) | 0.319 (+6%) | 0.441 (+10%) |
| Linux | 27% (+17%) | 49% (+11%) | 57% (+8%) | 0.337 (+13%) | 0.375 (+15%) |
| SWT | 55% (+45%) | 77% (+7%) | 86% (+0%) | 0.557 (+19%) | 0.645 (+20%) |
| AspectJ | 32% (+7%) | 51% (0%) | 61% (0%) | 0.240 (+20%) | 0.414 (+11%) |
| Eclipse | 31% (+29%) | 53% (+18%) | 63% (+17%) | 0.316 (+19%) | 0.416 (+22%) |


RQ: Accuracy of Structured Retrieval

Distinguish between

class/file names

method/function names

identifiers

comment words

Case Study 1 showed improvement for Java

Structure more effective than flat text for all C projects except WineHQ:

13% to 27% in Recall at Top 1 for C; 7% to 45% for Java

MAP: up to 13% for C; 20% for Java

Less improvement over language-independent retrieval for C (Recall at Top 1)

25% for WineHQ but less than 10% for most others

Eclipse: 94% improvement
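One simple way to exploit the distinction between fields is to match the query against each field separately and sum the per-field scores. The sketch below assumes that combination rule. It uses a deliberately simplified per-field weight, log(1 + tf), in place of BM25. The `StructuredScorer` class and its `Field` names are hypothetical.

Because log(1 + a) + log(1 + b) >= log(1 + a + b), a query term that appears in several fields scores at least as high under structured matching as under flat matching. An example would be a term that occurs in both a class name and a comment.

```java
import java.util.*;

/** Structured retrieval sketch: a document is split into fields and matched field by field. */
final class StructuredScorer {
    /** The four kinds of terms distinguished on the slide. */
    enum Field { CLASS_NAMES, METHOD_NAMES, IDENTIFIERS, COMMENTS }

    /** Simplified per-field weight: sum over query terms of log(1 + tf), a stand-in for BM25. */
    static double fieldScore(List<String> query, List<String> fieldTerms) {
        Map<String, Integer> tf = new HashMap<>();
        for (String t : fieldTerms) {
            tf.merge(t, 1, Integer::sum);
        }
        double s = 0.0;
        for (String q : query) {
            s += Math.log(1.0 + tf.getOrDefault(q, 0));
        }
        return s;
    }

    /** Structured score: match each field separately and sum the field scores. */
    static double structuredScore(List<String> query, Map<Field, List<String>> doc) {
        double total = 0.0;
        for (Field f : Field.values()) {
            total += fieldScore(query, doc.getOrDefault(f, Collections.emptyList()));
        }
        return total;
    }

    /** Flat score for comparison: merge all fields into one bag of words, then match once. */
    static double flatScore(List<String> query, Map<Field, List<String>> doc) {
        List<String> all = new ArrayList<>();
        for (List<String> terms : doc.values()) {
            all.addAll(terms);
        }
        return fieldScore(query, all);
    }
}
```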


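RQ: Accuracy of Function-Level Structured Retrieval

| Project | Top 1 | Top 5 | Top 10 | MAP | MRR |
|---|---|---|---|---|---|
| GDB | 7% | 21% | 27% | 0.073 | 0.145 |
| WineHQ | 8% | 16% | 21% | 0.085 | 0.122 |
| GCC | 9% | 18% | 25% | 0.081 | 0.144 |
| Linux | 11% | 19% | 24% | 0.127 | 0.159 |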

Recall at Top 1: 7% to 11%; Recall at Top 10: 21% to 27%

Not surprising: a function has much less information than a file

Retrieval at function level is more expensive than at file level

Effective strategy:

Use file-level retrieval – 80% accuracy for Top 100

Then, within that set, use function-level retrieval, with almost the same level of accuracy as at the file level
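The two-stage strategy can be sketched as follows. Take the N highest-ranked files from a file-level ranking (N = 100 on the slide), collect the functions they define, and rank only those functions. The function-level scorer is passed in as a parameter and left abstract. `TwoStageLocalizer` and `FunctionRef` are hypothetical names, not part of the original tooling.

```java
import java.util.*;
import java.util.function.ToDoubleFunction;

/** Two-stage localization: rank files first, then rank only the functions of the top-N files. */
final class TwoStageLocalizer {
    /** Hypothetical handle for a function and the file that defines it. */
    static final class FunctionRef {
        final String file;
        final String name;

        FunctionRef(String file, String name) {
            this.file = file;
            this.name = name;
        }
    }

    static List<FunctionRef> localize(List<String> rankedFiles,
                                      Map<String, List<FunctionRef>> functionsByFile,
                                      int topNFiles,
                                      ToDoubleFunction<FunctionRef> functionScore) {
        // Stage 1: keep only the functions defined in the N highest-ranked files.
        List<FunctionRef> candidates = new ArrayList<>();
        for (String file : rankedFiles.subList(0, Math.min(topNFiles, rankedFiles.size()))) {
            candidates.addAll(functionsByFile.getOrDefault(file, Collections.emptyList()));
        }
        // Stage 2: rank those candidates with the function-level scorer, best first.
        candidates.sort(Comparator.comparingDouble(functionScore).reversed());
        return candidates;
    }
}
```

This design ranks only the functions of the selected files, which is far fewer than all functions in a large system. The second-stage cost therefore stays small even for Linux-sized code bases.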


RQ: Importance of Information Sources

All information sources are important

Method/function names and identifier names most so


Conclusions: IR Based Bug Localization

Challenges for C-based IR Bug Localization

Less structure than Java

C preprocessor directives

Macros

Historically, fewer English words used in C programs

Still, IR-based bug localization is effective in C programs

But C programs benefit less from the use of program structure in IR retrieval


IR-based Regression Test Prioritization

Dynamic/static analysis for regression test case prioritization

Precise but significant overhead:

Instrumentation

Dynamic profiling of test coverage

Static analysis

REPiR – IR-based regression test prioritization tool

Document collection: tests

Query: difference between two versions

Results of our study of REPiR

Computationally efficient

Significantly better than many existing dynamic and static analysis techniques

At least as good as the others


REPiR Approach

Used the same IR approach as in bug localization

Off-the-shelf, state-of-the-art Indri toolkit

Same techniques: tokenization, stemming, removing stop words

Evaluation

7 open source Java projects with real regression faults

Compared against dynamic and static strategies

Document Collection

Structured documents have 4 kinds of terms: class, method, and identifier names, and comments

Queries

Low-level differences: diff between versions in git

High-level differences – 9 types of changes
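A low-level query can be assembled from the textual diff between two versions. This sketch assumes unified-diff text such as `git diff` produces. It takes the added (`+`) and removed (`-`) lines, skips the `---`/`+++` file headers, and splits the lines into lowercase terms. In practice those terms would then go through the same stemming and stop-word removal as the test documents. `DiffQuery` is a hypothetical name, and the high-level change types are not modeled.

```java
import java.util.*;

/** Builds a low-level query from unified-diff text: the terms of added and removed lines. */
final class DiffQuery {
    /** Added/removed lines without their +/- marker; context lines and hunk headers are skipped. */
    static List<String> changedLines(List<String> unifiedDiff) {
        List<String> out = new ArrayList<>();
        for (String line : unifiedDiff) {
            if (line.startsWith("+++ ") || line.startsWith("--- ")) {
                continue; // file headers such as "--- a/Foo.java"
            }
            if (line.startsWith("+") || line.startsWith("-")) {
                out.add(line.substring(1));
            }
        }
        return out;
    }

    /** Lowercase word terms of the changed lines, split on non-identifier characters. */
    static List<String> queryTerms(List<String> unifiedDiff) {
        List<String> terms = new ArrayList<>();
        for (String line : changedLines(unifiedDiff)) {
            for (String tok : line.split("[^A-Za-z0-9_]+")) {
                if (!tok.isEmpty()) {
                    terms.add(tok.toLowerCase(Locale.ROOT));
                }
            }
        }
        return terms;
    }
}
```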


REPiR Architecture


Empirical Evaluation

Is REPiR more effective than random or untreated test orders?

Do high-level program differences help improve the performance of REPiR?

Is structured retrieval more effective for RTP?

How does REPiR perform compared to the existing RTP techniques?

How does REPiR perform when it ignores language-level information?


Evaluation

Subject systems range in size from Time and Money (2.7k SLOC & 3k LOC test code) to Joda-Time (32.9k SLOC & 55.9k LOC test code)

Dynamic and static analysis strategies

UT – untreated test prioritization: keeps the original order of the test cases

RT – random test prioritization: rearranges test cases randomly

Dynamic coverage-based test prioritization

CMA – method coverage with the additional strategy

CMT – method coverage with the total strategy

CSA – statement coverage with the additional strategy

CST – statement coverage with the total strategy

JUPTA – static program analysis based on TA (test ability)

Analogously, JMA, JMT, JSA, JST
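The difference between the total strategies (CMT, CST) and the additional strategies (CMA, CSA) can be sketched as follows; the same idea applies to the JUPTA variants. Coverage is given as sets of covered element IDs, which may be methods or statements. The total strategy sorts tests by how much they cover. The additional strategy greedily picks the test that covers the most elements not yet covered. Resetting the covered set once no remaining test adds anything new is a common convention and is assumed here. `CoveragePrioritizer` is a hypothetical name.

```java
import java.util.*;

/** Greedy coverage-based test prioritization: "total" and "additional" strategies. */
final class CoveragePrioritizer {
    /** Total strategy: order tests by how many elements each covers, largest first. */
    static List<String> total(Map<String, Set<Integer>> coverage) {
        List<String> tests = new ArrayList<>(coverage.keySet());
        tests.sort(Comparator.comparingInt((String t) -> coverage.get(t).size()).reversed());
        return tests;
    }

    /** Additional strategy: repeatedly pick the test covering the most not-yet-covered elements. */
    static List<String> additional(Map<String, Set<Integer>> coverage) {
        List<String> remaining = new ArrayList<>(coverage.keySet());
        List<String> order = new ArrayList<>();
        Set<Integer> covered = new HashSet<>();
        while (!remaining.isEmpty()) {
            String best = null;
            int bestGain = -1;
            for (String t : remaining) {
                Set<Integer> gain = new HashSet<>(coverage.get(t));
                gain.removeAll(covered);
                if (gain.size() > bestGain) {
                    best = t;
                    bestGain = gain.size();
                }
            }
            if (bestGain == 0 && !covered.isEmpty()) {
                covered.clear(); // nothing new left to cover: reset and rank the rest afresh
                continue;
            }
            order.add(best);
            covered.addAll(coverage.get(best));
            remaining.remove(best);
        }
        return order;
    }
}
```

Consider T1 = {1, 2, 3, 4}, T2 = {1, 2, 3}, and T3 = {5, 6}. The total order is T1, T2, T3. The additional order is T1, T3, T2, because T2 adds nothing new after T1.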


REPiR (LDiff) at test method and class level


REPiR at test method and class level

REPiR LDiff performs significantly better than UT or RT

REPiR is fairly independent of the length of program differences

At method level, HDiff works a bit better than LDiff

At class level, LDiff works a bit better than HDiff

Structured retrieval performs about the same as unstructured retrieval


REPiR vs JUPTA/Coverage-based RTP

Test Method level and Test Class level


REPiR vs JUPTA/Coverage-based RTP

REPiR with HDiff at method level and with LDiff at class level outperforms JUPTA and coverage-based RTP

At test method level

APFD for REPiR HDiff is 0.76

APFD for JUPTA (JMA) is 0.72

APFD for CSA is 0.73

At test class level

APFD for REPiR LDiff is 0.81

APFD for JUPTA (JMA) is 0.76

APFD for CSA is 0.7

Significance of results

REPiR LDiff & HDiff working better than any total strategy was statistically significant

REPiR's better performance against additional strategies was not statistically significant
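The slide reports APFD (Average Percentage of Faults Detected) without defining it. Under the standard definition, for n tests and m faults:

APFD = 1 - (TF_1 + ... + TF_m) / (n m) + 1 / (2n)

Here TF_i is the 1-based position of the first test that reveals fault i, and higher values are better. The sketch below assumes that every fault is revealed by at least one test. `Apfd` is a hypothetical name.

```java
import java.util.*;

/** APFD (Average Percentage of Faults Detected) for one prioritized test order. */
final class Apfd {
    /**
     * @param order        prioritized test names
     * @param faultsByTest faults revealed by each test
     * @param numFaults    total number of faults m (each must be revealed by some test)
     */
    static double apfd(List<String> order, Map<String, Set<String>> faultsByTest, int numFaults) {
        int n = order.size();
        Map<String, Integer> firstDetection = new HashMap<>();
        for (int i = 0; i < n; i++) {
            for (String fault : faultsByTest.getOrDefault(order.get(i), Collections.emptySet())) {
                firstDetection.putIfAbsent(fault, i + 1); // TF_i: 1-based position of first detection
            }
        }
        if (firstDetection.size() != numFaults) {
            throw new IllegalArgumentException("every fault must be detected by some test");
        }
        int sum = 0;
        for (int tf : firstDetection.values()) {
            sum += tf;
        }
        return 1.0 - (double) sum / ((double) n * numFaults) + 1.0 / (2.0 * n);
    }
}
```

Take tests T1 to T4, where only T2 reveals F1 and only T4 reveals F2. The order T1, T2, T3, T4 scores 0.375, while the reverse order scores 0.625.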


Summary and Future

IR-based analysis of SW artifacts is an exciting

area of research

Initial results are very encouraging

Language independent, scalable, efficient, and competitive

Future

Explore other areas of SW artifact analysis

Explore hybrid approaches – e.g., using IR-based techniques in conjunction with dynamic and/or static techniques

Explore new IR search and IR evaluation techniques with IR colleagues (Matt Lease, UT; Dave Binkley and Dawn Lawrie, Loyola)
