Chapter 7

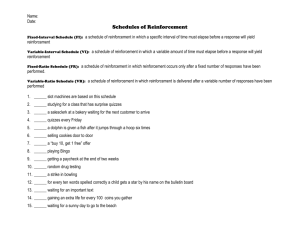

Learning: Chapter 7: Instrumental Conditioning
W. J. Wilson, Psychology
November 8, 2010

1. Reinforcement vs. Contiguity
• Thorndike (a reinforcement theorist):
  – Law of effect: positive consequences strengthen responses; negative consequences weaken responses.
  – Cat in “puzzle box”: the cat gradually learns to solve it, because successful Rs become more likely.
• Guthrie (a contiguity theorist):
  – Reinforcement is not necessary: when an S and an R occur together, they become associated.
  – Learning requires only 1 trial.
    ∗ But what about the gradual learning curve?
    ∗ Stimulus elements: only the portions of S being attended to are associated with R on each trial, so many trials are needed before the whole S is associated with R.
  – What about the obvious effects of reinforcement (e.g., a rat runs faster when reinforced at the end of a runway)?
  – *** Reinforcement acts as an especially salient stimulus, and is thus available to be associated with an R. The runway alone is associated with running (the last R made in the runway alone was running). Post-reward competing Rs are associated with runway + food. Therefore, competing Rs are NOT associated with the runway by itself, so the rat runs in the runway alone.

2. Reinforcement as motivation (Tolman)
• Behavior is a means to an end; it is flexible.
• Rs are not learned; rather, S is learned about.
• S-S theorist (Thorndike & Guthrie were S-R theorists)
• Macfarlane (1930): trained rats to swim through a maze for a reward, then drained the maze. Rats ran to the goal, so the R of swimming was not what had been learned.
• Tolman et al. (1946): two important studies:
  – Rats learn a circuitous path to a feeding station. When this path is replaced by straight arms, they run directly to the station.
  – Rats in a plus maze learn either Always Go West or Always Turn Left. The first is much easier for them to learn: they are learning about the location of food, not the R to make.
• Tolman & Honzik (1930)
  – Rats in a 14-unit T-maze.
  – Reinf group, NotReinf group, and NotReinf-Reinf group. On Trial 11, rats in the NR-R group get a food reward for the first time.
  – R rats learn the maze (as indicated by a gradual reduction in errors).
  – NR rats never reduce errors much.
  – NR-R rats perform better than R rats once they are reinforced.
  – Latent learning!
  – Cognitive map
  – Reinforcer was a motivator.
• Tolman emphasized the Learning/Performance distinction.

3. Skinner’s Operant psychology
• Skinner was “atheoretical”: he focused entirely on S & R and believed the mind (and even the brain) was irrelevant to an understanding of behavior.
• Operants: Rs that operate on the environment (& can be made whenever the animal wants, hence they seem voluntary)
• Superstitious behaviors
• Operants are under stimulus control: S^D and S^Δ
• Conditioned reinforcers are important: they can become S^Ds for additional Rs, leading to behavior chains.
• Skinner developed the cumulative recorder (on a cumulative record, slope represents rate of responding).

4. Schedules of reinforcement
• Fixed Ratio (FR1 is a special case: continuous reinforcement)
• Fixed Interval: scalloped cumulative record
• Variable Ratio: highest rate of responding
• Variable Interval: slow, steady rate
• Others are possible: e.g., DRL (differential reinforcement of low rates)
• Partial Reinforcement Effect

5. Choice
• Concurrent schedules allow examination of choice behavior.
• Herrnstein (1961) Matching Law:
  $$\frac{B_1}{B_1 + B_2} = \frac{R_1}{R_1 + R_2} \qquad (1)$$
• i.e., behavior occurs in proportion to the extent to which it is reinforced.
• Choice is everywhere: even if only 1 behavior is being measured, many others are always available to the subject.
• Herrnstein (1970) Quantitative Law of Effect:
  $$B_1 = \frac{K R_1}{R_1 + R_O} \qquad (2)$$
• K: constant reflecting all possible behavior in a given situation
• R_O: constant reflecting total reinforcement value of all other behaviors
• K & R_O will vary from animal to animal.
• Bouton suggests implications for understanding the attraction of drugs for some people, especially those with low R_O.

6. Impulsiveness
• Animals (human and non-human) will select a small, soon reward over a large, delayed reward.
• If the choice is made well in advance of the rewards, the larger one is usually chosen, even if it comes later.
• Rachlin suggests that “pre-commitment” can avoid impulsive choice.
• Implications for behavior on “Deal or No Deal?”

7. Behavioral Economics: Are All Reinforcers Alike?
• Tinklepaugh (1928): monkeys, banana, lettuce. Tells us about substitutability, cognition.
• Reinforcers can be substitutes, independent, or complements.
• Meta-analysis of drugs as reinforcers:
  – PCP cost goes up, alcohol substitutes.
  – Alcohol cost goes up, PCP independent!
  – Alcohol cost goes up, cigarettes act as complements.
• An understanding of substitutability is necessary to an understanding of reinforcement.

8. Theories of Reinforcement
• Hull’s Drive Reduction
  – Drive arises from need: a shortage of a biological essential.
  – Drive reduction is reinforcing.
  – BUT: many reinforcers are unrelated to need.
• Premack Principle
  – A more-preferred R will reinforce a less-preferred R.
  – Measure which of two Rs is more preferred; one can then safely predict that it will reinforce the other one.
  – Sometimes an adequate or appropriate measure of preference is difficult to obtain.
• Behavior Regulation Theory (response deprivation hypothesis)
  – There is a preferred level for every R.
  – If an R is prevented to a point below its preferred level, the animal will engage in it more when given the opportunity.
  – The result is that a “less-preferred” R, if prevented, will then reinforce a more-preferred R.
  – “Bliss point” illustrates the preferred level of each of two Rs when preference is measured.
  – When a schedule of reinforcement constrains the extent to which the Rs can occur, the level of each will achieve the “minimum distance” to the bliss point.
  – Behavior regulation theory works well for ratio schedules, but Matching does better for interval schedules.
• Selection by Consequences
  – Recent interest in application of natural selection to behavioral choice and reinforcement.
  – Variation in behavior ensures that multiple Rs are available; consequences select the ones that “survive.”
  – It remains to be seen how valuable this approach is to understanding reinforcement & instrumental conditioning.
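
Worked example for Section 5 (Choice). The sketch below simply evaluates Equations (1) and (2) for made-up numbers, to show how a low R_O raises the predicted rate of the measured behavior. The function names and all reinforcement rates are hypothetical and are not taken from the lecture or from Herrnstein's data.

```python
def matching_share(r1: float, r2: float) -> float:
    """Share of behavior predicted for option 1 by Eq. (1): R1 / (R1 + R2)."""
    total = r1 + r2
    if total <= 0:
        raise ValueError("total reinforcement must be positive")
    return r1 / total


def quantitative_law(r1: float, k: float, r_o: float) -> float:
    """Response rate predicted by Eq. (2): B1 = K * R1 / (R1 + R_O)."""
    if r1 + r_o <= 0:
        raise ValueError("R1 + R_O must be positive")
    return k * r1 / (r1 + r_o)


# Hypothetical concurrent schedule: option 1 pays off twice as often as option 2.
share = matching_share(r1=40, r2=20)

# Same R1 and K, but two hypothetical subjects with different R_O
# (reinforcement available from everything else they could be doing).
few_alternatives = quantitative_law(r1=30, k=100, r_o=10)
many_alternatives = quantitative_law(r1=30, k=100, r_o=90)

print(f"share of behavior on option 1: {share:.2f}")
print(f"B1 with low R_O:  {few_alternatives:.1f}")
print(f"B1 with high R_O: {many_alternatives:.1f}")
```

With these illustrative values, the subject with few alternative reinforcers responds three times as much for the same R1, which is the pattern behind Bouton's point about low R_O.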
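
Worked example for Section 3 (cumulative record). A minimal sketch, assuming responses are logged as timestamps in seconds: the height of the record at time t is the number of responses so far, and the slope between two times is the response rate. The timestamps and function names are invented for illustration.

```python
from bisect import bisect_right


def cumulative_count(response_times, t):
    """Height of the cumulative record at time t: responses made at or before t."""
    return bisect_right(sorted(response_times), t)


def response_rate(response_times, t_start, t_end):
    """Slope of the cumulative record between t_start and t_end (responses/second)."""
    if t_end <= t_start:
        raise ValueError("t_end must be later than t_start")
    rise = cumulative_count(response_times, t_end) - cumulative_count(response_times, t_start)
    return rise / (t_end - t_start)


# Hypothetical session: rapid responding at first, then slow responding.
times = [1, 2, 3, 4, 5, 12, 19, 26]

steep = response_rate(times, 0, 5)     # 5 responses in 5 s
shallow = response_rate(times, 5, 26)  # 3 responses in 21 s

print(f"early slope: {steep:.2f} responses/s")
print(f"late slope:  {shallow:.2f} responses/s")
```

A steep stretch of the record therefore corresponds to fast responding and a flat stretch to a pause, which is how the schedule patterns in Section 4 are read off a record.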
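
Worked example for Section 6 (Impulsiveness). The notes do not give a formula for how delay reduces a reward's value. The sketch below assumes a hyperbolic discounting form, value = amount / (1 + k × delay), which is one form commonly used in the delay-discounting literature; the discount parameter k, the amounts, and the delays are all hypothetical. Under that assumption, the same pair of rewards yields the impulsive choice when the small reward is imminent and the self-controlled choice when the decision is made well in advance.

```python
def discounted_value(amount: float, delay: float, k: float = 1.0) -> float:
    """Assumed hyperbolic discounting: value falls as amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay)


def preferred(small, large, lead_time: float, k: float = 1.0) -> str:
    """Pick between two (amount, delay) rewards, with lead_time added to both delays."""
    v_small = discounted_value(small[0], small[1] + lead_time, k)
    v_large = discounted_value(large[0], large[1] + lead_time, k)
    return "small-soon" if v_small > v_large else "large-late"


# Hypothetical rewards: 2 units after 1 s versus 5 units after 6 s.
small_soon = (2.0, 1.0)
large_late = (5.0, 6.0)

print(preferred(small_soon, large_late, lead_time=0))   # choice made at the last moment
print(preferred(small_soon, large_late, lead_time=20))  # choice made 20 s in advance
```

Choosing at the longer lead time and being unable to change one's mind afterwards is the kind of “pre-commitment” Rachlin describes.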
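
Worked example for Section 8 (“minimum distance” to the bliss point). A minimal geometric sketch, assuming a ratio schedule that allows one unit of the contingent R for every n units of the instrumental R, and assuming plain Euclidean distance with both Rs weighted equally. Both assumptions, and the numbers, are illustrative rather than taken from the lecture. The predicted outcome is the point on the schedule line closest to the bliss point.

```python
def min_distance_point(bliss, n: float):
    """Closest point to `bliss` on the ratio-schedule line contingent = instrumental / n.

    bliss is (instrumental, contingent) at free baseline. The line passes through
    the origin with direction (n, 1), so the answer is an orthogonal projection.
    """
    if n <= 0:
        raise ValueError("ratio requirement n must be positive")
    x_b, y_b = bliss
    t = (x_b * n + y_b) / (n * n + 1.0)  # projection coefficient along (n, 1)
    return (t * n, t)


# Hypothetical baseline: 10 lever presses and 50 licks per session when both are free.
bliss_point = (10.0, 50.0)

# Schedule: one lick allowed per lever press (n = 1).
presses, licks = min_distance_point(bliss_point, n=1)

print(f"predicted lever presses: {presses:.1f} (baseline 10)")
print(f"predicted licks:         {licks:.1f} (baseline 50)")
```

In this made-up case the constrained animal presses more than at baseline and drinks less than at baseline, settling for a compromise between the two preferred levels.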