Application of Signal Processing to the Analysis of Financial Data

advertisement

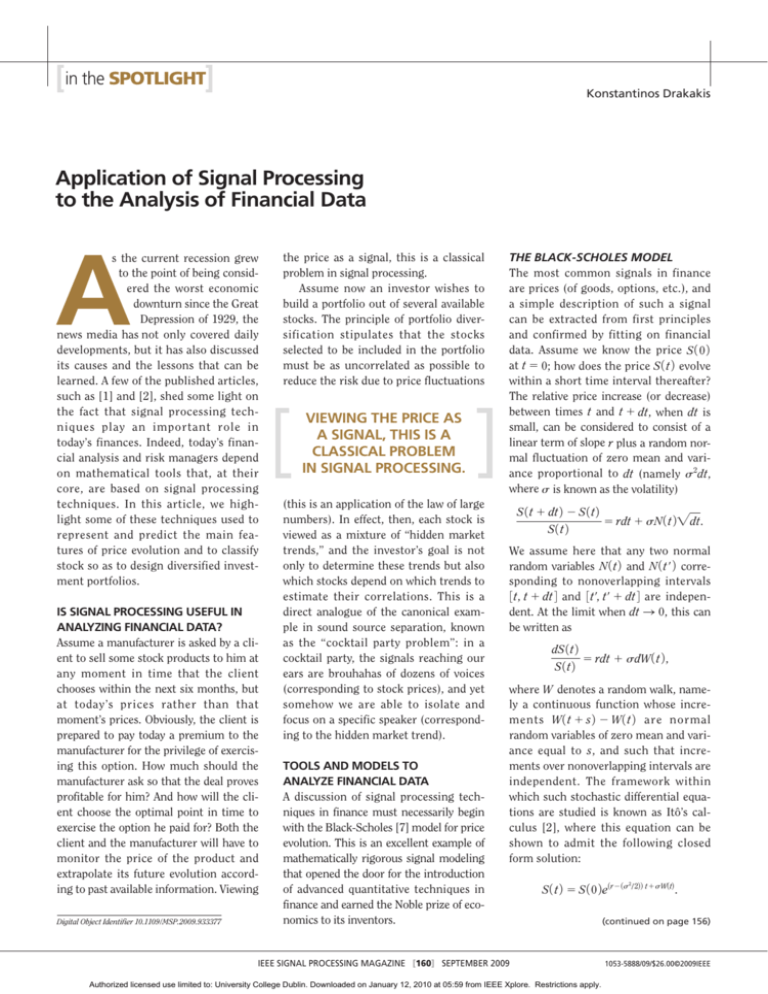

[in the SPOTLIGHT] Konstantinos Drakakis Application of Signal Processing to the Analysis of Financial Data A s the current recession grew to the point of being considered the worst economic downturn since the Great Depression of 1929, the news media has not only covered daily developments, but it has also discussed its causes and the lessons that can be learned. A few of the published articles, such as [1] and [2], shed some light on the fact that signal processing techniques play an important role in today’s finances. Indeed, today’s financial analysis and risk managers depend on mathematical tools that, at their core, are based on signal processing techniques. In this article, we highlight some of these techniques used to represent and predict the main features of price evolution and to classify stock so as to design diversified investment portfolios. IS SIGNAL PROCESSING USEFUL IN ANALYZING FINANCIAL DATA? Assume a manufacturer is asked by a client to sell some stock products to him at any moment in time that the client chooses within the next six months, but at today’s prices rather than that moment’s prices. Obviously, the client is prepared to pay today a premium to the manufacturer for the privilege of exercising this option. How much should the manufacturer ask so that the deal proves profitable for him? And how will the client choose the optimal point in time to exercise the option he paid for? Both the client and the manufacturer will have to monitor the price of the product and extrapolate its future evolution according to past available information. Viewing Digital Object Identifier 10.1109/MSP.2009.933377 the price as a signal, this is a classical problem in signal processing. Assume now an investor wishes to build a portfolio out of several available stocks. The principle of portfolio diversification stipulates that the stocks selected to be included in the portfolio must be as uncorrelated as possible to reduce the risk due to price fluctuations VIEWING THE PRICE AS A SIGNAL, THIS IS A CLASSICAL PROBLEM IN SIGNAL PROCESSING. (this is an application of the law of large numbers). In effect, then, each stock is viewed as a mixture of “hidden market trends,” and the investor’s goal is not only to determine these trends but also which stocks depend on which trends to estimate their correlations. This is a direct analogue of the canonical example in sound source separation, known as the “cocktail party problem”: in a cocktail party, the signals reaching our ears are brouhahas of dozens of voices (corresponding to stock prices), and yet somehow we are able to isolate and focus on a specific speaker (corresponding to the hidden market trend). TOOLS AND MODELS TO ANALYZE FINANCIAL DATA A discussion of signal processing techniques in finance must necessarily begin with the Black-Scholes [7] model for price evolution. This is an excellent example of mathematically rigorous signal modeling that opened the door for the introduction of advanced quantitative techniques in finance and earned the Noble prize of economics to its inventors. THE BLACK-SCHOLES MODEL The most common signals in finance are prices (of goods, options, etc.), and a simple description of such a signal can be extracted from first principles and confirmed by fitting on financial data. Assume we know the price S 1 0 2 at t 5 0; how does the price S 1 t 2 evolve within a short time interval thereafter? The relative price increase (or decrease) between times t and t 1 dt, when dt is small, can be considered to consist of a linear term of slope r plus a random normal fluctuation of zero mean and variance proportional to dt (namely s2dt, where s is known as the volatility) S 1 t 1 dt 2 2 S 1 t 2 5 rdt 1 sN 1 t 2 "dt. S1t2 We assume here that any two normal random variables N 1 t 2 and N 1 tr 2 corresponding to nonoverlapping intervals 3 t, t 1 dt 4 and 3 tr, tr 1 dt 4 are independent. At the limit when dt S 0, this can be written as dS 1 t 2 5 rdt 1 sdW 1 t 2 , S1t2 where W denotes a random walk, namely a continuous function whose increments W 1 t 1 s 2 2 W 1 t 2 are normal random variables of zero mean and variance equal to s, and such that increments over nonoverlapping intervals are independent. The framework within which such stochastic differential equations are studied is known as Itô’s calculus [2], where this equation can be shown to admit the following closed form solution: S 1 t 2 5 S 1 0 2 e1r2 1s / 222 t1sW1t2. IEEE SIGNAL PROCESSING MAGAZINE [160] SEPTEMBER 2009 Authorized licensed use limited to: University College Dublin. Downloaded on January 12, 2010 at 05:59 from IEEE Xplore. Restrictions apply. 2 (continued on page 156) 1053-5888/09/$26.00©2009IEEE [in the SPOTLIGHT] continued from page 160 This is the basic form of the BlackScholes model. Comparison to real data reveals a certain degree of oversimplification: it is not the final answer, but it certainly is a very successful first step, which admits further extensions. For example, to model price evolution over longer time intervals, the obvious solution is to extend the model to include time-varying (or even stochastic, as a further step) r and s, so that dS 1 t 2 5 r 1 t 2 dt 1 s 1 t 2 dW 1 t 2 . S1t2 Even this extension, however, cannot account for the large price jumps occasionally observed over very small time intervals and that are not captured by normal fluctuations. We can include such jumps in the model by adding a jump process J 1 t 2 , namely a piecewise constant function whose interjump times are independent and whose jumps are typically given by a distribution that lacks a variance and often even a mean (e.g., a Cauchy distribution) dS 1 t 2 5 r 1 t 2 dt 1 s 1 t 2 dW 1 t 2 1 dJ 1 t 2 . S1t2 Establishing a rigorous model for price evolution is important from a theoretical point of view, but, in practice, we seek signal processing techniques to analyze data sets (e.g., of actual price evolution). For example, a technique found to perform very well in the cocktail party problem/portfolio creation mentioned in the introduction is nonnegative matrix factorization (NMF). NONNEGATIVE MATRIX FACTORIZATION NMF is very simple: we collect the m observed signals of length n into an m 3 n array Y (adding perhaps a constant to each signal to form nonnegative signals), and we determine an m 3 k array A (the mixing array) and a k 3 n array X (the sources array), such that the factorization error e 5 Y 2 AX is minimized. The number of sources k is usually determined through a “threshold effect:” increasing k beyond a certain value does not reduce the error significantly. The arrays A and X can be determined through an iterative process. Based on A, a clustering technique (e.g., k-means clustering) can be used to group the observed signals according to the sources they depend on. In practical terms, this means that we can now choose one stock out of each group and build our portfolio. In the traditional approach to investing, stock groups were practically a nonissue, because they were taken (by mere convention, apparently) to reflect different sectors: in other words, IT stocks were grouped together in a group, pharmaceuticals stocks were grouped together in a separate group, etc. Applications of TODAY’S RECESSION IS WIDELY RECOGNIZED TO BE A MANIFESTATION OF A CHANGE IN THE RULES OF THE FINANCIAL GAME. NMF on actual stock data (e.g., the Dow-Jones index [4]), however, led to the perhaps shocking result that, contrary to common belief, grouping does not reflect sector partition but rather spans across sectors. This result stresses the importance of analyzing and monitoring the market instead of relying on intuition. EMPIRICAL MODE DECOMPOSITION The Black-Scholes model expresses the price variation of a stock in terms of a trend plus a fluctuation. As mentioned above, though, this is a theoretical model that oversimplifies reality. In actual data sets, fluctuation components over different time scales are observed: coarse time scales reflect periodicities meaningful to analysis and prediction, while fine time scales reflect noise. Both the trend and the fluctuations are important for the prediction of future values of the stock. How can all these components (trend and fluctuations) be meaningfully and reliably determined? Periodicities are usually detected through application of the Fourier transform, which, in our case, however, suffers from two obvious drawbacks: a) it cannot cope with the nonstationarity introduced by the presence of the trend, and b) it chooses its expansion functions (sines and cosines) in advance, hence it tends to over-decompose: for example, a periodic function that is close to (but not exactly) a sine will be expanded into an infinite series. Localized expansions, such as the windowed Fourier transform or the wavelet transform, address the former issue but still suffer from the latter. The empirical mode decomposition (EMD) [5] is specifically designed to expand a function into a trend plus a number of intrinsic mode functions (IMFs), whose defining features are a) that all local minima and maxima are negative and positive, respectively, and b) that their local mean is zero. Clearly, sines and cosines fall in this class, so IMFs can be viewed as “sines with slowly varying amplitude and frequency.” To apply EMD, fit a (cubic) spline interpolant through all local maxima of the function (upper envelope), and do the same for local minima (lower envelope); then, compute the pointwise average of the two interpolants (the local mean) and subtract it from the function. Repeat this process until the local mean is zero everywhere (within some preset tolerance). The resulting function is the first IMF; this process is depicted in Figure 1 for an example signal. Subtract this IMF from the original function and repeat on the remainder function to obtain the second IMF. Repeat until all IMFs have been recovered and the remainder does not have enough local extrema to continue: this is the trend. PHASE-RECTIFYING SIGNAL AVERAGING An alternative approach to determining hidden periodicities in price variations, which can also be applied in conjunction with EMD, is phase-rectifying signal averaging (PRSA) [6]. Here, we adopt the point of view that the signal at hand is essentially the sum of periodic signals plus some impurities (such as white noise and spikes). PRSA constructs a new signal, where periodicities appear stronger and impurities weaker, so that IEEE SIGNAL PROCESSING MAGAZINE [156] SEPTEMBER 2009 Authorized licensed use limited to: University College Dublin. Downloaded on January 12, 2010 at 05:59 from IEEE Xplore. Restrictions apply. periodicities can be reliably detected by applying ordinary Fourier transform. For this, we assume the signal is stationary and, in particular, detrended: trend is removed either by linear (or, in general, polynomial) fitting, or through EMD. To apply PRSA, we first determine the m anchor points of the signal S, namely the points of increase i where S 1 i 2 . S 1 i 2 1 2 , labeled as i1, c, im, and place a (rectangular) window of length 2L around each with the anchor point in the middle. We then “fold’’ the signal, averaging over all windows. More explicitly, we construct the auxiliary signal 1 m , S1 n 2 5 a S 1 n 1 ij 2 , m j51 n 5 2L, c, 21, 0, c L 2 1 4 3 2 1 0 –1 –2 –3 –4 –5 0 0.1 0.2 0.3 0.4 0.5 (a) 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 (b) 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 (c) 0.6 0.7 0.8 0.9 1 3 2.5 (edge effects can be dealt with in several obvious ways). Finally, we take the , Fourier transform of S . A windowed version of PRSA exists where PRSA is applied on sections of S, just like the windowed Fourier transform. THE FUTURE OF SIGNAL PROCESSING IN FINANCE Today’s recession is widely recognized to be a manifestation of a change in the rules of the financial game. Markets expand due to globalization; investors’ numbers increase; investors are offered an increasing number of increasingly complex alternatives to invest in; abundant computational power gives investors the potential to be (or the illusion of being) smarter. Indeed, today’s recession is partly (mostly or exclusively, according to some) attributed to mispricing “exotic” investment options, a result of glorifying brute force (i.e., computational power) at the expense of the models and techniques used. Finance and economy developed for centuries without reference to advanced mathematics (and signal processing). Economists were trained by a combination of tradition and psychology, being taught for each situation what the traditionally appropriate action is, and above all to develop and trust their instinct. Indeed, most established economy and finance textbooks (such as [8] 2 1.5 1 0.5 0 –0.5 –1 –1.5 1.5 1 0.5 0 –0.5 –1 –1.5 [FIG1] Showing the (a) first, (b) second, and (c) fifth iteration of the EMD algorithm on a signal: the signal appears in blue, the upper envelope in green, the lower envelope in red, and the local mean in light blue. It is clear that, as the iterations progress, the upper and lower envelopes tend to become mirror images of each other and the local mean tends identically to zero; as for the signal itself, it converges to the first IMF. IEEE SIGNAL PROCESSING MAGAZINE [157] SEPTEMBER 2009 Authorized licensed use limited to: University College Dublin. Downloaded on January 12, 2010 at 05:59 from IEEE Xplore. Restrictions apply. [in the SPOTLIGHT] continued and [9]) tended to keep mathematical prerequisites to an absolute minimum (and only in their more recent editions did they reverse this policy). Signal analysts were unaware of the potential of their techniques in finance , simply because the financial system, organized in its own way, never gave the impression of needing their help, and financiers, lacking a mathematical education, did not seek answers to their plights in the realm of mathematics. The pioneering work of Fischer Black and Myron Scholes changed all that. As a result of their work, mathematical finance is offered as a course in more and more mathematics and engineering departments, and an increasing number of financiers and economists are trained in computer simulations using mathematical models and in financial analysis using advanced signal processing techniques. [best of THE WEB] Careful quantitative risk analysis seems to be the antidote to today’s recession. The “mathematization’’ of the financial system places policy making in the hands of the experts and increases investors’ trust, as decisions are directly based on realistic, observable, and reproducible quantitative analysis instead of the “good hunch” of a “market-seasoned veteran.” Signal processing is the reliable way to our financial future. AUTHOR Konstantinos Drakakis (Konstantinos. Drakakis@ucd.ie) is a research fellow in the School of Electrical, Electronic, and Mechanical Engineering at University College Dublin (UCD), Ireland. He is also affiliated with UCD’s Complex and Adaptive Systems Laboratory and with the Claude Shannon Institute for Discrete Mathematics, Coding Theory, and Cryptography. REFERENCES [1] J. Nocera. (2009, Jan. 4). Risk mismanagement. NY Times Mag. [Online]. Available: http://www.nytimes. com/2009/01/04/magazine/04risk-t.html [2] (2009, Jan. 18). Letters to the editor: Risk management. New York Times Mag. [Online]. Available: http://www. nytimes.com/2009/01/18/magazine/18letters-t-. html [3] B. Oksendal, Stochastic Differential Equations: An Introduction with Applications, 6th ed. New York: Springer-Verlag, 2003. [4] K. Drakakis, S. Rickard, R. de Frein, and A. Cichocki, “Analysis of financial data using non-negative matrix factorization,” Int. Math. Forum, vol. 3, no. 38, pp. 1853–1870, 2008. [5] N. E. Huang, Z. Shen, S. R. Long, M. C. Wu, E. H. Shih, Q. Zheng, C. C. Tung, and H. H. Liu, “The empirical mode decomposition method and the Hilbert spectrum for non-stationary time series analysis,” Proc. R. Soc. Lond. A, vol. 454, no. 1971, pp. 903–995, 1998. [6] A. Bauer, J. W. Kantelhardt, A. Bunde, P. Barthel, R. Schneider, M. Malik, and G. Schmidt, “Phaserectified signal averaging detects quasi-periodicities in non-stationary data,” Physica A, vol. 364, pp. 423–434, 2006. [7] I. Karatzas and S. Shreve, Methods of Mathematical Finance. New York: Springer-Verlag, 2001. [8] R. Dornbusch, S. Fischer, and R. Startz, Macroeconomics, 10th ed. New York: McGrawHill, 2007. [9] G. Hubbard, Money, the Financial System, and the Economy, 6th ed. Reading, MA: Addison-Wesley, 2007. [SP] continued from page 154 genes, clustering, and classification. Many existing computing technologies such as statistic inference, neural network, data mining, and pattern recognition work well on these tasks. Some of these tools are open source software so that you are free to copy and redistribute them. MeV is one of them and is a good starting point if you are going to see what you can do about gene expression analysis. It integrates several popular analysis tools [e.g., hierarchical clustering, K-means, significance analysis of microarrays (SAM), and clustering affinity search technique (CAST)] and supports various data formats. It can give you an idea of what you need for analysis of gene expression data. SYSTEMS BIOLOGY http: //cytoscape.org/ (Cytoscape) http: //www.genome.jp/kegg/ (Kyoto E nc yclo p e dia o f G e ne s a nd Genomes, KEGG) http: / / w w w . b i o c o n d u c t o r . o r g / (Bioconductor) [tutorials] Instead of just analyzing individual components, systems biology, which focuses on several components simultaneously, is the systematic study of complex or integrated interactions of gene, proteins, and biological reactions. The goal of systems biology is to allow people to delve deeper into the foundation of biological activities and have a more general view on the function or mechanism of gene and protein. Because of the complex system, it needs interdisciplinary studies that involve statistics, computation, chemistry, and other fields to discover the biological silhouette. These systems biology works usually include a network of interaction or biological signal pathway. KEGG is one of the most famous pathway databases, and, by some knowledge-based methods, it provides information of cell and organism behaviors at the genomic and molecular level. As for the network of interaction, Cytoscape gives the convenient visualization of molecular interaction networks and has many open source plug-in modules to help users integrate the network with the data of the gene profiles or analyze the properties of the network and subnetwork. Bioconductor is a powerful open source and open development software project for the analysis and comprehension of genomic data. Because it is based on the R project (http://www. r-project.org/), users have many resources that they can do for data analysis, such as gene expression analysis, sequence and genome analysis, structural analysis, statistical analysis, and computational modeling. AUTHOR Jung-Hsien Chiang (jchiang@mail. ncku.edu.tw) is a professor in the Department of Computer Science and Information Engineering at National Cheng Kung University, Taiwan. He is currently a visiting professor with the Institute for Systems Biology, Seattle, Washington. [SP] IEEE SIGNAL PROCESSING MAGAZINE [158] SEPTEMBER 2009 Authorized licensed use limited to: University College Dublin. Downloaded on January 12, 2010 at 05:59 from IEEE Xplore. Restrictions apply.