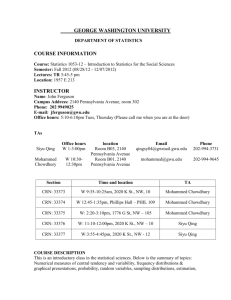

CS 211: Computer Architecture

advertisement

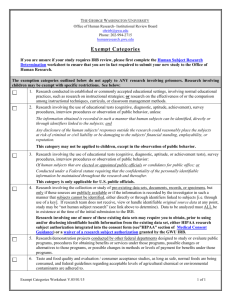

Summary: Architecture Trends ? CS 211: Computer Architecture x Moore’s law: density doubles every 18-24 months ¾ ¾ smaller processors, faster clocks Price drops due to volume and dev. costs what next? x Interconnect delays could dominate over feature delay Instructor: Prof. Bhagi Narahari Dept. of Computer Science Course URL: www.seas.gwu.edu/~narahari/cs211/ ¾ ¾ Need for simpler architectures Distributed logic and control x More functionality ¾ ¾ communicating processors network of embedded processors x To extract max performance ¾ ¾ Thumb rules: Amdahl’s law, Parallelism, Locality Software and compiler support needed!!! CS 211: Bhagi Narahari,CS, GWU Next: Review Computer Organization in an hour! x Overview of Computer Organization ¾ ¾ Components Sample processor design process Review: Computer Organization Basics x What are the components of a CPU x What is the microarchitecture level ? x What is an ISA - Instruction set architecture ? x How does a sample processor design look ? ¾ A simple processor architecture x what is the basic concept of pipelining CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 1 A Computer Input Device Memory Unit x An ordered sequence of storage cells, each capable of holding a piece of data. x Volatile Memory Output Device Central Processing Unit Input Device ¾ Auxiliary Storage Device RAM – Random Access Memory x Non-volatile Memory ¾ ROM – Read Only Memory Memory The computer is composed of input devices, a central processing unit, a memory unit and output devices. CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU Memory Hierarchy: The Tradeoff Computer System cache Processor Processor CPU CPU Cache Cache regs regs Memory-I/O Memory-I/Obus bus Memory Memory virtual memory interrupts I/O I/O controller controller disk Disk disk Disk I/O I/O controller controller I/O I/O controller controller Display Display Network Network size: speed: $/Mbyte: block size: C a c h e register reference L1-cache reference 608 B 1.4 ns 128k B 4.2 ns 4B 4B 16 B C a c h e 8B L2-cache reference 512kB -- 4MB 16.8 ns $90/MB 16 B Memory Memory memory reference 128 MB 112 ns $2-6/MB 4-8 KB 4 KB disk disk disk memory reference 27GB 9 ms $0.01/MB larger, slower, cheaper (Numbers are for a 21264 at 700MHz) CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 2 Central Processing Unit (CPU) x The CPU has two components: ¾ Arithmetic and Logic Unit (ALU) ¾ ¾ ¾ Performs arithmetic operations Performs logical operations Control Unit ¾ Controls the action of the other computer components so that instructions are executed in the correct sequence x Note: we will get back to Memory design after covering processor design Input/Output (I/O) Devices x I/O devices are the components of a computer system that accepts data to be processed and presents the results of the processing x Input Device Examples ¾ ¾ Keyboard Mouse x Output Device Examples ¾ ¾ Video display Printer x We won’t touch on this in this course! CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU The Operating System x The Operating System (OS) is a set of programs that manages all of the computer’s resources x Unix, Linux, Windows 98, Me, NT, 2000, XP, and MacOS are all examples of modern operating systems x Not the focus of this course! CS 211: Bhagi Narahari,CS, GWU Computer Architecture x The computer architecture encompasses the user’s view of the computer. x This includes such things as the assembly language instruction set, the number and types of internal registers, the memory management system and the model for exception handling. CS 211: Bhagi Narahari,CS, GWU 3 Architecture Models: Von Neumann architecture CPU + memory x Memory holds data, instructions. x Central processing unit (CPU) fetches instructions from memory. ¾ Separate CPU and memory distinguishes programmable computer. x CPU registers help out: program counter (PC), instruction register (IR), generalpurpose registers, etc. CS 211: Bhagi Narahari,CS, GWU address memory CPU ADD r5,r1,r3 200 ADD IR r5,r1,r3 CS 211: Bhagi Narahari,CS, GWU Harvard architecture address data memory data address PC CPU von Neumann vs. Harvard x Harvard can’t use self-modifying code. x Harvard allows two simultaneous memory fetches. x Most DSPs use Harvard architecture for streaming data: ¾ ¾ program memory CS 211: Bhagi Narahari,CS, GWU 200 PC data greater memory bandwidth; more predictable bandwidth. data CS 211: Bhagi Narahari,CS, GWU 4 Instruction Set Architecture x The Instruction Set Architecture (ISA) describes a set of instructions whose syntactic and semantic characteristics are defined by the underlying computer architecture. CS 211: Bhagi Narahari,CS, GWU Programming model x Programming model: registers visible to the programmer. x Some registers are not visible (IR). CS 211: Bhagi Narahari,CS, GWU Multiple implementations x Successful architectures have several implementations: ¾ ¾ ¾ ¾ varying clock speeds; different bus widths; different cache sizes; etc. Assembly language x One-to-one with instructions (more or less). x Basic features: ¾ ¾ ¾ ¾ CS 211: Bhagi Narahari,CS, GWU One instruction per line. Labels provide names for addresses (usually in first column). Instructions often start in later columns. Columns run to end of line. CS 211: Bhagi Narahari,CS, GWU 5 Computer Architecture is ... Instruction Set Architecture (ISA) Instruction Set Architecture software instruction set Organization hardware Hardware CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU Interface Design Evolution of Instruction Sets Single Accumulator (EDSAC 1950) A good interface: • Lasts through many implementations (portability, compatability) • Is used in many differeny ways (generality) Accumulator + Index Registers (Manchester Mark I, IBM 700 series 1953) Separation of Programming Model from Implementation • Provides convenient functionality to higher levels • Permits an efficient implementation at lower levels use Interface use use CS 211: Bhagi Narahari,CS, GWU imp 1 time High-level Language Based (B5000 1963) General Purpose Register Machines Complex Instruction Sets imp 2 Concept of a Family (IBM 360 1964) (Vax, Intel 432 1977-80) imp 3 Load/Store Architecture (CDC 6600, Cray 1 1963-76) RISC (Mips,Sparc,HP-PA,IBM RS6000,PowerPC . . .1987) CS 211: Bhagi Narahari,CS, GWU VLIW/”EPIC”?(IA-64. . .1999) 6 Evolution of Instruction Sets x Major advances in computer architecture are typically associated with landmark instruction set designs ¾ Ex: Stack vs GPR (System 360) x Design decisions must take into account: ¾ ¾ ¾ ¾ ¾ ¾ technology machine organization programming languages compiler technology operating systems applications CISC vs. RISC x Complex instruction set computer (CISC): ¾ ¾ many addressing modes; many operations. x Reduced instruction set computer (RISC): ¾ ¾ load/store; pipelined instructions. x And they in turn influence these CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU CISC Processors x Instruction decoding is performed with large microcode ROMs x Some instructions require more than a single instruction cycle to execute x Many addressing modes supported x Register set was designed to support specific functions CS 211: Bhagi Narahari,CS, GWU RISC Processors x Instruction decoding is performed with static (hard-wired) logic for a much faster result x Instructions are designed to execute in a single instruction cycle x Data processing instructions operate only on registers. Load and store instructions were designated to access memory x Register set is large and general purpose (in many cases) CS 211: Bhagi Narahari,CS, GWU 7 IA - 32 x x x x x x x x x x 1978: The Intel 8086 is announced (16 bit architecture) 1980: The 8087 floating point coprocessor is added 1982: The 80286 increases address space to 24 bits, +instructions 1985: The 80386 extends to 32 bits, new addressing modes 1989-1995: The 80486, Pentium, Pentium Pro add a few instructions (mostly designed for higher performance) 1997: 57 new “MMX” instructions are added, Pentium II 1999: The Pentium III added another 70 instructions (SSE) 2001: Another 144 instructions (SSE2) 2003: AMD extends the architecture to increase address space to 64 bits, widens all registers to 64 bits and other changes (AMD64) 2004: Intel capitulates and embraces AMD64 (calls it EM64T) and adds more media extensions IA-32 Overview x Complexity: ¾ ¾ ¾ ¾ x Saving grace: ¾ ¾ x “This history illustrates the impact of the “golden handcuffs” of compatibility -“adding new features as someone might add clothing to a packed bag” -“an architecture that is difficult to explain and impossible to love” CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU x Will use MIPS ¾ Simple RISC ISA Widely used CS 211: Bhagi Narahari,CS, GWU the most frequently used instructions are not too difficult to build compilers avoid the portions of the architecture that are slow “what the 80x86 lacks in style is made up in quantity, making it beautiful from the right perspective” Instruction set characteristics Quick look at ISA ¾ Instructions from 1 to 17 bytes long one operand must act as both a source and destination one operand can come from memory complex addressing modes e.g., “base or scaled index with 8 or 32 bit displacement” x x x x Fixed vs. variable length. Addressing modes. Number of operands. Types of operands. CS 211: Bhagi Narahari,CS, GWU 8 A "Typical" RISC - MIPS x fixed format instruction (3 formats I,R,J): ¾ 31 26 25 Op (R0 contains zero, DP take pair) x 3-address, reg-reg arithmetic instruction x Single address mode for load/store: base + displacement ¾ R-type: Register-Register 32 or 64 bits x 32 General Purpose Registers (GPR) ¾ Example: 32 bit MIPS 31 26 25 Op 31 21 20 Rs1 26 25 Rs1 31 16 15 0 0 immediate Rd 21 20 16 15 Rs2/Opx 0 immediate 26 25 Op 0 target CS 211: Bhagi Narahari,CS, GWU Processor Design. . . Instruction Types x When is memory accessed ? ¾ 6 5 Opx J-type: Jump / Call x Delayed branch CS 211: Bhagi Narahari,CS, GWU 11 10 Rd I-type: Branch Op see: SPARC, MIPS, HP PA-Risc, DEC Alpha, IBM PowerPC, CDC 6600, CDC 7600, Cray-1, Cray-2, Cray-3 16 15 Rs2 I-type: Register-Immediate (Load,Store) no indirection x Simple branch conditions (based on register values) 21 20 Rs1 How is address computed ? x Let’s glance at processor and inst. Set design. ¾ This is review material…from a typical course on Computer Organization (pre-req) x When is control flow affected ? ¾ ¾ How is branch outcome computed How is branch target address computed CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 9 The Big Picture: The Performance Perspective CPI Microarchitecture Design: How ? x Any design must attempt to meet the requirements ¾ Inst. Count x Performance of a machine is determined by: ¾ ¾ ¾ ¾ Cycle Time Where do the requirements come from ? Ex: need to represent numbers in binary; integers, text, floating point x How to proceed with design ? Instruction count Clock cycle time Clock cycles per instruction x Processor design (datapath and control) will determine: ¾ ¾ Clock cycle time Clock cycles per instruction CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU Some History… How to Design a Processor: step-by-step x 1. Analyze instruction set => datapath requirements ¾ the meaning of each instruction is given by the register transfers datapath must include storage element for ISA registers ¾ datapath must support each register transfer ¾ x The Indiana Legislature once introduced legislation declaring that the value of S was exactly 3.2 ¾ possibly more x 2. Select set of datapath components and establish clocking methodology x 3. Assemble datapath meeting the requirements x 4. Analyze implementation of each instruction to determine setting of control points that effects the register transfer. x 5. Assemble the control logic x Let’s look at a single cycle ISA… CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 10 The MIPS Instruction Formats x op ¾ R-type ¾ I-type ¾ J-type rs 6 bits 31 5 bits 21 5 bits rd shamt funct 5 bits 5 bits 6 bits 16 rs 6 bits 31 rt 5 bits 26 op immediate rt 16 bits 26 0 target address 6 bits 26 bits The different fields are: ¾ op: operation of the instruction ¾ rs, rt, rd: the source and destination register specifiers ¾ shamt: shift amount ¾ funct: selects the variant of the operation in the “op” field ¾ address / immediate: address offset or immediate value CS 211: Narahari,CS, GWU ¾Bhagi target address: target address of the jump instruction Logical Register Transfers x RTL gives the meaning of the instructions x All start by fetching the instruction Register Transfers ADDU R[rd] <– R[rs] + R[rt]; 26 op 6 bits x BRANCH: ¾ beq rs, rt, imm16 21 rs 16 rt 5 bits 5 bits 21 16 5 bits 21 5 bits 16 rs 11 funct 5 bits 5 bits 6 bits rs 5 bits 16 bits 0 immediate 5 bits 21 0 immediate rt 5 bits 0 shamt rt rs 6 rd 16 bits 16 rt 5 bits 0 immediate 16 bits • Register rs and rt are the source registers. • If the instruction has three operand register, then rd is the destination register • If the instruction has two operand register, then rt is the destination register CS 211: Bhagi Narahari,CS, GWU x Combinational Elements x Storage Elements = MEM[ PC ] inst 31 x ADD and SUB op 6 bits ¾ addU rd, rs, rt ¾ subU rd, rs, rt 31 26 op x OR Immediate: 6 bits ¾ ori rt, rs, imm16 31 26 op x LOAD and STORE Word 6 bits ¾ lw rt, rs, imm16 31 26 ¾ sw rt, rs, imm16 Step 2: Components of the Datapath op | rs | rt | rd | shamt | funct = MEM[ PC ] op | rs | rt | Imm16 0 0 5 bits op x Step 1a: The MIPS-Inst Set (eg.) All MIPS instructions are 32 bits long. The three instruction 31 26 21 16 11 6 formats: ¾ Clocking methodology PC <– PC + 4 SUBU R[rd] <– R[rs] – R[rt]; PC <– PC + 4 ORi R[rt] <– R[rs] | zero_ext(Imm16); PC <– PC + 4 LOAD R[rt] <– MEM[ R[rs] + sign_ext(Imm16)]; PC <– PC + 4 STORE MEM[ R[rs] + sign_ext(Imm16) ] <– R[rt]; PC <– PC + 4 BEQ if ( R[rs] == R[rt] ) then PC <– PC + 4 + sign_ext(Imm16)] || 00 else PC <– PC + 4 CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 11 Combinational Logic Elements (Basic Building Blocks) x Adder A Adder 32 B x MUX Storage Element: Register (Basic Building Block) CarryIn 32 x Register Sum Carry 32 ¾ Similar B input and output ¾ Write Enable input 32 ¾ Write Y A ALU B 32 ¾ asserted 32 Result CS 211: Bhagi Narahari,CS, GWU RWRA RB Write Enable 5 5 5 x busA Register File consists of 32 registers: busW 32 32 32-bit ¾ Two 32-bit output busses: 32 Registers busB busA and busB Clk 32 ¾ One 32-bit input bus: busW Register is selected by: ¾ RA (number) selects the register to put on busA (data) ¾ RB (number) selects the register to put on busB (data) ¾ RW (number) selects the register to be written via busW (data) when Write Enable is 1 Clock input (CLK) ¾ The CLK input is a factor ONLY during write operation ¾ During read operation, behaves as a combinational logic block: ¾ (0): Data Out will not change (1): Data Out will become Data 32 Storage Element: Register File x Clk In CS 211: Bhagi Narahari,CS, GWU x N Enable: ¾ negated 32 OP x ALU Data Out N ¾ N-bit MUX 32 to the D Flip FlopData In except Select A Write Enable RA or RB valid => busA or busB valid after “access time.” CS 211: Bhagi Narahari,CS, GWU Storage Element: Idealized Memory x Memory (idealized) ¾ ¾ Write Enable Data In One input bus: Data In 32 One output bus: Data Out Clk Address DataOut 32 x Memory word is selected by: ¾ ¾ Address selects the word to put on Data Out Write Enable = 1: address selects the memory word to be written via the Data In bus x Clock input (CLK) ¾ ¾ The CLK input is a factor ONLY during write operation During read operation, behaves as a combinational logic block: ¾ Address valid => Data Out valid after “access time.” CS 211: Bhagi Narahari,CS, GWU 12 Step 3: Assemble DataPath meeting our requirements Clocking Methodology Clk Setup Hold Setup Hold Don’t Care Rising Edge Falling Edge Clock Period x x x x Clocks needed in sequential logic to decide when an element that contains state should be updated. A clock is a free-running circuit with a fixed cycle time or clock period. The clock frequency is the inverse of the cycle time. The clock cycle time or clock period is divided into two portions: when the clock is high and when the clock is low. Edge-triggered clocking: all state changes occur on a clock edge. CS 211: Bhagi Narahari,CS, GWU x The common RTL operations ¾ The common RTL operations for all instructions are: (a) Fetch the instruction using the Program Counter (PC) at the beginning of an instruction’s execution (PC -> Instruction Memory -> Instruction Word). (b) Then at the end of the instruction’s execution, you need to update the Program Counter (PC -> Next Address Logic -> PC). More specifically, you need to increment the PC by 4 if you are executing sequential code. For Branch and Jump instructions, you need to update the program counter to “something else” other than plus 4. The Next Address Logic block: • Add 4 (number of bytes in an instruction) or • Branch and Jump instructions CS 211: Bhagi Narahari,CS, GWU 3a: Overview of the Instruction Fetch Unit ¾ x Register Transfer Requirements Datapath Assembly x Instruction Fetch x Read Operands and Execute Operation Fetch the Instruction: mem[PC] Update the program counter: 3b: Add & Subtract x R[rd] <- R[rs] op R[rt] Example: addU ¾ ¾ 31 RegWr Instruction Memory CS 211: Bhagi Narahari,CS, GWU Instruction Word 16 5 bits 5 bits Rd Rs 5 5 Rt 32 Clk 32 32-bit Registers 6 0 rd shamt funct 5 bits 5 bits 6 bits ALUctr 5 Rw Ra Rb busW 11 rt busA 32 busB ALU Next Address Logic 21 rs 6 bits PC Address 26 op Sequential Code: PC <- PC + 4 ¾ Branch and Jump: PC <- “something else” ¾ Clk rd, rs, rt Ra, Rb, and Rw come from instruction’s rs, rt, and rd fields ALUctr and RegWr: control logic after decoding the instruction Result 32 32 32 CS 211: Bhagi Narahari,CS, GWU 13 Putting it All Together: A Single Cycle Datapath ¾ ALUctr MemWr Equal Rs Ideal Instruction Memory Rt 5 busW 32 Data In 32 Clk 32 0 WrEn Adr 1 Data Memory Instruction Rd Rs 5 5 Instruction Address Rt 5 Imm 16 A 32 Rw Ra Rb 32 32 32 32-bit Registers B ExtOp Data Address Data In Clk Ideal Data Memory Clk 32 Clk 16 1 Critical Path (Load Operation) = PC’s Clk-to-Q + Instruction Memory’s Access Time + Register File’s Access Time + ALU to Perform a 32-bit Add + Data Memory Access Time + Setup Time for Register File Write + Clock Skew ALU Extender imm16 Clk 0 Mux Adder Clk = 32 Mux PC Mux 32 Rw Ra Rb 32 32-bit Registers busB 32 ALU 00 busA Next Address 5 Address valid => Output valid after “access time.” MemtoReg 0 RegWr 5 Adder PC Ext ALUSrc CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU An Abstract View of the Implementation Step 4: Given Datapath: RTL -> Control Instruction<31:0> PC Next Address 32 ALU Clk 32 Rb 32 32-bit Registers B 32 Data Address Data In Ideal Data Memory Rs Rd Imm16 Control Data Out nPC_sel RegWr RegDst ExtOp ALUSrc ALUctr MemWr MemtoReg Equal Clk DATA PATH Datapath CS 211: Bhagi Narahari,CS, GWU Rt <0:15> Op Fun A Rw Ra 32 Adr <11:15> Conditions Rt 5 <16:20> Instruction Address Control Signals Inst Memory <21:25> Instruction Rd Rs 5 5 <21:25> Control Ideal Instruction Memory Clk imm16 Imm16 Rd Rt 1 4 Rd Register file and ideal memory: ¾ The CLK input is a factor ONLY during write operation ¾ During read operation, behave as combinational logic: PC Rt An Abstract View of the Critical Path x Instruction<31:0> <0:15> Rs RegDst nPC_sel <11:15> Adr <16:20> <21:25> Inst Memory CS 211: Bhagi Narahari,CS, GWU 14 Control Signals R[rd] <– R[rs] + R[rt]; x nPC_sel <= if (OP == BEQ) then EQUAL else 0 x ALUsrc <= if (OP == “000000”) then “regB” else “immed” x ALUctr <= if (OP == “000000”) then funct elseif (OP == ORi) then “OR” elseif (OP == BEQ) then “sub” else “add” x ExtOp <= if (OP == ORi) then “zero” else “sign” x MemWr <= (OP == Store) x MemtoReg <= (OP == Load) x RegWr: <= if ((OP == Store) || (OP == BEQ)) then 0 else 1 x RegDst: <= if ((OP == Load) || (OP == ORi)) then 0 else 1 CS 211: Bhagi Narahari,CS, GWU PC <– PC + 4 ALUsrc = RegB, ALUctr = “add”, RegDst = rd, RegWr, nPC_sel = “+4” R[rd] <– R[rs] – R[rt]; PC <– PC + 4 ALUsrc = RegB, ALUctr = “sub”, RegDst = rd, RegWr, nPC_sel = “+4” ORi R[rt] <– R[rs] + zero_ext(Imm16); PC <– PC + 4 ALUsrc = Im, Extop = “Z”, ALUctr = “or”, RegDst = rt, RegWr, nPC_sel = “+4” LOAD R[rt] <– MEM[ R[rs] + sign_ext(Imm16)]; PC <– PC + 4 ALUsrc = Im, Extop = “Sn”, ALUctr = “add”, MemtoReg, RegDst = rt, RegWr, nPC_sel = “+4” STORE MEM[ R[rs] + sign_ext(Imm16)] <– R[rs]; PC <– PC + 4 ALUsrc = Im, Extop = “Sn”, ALUctr = “add”, MemWr, nPC_sel = “+4” BEQ if ( R[rs] == R[rt] ) then PC <– PC + sign_ext(Imm16)] || 00 else PC <– PC + 4 nPC_sel = EQUAL, ALUctr = “sub” CS 211: Bhagi Narahari,CS, GWU Example: Load Instruction nPC_sel +4 4 Rd Equal Rs 5 ALUctr MemWr add 32 Data In 1 32 Clk 00 sign ExtOp CS 211: Bhagi Narahari,CS, GWU 0 ext 32 0 Mux 16 32 ALU imm16 Extender Adder Clk Clk = Mux PC Mux 32 WrEn Adr Rd Rs 5 5 Instruction Address 5 Rw Ra Rb 32 32-bit Registers busB 32 Instruction 1 Control Signals Conditions Rt 5 A 32 Rw Ra 32 Rb 32 32-bit Registers 32 ALU busW MemtoReg Rt busA Control Ideal Instruction Memory Imm16 RegDst Rd Rt rt 0 1 RegWr 5 Adder PC Ext imm16 Rt An Abstract View of the Implementation Instruction<31:0> <0:15> Rs <11:15> Adr <16:20> <21:25> Inst Memory PC SUB Next Address ADD Step 5: Logic for each control signal Register Transfer B Clk Clk inst Data Memory 32 Data Address Data In Ideal Data Memory Data Out Clk Datapath x Logical vs. Physical Structure ALUSrc CS 211: Bhagi Narahari,CS, GWU 15 Summary 2. Select set of datapath components & establish clock methodology ¾ 3. Assemble datapath meeting the requirements ¾ 4. Analyze implementation of each instruction to determine setting of control points that effects the register transfer. ¾ 5. Assemble the control logic Control Logic / Store (PLA, ROM) Conditions ¾ Instruction 1. Analyze instruction set => datapath requirements microinstruction Control Points Datapath MIPS makes it easier ¾ Instructions same size ¾ Source registers always in same place ¾ Immediates same size, location ¾ Operations always on registers/immediates x In a single-cycle processor, each instruction is realized by exactly one control command or “microinstruction” Single cycle datapath => CPI=1, CCT => long What’s wrong with our CPI=1 processor? ALU Inst Memory Reg File mux Critical Path ALU Data Mem Inst Memory Reg File ALU Data Mem Inst Memory Reg File ¾ MemWr MemRd MemWr RegDst RegWr Reg. File Result Store x Long Cycle Time x All instructions take as much time as the slowest x Real memory is not as nice as our idealized memory Exec Data Mem mux ALUctr mux setup Mem Access cmp setup Equal mux mux ExtOp Branch PC mux ALUSrc Store PC Reg File Operand Fetch Load PC Partitioning the CPI=1 Datapath x Add registers between smallest steps Instruction Fetch Arithmetic & Logical PC Inst Memory in general, the controller is a finite state machine microinstruction can also control sequencing (see later) ¾ CS 211:¾Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU nPC_sel x Decode ¾ PC x OPcode 5 steps to design a processor Next PC x Systematic Generation of Control cannot always get the job done in one (short) cycle CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 16 RegDst RegWr MemToReg Reg. File MemRd MemWr ALUctr S x x The state digrams that arise define the controller for an instruction set processor are highly structured Use this structure to construct a simple “microsequencer” Control reduces to programming this very simple device microprogramming sequencer datapath control control M Result Store Operand Fetch Instruction Fetch B x Data Mem A Mem Access IR Reg File Controller Design Ext ALUSrc ALU Equal nPC_sel PC Next PC E ExtOp Example Multicycle Datapath microinstruction micro-PC sequencer x Critical Path ? CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU Microprogramming Microprogramming sequencer datapath control control x Microprogramming is a convenient method for implementing structured control state diagrams: ¾ ¾ ¾ Random logic replaced by microPC sequencer and ROM Each line of ROM called a Pinstruction: contains sequencer control + values for control points limited state transitions: branch to zero, next sequential, branch to Pinstruction address from displatch ROM x Horizontal PCode: one control bit in PInstruction for every control line in datapath x Vertical PCode: groups of control-lines coded together in PInstruction (e.g. possible ALU dest) x Control design reduces to Microprogramming ¾ Part of the design process is to develop a “language” that describes control and is easy for humans to understand CS 211: Bhagi Narahari,CS, GWU microinstruction (P) micro-PC P-sequencer: fetch,dispatch, sequential Dispatch ROM Opcode Decode Decode To DataPath P-Code ROM Decoders implement our Pcode language: For instance: rt-ALU rd-ALU mem-ALU x Microprogramming is a fundamental concept ¾ ¾ ¾ implement an instruction set by building a very simple processor and interpreting the instructions essential for very complex instructions and when few register transfers are possible overkill when ISA matches datapath 1-1 CS 211: Bhagi Narahari,CS, GWU 17 How to improve performance? Microprogramming one inspiration for RISC x If simple instruction could execute at very high clock rate… ¾ you could even write compilers to produce microinstructions… x If most programs use simple instructions and addressing modes… x If microcode is kept in RAM instead of ROM so as to fix bugs … x Then why not skip instruction interpretation by a microprogram and simply compile directly into lowest language of machine? (microprogramming is overkill when ISA matches datapath 1-1) CS 211: Bhagi Narahari,CS, GWU x Recall performance is function of ¾ ¾ ¾ CPI: cycles per instruction Clock cycle Instruction count x Reducing any of the 3 factors will lead to improved performance CS 211: Bhagi Narahari,CS, GWU How to improve performance? x First step is to apply concept of pipelining to the instruction execution process ¾ Overlap computations x What does this do? ¾ ¾ Decrease clock cycle Decrease effective CPI compared to original clock cycle Pipelining: Its Natural! x Laundry Example x Ann, Brian, Cathy, Dave each have one load of clothes to wash, dry, and fold x Washer takes 30 minutes A B C D x Dryer takes 40 minutes x “Folder” takes 20 minutes CS 211: Bhagi Narahari,CS, GWU CS 211: Bhagi Narahari,CS, GWU 18 Pipelined Laundry Start work ASAP Sequential Laundry 6 PM 7 8 9 11 10 Midnight 6 PM Time 7 8 30 40 20 30 40 20 30 40 20 30 40 20 T a s k 30 40 A T a s k B O r d e r D CS 211: Bhagi Narahari,CS, GWU 11 Midnight 40 40 40 20 A O r d e r C D x Pipelined laundry takes 3.5 hours for 4 loads CS 211: Bhagi Narahari,CS, GWU Pipelining Lessons 6 PM 7 8 9 x Time O r d e r 10 B C x Sequential laundry takes 6 hours for 4 loads x If they learned pipelining, how long would laundry take? T a s k 9 Time 30 40 A 40 40 40 20 x x x B C D CS 211: Bhagi Narahari,CS, GWU x x Pipelining doesn’t help latency of single task, it helps throughput of entire workload Pipeline rate limited by slowest pipeline stage Multiple tasks operating simultaneously Potential speedup = Number pipe stages Unbalanced lengths of pipe stages reduces speedup Time to “fill” pipeline and time to “drain” it reduces speedup Instruction Pipeline x Instruction execution process lends itself naturally to pipelining ¾ overlap the subtasks of instruction fetch, decode and execute CS 211: Bhagi Narahari,CS, GWU 19 How to improve performance? x Recall performance is function of ¾ ¾ ¾ CPI: cycles per instruction Clock cycle Instruction count x Reducing any of the 3 factors will lead to improved performance How to improve performance? x First step is to apply concept of pipelining to the instruction execution process ¾ ¾ ¾ CS 211: Bhagi Narahari,CS, GWU ¾ Overlap computations of different tasks by operating on them concurrently in different stages Decrease clock cycle Decrease effective CPU time compared to original clock cycle CS 211: Bhagi Narahari,CS, GWU Instruction Level Parallel Processors (ILP) Pipeline Approach to Improve System Performance x Analogous to fluid flow in pipelines and assembly line in factories x Divide process into “stages” and send tasks into a pipeline Overlap computations x What does this do? x early ILP - one of two orthogonal concepts: ¾ ¾ pipelining - vertical approach multiple (non-pipelined) units - horizontal approach x progression to multiple pipelined units x instruction issue became bottleneck, led to ¾ ¾ superscalar ILP processors Very Large Instruction Word (VLIW) x Note: key performance metric in all ILP processor classes is IPC (instructions per cycle) CS 211: Bhagi Narahari,CS, GWU this is the degree of parallelism achieved ¾ Narahari,CS, GWU CS 211: Bhagi 20 Instruction Pipeline x Instruction execution process lends itself naturally to pipelining ¾ overlap the subtasks of instruction fetch, decode and execute CS 211: Bhagi Narahari,CS, GWU 21