Significance Testing - Computer Graphics Home

advertisement

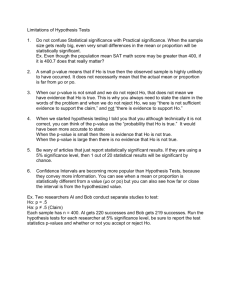

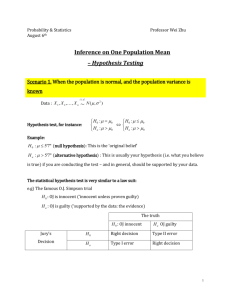

§3.3 Significance Testing

The topics in this section concern with the second course objective.

The topics in this section heavily depend on those in the last section. I

strongly recommend reading the last section at least one more time before

starting on this section.

The very hypotheses that were tested by hypothesis testing can be tested by

significance testing. Significance testing uses the same test statistics (to test

the same corresponding hypotheses) as those used in hypothesis testing.

Ironically, as you will see, significance testing has no significance level.

Here is the difference between significance testing and hypothesis testing.

The conclusion of a significance test is always “reject Ho with the p-value.”

However, the part “reject Ho” is always implicitly understood. As a result,

only the p-value is given as the conclusion of significance testing. See the

diagram of significance testing given below.

Data

Test Statistic

P-Value

Significance Testing

At this point, your question should be “What the heck is ‘p-value’?”

In significance testing, after the computation of the observed value from

data, a probability is found using this computed observed value. This

probability is called the “p-value.” The “p” stands for “probability.” It has

1

nothing to do with bodily functions. In fact, the following is the definition

of the p-value.

A p-value is the maximum possible probability for data falsely rejecting a

null hypothesis.

It would be truly helpful if you understand that “p-value” is “probabilityvalue.” Read Appendix: P-Values given below in this section.

Now, at this point, you should be asking “how to find a p-value.”

You compute the observed value (from data using an appropriate test

statistic). Generally, this observed value splits the entire probability of one

into two probabilities, one probability on the left and another probability on

the right of the observed value. See the diagram given below.

A probability is the area between the curve and horizontal axis when

the probability distribution is continuous. All the test statistics in

this section have continuous distributions (such as the standard

Normal, Student’s t, and Chi-square distributions).

The probability on the left

of the observed value (ov)

Note: An observed value is a number,

a point on the horizontal axis.

The probability on

the right of the

observed value (ov)

The observed value (ov)

We are doing significance testing and not hypothesis testing. However, if

we were doing hypothesis testing, it would be either a left-tailed test, righttailed test or two-tailed test, depending on the hypotheses being tested. By

the way, please read Appendix: Recommendations given below.

2

Let us called “left-tailed case” if it were a left-tailed test, “right-tailed case”

if it were a right-tailed test, and “two-tailed case” if it were a two-tailed test

in hypothesis testing. These cases will determine which one of the two split

probabilities is the p-value.

For a right-tailed case, the p-value is the probability on the right of the

observed value.

For a left-tailed case, it is the probability on the left of the observed value.

For a two-tailed case, twice the smaller probability is the p-value.

See the diagram given below.

This probability is the p-value for a

left-tailed case since it is on the left

of the observed value (ov).

This probability times two

is the p-value for a twotailed case since this is

smaller than the other

probability.

The probability is

the p-value for a

right-tailed case

since it is on the

right of the

observed value.

The observed value (ov)

Generally in a two-tailed case, a probability is multiplied by two to obtain

the p-value. An observed value splits the entire probability (of one) into two

probabilities, one is smaller than the other. We multiply the smaller

probability with two to find the p-value. You would not have any other

3

choice if you are multiplying one of them by two to obtain the p-value

because a p-value is a probability. Read Appendix: P-values again.

Of course, if the two probabilities are equal to each other, you can multiply

either probability by two to obtain the p-value in a two-tailed case.

Why?????!!!!!! Well, you could try this with each of the two equal

probabilities and compare their p-values. ☺

As you may have noticed, there is no significance level given in significance

testing. However, the p-value is also called “observed significance level,”

and the term “significance” in “significance testing” has come from the term

“significance” in “observed significance level.” Do not confuse with an

observed significance level (p-value) and a significance level (α).

When you hear “significance level,” you should think of “hypothesis

testing.”

When you hear “significance testing,” you should think of “observed

significance level” (p-value).

An observed significance level is a probability determined by the observed

value and the alternative hypothesis.

A p-value is an observed significance level.

Here is actually how to find numbers (values) for p-values. You need to

compute observed values from test statistics. As you remember from the last

section, the test statistics are

( x - µo)/(s/ n ) for testing hypotheses on µ,

^

( p - po)/ po(1 - po)/n for testing hypotheses on p, and

(n – 1)(s/σo)2 for testing hypotheses on σ.

Also, recall, the probability distribution of the test statistics for testing µ and

p is the standard Normal distribution (with large data). The probability

distribution of the test statistic for testing σ is the Chi-square distribution

4

with n – 1 degree of freedom (with large data). Now, read Appendix: How

to Find P-Values given below.

Let us have a numerical example by conducting significance testing on

Ho: µ ≤ 20.0

vs

Ha: µ > 20.0

from the Orange Crate Example. The test statistic is ( x - µo)/(s/ n ) which

is the Normal standardization of the sample average, x , the estimator for µ.

The sampling distribution of the test statistic is, of course, the standard

Normal distribution.

The observed value is 1.2086 = (20.22 –

20.00)(0.997/ 30 ) . This is a right-tailed case so the p-value is

P(Z > 1.2086) = 0.1134

where Z is the standard Normal variable. This probability is found using the

website given in Appendix: How to Find P-Values, and it is the p-value

for this significance test. This significance testing concludes by stating

The p-value is 0.1134 for the significance testing on the hypotheses given

above.

By the way, if you were testing

Ho: µ = 20.0

vs

Ha: µ ≠ 20.0,

then the p-value would be 0.2268 (= 0.1134*2) since it is a two-tailed case.

If this were a left-tailed case, then the p-value would be 0.8866. Can you

tell why it is 0.8866? At any rate, this means that you cannot pick a smaller

probability for the p-value for a one-tailed test all the time.

5

Significance testing is a system whose input is data and its output is the pvalue (see the first diagram given above in this section). That is,

significance testing ends with the p-value. However, with the p-value,

hypothesis testing can be done. That is, you can conduct hypothesis testing

after significance testing.

Namely, once a significance testing is conducted and concluded with its pvalue, a hypothesis testing can be conducted with the p-value if so desired.

If the p-value is less than or equal to the significance level α (p-value ≤ α),

then the null hypothesis Ho is rejected at α.

This is because, if the p-value is less than or equal to α, then the observed

value must be in the rejection area. See the diagram given below.

A right-tailed case: The p-value (the area in blue) is less than α (the area in the red;

that is, the area to the left of the p-value and p-value itself together) and, hence, the

observed value is in the rejection area. As a result, the Ho is rejected at α.

α

The critical point

The p-value

The observed value (ov)

If the p-value is greater than the significance level α (p-value > α), then the

null hypothesis Ho is not rejected α.

This is because, if the p-value is greater than α, then the observed value

must be outside the rejection area. See the diagram given below.

6

A right-tailed case: The p-value (the area in the red; that is, the area to the left of the

α and α itself together) is greater than the α (the area in blue) and, hence, the

observed value is outside the rejection area. As a result, the Ho is not rejected at α.

The p-value

α

The observed value (ov)

The critical point

Let us conduct a hypothesis testing at 5% (= α) significance level on

Ho: µ ≤ 20.0

vs

Ha: µ > 20.0

with the p-value of 0.1134 from the significance testing. Since the p-value

0.1134 is greater than α = 0.05, the null hypothesis cannot be rejected at 5%

significance level. By the way, it cannot be rejected at 1% level either.

If you are testing

Ho: σ ≥ 0.75

vs

Ha: σ < 0.75

in the Orange Crate Example, the observed value is (30 – 1)(0.997376/0.75)2

= 51.285 and its p-value is close to 99.35%. It is a left-tailed case with

7

hypotheses on σ with 29 degree of freedom. See Appendix: How to Find

P-Values given below to find the p-value.

If you wish, hypothesis testing can be conducted at, say, 1% significance

level. This p-value is great than 1% and, hence, the null hypothesis is not

rejected at 1% significance level (or any significance level less than 0.9935).

Note that a p-value can be large, like 99.35% in this example. That is, you

cannot automatically pick a smaller probability of the two probabilities split

by an observed value for a p-value for a one-tailed test.

A reason for significance testing is that a statistician does not have to do

hypothesis testing. Suppose a pharmaceutical company has spent 10 years

and 300 million dollars to develop a new medicine. To market the medicine

(so that the company can recover the investment and makes some profit),

this medicine must be tested and proven to be safe and effective by

conducting clinical trials and hypothesis testing. Everyone involved,

including statisticians, comes under a heavy pressure to approve the

medicine.

A statistician might be asked (forced) to alter the data or to increase the

significance level so that null hypothesis is rejected. With significance test,

a statistician does not have to worry about this kind of pressure. He does not

even know what the significance level is in the significance testing (because

there is none). The only thing for him to do is to compute the observed

value from data and its p-value from the observed value and to give the pvalue to the management so that they can conduct hypothesis testing

themselves.

Another advantage for significance testing is for computer programming. It

is simpler and easier to program significance testing than to program

hypothesis testing. It does not concern the different levels of significance,

and the output is a single number. There are many computer statistical

packages which perform F-tests, t-tests and z-tests with input data and

produce p-values for the output, letting the users do hypothesis testing.

Let us have another numerical example form the Political Poll Example.

You want to test

Ho: P({D}) = 0.40

8

vs

Ha: P({D}) ≠ 0.40.

Then, this is a two-tailed case, and the test statistic is

^

( p - po)/ po(1 - po)/n for testing the hypotheses on p = P({D}) where po =

^

0.40, p = P̂({D}) = 357/850 = 0.42 and n = 850. The observed value is

(0.42 – 0.40)/ 0.40(1 − 0.40) / 850 = 1.1902. This results in the p-value of

0.234. See Appendix: How to Find P-Values given below to find the pvalue.

If hypothesis testing is to be performed on these hypotheses, then Ho cannot

be rejected at the 1%, or even 5%, significance level.

Finally, the p-value is the maximum probability for Ha to be true in light of

the obtained data. For instance, a significance test on

Ho: P(D) ≥ P(R)

vs

Ha: P(D) < P(R)

is conducted in the Political Poll Example. This test produces the p-value of

3.6%. This p-value of 3.6% means that the maximum chance for the Ho:

P(D) ≥ P(R) to be true is 3.6% given the data. If the hypothesis testing is

conducted, the null hypothesis is rejected and accepted is the alternative

hypothesis of the P(R) is greater than P(D) at 5%. However, it fails to reject

the null hypothesis at 1%.

As you have realized, if you can do significance testing, you can do

hypothesis testing with the p-value. Significance testing is performed by

finding the right test statistic and its probability distribution. The observed

value, computed from the test statistic and data, gives you the p-value (using

tables or a website). If the p-value is less than a significance level, reject

Ho. Otherwise, Ho cannot be rejected.

9

You can do any significance and hypothesis testing on any parameter by

finding the appropriate test statistic and its probability distribution for testing

the hypotheses in question. With data, you can compute an observed value

and find the p-value by using tables or a website. With the p-value you can

perform hypothesis testing.

Testing on hypotheses on parameters of two independent groups is given in

Appendix(Optional): More Testing given below.

Appendix: P-Values

A p-value is the maximum possible probability for data falsely rejecting a

null hypothesis. Thus, a p-value is a probability value. Understanding of

this fact would prevent you from making a mistake like giving a number

greater than one or a negative number for a p-value like many students do.

Why is this so? Well, did you understand what probability is numerically?

Also, you multiply a probability by two when you find a p-value in a twosided case. You would not multiply a probability greater than 0.5 by two to

find a p-value. Why? If this is not obvious to you, please think through. I

hope you will find the reason why one should not multiply a number greater

than 0.5 by two to find a p-value.

By the way, this is where the difference between memorization and

understanding comes in play (to help you or to kill you).

Appendix: Recommendations

At this point, if you are not sure what we are talking about in this section,

please go back and read the last section, §3.2 Hypothesis Testing. You

should have learned and understood test statistics, observed values, lefttailed tests, right-tailed tests, two-tailed tests and such in the last section.

These topics (concepts) are used in the subject (significance testing) and its

related topics of this section. Lack of understanding of the topics/concepts

in the last section would impede your understanding of the subject and the

related topics in this section. On the other hand, their understanding would

enable you to understand significance testing and its related topics.

10

Generally, this is true in mathematics, applied mathematics and sciences. A

current topic (or a subject) is developed using (or based on) the previous

topics (subjects). Thus, understanding of previous topics (subjects) is

necessary for learning and understanding of a current topic or subject. Lack

of understanding of past topics would severely impede understanding of the

current topics.

The key to all this is “understanding” of topics and subjects. Memorizing

them would not be of much help. As Confucius said, “He who memorizes is

buying a car with no engine.” He does not go too far.

Appendix: How to Find P-Values

For testing hypotheses on µ and p, the p-values are

P(Z > ov) for a right-tailed case,

P(Z < ov) for a left-tailed case, and

2*Min{ P(Z > ov), P(Z < ov)} for a two-tailed case,

where ov is an observed value and Min{ P(Z > ov), P(Z < ov)} is the smaller

of P(Z > ov) or P(Z < ov). Now go to the website,

http://surfstat.anu.edu.au/surfstat-home/tables/normal.php

or

http://sites.csn.edu/mgreenwich/stat/normal.htm

For a right-tailed case, click on the circle under the second picture, put the

ov under “z value” and click on the button “→.” This should give you the pvalue under “probability.”

For a left-tailed case, click on the circle under the first picture and repeat the

above. You should get the p-value under “probability.”

For a two-tailed case, click on the circle under the fourth picture and repeat

the above. You should get the p-value under “probability.” Do not multiply

this p-value by two. It is the p-value, given under “probability” for a twotailed test. This is because, see the picture, the probability given under

11

“probability” is P(Z < ov) + P(Z > ov) = 2* Min{ P(Z > ov), P(Z < ov)} =

the p-value.

Let us have some exercises. Conduct significance testing (that is, find the pvalue) on Ho: p ≤ 0.23 vs Ha: p > 0.23 based on data of size 800 whose

estimate for p is 0.21.

The answer: The p-value is 0.9106. Hint: The ov = -1.3442 and it is a

right-tailed case.

Conduct significance testing on Ho: µ = 41.85 vs Ha: µ ≠ 41.85 based on

data of size 36 whose sample mean and sample standard deviation are 42.57

and 2.5 respectively.

The answer: The p-value is 0.084. Hint: The ov = 1.728 and it is a twotailed case. Remember, you do not multiply the probability by two when

you use the website.

For testing hypotheses on σ, the p-values are

P (Χn-12 > ov) for a right-tailed case,

P(Χ n-12 < ov) for a left-tailed case, and

2*Min{ P(Χ n-12 > ov), P(Χ n-12 < ov)} for a two-tailed case,

where Χ n-12 is the Chi-square variable with n – 1 degree of freedom. Now

go to the website,

http://surfstat.anu.edu.au/surfstat-home/tables/chi.php

or

http://sites.csn.edu/mgreenwich/stat/chi.htm

For a right-tailed case, click on the circle under the second picture, put the

degree of freedom under “d.f.” and put the ov under “Χ2 value.” Then, click

on the button “→” which should give you the p-value under “probability.”

For a left-tailed case, click on the circle under the first picture, and the repeat

the above. You should find the p-value under “probability.”

12

For a two-tailed case, you repeat the above for both right-tailed and lefttailed cases. Find the smaller probability under “probability” and multiply it

by two, which is the p-value. This time, you have to multiply it by two.

Let us have some exercises. Conduct significance testing on Ho: σ > 35.0

vs Ha: σ ≤ 35.0 based on data of size 35 whose sample mean and sample

standard deviation are -35.7 and 32.5 respectively.

Answer: The p-value is 0.3035. Hint: The ov = 29.316 and the degree of

freedom is 34. It is a left-handed case.

Conduct significance testing on Ho: σ = 3.7 vs Ha: σ ≠ 3.7 base on data of

size 48 whose sample mean and sample standard deviation are 3.4 and 4.2.

The answer: The p-value is 0.1768. Hint: The ov = 60.561 and the degree

of freedom is 47. The two probabilities are 0.9116 and 0.0884.

Appendix (Optional): More Testing

When there are two independent populations (groups) or two independent

variables, their parameters are often compared.

Let µ1 and µ2 be the means of Groups 1 and 2 respectively. The test statistic

−

−

( x 1 - x 2 – δo )/ (s1)2 /n1+(s2)2 /n2

is use to test hypotheses on the difference of the means of two independent

groups such as those given below.

Right-tailed case;

Ho: µ1 - µ2 ≤ δo

vs

Ha: µ1 - µ2 > δo

Left-tailed case:

13

Ho: µ1 - µ2 ≥ δo

vs

Ha: µ1 - µ2 < δo

Two-tailed case:

Ho: µ1 - µ2 = δo

vs

Ha: µ1 - µ2 ≠ δo

−

−

where x 1 and x 2 are respectively sample means of Data 1 of size n1 taken

from Group 1 and Data 2 of size n2 taken from Group 2 and s1 and s2 are

sample standard deviations of Data 1 and Date 2.

The hypothesized difference between two means is indicated by δo (the null

value). The probability distribution of the test statistic is the standard

Normal distribution.

Let us have a numerical example by modifying the Orange Crate Example. I

have received another crate of oranges from the supplier who claims that the

oranges in the second crate are 5 oz heavier, on average, and have 80% less

variation in their weight measurements. So, we are testing,

Ho: µ1 - µ2 ≥ -5.00

vs

Ha: µ1 - µ2 < -5.00

Note that the alternative hypothesis is that the average (mean) weight of

oranges in the second crate is greater than that in the first crate by 5 oz or

more. Thus, Ha is µ1 - µ2 < -5.00, and this is a left-tailed case.

14

We randomly sampled 40 oranges from the second crate and took the weight

measurements of these 40 oranges. Their sample mean is 29.87 oz, and their

−

sample standard deviation is 0.679 oz. That is, x 2 = 25.87, s2 = 0.679, n2 =

−

40 and δo = - 5.00. Of course, x 1 = 20.22, s1 = 0.997 and n1 = 30 from the

original data from the first crate.

Then, (20.22 – 25.87 – (-5.00))/ (0.997)2 / 30 + (0.679)2 / 40 = -6.054.

You can go to the website,

http://surfstat.anu.edu.au/surfstat-home/tables/normal.php

or

http://sites.csn.edu/mgreenwich/stat/normal.htm

and find the p-value or you realize that it must be very close to zero (since

ov is -6.054). We have the p-value = 0. The Ho is rejected at 5% or even at

1% level. There is very strong evidence that, on average, the oranges in the

second crate are heavier than those in the first crate by at least 5 oz.

Let us, now, test the claim on the variation. The hypotheses are

Ho: σ2/σ1 ≥ 0.80

vs

Ha: σ2/σ1 < 0.80.

The test statistic to test hypotheses on standard deviations of two

independent groups is

((n1 – 1)s12 /σ12)/((n2 – 1)s22/ σ22)

which has an F distribution. Please read Appendix: F Distributions & Pvalues given below.

15

By the way, this test statistic consists of a test statistic for testing hypotheses

on one sigma over another test statistic for testing hypotheses on another

sigma.

At any rate, we have σ2 = 0.80*σ1, s1 = 0.997, and s2 = 0.679. As a result,

((30 – 1)(0.997)2/ σ12)/((40 – 1)(0.679)2/( 0.80*σ1)2) = 1.02604,

which is the observed value. By going to the website,

http://stattrek.com/Tables/F.aspx,

you find the p-value of 0.46. This is a right-tailed case and, to learn how to

find a p-value, please read Appendix: F Distributions & P-values again.

The test statistic

^

^

^

^

^ ^

( p 1 - p 2 – δo )/ p 1(1- p 1)/n1+ p 2(1- p 2)/n2

is use to test hypotheses on the difference between probabilities

(proportions) of two independent groups such as those given below.

Right-tailed case;

Ho: p1 - p2 ≤ δo

vs

Ha: p1 - p2 > δo

Left-tailed case:

Ho: p1 - p2 ≥ δo

vs

Ha: p1 - p2 < δo

16

Two-tailed case:

Ho: p1 - p2 = δo

vs

Ha: p1 - p2 ≠ δo

The test statistic has the standard Normal distribution for large n1 and n2.

Let us have a numerical example. In Indiana, a political poll of 850 was

taken, and 54% of the likely voters surveyed would vote for the Republican

candidate (recall the Political Poll Example). In Nevada, a political poll of

900 was conducted, and 51% of the likely voters surveyed would vote for

the Republican candidate. I am wondering whether P({R}) = p1 in Indiana

is greater than P({R}) = p2 by at least 2% or not. So, the hypotheses to be

tested are

Ho: p1 - p2 ≤ 0.02

vs

Ha: p1 - p2 > 0.02

^

^

We also have p 1 = 0.54, p 2 = 0.51, n1 = 850, n2 = 900.The observed value

is (0.54 – 0.51 – 0.02)/ (0.54)(1 − 0.54) / 850 + (0.51)(1 − 0.51) / 900 =

0.41889. It is a right-tailed test so, by going to the website

http://surfstat.anu.edu.au/surfstat-home/tables/normal.php

or

http://sites.csn.edu/mgreenwich/stat/normal.htm

you find the p-value of 0.3376. You cannot reject the null hypothesis at 5%

or 1%, and there is no evidence that the Republican candidate in Indiana has

at least a 2% point edge over the Republican candidate in Nevada in spite of

the estimates.

17

You should not compare P({R}) and P({D}) in the same state since these

estimates P̂({D}) = 0.42 and P̂({R}) = 0.54 were obtained from the same

political poll (only one group); that is, we do not have two independent

groups. Any comparison of two proportions, by the hypotheses given above,

must be conducted over two independent groups or a huge, huge group if it

is only one group.

Finally, having two collections of data does not mean that they came from

two independent groups. If they are not independent, then, of course, you

cannot use significance (hypothesis) testing for two independent groups.

For example, there are thirty test scores (pre-scores) of students before

taking a course and thirty test scores (post-scores) of the same students after

the course. There are two collections of data, but there is only one group

(the thirty students). So, how do you test the effectiveness of the course?

If you think that students should learn from the course to improve the test

scores at least ten points, then the following hypotheses should be tested.

Ho: µd ≥ -10.0

vs

Ha: µd < -10.0

using the data which are the differences of the pre-scores and post-scores

(the pre-score of one student – the post score of the student, for the thirty

students). That is, create a collection of data by subtracting a post-score

from the pre-score of each student for all the thirty students and test the

hypotheses with the created data of differences. See the table given below.

By the way, µd is the mean of the differences between the pre-scores and the

post-scores. The null value is -10 because the difference is obtained as “prescore – post-score” for every student. You are speculating that, on average,

the post-scores are higher than the pre-scores by at least 10 points.

Also, because of that, the alternative hypothesis is that the mean is less than

-10 since, if the speculation is true, the mean of the differences must be less

18

than -10. If it were greater than -10, then the post-scores would not be

higher than the pre-scores by at least 10 points on average as speculated.

The hypotheses are tested by using the test statistic ( x - µo)/(s/ n ) with the

standard Normal distribution just like a regular significance or hypothesis

testing on µ. By the way, x and s are the sample average and standard

deviation of the 30 differences given in the last column of the table given

below, µo is -10 and n is 30 in the example.

Example: Some pre-scores, post-score

Students

Student 1

Student 2

Student 3

Pre-scores

65

43

54

Post-scores

78

83

81

Differences

-13

-40

-27

.

.

.

.

Student 28

Student 29

Student 30

67

36

42

87

32

65

-20

4

-23

↑

Use these differences to test the hypotheses.

Using differences between two collections of data is common in before-after

situations with diet programmes, educational programmes medical

treatments/procedures and such where each person (in a group) produces one

measurement before it and another measurement after it. To correctly test

hypotheses, you need to look at the number of groups of objects from which

data are taken from, not the number of collections of data.

Appendix: F Distributions & P-Values

19

Hypotheses concerning variances of two independent groups can be tested

by a test statistic ((n1 – 1)s12 /σ12)/((n2 – 1)s22/ σ22). The hypotheses come in

the following forms.

A right-tailed case:

Ho: σ2/σ1 ≥ ro

vs

Ha: σ2/σ1 < ro.

A left-tailed case:

Ho: σ2/σ1 ≤ ro

vs

Ha: σ2/σ1 > ro.

A two-tailed case:

Ho: σ2/σ1 = ro

vs

Ha: σ2/σ1 ≠ ro.

Why the first set of hypotheses is a right-tailed case? Look at the Ha. If it is

true, then σ2 should be small, which leads to a small s2. Now, look at the test

statistic, a small value of s2 would result in a large observed value. That is,

the rejection area must be on the right hand side of the real number line.

The test statistic has an Fd1,d2 distribution. This F distribution with d1 and

d2 degrees of freedom is constructed as a quotient of two independent Chisquare distributions, Chi-square distribution with d1 degree of freedom in the

numerator and Chi-square distribution with d2 degree of freedom in the

denominator.

20

This is the reason why an F distribution has two degrees of freedom, d1 and

d2. The first one is always from the denominator and the second one from

the denominator. For the test statistic, it is an Fn1-1, n2-1. Go to the website,

http://stattrek.com/Tables/F.aspx,

put the first degree of freedom at “Degrees of freedom (v1),” the second degree of

freedom at “Degrees of freedom (v2)” and the observed value at “f value” and click

on the button “Calculate.” You find the p-value for a left-tailed case at

“Cumulative probability.” That is, “Cumulative probability” = P(Fn1-1, n2-1< observed

value) = p-value for a left-tailed case. Thus, the p-value for a right-tailed

case is 1 – “Cumulative probability” and the p-value for a two-tailed case is

2*Min{“ Cumulative probability”,”1 - Cumulative probability”}.

For example, you are testing Ho: σ2/σ1 ≥ 0.80 vs Ha: σ2/σ1 < 0.80 with n1 –

1 = 30 – 1 = 29, n2 – 1 = 40 – 1 = 39, and the observed value of 1.02604.

Then, put 29 at “Degrees of freedom (v1),” 39 at “Degrees of freedom (v2),” 1.02604 at “f

value” and click on the button “Calculate.”

You find 0.54 at “Cumulative

probability.” However, this 0.54 is the p-value in a left-tailed case. For the

right-tailed case, which is our case here, the p-value is 0.46 = 1 – 0.54. The

Ho cannot be rejected at 5% or 1% significance level.

© Copyrighted by Michael Greenwich, 08/2013

☺

21