CHAPTER 2

Analysis of Algorithms

2.1 Algorithm Efficiency

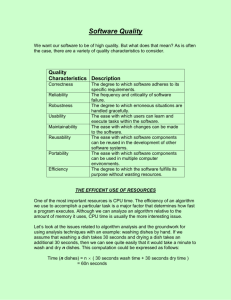

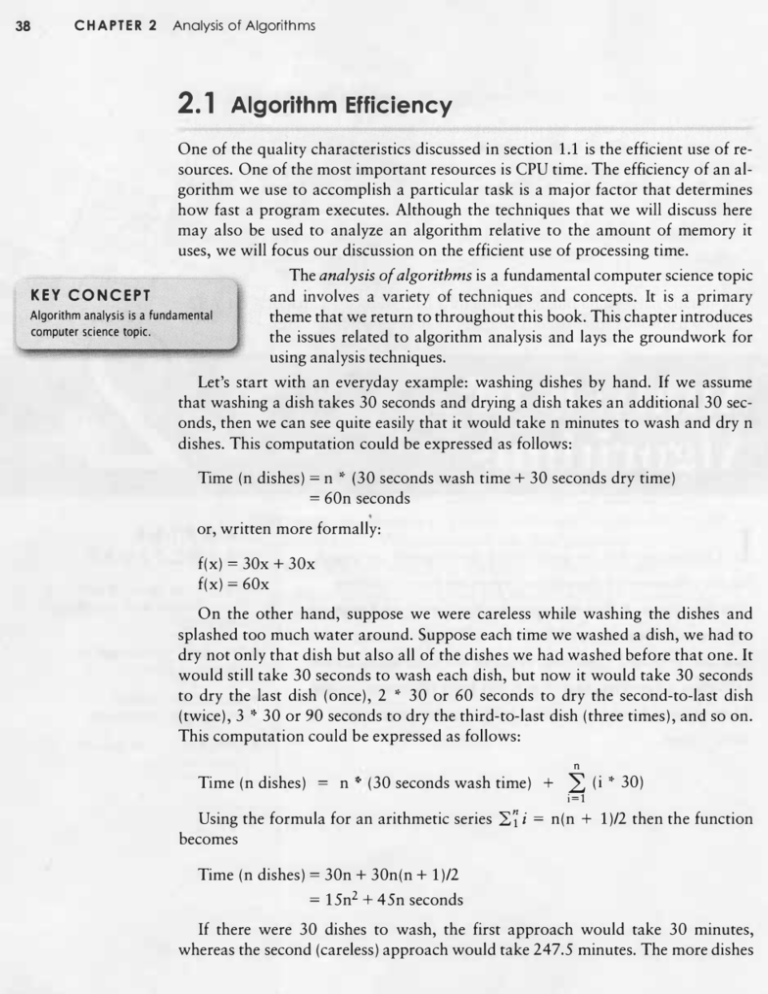

One of the quality characteristics discussed in section 1.1 is the efficient use of resources. One of the most important resources is CPU time. The efficiency of an algorithm we use to accomplish a particular task is a major factor that determines how fast a program executes. Although the techniques that we will discuss here may also be used to analyze an algorithm relative to the amount of memory it uses, we will focus our discussion on the efficient use of processing time.

The analysis of algorithms is a fundamental computer science topic and involves a variety of techniques and concepts. It is a primary theme that we return to throughout this book. This chapter introduces the issues related to algorithm analysis and lays the groundwork for using analysis techniques.

KEY CONCEPT

Algorithm analysis is a fundamental computer science topic.

Let's start with an everyday example: washing dishes by hand. If we assume that washing a dish takes 30 seconds and drying a dish takes an additional 30 seconds, then we can see quite easily that it would take n minutes to wash and dry n dishes. This computation could be expressed as follows:

$$
\begin{aligned}
\text{Time}(n \text{ dishes}) &= n \times (30 \text{ seconds wash time} + 30 \text{ seconds dry time}) \\
&= 60n \text{ seconds}
\end{aligned}
$$

or, written more formally:

$$
\begin{aligned}
f(x) &= 30x + 30x \\
&= 60x
\end{aligned}
$$

On the other hand, suppose we were careless while washing the dishes and splashed too much water around. Suppose each time we washed a dish, we had to dry not only that dish but also all of the dishes we had washed before that one. It would still take 30 seconds to wash each dish, but now it would take 30 seconds to dry the last dish (once), 2 × 30 or 60 seconds to dry the second-to-last dish (twice), 3 × 30 or 90 seconds to dry the third-to-last dish (three times), and so on. This computation could be expressed as follows:

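$$
\text{Time}(n \text{ dishes}) = n \times (30 \text{ seconds wash time}) + \sum_{i=1}^{n} (i \times 30)
$$

Using the formula for an arithmetic series, $\sum_{i=1}^{n} i = n(n+1)/2$, the function becomes

$$
\begin{aligned}
\text{Time}(n \text{ dishes}) &= 30n + 30n(n+1)/2 \\
&= 15n^2 + 45n \text{ seconds}
\end{aligned}
$$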
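The final simplification can be spelled out term by term. This is only an expansion of the expression for the careless approach, with no new assumptions:

```latex
\begin{aligned}
30n + \frac{30n(n+1)}{2} &= 30n + 15n(n+1) \\
&= 30n + 15n^2 + 15n \\
&= 15n^2 + 45n
\end{aligned}
```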

If there were 30 dishes to wash, the first approach would take 30 minutes, whereas the second (careless) approach would take 247.5 minutes. The more dishes we wash the worse that discrepancy becomes. For example, if there were 300 dishes to wash, the first approach would take 300 minutes or 5 hours, whereas the second approach would take 22,725 minutes or roughly 379 hours!
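The two dishwashing approaches can be checked numerically. The following is a minimal sketch; the class and method names are hypothetical and chosen only for illustration. The careless version deliberately adds up the drying time dish by dish rather than using the closed form, so it serves as an independent check on the formula.

```java
class DishTimes {
    // Seconds to wash and dry n dishes when each dish is dried once: 60n.
    static long carefulSeconds(long n) {
        return 60 * n;
    }

    // Seconds when dish i must be dried i times: 30n + 30(1 + 2 + ... + n).
    static long carelessSeconds(long n) {
        long total = 30 * n;              // washing time
        for (long i = 1; i <= n; i++) {
            total += 30 * i;              // drying time for dish i
        }
        return total;
    }

    public static void main(String[] args) {
        for (long n : new long[] {30, 300}) {
            System.out.println(n + " dishes: careful " + carefulSeconds(n) / 60.0
                    + " min, careless " + carelessSeconds(n) / 60.0 + " min");
        }
    }
}
```

Dividing the returned seconds by 60 gives the minute figures used in the comparison.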

2.2 Growth Functions and Big-Oh Notation

For every algorithm we want to analyze, we need to define the size of the problem. For our dishwashing example, the size of the problem is the number of dishes to be washed and dried. We also must determine the value that represents efficient use of time or space. For time considerations, we often pick an appropriate processing step that we'd like to minimize, such as our goal to minimize the number of times a dish has to be washed and dried. The overall amount of time spent on the task is directly related to how many times we have to perform that task. The algorithm's efficiency can be defined in terms of the problem size and the processing step.

Consider an algorithm that sorts a list of numbers into increasing order. One natural way to express the size of the problem would be the number of values to be sorted. The processing step we are trying to optimize could be expressed as the number of comparisons we have to make for the algorithm to put the values in order. The more comparisons we make, the more CPU time is used.
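To make the idea of a processing step concrete, the sketch below counts comparisons while sorting. It uses selection sort purely as an illustration; the text does not name a particular sorting algorithm, and the class and method names are hypothetical.

```java
class ComparisonCounter {
    // Sorts values into increasing order in place using selection sort and
    // returns the number of element comparisons performed.
    static long sortAndCount(int[] values) {
        long comparisons = 0;
        for (int i = 0; i < values.length - 1; i++) {
            int smallest = i;
            for (int j = i + 1; j < values.length; j++) {
                comparisons++;                    // the processing step being counted
                if (values[j] < values[smallest]) {
                    smallest = j;
                }
            }
            int temp = values[i];                 // swap the smallest remaining value into place
            values[i] = values[smallest];
            values[smallest] = temp;
        }
        return comparisons;
    }

    public static void main(String[] args) {
        int[] values = {42, 7, 19, 3, 25};
        long comparisons = sortAndCount(values);
        System.out.println(java.util.Arrays.toString(values)
                + " sorted using " + comparisons + " comparisons");
    }
}
```

For this particular algorithm the count depends only on the number of values: five values always take 4 + 3 + 2 + 1 = 10 comparisons, whatever their initial order.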

KEY CONCEPT

A growth function shows time or space utilization relative to the problem size.

A growth function shows the relationship between the size of the problem (n) and the value we hope to optimize. This function represents the time complexity or space complexity of the algorithm.

The growth function for our second dishwashing algorithm is

$$
t(n) = 15n^2 + 45n
$$

However, it is not typically necessary to know the exact growth function for an algorithm. Instead, we are mainly interested in the asymptotic complexity of an algorithm. That is, we want to focus on the general nature of the function as n increases. This characteristic is based on the dominant term of the expression, that is, the term that increases most quickly as n increases. As n gets very large, the value of the dishwashing growth function is dominated by the $n^2$ term because the $n^2$ term grows much faster than the n term. The constants, in this case 15 and 45, and the secondary term, in this case 45n, quickly become irrelevant as n increases. That is to say, the value of $n^2$ dominates the growth in the value of the expression.

The table in Figure 2.1 shows how the two terms and the value of the expression grow. As you can see from the table, as n gets larger, the $15n^2$ term dominates the value of the expression. It is important to note that the 45n term is larger for very small values of n (here, for n less than 3). Saying that a term is the dominant term as n gets large does not mean that it is larger than the other terms for all values of n.


| Number of dishes (n) | $15n^2$ | $45n$ | $15n^2 + 45n$ |
|---:|---:|---:|---:|
| 1 | 15 | 45 | 60 |
| 2 | 60 | 90 | 150 |
| 5 | 375 | 225 | 600 |
| 10 | 1,500 | 450 | 1,950 |
| 100 | 150,000 | 4,500 | 154,500 |
| 1,000 | 15,000,000 | 45,000 | 15,045,000 |
| 10,000 | 1,500,000,000 | 450,000 | 1,500,450,000 |
| 100,000 | 150,000,000,000 | 4,500,000 | 150,004,500,000 |
| 1,000,000 | 15,000,000,000,000 | 45,000,000 | 15,000,045,000,000 |
| 10,000,000 | 1,500,000,000,000,000 | 450,000,000 | 1,500,000,450,000,000 |

FIGURE 2.1 Comparison of terms in growth function
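One way to see the dominant term take over is to compute what fraction of the total comes from the quadratic term. The sketch below does this for the dishwashing growth function; the class and method names are hypothetical.

```java
class DominantTerm {
    static long quadraticTerm(long n) {
        return 15 * n * n;
    }

    static long linearTerm(long n) {
        return 45 * n;
    }

    // Fraction of t(n) = 15n^2 + 45n that is contributed by the 15n^2 term.
    static double quadraticShare(long n) {
        return (double) quadraticTerm(n) / (quadraticTerm(n) + linearTerm(n));
    }

    public static void main(String[] args) {
        for (long n : new long[] {1, 2, 3, 10, 100, 1000}) {
            System.out.printf("n = %d: share of 15n^2 = %.4f%n", n, quadraticShare(n));
        }
    }
}
```

The two terms are equal at n = 3 (both are 135), and beyond that point the quadratic term's share climbs steadily toward 1.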

The asymptotic complexity is called the order of the algorithm. Thus, our second dishwashing algorithm is said to have order $n^2$ time complexity, written $O(n^2)$. Our first, more efficient dishwashing example, with growth function $t(n) = 60n$, would have order n, written $O(n)$. Thus the reason for the difference between our $O(n)$ original algorithm and our $O(n^2)$ sloppy algorithm is the fact that each dish will have to be dried multiple times.

This notation is referred to as O() or Big-Oh notation. A growth function that executes in constant time regardless of the size of the problem is said to have order 1, written $O(1)$. In general, we are only concerned with executable statements in a program or algorithm in determining its growth function and efficiency. Keep in mind, however, that some declarations may include initializations and some of these may be complex enough to factor into the efficiency of an algorithm.

As an example, assignment statements and if statements that are only executed once regardless of the size of the problem are $O(1)$. Therefore, it does not matter how many of those you string together; it is still $O(1)$. Loops and method calls may result in higher order growth functions because they may result in a statement or series of statements being executed more than once based on the size of the problem. We will discuss these separately in later sections of this chapter. Figure 2.2 shows several growth functions and their asymptotic complexity.

KEY CONCEPT

The order of an algorithm is found by eliminating constants and all but the dominant term in the algorithm's growth function.

| Growth Function | Order | Label |
|---|---|---|
| $t(n) = 17$ | $O(1)$ | constant |
| $t(n) = 3 \log n$ | $O(\log n)$ | logarithmic |
| $t(n) = 20n - 4$ | $O(n)$ | linear |
| $t(n) = 12n \log n + 100n$ | $O(n \log n)$ | n log n |
| $t(n) = 3n^2 + 5n - 2$ | $O(n^2)$ | quadratic |
| $t(n) = 8n^3 + 3n^2$ | $O(n^3)$ | cubic |
| $t(n) = 2^n + 18n^2 + 3n$ | $O(2^n)$ | exponential |

FIGURE 2.2 Some growth functions and their asymptotic complexity

More formally, saying that the growth function $t(n) = 15n^2 + 45n$ is $O(n^2)$ means that there exists a constant m and some value of n ($n_0$), such that $t(n) \le m n^2$ for all $n > n_0$. Another way of stating this is that the order of an algorithm provides an upper bound to its growth function. It is also important to note that there are other related notations such as omega ($\Omega$), which refers to a function that provides a lower bound, and theta ($\Theta$), which refers to a function that provides both an upper and lower bound. We will focus our discussion on order.

KEY CONCEPT

The order of an algorithm provides an upper bound to the algorithm's growth function.
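As a worked instance of the formal definition, one possible choice of constants for the dishwashing growth function is $m = 60$ and $n_0 = 1$. These particular values are picked here for convenience; many other pairs work equally well. Since $n \le n^2$ whenever $n \ge 1$:

```latex
\begin{aligned}
t(n) &= 15n^2 + 45n \\
&\le 15n^2 + 45n^2 \qquad \text{for all } n \ge 1 \\
&= 60n^2
\end{aligned}
```

So $t(n) \le m n^2$ holds for all $n > n_0$ with $m = 60$ and $n_0 = 1$, which is exactly what it means for $t(n)$ to be $O(n^2)$.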

Because the order of the function is the key factor, the other terms and constants are often not even mentioned. All algorithms within a given order are considered to be generally equivalent in terms of efficiency. For example, while two algorithms to accomplish the same task may have different growth functions, if they are both $O(n^2)$ then they are considered to be roughly equivalent with respect to efficiency.

2.3 Comparing Growth Functions

One might assume that, with the advances in the speed of processors and the availability of large amounts of inexpensive memory, algorithm analysis would no longer be necessary. However, nothing could be further from the truth. Processor speed and memory cannot make up for the differences in efficiency of algorithms. Keep in mind that in our previous discussion we have been eliminating constants as irrelevant when discussing the order of an algorithm. Increasing processor speed simply changes a constant factor in the growth function. When possible, finding a more efficient algorithm is a better solution than finding a faster processor.

Another way of looking at the effect of algorithm complexity was proposed by Aho, Hopcroft, and Ullman (1974). If a system can currently handle a problem of size n in a given time period, what happens to the allowable size of the problem if we increase the speed of the processor tenfold? As shown in Figure 2.3, the linear case is relatively simple. Algorithm A, with a linear time complexity of n, is indeed improved by a factor of 10, meaning that this algorithm can process 10 times the data in the same amount of time given a tenfold speedup of the processor. However, algorithm B, with a time complexity of $n^2$, is only improved by a factor of 3.16. Why do we not get the full tenfold increase in problem size? Because the complexity of algorithm B is $n^2$, our effective speedup is only the square root of 10, or 3.16.


| Algorithm | Time Complexity | Max Problem Size Before Speedup | Max Problem Size After Speedup |
|---|---|---|---|
| A | $n$ | $s_1$ | $10s_1$ |
| B | $n^2$ | $s_2$ | $3.16s_2$ |
| C | $n^3$ | $s_3$ | $2.15s_3$ |
| D | $2^n$ | $s_4$ | $s_4 + 3.3$ |

FIGURE 2.3 Increase in problem size with a tenfold increase in processor speed

Similarly, algorithm C, with complexity $n^3$, is only improved by a factor of 2.15, or the cube root of 10. For algorithms with exponential complexity like algorithm D, in which the size variable is in the exponent of the complexity term, the situation is far worse. In this case the increase is $\log_2$ of the speedup factor, or in this case $\log_2 10 \approx 3.3$. Note this is not a factor of 3.3, but the original problem size plus 3.3. In the grand scheme of things, if an algorithm is inefficient, speeding up the processor will not help.

KEY CONCEPT

If the algorithm is inefficient, a faster processor will not help in the long run.
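The figures 10, 3.16, 2.15, and 3.3 follow from solving for the new problem size when the available number of steps is multiplied by the speedup. The sketch below reproduces them; the class and method names are hypothetical, and it assumes the growth function is exactly $n^k$ or $2^n$ with no lower-order terms.

```java
class SpeedupGain {
    // For complexity n^degree: if s^degree steps fit in the time budget before,
    // then (factor * s)^degree = speedup * s^degree afterwards, so the problem
    // size is MULTIPLIED by speedup^(1/degree).
    static double polynomialFactor(double speedup, int degree) {
        return Math.pow(speedup, 1.0 / degree);
    }

    // For complexity 2^n: 2^(s + d) = speedup * 2^s, so the problem size only
    // has d = log2(speedup) ADDED to it.
    static double exponentialIncrement(double speedup) {
        return Math.log(speedup) / Math.log(2);
    }

    public static void main(String[] args) {
        double speedup = 10.0;
        System.out.printf("A (n):   size x %.2f%n", polynomialFactor(speedup, 1));
        System.out.printf("B (n^2): size x %.2f%n", polynomialFactor(speedup, 2));
        System.out.printf("C (n^3): size x %.2f%n", polynomialFactor(speedup, 3));
        System.out.printf("D (2^n): size + %.2f%n", exponentialIncrement(speedup));
    }
}
```

The distinction between a multiplicative factor and an additive increment is the point of the comparison: for the exponential algorithm, even a much larger speedup adds only a handful of items to the largest problem that can be handled.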

Figure 2.4 illustrates various growth functions graphically for relatively small values of n. Note that when n is small, there is little difference between the algorithms. That is, if you can guarantee a very small problem size (5 or less), it doesn't really matter which algorithm is used. However, notice that in Figure 2.5, as n gets very large, the differences between the growth functions become obvious.

[Plot: time (0 to 500) against input size N (0 to 25) for log n, n, n log n, n^2, n^3, and 2^n]

FIGURE 2.4 Comparison of typical growth functions for small values of n

2.4 Determining Time Complexity

Analyzing Loop Execution

To determine the order of an algorithm, we have to determine how often a particular statement or set of statements gets executed. Therefore, we often have to determine how many times the body of a loop is executed. To analyze loop execution, first determine the order of the body of the loop, and then multiply that by the number of times the loop will execute relative to n. Keep in mind that n represents the problem size.

KEY CONCEPT

Analyzing algorithm complexity often requires analyzing the execution of loops.

[Plot: time (0 to 200,000) against input size N (0 to 500) for log n, n, n log n, n^2, n^3, and 2^n]

FIGURE 2.5 Comparison of typical growth functions for large values of n

Assuming that the body of a loop is $O(1)$, then a loop such as this:

    for (int count = 0; count < n; count++)
    {
        /* some sequence of O(1) steps */
    }

would have $O(n)$ time complexity. This is due to the fact that the body of the loop has $O(1)$ complexity but is executed n times by the loop structure. In general, if a loop structure steps through n items in a linear fashion and the body of the loop is $O(1)$, then the loop is $O(n)$. Even in a case where the loop is designed to skip some number of elements, as long as the progression of elements to skip is linear, the loop is still $O(n)$. For example, if the preceding loop skipped every other number (e.g. count += 2), the growth function of the loop would be n/2, but since constants don't affect the asymptotic complexity, the order is still $O(n)$.
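The claim that skipping elements changes only the constant can be observed by counting loop iterations directly. In this sketch the `steps` counter stands in for the $O(1)$ loop body, and the class and method names are hypothetical.

```java
class LinearLoops {
    // Visits every value of count from 0 to n - 1: n iterations.
    static int everyElement(int n) {
        int steps = 0;
        for (int count = 0; count < n; count++) {
            steps++;
        }
        return steps;
    }

    // Visits every other value of count: about n/2 iterations.
    static int everyOtherElement(int n) {
        int steps = 0;
        for (int count = 0; count < n; count += 2) {
            steps++;
        }
        return steps;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.println("n = " + n + ": " + everyElement(n)
                    + " vs " + everyOtherElement(n) + " iterations");
        }
    }
}
```

Both counts grow in direct proportion to n; doubling n doubles each of them, which is what makes both loops $O(n)$.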

Let's look at another example. If the progression of the loop is logarithmic such as the following:

    int count = 1;
    while (count < n)
    {
        count *= 2;
        /* some sequence of O(1) steps */
    }

then the loop is said to be $O(\log n)$. Note that when we use a logarithm in an algorithm complexity, we almost always mean log base 2. This can be explicitly written as $O(\log_2 n)$. Since each time through the loop the value of count is multiplied by 2, the number of times the loop is executed is $\log_2 n$ (rounded up when n is not a power of 2).

KEY CONCEPT

The time complexity of a loop is found by multiplying the complexity of the body of the loop by how many times the loop will execute.
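The logarithmic iteration count of the doubling loop can be confirmed by counting passes. This is a minimal sketch with hypothetical names; `count` is declared as a `long` here (an assumption beyond the loop shown in the text) so that repeated doubling cannot overflow for large values of n.

```java
class DoublingLoop {
    // Returns how many times the body of the doubling loop runs for a given n.
    static int iterations(int n) {
        int steps = 0;
        long count = 1;
        while (count < n) {
            count *= 2;
            steps++;          // stands in for the O(1) loop body
        }
        return steps;
    }

    public static void main(String[] args) {
        for (int n : new int[] {8, 9, 1024, 1000000}) {
            System.out.println("n = " + n + ": " + iterations(n) + " iterations");
        }
    }
}
```

Multiplying n by a thousand (from 1,024 to about a million) adds only ten more iterations, which is the characteristic behavior of logarithmic growth.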

Nested Loops

A slightly more interesting scenario arises when loops are nested. In this case, we must multiply the complexity of the outer loop by the complexity of the inner loop to find the resulting complexity. For example, the following nested loops:

    for (int count = 0; count < n; count++)
    {
        for (int count2 = 0; count2 < n; count2++)
        {
            /* some sequence of O(1) steps */
        }
    }

would have complexity $O(n^2)$. The body of the inner loop is $O(1)$ and the inner loop will execute n times. This means the inner loop is $O(n)$. Multiplying this result by the number of times the outer loop will execute (n) results in $O(n^2)$.

KEY CONCEPT

The analysis of nested loops must take into account both the inner and outer loops.

What is the complexity of the following nested loop?

    for (int count = 0; count < n; count++)
    {
        for (int count2 = count; count2 < n; count2++)
        {
            /* some sequence of O(1) steps */
        }
    }

In this case, the inner loop index is initialized to the current value of the index for the outer loop. The outer loop executes n times. The inner loop executes n times the first time, n − 1 times the second time, and so on, for a total of $n + (n-1) + \cdots + 1 = n(n+1)/2$ executions of the loop body. However, remember that we are only interested in the dominant term, not in constants or any lesser terms. If the progression is linear, regardless of whether some elements are skipped, the order is still $O(n)$. Thus the resulting complexity for this code is $O(n^2)$.
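Counting the executions of the innermost body makes the comparison between the two nested-loop shapes concrete. In this sketch (hypothetical class and method names) the `steps` counter stands in for the $O(1)$ body.

```java
class NestedLoopCount {
    // Inner loop always runs from 0 to n - 1: n * n executions of the body.
    static long square(int n) {
        long steps = 0;
        for (int count = 0; count < n; count++) {
            for (int count2 = 0; count2 < n; count2++) {
                steps++;
            }
        }
        return steps;
    }

    // Inner loop starts at the outer index: n + (n - 1) + ... + 1 executions.
    static long triangular(int n) {
        long steps = 0;
        for (int count = 0; count < n; count++) {
            for (int count2 = count; count2 < n; count2++) {
                steps++;
            }
        }
        return steps;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.println("n = " + n + ": " + square(n)
                    + " vs " + triangular(n) + " steps");
        }
    }
}
```

The second version does roughly half the work of the first, but half of $n^2$ is still a constant multiple of $n^2$, so both are $O(n^2)$.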

Method Calls

Let's suppose that we have the following segment of code:

    for (int count = 0; count < n; count++)
    {
        printsum(count);
    }

We know from our previous discussion that we find the order of the loop by multiplying the order of the body of the loop by the number of times the loop will execute. In this case, however, the body of the loop is a method call. Therefore, we must first determine the order of the method before we can determine the order of the code segment. Let's suppose that the purpose of the method is to print the sum of the integers from 1 to n each time it is called. We might be tempted to create a brute force method such as the following:

    public void printsum(int count)
    {
        int sum = 0;
        for (int i = 1; i <= count; i++)   // adds 1 through count
            sum += i;
        System.out.println(sum);
    }


What is the time complexity of this printsum method? Keep in mind that only executable statements contribute to the time complexity, so in this case all of the executable statements are $O(1)$ except for the loop. The loop, on the other hand, is $O(n)$ and thus the method itself is $O(n)$. Now to compute the time complexity of the original loop that called the method, we simply multiply the complexity of the method, which is the body of the loop, by the number of times the loop will execute. Our result, then, is $O(n^2)$ using this implementation of the printsum method.

However, if you recall, we know from our earlier discussion that we do not have to use a loop to calculate the sum of the numbers from 1 to n. In fact, we know that $\sum_{i=1}^{n} i = n(n + 1)/2$. Now let's rewrite our printsum method and see what happens to our time complexity:

    public void printsum(int count)
    {
        int sum = count * (count + 1) / 2;   // closed form for 1 + 2 + ... + count
        System.out.println(sum);
    }

Now the time complexity of the printsum method is made up of an assignment statement, which is $O(1)$, and a print statement, which is also $O(1)$. The result of this change is that the time complexity of the printsum method is now $O(1)$, meaning that the loop that calls this method now goes from being $O(n^2)$ to $O(n)$. We know from our earlier discussion and from Figure 2.5 that this is a very significant improvement. Once again we see that there is a difference between delivering correct results and doing so efficiently.
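The two ways of computing the sum can be placed side by side to confirm that they agree. This sketch uses hypothetical method names and returns the sum rather than printing it so the results can be compared; it also uses `long` arithmetic, an assumption beyond the text, because `count * (count + 1)` overflows an `int` once count exceeds 46,340.

```java
class SumComparison {
    // Brute force: one addition per integer from 1 to n, so O(n).
    static long sumByLoop(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    // Closed form n(n + 1)/2: a fixed number of operations, so O(1).
    static long sumByFormula(int n) {
        return (long) n * ((long) n + 1) / 2;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 100000}) {
            System.out.println("n = " + n + ": loop " + sumByLoop(n)
                    + ", formula " + sumByFormula(n));
        }
    }
}
```

Both methods return the same value for every n; only the amount of work needed to produce it differs.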

What if the body of a method is made up of multiple method calls and loops? Consider the following code using our printsum method above:

    public void sample(int n)
    {
        printsum(n);                                      /* this method call is O(1) */

        for (int count = 0; count < n; count++)           /* this loop is O(n) */
            printsum(count);

        for (int count = 0; count < n; count++)           /* this loop is O(n^2) */
            for (int count2 = 0; count2 < n; count2++)
                System.out.println(count + ", " + count2);
    }

The initial call to the printsum method with the parameter n is $O(1)$ since the method is $O(1)$. The for loop containing the call to the printsum method with the parameter count is $O(n)$ since the method is $O(1)$ and the loop executes n times. The nested loops are $O(n^2)$ since the inner loop will execute n times each time the outer loop executes and the outer loop will also execute n times. The entire method is then $O(n^2)$ since only the dominant term matters.

More formally, the growth function for the method sample is given by:

$$
f(n) = 1 + n + n^2
$$

Then given that we eliminate constants and all but the dominant term, the time complexity is $O(n^2)$.
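The growth function for sample can be reproduced by replacing each $O(1)$ action with an increment of a counter. This is a sketch under the assumption that every call to the $O(1)$ printsum and every print statement counts as exactly one step; the class and method names are hypothetical.

```java
class SampleGrowth {
    // Mirrors the structure of the sample method, counting one step per O(1) action.
    static long countSteps(int n) {
        long steps = 0;

        steps++;                                      // the single printsum(n) call

        for (int count = 0; count < n; count++) {
            steps++;                                  // one printsum(count) call per pass
        }

        for (int count = 0; count < n; count++) {
            for (int count2 = 0; count2 < n; count2++) {
                steps++;                              // one println per inner pass
            }
        }
        return steps;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.println("n = " + n + ": " + countSteps(n) + " steps");
        }
    }
}
```

For n = 1000 the nested loops account for 1,000,000 of the 1,001,001 steps, which shows why only the dominant term is kept.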

There is one additional issue to deal with when analyzing the time complexity of method calls and that is recursion, the situation when a method calls itself. We will save that discussion for Chapter 7.


Summary of Key Concepts

• Software must make efficient use of resources such as CPU time and memory.
• Algorithm analysis is a fundamental computer science topic.
• A growth function shows time or space utilization relative to the problem size.
• The order of an algorithm is found by eliminating constants and all but the dominant term in the algorithm's growth function.
• The order of an algorithm provides an upper bound to the algorithm's growth function.
• If the algorithm is inefficient, a faster processor will not help in the long run.
• Analyzing algorithm complexity often requires analyzing the execution of loops.
• The time complexity of a loop is found by multiplying the complexity of the body of the loop by how many times the loop will execute.
• The analysis of nested loops must take into account both the inner and outer loops.