
Introduction to Monte-Carlo Methods

Pascal Bianchi

14/11/2011


Outline

About this week

Introduction to MC methods

Convergence of random variables

Confidence intervals

Approximation of integrals: Monte-Carlo versus deterministic

Some applications

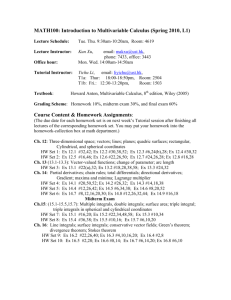

Telecom ParisTech and the group STA

- Research group “Statistics and Applications (STA)” in charge of this course
- 16 senior researchers in the various fields related to statistics (machine learning, time series analysis, distributed algorithms, statistical signal processing, MC methods, ...)
- Monte-Carlo methods: research theme led by Éric Moulines and Gersende Fort
  Applications to finance, astronomy and robotics

Schedule of the week

Mornings: Lectures
Afternoons: Labs

- Today: basics in probability theory + introduction to MC methods
- Tuesday: how to generate random variables with a target distribution
  An application to physics
- Wednesday / Thursday: how to reduce the variance of the estimation error
  An application to the computation of the waiting time in a queue
- Friday morning: MC methods for statistical inference in Hidden Markov Models
  An application to self-localization in robotics
- Friday afternoon: Seminar by G. Fort
  Recent advances in MC methods and applications

Practical matters (1/2)

All materials are available at http://perso.telecom-paristech.fr/~bianchi/athens/athens.html

Evaluation: Each student receives a grade from 0 to 20 points based on four Lab reports and on a short quiz. The quiz counts for 7 points, the Lab reports for 13 points.

Quiz: The quiz will take place on Friday afternoon. It will consist of a short series of brief questions on the course. Documents are not permitted during the quiz.

Practical matters (2/2)

Lab reports: There are four Labs. For each Lab, the person in charge is:
- Lab I: Jérémie Jakubowicz, jakubowi@telecom-paristech.fr
- Lab II: Ian Flint, ian.flint@telecom-paristech.fr
- Lab III: Pascal Bianchi, pascal.bianchi@telecom-paristech.fr
- Lab IV: Ian Flint, ian.flint@telecom-paristech.fr

Students work in pairs during each Lab.
Each pair of students provides four Lab reports, one for each Lab.
Each report must be sent by email to the person in charge of the Lab before 28/11.
Please use email subjects of the form « ATHENS / Lab II / Name1 - Name2 »

Instructions for Lab reports
The numerical results should be commented on. Great attention will be paid to the relevance of the comments. It is recommended to send the source code in a file separate from the report.

Introduction to MC methods

Monte-Carlo (MC) methods: general definition

MC methods refer to methods that make it possible to:
- sample from a target distribution $\mu$
- use these samples to approximate numerical quantities
- control the approximation error

Applications to statistical physics, molecular dynamics, finance, biology, astronomy, robotics, signal and image processing, ...

Buffon’s needles (1/2)

1777: one of the oldest and most celebrated examples of Monte-Carlo simulation

Consider a floor with parallel lines, and let $r$ denote the distance between two adjacent lines.
Throw $n$ needles of length $\ell \le r$ on the floor.
Count the number $N_n$ of needles intersecting a line.
Deduce an estimate of $\pi = 3.14159\ldots$

Buffon’s needles (2/2)

Define $X_i = 1$ if the $i$-th needle intersects a line, and $X_i = 0$ otherwise.
This afternoon, you will prove that $P(X_i = 1) = \frac{2\ell}{\pi r}$.
We can estimate the probability $P(X_i = 1)$ by
$$\hat\theta_n = \frac{N_n}{n} = \frac{1}{n}\sum_{i=1}^{n} X_i$$
Thus an estimate of $\pi$ is given by
$$\hat\pi_n = \frac{2\ell}{\hat\theta_n\, r}$$
Performance? Optimal choice of $\ell$, $r$?
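A minimal simulation of the experiment, as a sketch under stated assumptions: the distance $y$ from the needle's centre to the nearest line is taken uniform on $[0, r/2]$ and the needle's angle $\varphi$ with the lines uniform on $[0, \pi/2]$, so that a needle crosses a line iff $y \le \frac{\ell}{2}\sin\varphi$. The function name `buffon_estimate` and the defaults $\ell = 1$, $r = 2$ are illustrative choices. To avoid using $\pi$ inside a simulation meant to estimate it, the angle is drawn as the polar angle of a uniform point in the unit quarter disc (rejection sampling).

```python
import math
import random

def buffon_estimate(n, ell=1.0, r=2.0, seed=0):
    """Hypothetical helper: estimate pi by throwing n needles of length ell <= r."""
    if ell > r:
        raise ValueError("the formula P(X_i = 1) = 2 ell / (pi r) requires ell <= r")
    rng = random.Random(seed)
    hits = 0  # N_n: number of needles intersecting a line
    for _ in range(n):
        # Distance from the needle's centre to the nearest line: uniform on [0, r/2].
        y = rng.uniform(0.0, r / 2)
        # Angle uniform on [0, pi/2]: polar angle of a uniform point of the quarter disc.
        while True:
            u, v = rng.random(), rng.random()
            rho = math.hypot(u, v)
            if 0.0 < rho <= 1.0:
                break
        sin_phi = v / rho
        # X_i = 1 iff the half-needle, projected orthogonally to the lines, reaches a line.
        if y <= (ell / 2) * sin_phi:
            hits += 1
    if hits == 0:
        raise ValueError("no intersection observed; increase n")
    theta_hat = hits / n  # estimate of P(X_i = 1)
    return 2 * ell / (theta_hat * r)  # pi_hat_n

pi_hat = buffon_estimate(100_000)
print(pi_hat)
```

With $\ell = 1$ and $r = 2$, $P(X_i = 1) = 1/\pi$; rerunning with other values of `ell` and `r` is one way to explore the "optimal choice" question empirically.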

General case: evaluating integrals

Estimate
$$\theta = \int f(x)\, d\mu(x)$$
where $\mu$ is a probability measure on $\mathbb{R}^d$.
Assume that we are able to draw i.i.d. samples from $\mu$:
$$X_1, X_2, X_3, \ldots \overset{iid}{\sim} \mu$$
A MC estimator is given by
$$\hat\theta_n = \frac{1}{n}\sum_{i=1}^{n} f(X_i)$$
i.e., the expectation is approximated by the empirical mean.
Similarly, an estimator of $g(\theta)$ is given by $g(\hat\theta_n)$.
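A short sketch of the standard MC estimator, assuming a user-supplied function `sample(rng)` that returns one draw from $\mu$ (this interface, and the name `mc_estimate`, are illustrative). The example target $\theta = E[e^X]$ with $X \sim \mathcal{U}(0,1)$ is chosen because its exact value $e - 1$ is known; the plug-in estimate of $g(\theta)$ uses $g = \log$.

```python
import math
import random

def mc_estimate(f, sample, n, seed=0):
    """Standard MC estimator: empirical mean of f(X_1), ..., f(X_n) with X_i iid ~ mu.

    `sample(rng)` is an assumed user-supplied function returning one draw from mu.
    """
    rng = random.Random(seed)
    return sum(f(sample(rng)) for _ in range(n)) / n

# Illustrative target: theta = E[exp(X)] with X ~ U(0, 1); exact value e - 1.
theta_hat = mc_estimate(math.exp, lambda rng: rng.random(), 100_000)
# Plug-in estimator g(theta_hat) of g(theta) for g = log.
g_hat = math.log(theta_hat)
print(theta_hat, g_hat)
```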

Questions

- How to sample from an arbitrary distribution $\mu$? (cf. tomorrow’s lecture)
- Is the estimator consistent, i.e., does it converge to the true value as $n \to \infty$?
- What information do we have about the estimation error?
- Can we improve the estimator, i.e., reduce its variance? (cf. Wednesday/Thursday’s lectures)

Convergence of random variables

Almost sure convergence and convergence in probability

$(\Omega, \mathcal{F}, P)$ a probability space
$(X_n)_{n\ge 1}$ a sequence of random variables (r.v.) on $\mathbb{R}^d$

- $(X_n)_{n\ge 1}$ converges almost surely (a.s.) to a r.v. $X$ if
  $$\lim_{n\to\infty} X_n(\omega) = X(\omega)$$
  for all $\omega$ outside a set of $P$-measure zero.
  Notation: $X_n \xrightarrow{a.s.} X$
- $(X_n)_{n\ge 1}$ converges in probability to $X$ if
  $$\forall \varepsilon > 0, \quad \lim_{n\to\infty} P(\|X_n - X\| > \varepsilon) = 0$$
  Notation: $X_n \xrightarrow{P} X$

Property: Convergence a.s. implies convergence in probability.

Theorem of continuity: Let $f$ be a continuous function.
- $X_n \xrightarrow{a.s.} X$ implies $f(X_n) \xrightarrow{a.s.} f(X)$
- $X_n \xrightarrow{P} X$ implies $f(X_n) \xrightarrow{P} f(X)$

Law of Large Numbers (LLN)

Theorem: Let $(X_n)_{n\ge 1}$ be an iid sequence such that $E\|X_1\| < \infty$. Then,
$$\frac{1}{n}\sum_{i=1}^{n} X_i \xrightarrow{a.s.} E(X_1)$$
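The LLN can be watched along a single trajectory by computing the running empirical means $\frac{1}{n}\sum_{i\le n} X_i$. The sketch below assumes fair die rolls as the iid sequence (an illustrative choice with $E(X_1) = 3.5$); the helper name `running_means` is hypothetical.

```python
import random

def running_means(draws):
    """Return the empirical means (1/n) * sum_{i<=n} X_i for n = 1, 2, ..."""
    means, total = [], 0.0
    for n, x in enumerate(draws, start=1):
        total += x
        means.append(total / n)
    return means

rng = random.Random(1)
# Illustrative iid sequence: fair die rolls, E(X_1) = 3.5.
rolls = [rng.randint(1, 6) for _ in range(100_000)]
means = running_means(rolls)
for n in (10, 1_000, 100_000):
    print(n, means[n - 1])
```

Early values fluctuate noticeably, while later ones settle near 3.5 along the trajectory.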

Example #1: Convergence of the standard MC estimator

Analyze the MC estimator
$$\hat\theta_n = \frac{1}{n}\sum_{i=1}^{n} f(X_i)$$
where the $X_i$'s are iid with distribution $\mu$. Assume that $E\|f(X_1)\| < \infty$.

1. The estimator is unbiased: $E\hat\theta_n = \theta$.
2. By the LLN, $\hat\theta_n$ converges a.s. to $\theta = E f(X_1)$.
   The sequence of estimators $\hat\theta_n$ is said to be strongly consistent.
   Similarly, $g(\hat\theta_n)$ is a strongly consistent estimator of $g(\theta)$ if $g$ is continuous at $\theta$.
3. However, we still know nothing about the fluctuations of the estimation error.

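Example #2: Approximating densities by histograms

Let $X_1, \ldots, X_n$ be $n$ real iid samples with a density $p(x)$ that is Lipschitz-continuous on $[a, b]$.
Fix $k \ge 1$ and define $a_\ell = a + \ell\,\frac{b-a}{k}$ for $\ell = 0, \ldots, k$.
Consider the histogram:
$$h_n(x) = \sum_{\ell=1}^{k} h_n^{(\ell)}\, \mathbb{1}_{[a_{\ell-1}, a_\ell]}(x)$$
where $h_n^{(\ell)} = \mathrm{card}\{i = 1, \ldots, n : X_i \in [a_{\ell-1}, a_\ell]\}$.
By the LLN applied to the indicators $\mathbb{1}_{[a_{\ell-1}, a_\ell]}(X_i)$, for each $\ell$,
$$\frac{h_n^{(\ell)}}{n} \xrightarrow{a.s.} \int_{a_{\ell-1}}^{a_\ell} p(t)\,dt$$
Noting that $\int_{a_{\ell-1}}^{a_\ell} p(t)\,dt = \frac{b-a}{k}\,p(a_{\ell-1}) + O(1/k^2)$, we conclude that
$$\forall x \in [a, b], \quad \frac{k}{n(b-a)}\, h_n(x) \xrightarrow{a.s.} p(x) + O(1/k)$$
Normalized histograms can be interpreted as an approximation of the pdf.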
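A sketch of the normalized histogram $\frac{k}{n(b-a)} h_n$, assuming half-open bins $[a_{\ell-1}, a_\ell)$ with $b$ put in the last bin (bin boundaries have probability zero, so this does not change the limit). The target density $p(x) = 6x(1-x)$ on $[0,1]$, i.e. Beta(2, 2), and the helper name `normalized_histogram` are illustrative choices.

```python
import random

def normalized_histogram(samples, a, b, k):
    """Return the k bin heights k / (n (b - a)) * h_n^(l), l = 1, ..., k."""
    n = len(samples)
    width = (b - a) / k
    counts = [0] * k  # counts[l - 1] = h_n^(l)
    for x in samples:
        if a <= x <= b:
            # Half-open bins [a_{l-1}, a_l); the right endpoint b goes to the last bin.
            l = min(int((x - a) / width), k - 1)
            counts[l] += 1
    return [c / (n * width) for c in counts]

rng = random.Random(0)
# Illustrative target: Beta(2, 2) on [0, 1], with density p(x) = 6 x (1 - x).
samples = [rng.betavariate(2, 2) for _ in range(200_000)]
heights = normalized_histogram(samples, 0.0, 1.0, 10)
for l, h in enumerate(heights):
    mid = (l + 0.5) / 10
    print(f"bin {l + 1}: height {h:.3f}, p(midpoint) {6 * mid * (1 - mid):.3f}")
```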

Convergence in distribution

Let $F_X$ denote the distribution function of a r.v. $X$.

Definition: A sequence $(X_n)_{n\ge 1}$ is said to converge in distribution (or in law) to a r.v. $X$ if
$$\lim_{n\to\infty} F_{X_n}(x) = F_X(x)$$
at any continuity point $x$ of $F_X$.
Notation: $X_n \xrightarrow{\mathcal{L}} X$

Property: $X_n \xrightarrow{P} X$ implies $X_n \xrightarrow{\mathcal{L}} X$

Portmanteau theorem: The following statements are equivalent:
1. $X_n \xrightarrow{\mathcal{L}} X$
2. For any bounded continuous function $f : \mathbb{R}^d \to \mathbb{R}$, $E(f(X_n)) \to E(f(X))$
3. For any Borel set $H$ such that $P(X \in \partial H) = 0$, $P(X_n \in H) \to P(X \in H)$

Theorem of continuity: Let $f$ be continuous. Then, $X_n \xrightarrow{\mathcal{L}} X$ implies $f(X_n) \xrightarrow{\mathcal{L}} f(X)$.

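Slutsky’s Lemma

Assume that $X_n \xrightarrow{\mathcal{L}} X$ and $Y_n \xrightarrow{\mathcal{L}} c$ where $c$ is a constant. Then:
- $X_n + Y_n \xrightarrow{\mathcal{L}} X + c$
- $X_n Y_n \xrightarrow{\mathcal{L}} cX$
- $\dfrac{X_n}{Y_n} \xrightarrow{\mathcal{L}} \dfrac{X}{c}$ if $c \neq 0$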

Central Limit Theorem (CLT)

Theorem: Let $(X_n)_{n\ge 1}$ be an iid sequence such that $E(\|X_1\|^2) < \infty$.
Define $m = E(X_1)$ and $\Sigma = \mathrm{Cov}(X_1)$. Then,
$$\frac{1}{\sqrt{n}}\sum_{i=1}^{n} (X_i - m) \xrightarrow{\mathcal{L}} \mathcal{N}(0, \Sigma)$$
where $\mathcal{N}(0, \Sigma)$ stands for a Gaussian r.v. with zero mean and covariance $\Sigma$.
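A quick empirical check of the CLT in dimension 1, under illustrative assumptions: $X_i \sim \mathcal{U}(0,1)$, so $m = 1/2$ and $\Sigma = \sigma^2 = 1/12$, and we measure how often the standardized sum $\frac{1}{\sigma\sqrt{n}}\sum_i (X_i - m)$ falls in $[-1.96, 1.96]$, which has probability about 0.95 under $\mathcal{N}(0,1)$. The helper name `clt_coverage` is hypothetical.

```python
import math
import random

def clt_coverage(n, reps, seed=0):
    """Fraction of replicates where |n^{-1/2} sum_i (X_i - m)| / sigma <= 1.96.

    X_i ~ U(0, 1) is an illustrative choice: m = 1/2, sigma^2 = 1/12.
    """
    rng = random.Random(seed)
    m, sigma = 0.5, math.sqrt(1 / 12)
    inside = 0
    for _ in range(reps):
        s = sum(rng.random() for _ in range(n))
        z = (s - n * m) / (sigma * math.sqrt(n))  # standardized sum
        if abs(z) <= 1.96:
            inside += 1
    return inside / reps

coverage = clt_coverage(30, 20_000)
print(coverage)
```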

Confidence intervals

Control of the estimation error (1/2)

Example: Let $\hat\theta_n = \frac{1}{n}\sum_i f(X_i)$ be the standard MC estimate of $\theta = E(f(X_1))$.
We already know that the estimation error tends a.s. to zero when $n \to \infty$.

Ideal objective: For a given tolerance level $\delta$, find $n$ large enough to ensure that the error $\|\hat\theta_n - \theta\|$ does not exceed $\delta$.

Remark: MC methods are random by nature. The (random) error can always exceed a given $\delta$ with a small but nonzero probability. Thus, we should reformulate the problem as:

Find $n$ large enough to ensure that $P(\|\hat\theta_n - \theta\| > \delta)$ is less than $1 - \alpha$.

Control of the estimation error (2/2)

Assume $\theta \in \mathbb{R}$ for simplicity.

First solution: use upper bounds on the probability.
Example: the Chebyshev bound leads to
$$P(|\hat\theta_n - \theta| > \delta) \le \frac{1}{\delta^2}\, E(|\hat\theta_n - \theta|^2) = \frac{\sigma^2}{n\delta^2}$$
where $\sigma^2 = \mathrm{Var}(f(X_1))$.
It is sufficient to set $n$ larger than $\frac{\sigma^2}{(1-\alpha)\delta^2}$ to ensure that $P(|\hat\theta_n - \theta| > \delta) < 1 - \alpha$.

Two major drawbacks:
1. The bound is far from being tight. Finer bounds do exist, but in general, a pessimistic value of $n$ is to be expected.
2. As $\theta = E(f(X_1))$ is unknown, it is very likely that $\sigma^2$ is unknown as well.

Second solution: consider the asymptotic regime $n \to \infty$ and use the CLT.
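To make the "pessimistic value of $n$" concrete, the sketch below compares the Chebyshev sample size with the one suggested by the CLT, which requires the asymptotic half-width $a\sigma/\sqrt{n}$ to be at most $\delta$, i.e. $n \ge a^2\sigma^2/\delta^2$. Both functions take the tolerated failure probability (written $1-\alpha$ on this slide, and $\alpha$ in the confidence-interval slides); the numbers $\sigma^2 = 1$, $\delta = 0.01$ and a 5% failure probability are illustrative.

```python
import math
from statistics import NormalDist

def chebyshev_sample_size(sigma2, delta, fail_prob):
    """Smallest n with sigma2 / (n * delta**2) < fail_prob (Chebyshev bound)."""
    return math.floor(sigma2 / (fail_prob * delta**2)) + 1

def clt_sample_size(sigma2, delta, fail_prob):
    """n such that the asymptotic half-width a * sigma / sqrt(n) is at most delta."""
    a = NormalDist().inv_cdf(1 - fail_prob / 2)  # two-sided standard normal quantile
    return math.ceil(a**2 * sigma2 / delta**2)

n_cheb = chebyshev_sample_size(1.0, 0.01, 0.05)
n_clt = clt_sample_size(1.0, 0.01, 0.05)
print(n_cheb, n_clt)
```

Here Chebyshev asks for roughly five times more samples than the CLT-based rule, illustrating the first drawback; both formulas still require $\sigma^2$.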


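Asymptotic confidence intervals (1/2)

By the CLT,
$$\sqrt{n}\,(\hat\theta_n - \theta) = \frac{1}{\sqrt{n}}\sum_{i=1}^{n} \left(f(X_i) - \theta\right) \xrightarrow{\mathcal{L}} \mathcal{N}(0, \sigma^2)$$
Select $a$ such that
$$\frac{1}{\sqrt{2\pi}}\int_{-a}^{a} e^{-s^2/2}\, ds = 1 - \alpha$$
otherwise stated, $a$ is the quantile of the standard normal distribution at level $1 - \frac{\alpha}{2}$. We obtain:
$$P\left(\theta \in \left[\hat\theta_n - \frac{a\sigma}{\sqrt{n}},\ \hat\theta_n + \frac{a\sigma}{\sqrt{n}}\right]\right) \longrightarrow 1 - \alpha$$
The interval $\hat\theta_n \pm \frac{a\sigma}{\sqrt{n}}$ is called a 100(1-α)% asymptotic confidence interval.
Example: For a 95% confidence interval, set $a \simeq 1.96$.

Remark: The computation of this interval requires the knowledge of $\sigma^2 = \mathrm{Var}(f(X_1))$.
Again, as $\theta = E(f(X_1))$ is unknown, it is very likely that $\sigma^2$ is unknown as well.
Can we still compute a confidence interval?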

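Asymptotic confidence intervals (2/2)

The idea is to replace the unknown variance by its MC estimate:
$$\hat\sigma_n^2 = \frac{1}{n}\sum_{i=1}^{n} \left(f(X_i) - \hat\theta_n\right)^2$$
Since $\hat\sigma_n^2 = \frac{1}{n}\sum_{i=1}^{n} f(X_i)^2 - \hat\theta_n^2$, the LLN (applied to $f(X_i)$ and $f(X_i)^2$, assuming $E(f(X_1)^2) < \infty$) and the continuity theorem give $\hat\sigma_n^2 \xrightarrow{a.s.} \sigma^2$. In particular, $\hat\sigma_n^2 \xrightarrow{\mathcal{L}} \sigma^2$.
Writing
$$\frac{\sqrt{n}}{\hat\sigma_n}(\hat\theta_n - \theta) = \sqrt{\frac{\sigma^2}{\hat\sigma_n^2}}\ \frac{\sqrt{n}}{\sigma}(\hat\theta_n - \theta),$$
Slutsky’s Lemma and the CLT yield
$$\frac{\sqrt{n}}{\hat\sigma_n}(\hat\theta_n - \theta) \xrightarrow{\mathcal{L}} \mathcal{N}(0, 1)$$
Conclusion: The interval $\hat\theta_n \pm \frac{a\hat\sigma_n}{\sqrt{n}}$ is a 100(1-α)% asymptotic confidence interval.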
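A sketch of this interval, assuming again a user-supplied `sample(rng)` that draws from $\mu$ (the interface and the name `mc_confidence_interval` are illustrative). The quantile $a$ is obtained from Python's `statistics.NormalDist`, and the plug-in variance uses the $1/n$ normalization of the slide.

```python
import math
import random
from statistics import NormalDist

def mc_confidence_interval(f, sample, n, alpha=0.05, seed=0):
    """Asymptotic 100(1 - alpha)% interval theta_hat +/- a * sigma_hat / sqrt(n).

    `sample(rng)` is an assumed user-supplied function returning one draw from mu.
    """
    rng = random.Random(seed)
    values = [f(sample(rng)) for _ in range(n)]
    theta_hat = sum(values) / n
    # Plug-in variance estimate with the 1/n normalization used on the slide.
    sigma2_hat = sum((v - theta_hat) ** 2 for v in values) / n
    # a: standard normal quantile at level 1 - alpha/2 (about 1.96 for alpha = 0.05).
    a = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = a * math.sqrt(sigma2_hat / n)
    return theta_hat - half_width, theta_hat + half_width

# Illustrative use: theta = E[exp(X)] with X ~ U(0, 1), whose exact value e - 1 is known.
low, high = mc_confidence_interval(math.exp, lambda rng: rng.random(), 10_000)
print(low, high)
```

Repeating the experiment over many seeds, the fraction of intervals that contain $e - 1$ should be close to 95% when $n$ is large.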

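Delta Method

Theorem: Consider a sequence of random variables $(\hat\theta_n)_{n\ge 1}$ on $\mathbb{R}^d$ which satisfies
$$\sqrt{n}\,(\hat\theta_n - \theta) \xrightarrow{\mathcal{L}} Y$$
where $\theta \in \mathbb{R}^d$ and $Y$ is a r.v. on $\mathbb{R}^d$ (typically, a Gaussian r.v.).
Let $g : \mathbb{R}^d \to \mathbb{R}^d$ be a function differentiable at point $\theta$. Then,
$$\sqrt{n}\,(g(\hat\theta_n) - g(\theta)) \xrightarrow{\mathcal{L}} \nabla g(\theta)\, Y$$
where $\nabla g(\theta)$ is the Jacobian matrix of $g$ at point $\theta$.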

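Delta Method: Example of application

$$X_1, X_2, \ldots \overset{iid}{\sim} \mathcal{E}(\lambda)$$
where $\mathcal{E}(\lambda)$ is the exponential distribution with parameter $\lambda > 0$, with density $\lambda e^{-\lambda x}\, \mathbb{1}_{\mathbb{R}_+}(x)$.

Problem: estimate $\lambda$.

Recall that $E X_1 = \frac{1}{\lambda}$. Thus, $\bar X_n = \frac{1}{n}\sum_{i=1}^{n} X_i$ is the standard MC estimator of $\frac{1}{\lambda}$.
A natural estimate of $\lambda$ is therefore:
$$\hat\lambda_n = \frac{1}{\bar X_n}$$
1. $\hat\lambda_n$ is a consistent estimator of $\lambda$.
2. By the CLT, $\sqrt{n}\,(\bar X_n - \frac{1}{\lambda}) \xrightarrow{\mathcal{L}} \mathcal{N}(0, \frac{1}{\lambda^2})$. Thus, by the Delta method with $g(x) = 1/x$ and $g'(1/\lambda) = -\lambda^2$,
$$\sqrt{n}\,(\hat\lambda_n - \lambda) \xrightarrow{\mathcal{L}} \mathcal{N}(0, \lambda^2)$$
3. Can you find an asymptotic 100(1-α)% confidence interval?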
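The limit in point 2 can be checked by simulation: over many independent replicates, the empirical variance of $\sqrt{n}\,(\hat\lambda_n - \lambda)$ should be close to $\lambda^2$. The sketch below assumes $\lambda = 2$, $n = 200$ and 2000 replicates (illustrative values); `delta_method_variance` is a hypothetical helper name. It deliberately leaves question 3 to the reader.

```python
import math
import random
from statistics import fmean, pvariance

def delta_method_variance(lam, n, reps, seed=0):
    """Empirical variance of sqrt(n) * (lambda_hat_n - lambda) over `reps` replicates,
    where lambda_hat_n = 1 / mean(X_1, ..., X_n) and X_i ~ E(lambda)."""
    rng = random.Random(seed)
    z = []
    for _ in range(reps):
        x_bar = fmean(rng.expovariate(lam) for _ in range(n))  # MC estimate of 1/lambda
        lam_hat = 1.0 / x_bar
        z.append(math.sqrt(n) * (lam_hat - lam))
    return pvariance(z)

# Illustrative parameters: lambda = 2, so the Delta method predicts a variance near 4.
v = delta_method_variance(2.0, 200, 2_000)
print(v)
```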

Approximation of integrals: Monte-Carlo versus deterministic

Deterministic methods

To compute $\int_I f(x)\,dx$, why not use traditional quadrature methods?

Quadrature methods consist in the approximation
$$\int_I f(x)\,dx \simeq \sum_{j=0}^{n} w_j f(x_j) \qquad (1)$$
where $(x_0, x_1, \ldots, x_n)$ are deterministic points (the grid) and where the $w_j$ are weights.
- Newton quadrature: regular grid
- Gauss quadrature: irregular grid chosen as the roots of a well-chosen polynomial of degree $n + 1$.

The most famous Newton quadrature method is the trapeze method.
We refer to [Stoer-Bulirsch, 2002] for more details about deterministic numerical integration methods.

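Trapeze method in dimension 1

Example: approximate $\theta = \int_0^1 f(x)\,dx$.
Idea: approximate $f$ by a piecewise affine function:
$$\int_{\frac{k-1}{n}}^{\frac{k}{n}} f(x)\,dx \simeq \frac{f\left(\frac{k-1}{n}\right) + f\left(\frac{k}{n}\right)}{2n}$$
The integral $\theta$ can be approximated by:
$$I_n = \frac{f(0) + f(1)}{2n} + \frac{1}{n}\sum_{k=1}^{n-1} f\left(\frac{k}{n}\right)$$
This requires $(n + 1)$ evaluations of $f$.
Assuming $f$ is $C^2$, we obtain the following control of the error:
$$|I_n - \theta| \le \frac{1}{12n^2}\sup_{x\in[0,1]} |f''(x)|$$
Conclusion: In dimension 1, the trapeze method outperforms the MC estimate: its error is $O(n^{-2})$ for $n + 1$ evaluations, whereas the MC error is of order $n^{-1/2}$.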
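A side-by-side sketch of the 1-D trapeze rule and the standard MC estimate with a comparable number of evaluations, on the illustrative integrand $f = \exp$ (so $\theta = e - 1$ and $\sup|f''| = e$). The helper names `trapeze` and `mc_uniform` are hypothetical.

```python
import math
import random

def trapeze(f, n):
    """Composite trapeze rule on [0, 1] with n sub-intervals (n + 1 evaluations of f)."""
    interior = sum(f(k / n) for k in range(1, n))
    return (f(0.0) + f(1.0)) / (2 * n) + interior / n

def mc_uniform(f, n, seed=0):
    """Standard MC estimate of the same integral with n uniform draws on [0, 1]."""
    rng = random.Random(seed)
    return sum(f(rng.random()) for _ in range(n)) / n

theta = math.e - 1  # exact value of the integral of exp over [0, 1]
for n in (10, 100, 1000):
    print(n, abs(trapeze(math.exp, n) - theta), abs(mc_uniform(math.exp, n) - theta))
```

The trapeze error shrinks by about 100 each time $n$ is multiplied by 10, while the MC error shrinks by only about $\sqrt{10}$ on average.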

Trapeze method in dimension d

Approximate $\theta = \int_0^1 \cdots \int_0^1 f(x_1, \ldots, x_d)\,dx_1 \cdots dx_d$.

Idea: using Fubini’s theorem, the multiple integral can be rephrased as repeated one-dimensional integrals → use the trapeze method for each of them.

The trapeze approximation has the form:
$$I_n = \frac{1}{n^d}\sum_{j_1, \ldots, j_d = 0}^{n} w_{j_1, \ldots, j_d}\, f\left(\frac{j_1}{n}, \ldots, \frac{j_d}{n}\right)$$
- Requires $N = (n + 1)^d$ evaluations of $f$.
- Similarly to the 1-D case, one can show that
$$I_n = \theta + O(n^{-2}) = \theta + O(N^{-2/d})$$

For a fixed computational budget $N$, the error bound $O(N^{-2/d})$ deteriorates quickly as $d$ increases.

n.b.: More efficient methods than the trapeze do exist (Simpson method, Gauss quadrature methods), but they are still sensitive to the value of $d$.
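A tensor-product sketch of the $d$-dimensional trapeze rule, assuming the weights $w_{j_1,\ldots,j_d}$ are products of the 1-D weights ($\frac12$ at the endpoints $j = 0, n$ and $1$ otherwise), which is what applying the 1-D rule along each axis gives. It also counts evaluations to show the $(n+1)^d$ cost. The integrand $f(x) = \exp(x_1 + \cdots + x_d)$, with exact integral $(e-1)^d$, and the name `trapeze_nd` are illustrative.

```python
import itertools
import math

def trapeze_nd(f, d, n):
    """Tensor-product trapeze rule on [0, 1]^d; returns (estimate, number of evaluations)."""
    w1 = [0.5 if j in (0, n) else 1.0 for j in range(n + 1)]  # 1-D weights (times n)
    total, evals = 0.0, 0
    for idx in itertools.product(range(n + 1), repeat=d):
        w = math.prod(w1[j] for j in idx)  # w_{j_1, ..., j_d}
        total += w * f([j / n for j in idx])
        evals += 1
    return total / n**d, evals

f_exp = lambda x: math.exp(sum(x))
for d in (1, 2, 3):
    est, evals = trapeze_nd(f_exp, d, 10)
    print(d, evals, abs(est - (math.e - 1) ** d))
```

With $n = 10$ points per axis the cost is already $11^d$ evaluations, which becomes prohibitive well before $d = 10$.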

Comparison to Monte-Carlo methods

The standard MC estimator of $\theta = \int f(x)\,d\mu(x)$ is
$$\hat\theta_n = \frac{1}{n}\sum_{i=1}^{n} f(X_i)$$
- Main drawback: the estimation error is random. One can only ensure that the approximation error associated with an MC run is small with high probability.
- Main asset: by the CLT, the estimation error is of order $\sigma/\sqrt{n}$, whatever the dimension $d$.
  To divide the standard deviation of the error by a factor 2, multiply the number of samples by 4.

Thus, MC methods are expected to perform well even for integrals over high-dimensional spaces.
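The "multiply by 4 to halve the error" rule can be observed directly in a moderately high dimension. The sketch below assumes $d = 20$ and the illustrative integrand $f(x) = \sum_k \cos(x_k)$ on $[0,1]^{20}$, whose exact integral is $20\sin(1)$; `mc_hypercube` is a hypothetical helper returning the estimate and its estimated standard error $\hat\sigma_n/\sqrt{n}$.

```python
import math
import random

def mc_hypercube(f, d, n, seed=0):
    """MC estimate of the integral of f over [0, 1]^d and its estimated standard error."""
    rng = random.Random(seed)
    vals = [f([rng.random() for _ in range(d)]) for _ in range(n)]
    mean = sum(vals) / n
    var = sum((v - mean) ** 2 for v in vals) / n
    return mean, math.sqrt(var / n)

def f_cos(x):
    # Illustrative integrand on [0, 1]^20; exact integral 20 * sin(1).
    return sum(math.cos(t) for t in x)

for n in (1_000, 4_000, 16_000):
    est, se = mc_hypercube(f_cos, 20, n)
    print(n, est, se)
```

Each fourfold increase of $n$ roughly halves the standard error, even though a grid with just 3 points per axis would already need $3^{20} \approx 3.5 \times 10^9$ evaluations.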

A remark on Quasi Monte-Carlo methods

Principle: Approximate $\theta = \int_0^1 \cdots \int_0^1 f(x_1, \ldots, x_d)\,dx_1 \cdots dx_d$ by
$$\frac{1}{n}\sum_{i=1}^{n} f(x_i)$$
where $x_1, x_2, \ldots$ is a well-chosen deterministic sequence in $[0, 1]^d$.
- Despite their name, QMC methods are deterministic methods → out of the scope of this course
- When $d \ge 2$, the regular grid is not very efficient.
  More accurate approximations can be obtained by using sequences $(x_i)_{i\ge 1}$ with good algebraic properties (low-discrepancy sequences).
  If $f$ is $C^k$, the error is $O\left(\frac{(\log n)^d}{n}\right)$.
- The error depends on the dimension $d$. The use of QMC methods can be a good move if $d \sim 15$.
- n.b.: It is generally difficult to obtain tight bounds on the approximation error.
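For illustration only, since QMC is outside the scope of the course: one classical low-discrepancy construction is the Halton sequence, whose $j$-th coordinate is the radical inverse of $i$ in the $j$-th prime base (the digits of $i$ mirrored about the radix point). The choice of Halton with bases 2 and 3, the integrand $f(x, y) = xy$ (exact integral $1/4$) and the helper names are our assumptions; the slide does not specify a sequence.

```python
def radical_inverse(i, base):
    """Van der Corput radical inverse: mirror the base-`base` digits of i about the point."""
    inv, scale = 0.0, 1.0 / base
    while i > 0:
        i, digit = divmod(i, base)
        inv += digit * scale
        scale /= base
    return inv

def halton(n, bases=(2, 3)):
    """First n points x_1, ..., x_n of the Halton sequence in [0, 1]^len(bases)."""
    return [[radical_inverse(i, b) for b in bases] for i in range(1, n + 1)]

def qmc_estimate(f, n, bases=(2, 3)):
    """QMC approximation (1/n) sum_i f(x_i) with deterministic Halton points."""
    return sum(f(p) for p in halton(n, bases)) / n

approx = qmc_estimate(lambda p: p[0] * p[1], 4096)
print(approx, abs(approx - 0.25))
```

Unlike MC, rerunning this code always returns the same value: the error is deterministic.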

Some applications

Evaluating the price of an option (1/2)

- Let $S_t$ be the value of an asset at instant $t$ → $(S_t)_{t\in\mathbb{R}_+}$ is a stochastic process.
  At $t = 0$, $S_0$ is the present (known) value of the asset.
- A European call gives the right to buy the asset at time $T$ (maturity) at a given price $K$.
- At maturity $T$, if $S_T \ge K$ the benefit is $S_T - K$. Otherwise, the option is useless.

In order to determine the price of the option, one has to compute
$$p = E[(S_T - K)_+]$$
The above value depends on the probabilistic model underlying $S_t$.
- In the Black-Scholes model (1973), $S_T$ follows a log-normal distribution → in this case, $p$ admits a simple expression (no need for MC methods).
- This is no longer the case in more involved models.

Asian option:
$$p = E\left[\left(\frac{1}{N}\sum_{i=1}^{N} S_{t_i} - K\right)_+\right]$$
No explicit formula (even for the Black-Scholes model).
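In the log-normal case, the closed form provides a convenient test bench for the MC estimator. The sketch below assumes the Black-Scholes model with a zero interest rate (so that no discounting is needed and $p = E[(S_T - K)_+]$ as written), i.e. $S_T = S_0\exp(-\sigma^2 T/2 + \sigma\sqrt{T} Z)$ with $Z \sim \mathcal{N}(0,1)$, whose price is $S_0\Phi(d_1) - K\Phi(d_2)$ with $d_1 = \frac{\log(S_0/K) + \sigma^2 T/2}{\sigma\sqrt{T}}$ and $d_2 = d_1 - \sigma\sqrt{T}$. Parameter values and function names are illustrative.

```python
import math
import random
from statistics import NormalDist

def bs_call_zero_rate(s0, k, sigma, t):
    """Closed-form Black-Scholes price of a European call with zero interest rate."""
    d1 = (math.log(s0 / k) + 0.5 * sigma**2 * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    phi = NormalDist().cdf
    return s0 * phi(d1) - k * phi(d2)

def mc_call_zero_rate(s0, k, sigma, t, n, seed=0):
    """MC estimate of p = E[(S_T - K)_+] with log-normal S_T, plus its standard error."""
    rng = random.Random(seed)
    payoffs = []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)
        s_t = s0 * math.exp(-0.5 * sigma**2 * t + sigma * math.sqrt(t) * z)
        payoffs.append(max(s_t - k, 0.0))
    p_hat = sum(payoffs) / n
    var_hat = sum((x - p_hat) ** 2 for x in payoffs) / n
    return p_hat, math.sqrt(var_hat / n)

p_exact = bs_call_zero_rate(100.0, 100.0, 0.2, 1.0)
p_hat, se = mc_call_zero_rate(100.0, 100.0, 0.2, 1.0, 100_000)
print(p_exact, p_hat, se)
```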

Evaluating the price of an option (2/2)

Algorithm (case of an Asian option)

    estim = 0;
    for j = 1:n
        S = generate_path();   % hypothetical helper: draws one path [S_t1, ..., S_tN] under the chosen model
        estim = estim + max(0, mean(S) - K);
    end
    estim = estim / n;
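A runnable Python counterpart of the loop above, as a sketch under an assumed model, since the slide leaves the path generator open: zero-rate Black-Scholes dynamics $S_t = S_0\exp(-\sigma^2 t/2 + \sigma W_t)$ observed at $t_i = iT/N$, simulated from independent Brownian increments. The function name and parameters are illustrative.

```python
import math
import random

def asian_call_mc(s0, k, sigma, t_mat, n_dates, n, seed=0):
    """MC price of an arithmetic Asian call, E[((1/N) sum_i S_{t_i} - K)_+].

    Assumed model (not specified on the slide): zero-rate Black-Scholes dynamics
    S_t = S_0 exp(-sigma^2 t / 2 + sigma W_t), observed at t_i = i * T / N.
    """
    rng = random.Random(seed)
    dt = t_mat / n_dates
    estim = 0.0
    for _ in range(n):
        # Generate one path [S_t1, ..., S_tN] from independent Brownian increments.
        log_s, path_sum = math.log(s0), 0.0
        for _ in range(n_dates):
            log_s += -0.5 * sigma**2 * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            path_sum += math.exp(log_s)
        estim += max(0.0, path_sum / n_dates - k)  # payoff (mean(S) - K)_+
    return estim / n

p_asian = asian_call_mc(100.0, 100.0, 0.2, 1.0, 12, 20_000)
print(p_asian)
```

A simple sanity check: in this model the Asian price cannot exceed the European call price with the same parameters, because $(\cdot - K)_+$ is convex and, with a zero rate, call prices do not decrease with maturity.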

Evaluating the probability of rare events - Example: Ruin probability (1/2)

- An insurance company earns premiums at rate $r$ per unit of time.
- It pays claims at random time instants $T_i$. The amount of the $i$-th claim is $Y_i$.
- The benefit of the company at time $t$ is
  $$X_t = rt - \sum_{i=1}^{N_t} Y_i$$
  where $N_t$ is the number of claims in $[0, t]$.
- Denote by $R$ the event “the company is ruined”. We set
  $$R = \{\exists t > 0,\ X_t < -m\}$$
  where $m$ is the initial amount of credit.
- Objective: what is the probability of ruin $\theta = P(R = 1)$?
- Note: the actual event of ruin is expected to be rare, say for instance $\theta \sim 10^{-20}$.

Evaluating the probability of rare events - Example: Ruin probability (2/2)

At first glance, a naive MC estimate of $\theta = P(R = 1)$ would be
$$\hat\theta_n = \frac{1}{n}\sum_{i=1}^{n} R_i$$
where $R_1, \ldots, R_n$ are iid with the same distribution as $R$.

Two issues:
- The event $R = 1$ depends on the whole process $(X_t)_{t\in\mathbb{R}_+}$: one hardly sees how to generate samples $R_1, \ldots, R_n$.
- Even if we were able to simulate $R_1, \ldots, R_n$, the number $n$ of samples needed to obtain a fine estimate of $\theta$ would be huge.

Idea: change the law under which random variables are simulated.
This is called importance sampling (cf. Tuesday’s lecture).
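The second issue can be quantified with the asymptotic confidence interval: each $R_i$ is Bernoulli with variance $\theta(1-\theta)$, so the half-width $a\sqrt{\theta(1-\theta)/n}$ stays below a fraction $\varepsilon$ of $\theta$ only if $n \ge \frac{a^2(1-\theta)}{\varepsilon^2\theta}$. The sketch below evaluates this bound; the 10% relative-error target, the 95% level and the name `naive_mc_sample_size` are illustrative choices.

```python
import math
from statistics import NormalDist

def naive_mc_sample_size(theta, rel_err, alpha=0.05):
    """Smallest n such that the asymptotic CI half-width a * sqrt(theta (1 - theta) / n)
    is at most rel_err * theta, for the naive estimator (1/n) sum_i R_i."""
    a = NormalDist().inv_cdf(1 - alpha / 2)
    return math.ceil(a**2 * (1 - theta) / (rel_err**2 * theta))

# theta ~ 1e-20 as on the previous slide, 10% relative precision at the 95% level.
print(naive_mc_sample_size(1e-20, 0.1))
```

This is of the order of $4 \times 10^{22}$ samples, far beyond any realistic simulation budget.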