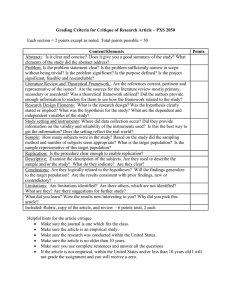

Abstract: Replication, Data Analysis, and Theorizing in Psychological Science


MTO Colloquium, Tuesday, March 18, 2014, at 12:45h in WZ 103: Prof. Greg Francis (Purdue University), “Replication, Data Analysis, and Theorizing in Psychological Science”

Many of us have been taught that replication is the gold standard of science and that multiple successful replications provide strong evidence for an effect. Because psychological science relies on statistics, this view is wrong. When findings are based on statistics, outcomes occur with predictable probabilities, and for hypothesis testing the reported rate of successful replications must reflect the experiments' power. Failing to recognize this fundamental property of hypothesis testing causes many problems in how we design experiments and interpret their findings. Thus, making a scientific argument across a set of experiments requires reporting both significant and non-significant findings. A set of experiments with relatively low power that almost always rejects the null hypothesis should be interpreted as biased and therefore unscientific. These problems are not restricted to particular subfields of psychology, and reformers are just as susceptible to bias as anyone else. Importantly, a set of low-powered experiments is easily generated by following standard methods within experimental psychology. The problems go beyond questionable research practices or p-hacking; there are more fundamental issues in how theories are derived from, and tested against, empirical data.
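The claim that a reported replication rate must reflect experimental power can be made concrete with a little arithmetic. If k independent experiments have powers p1, p2, ..., pk, the probability that every one of them rejects the null hypothesis, even when the effect is real, is the product p1 × p2 × ... × pk. The sketch below is illustrative only: it assumes a two-sided, two-sample comparison of means with standardized effect size d and equal group sizes, and it uses a normal approximation to power (an exact calculation would use the noncentral t distribution). The function names `approx_power` and `prob_all_significant` and the example numbers are hypothetical, not taken from the talk.

```python
from math import prod, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample test.

    Assumption: normal approximation, not the exact noncentral t distribution.
    d is the standardized effect size; n_per_group subjects in each group.
    """
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    # Mean of the test statistic under the alternative hypothesis
    shift = d * sqrt(n_per_group / 2)
    # Probability the statistic falls in either rejection region
    upper = 1 - _STD_NORMAL.cdf(z_crit - shift)
    lower = _STD_NORMAL.cdf(-z_crit - shift)
    return upper + lower


def prob_all_significant(powers: list[float]) -> float:
    """Probability that every experiment in an independent set rejects the null."""
    return prod(powers)


if __name__ == "__main__":
    # Hypothetical set: five experiments, each with d = 0.5 and 20 subjects per group
    p = approx_power(0.5, 20)
    print(f"power per experiment: {p:.3f}")
    print(f"P(all 5 significant): {prob_all_significant([p] * 5):.4f}")
```

With d = 0.5 and 20 subjects per group, each experiment has power of roughly 0.35 under this approximation, so five out of five significant results would be expected well under 1% of the time even if the effect were real. A report of such a set is the pattern the abstract describes as a sign of bias.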