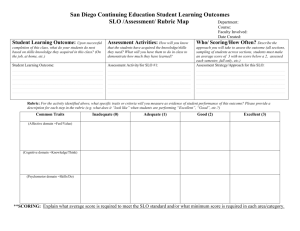

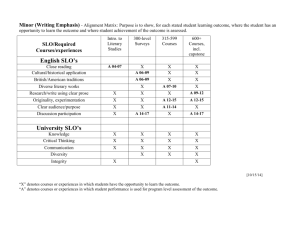

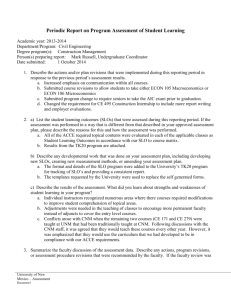

Student Learning Outcome Assessment Handbook

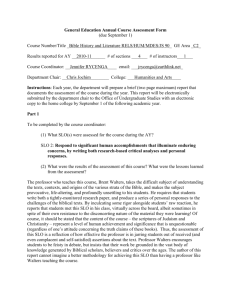
