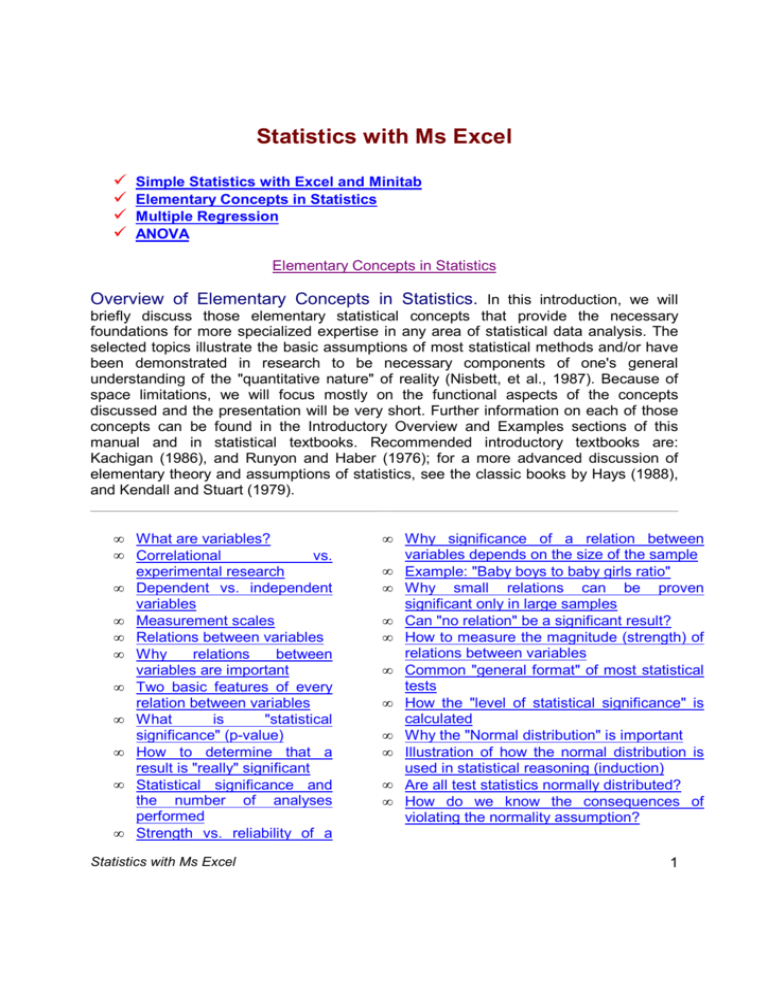

Statistics with MS Excel

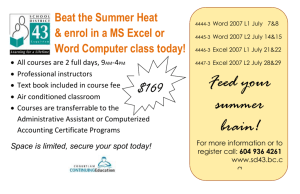
