Notes 5 - Indiana University Computer Science Department

advertisement

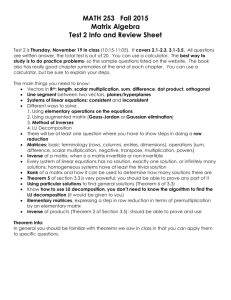

B553 Lecture 5: Matrix Algebra Review

Kris Hauser

January 19, 2012

We have seen in prior lectures how vectors represent points in $\mathbb{R}^n$ and gradients of functions. Matrices represent linear transformations of vector quantities. This lecture presents standard matrix notation, conventions, and basic identities that will be used throughout this course. In the course of this discussion we will also drop the boldface notation for vectors, and it will remain this way for the rest of the class.

1 Matrices

A matrix A represents a linear transformation of an n-dimensional vector space to an m-dimensional one. It is given by an m × n array of real numbers. Usually matrices are denoted by uppercase letters (e.g., A, B, C), with the entry in the i’th row and j’th column denoted by the subscript $\cdot_{i,j}$, or when it is unambiguous, $\cdot_{ij}$ (e.g., $A_{1,2}$, $A_{1p}$).

$$A = \begin{bmatrix} A_{1,1} & \cdots & A_{1,n} \\ \vdots & \ddots & \vdots \\ A_{m,1} & \cdots & A_{m,n} \end{bmatrix} \tag{1}$$

1.1 Matrix-Vector Product

An m × n matrix A transforms vectors $x = (x_1, \ldots, x_n)$ into m-dimensional vectors $y = (y_1, \ldots, y_m) = Ax$ as follows:

$$\begin{aligned} y_1 &= \sum_{j=1}^{n} A_{1j} x_j \\ &\;\;\vdots \\ y_m &= \sum_{j=1}^{n} A_{mj} x_j \end{aligned} \tag{2}$$

Or, more concisely, $y_i = \sum_{j=1}^{n} A_{ij} x_j$ for $i = 1, \ldots, m$. (Note that matrix-vector multiplication is not symmetric, so xA is an invalid operation.)
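As a minimal sketch of the summation above, the following Python code computes the product with explicit loops and compares it to NumPy's built-in `@` operator. The function name `matvec` and the example values are illustrative assumptions, and Python indices start at 0 rather than 1.

```python
import numpy as np

def matvec(A, x):
    """Compute y = A x from the definition y_i = sum_j A_ij x_j."""
    m, n = A.shape
    y = np.zeros(m)
    for i in range(m):
        for j in range(n):
            y[i] += A[i, j] * x[j]
    return y

A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])   # m = 2, n = 3
x = np.array([1.0, 0.0, -1.0])    # n = 3 entries

y = matvec(A, x)
print(y)                          # y has m = 2 entries
print(np.allclose(y, A @ x))      # agrees with NumPy's product
```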

Linearity of matrix-vector multiplication. We can see that matrix-vector multiplication is linear, that is, A(ax + by) = aAx + bAy for all scalars a, b and vectors x, y. It is also linear with respect to component-wise addition and scalar multiplication of matrices, as long as the matrices are of the same size. More precisely, if A and B are both m × n matrices, then (aA + bB)x = aAx + bBx for all a, b, and x.

Identity matrix. One special matrix that occurs frequently is the n × n identity matrix $I_n$, which has 0’s in all off-diagonal positions $I_{ij}$ with $i \neq j$, and 1’s in all diagonal positions $I_{ii}$. It is significant because $I_n x = x$ for all $x \in \mathbb{R}^n$.

1.2 Matrix Product

When two linear transformations are performed one after the other, the result

is also a linear transformation. Suppose A is m × n, B is n × p, and x

is a p-dimensional vector, and consider the result of A(Bx) (that is, first

multiplying by B and then multiplying the result by A). We see that

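$$Bx = \left( \sum_{j=1}^{p} B_{1j} x_j, \; \ldots, \; \sum_{j=1}^{p} B_{nj} x_j \right) \tag{3}$$

and, for any n-dimensional vector y,

$$Ay = \left( \sum_{k=1}^{n} A_{1k} y_k, \; \ldots, \; \sum_{k=1}^{n} A_{mk} y_k \right) \tag{4}$$

So

$$A(Bx) = \left( \sum_{k=1}^{n} A_{1k} \Big( \sum_{j=1}^{p} B_{kj} x_j \Big), \; \ldots, \; \sum_{k=1}^{n} A_{mk} \Big( \sum_{j=1}^{p} B_{kj} x_j \Big) \right). \tag{5}$$

Rearranging the summations, we see that

$$A(Bx) = \left( \sum_{j=1}^{p} \Big( \sum_{k=1}^{n} A_{1k} B_{kj} \Big) x_j, \; \ldots, \; \sum_{j=1}^{p} \Big( \sum_{k=1}^{n} A_{mk} B_{kj} \Big) x_j \right). \tag{6}$$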

In other words, we could have A(Bx) = Cx if we were to form a matrix C

such that

$$C_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj} \tag{7}$$

This is exactly the definition of the matrix product, and we say C = AB. The entry $C_{ij}$ can also be obtained by taking the dot product of the i’th row of A and the j’th column of B.
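The definition of the product can be sketched directly as a triple loop. The code below is an illustration under assumed names (`matmul`, random test matrices), not an efficient implementation. It checks the result against NumPy, confirms that A(Bx) = (AB)x, and confirms that an entry of C is the dot product of a row of A with a column of B.

```python
import numpy as np

def matmul(A, B):
    """Form C = AB entry by entry: C_ij = sum_k A_ik B_kj."""
    m, n = A.shape
    n2, p = B.shape
    assert n == n2, "inner dimensions must agree"
    C = np.zeros((m, p))
    for i in range(m):
        for j in range(p):
            for k in range(n):
                C[i, j] += A[i, k] * B[k, j]
    return C

rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3))   # m x n
B = rng.standard_normal((3, 4))   # n x p
x = rng.standard_normal(4)        # p-dimensional vector

C = matmul(A, B)
print(np.allclose(C, A @ B))                   # matches NumPy's product
print(np.allclose(A @ (B @ x), C @ x))         # A(Bx) = (AB)x
print(np.isclose(C[0, 1], A[0, :] @ B[:, 1]))  # row of A dotted with column of B
```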

Matrix product is associative but not symmetric. By the above derivation we can drop the parentheses: A(Bx) = (AB)x. So, matrix-vector and matrix-matrix multiplication are associative. Note again, however, that matrix-matrix multiplication is not symmetric (that is, not commutative): $AB \neq BA$ in general.
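A small numerical example makes the point concrete. The two matrices below are arbitrary choices for illustration.

```python
import numpy as np

A = np.array([[1, 2],
              [0, 1]])
B = np.array([[1, 0],
              [3, 1]])

AB = A @ B
BA = B @ A
print(AB)                       # [[7 2], [3 1]]
print(BA)                       # [[1 2], [3 7]]
print(np.array_equal(AB, BA))   # False: the order of the arguments matters
```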

Column and row vectors. Note that if we were to write an n-dimensional vector x stacked in an n × 1 matrix x (denoted in lowercase), we can turn the matrix-vector product y = Ax into the matrix product y = Ax. Here, if A is an m × n matrix, then y is an m × 1 matrix.

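$$\begin{bmatrix} y_1 \\ \vdots \\ y_m \end{bmatrix} = \begin{bmatrix} A_{1,1} & \cdots & A_{1,n} \\ \vdots & \ddots & \vdots \\ A_{m,1} & \cdots & A_{m,n} \end{bmatrix} \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix} \tag{8}$$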

Hence, there is a one-to-one correspondence between vectors and matrices

with one column. These matrices are called column vectors and will be

our default notation for vectors throughout the rest of the course. We will

occasionally also deal with row vectors, which are matrices with a single row.

1.3 Transpose

The transpose $A^T$ of a matrix A simply switches A’s rows and columns.

$$(A^T)_{ij} = A_{ji}. \tag{9}$$

If A is m × n, then $A^T$ is n × m.

Symmetric matrix. If $A = A^T$, then A is symmetric.

1.4 Matrix Inverse

An inverse $A^{-1}$ of an n × n square matrix A is a matrix that satisfies the following equation:

$$AA^{-1} = A^{-1}A = I_n \tag{10}$$

where $I_n$ is the identity matrix. Not every square matrix has an inverse; a matrix without one is called non-invertible (or singular). Invertible matrices are significant because the unique solution x to the system of linear equations Ax = b is simply $A^{-1}b$. This holds for any b. If the matrix is not invertible, then such an equation may or may not have a solution.
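As an illustrative sketch (the matrices and variable names are assumptions), the following solves a small invertible system both by forming the inverse, as in the text, and with NumPy's `np.linalg.solve`, which solves Ax = b without forming the inverse explicitly. It also shows NumPy rejecting a singular matrix.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])

A_inv = np.linalg.inv(A)
x_via_inverse = A_inv @ b             # x = A^{-1} b, as in the text
x_via_solve = np.linalg.solve(A, b)   # same solution, no explicit inverse

print(x_via_inverse)
print(np.allclose(A @ A_inv, np.eye(2)))   # A A^{-1} = I
print(np.allclose(A_inv @ A, np.eye(2)))   # A^{-1} A = I

# A singular matrix: the second row is twice the first.
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])
singular_detected = False
try:
    np.linalg.inv(S)
except np.linalg.LinAlgError:
    singular_detected = True
print(singular_detected)
```

In numerical practice the `solve` form is generally preferred to forming the inverse, since it does less work and accumulates less rounding error.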

Orthogonal matrix. An orthogonal matrix is a square matrix that satisfies $AA^T = I_n$. In other words, its transpose is its inverse.
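A 2D rotation matrix is a standard example of an orthogonal matrix. The sketch below (angle and variable names chosen arbitrarily) checks the defining property numerically.

```python
import numpy as np

theta = np.pi / 6
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])   # rotation by 30 degrees

print(np.allclose(Q @ Q.T, np.eye(2)))        # Q Q^T = I
print(np.allclose(np.linalg.inv(Q), Q.T))     # the transpose is the inverse
```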

1.5 Matrix identities

Identities involving the transpose:

• $(cA)^T = cA^T$ for any real value c.

• $(A + B)^T = A^T + B^T$.

• $(AB)^T = B^T A^T$.

• All 1 × 1 matrices are symmetric, the identity matrix is symmetric, and all uniform scalings of a symmetric matrix are symmetric.

• $A + A^T$ is symmetric.

• The dot product x · y is equal to $x^T y$, with x and y denoting the column vector representations of x and y, respectively.

• $x^T A y = y^T A^T x$, with x and y column vectors.

Identities involving the inverse:

• $I_n^{-1} = I_n$.

• $(cA)^{-1} = \frac{1}{c} A^{-1}$ for any real value $c \neq 0$ and invertible A.

• $(AB)^{-1} = B^{-1} A^{-1}$ if both B and A are invertible.

• If A and B are invertible, then $(ABA^{-1})^{-1} = AB^{-1}A^{-1}$.
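Identities like these are easy to spot-check numerically. The sketch below uses two hand-picked invertible 3 × 3 matrices (arbitrary choices for illustration). A numerical check on one example is not a proof, but it catches mistakes such as forgetting to swap the order of a product.

```python
import numpy as np

A = np.array([[2.0, 1.0, 0.0],
              [0.0, 3.0, 1.0],
              [1.0, 0.0, 4.0]])   # determinant 25, so invertible
B = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, 3.0],
              [2.0, 0.0, 1.0]])   # determinant 13, so invertible
x = np.array([1.0, -2.0, 0.5])
y = np.array([3.0, 0.0, -1.0])
inv = np.linalg.inv

checks = {
    "(AB)^T = B^T A^T": np.allclose((A @ B).T, B.T @ A.T),
    "A + A^T is symmetric": np.allclose(A + A.T, (A + A.T).T),
    "x . y = x^T y": np.isclose(np.dot(x, y), x.T @ y),
    "x^T A y = y^T A^T x": np.isclose(x @ A @ y, y @ A.T @ x),
    "(AB)^-1 = B^-1 A^-1": np.allclose(inv(A @ B), inv(B) @ inv(A)),
    "(A B A^-1)^-1 = A B^-1 A^-1": np.allclose(inv(A @ B @ inv(A)), A @ inv(B) @ inv(A)),
}
for name, ok in checks.items():
    print(name, ok)

# Forgetting to swap the order gives a different matrix in general.
wrong_transpose_rule_holds = np.allclose((A @ B).T, A.T @ B.T)
print(wrong_transpose_rule_holds)
```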

1.6 Common mistakes

Matrix expressions are similar to standard expressions over real numbers: addition and subtraction behave the same way, multiplication behaves almost the same way (except that it is not commutative), and inverses play a role analogous to division. But this similarity leads to common pitfalls when manipulating matrix equations. Here are some common mistakes that you should look out for.

1. Swapping the arguments of a matrix product.

2. Propagating transposes or inverses into a matrix product without swapping the order of arguments.

3. Assuming that a matrix is invertible (or worse, assuming a non-square

matrix is invertible).

4. Performing operations on matrices of incompatible size.

2 Rank, Null space, and Definiteness

If A is not invertible (for instance, it may not be square) then the system

of linear equations Ax = b may not have a solution x. Or, it may have an

infinite number of solutions. Or, it may have solutions for some b’s and not

others. We would like to characterize, based on properties of A, when such

equations can be solved.

2.1 Matrix rank

Consider the columns of A as a list of vectors $a_1, \ldots, a_n$. Recall that if $b \in \mathrm{Span}(a_1, \ldots, a_n)$, then b is a linear combination of $a_1, \ldots, a_n$. If this holds, then it is sufficient to set each component $x_i$ to the respective coefficient on $a_i$ in order to solve Ax = b. On the other hand, if $b \notin \mathrm{Span}(a_1, \ldots, a_n)$, then there is no solution. So, the set of vectors b such that Ax = b has a solution is precisely $\mathrm{Span}(a_1, \ldots, a_n)$.

Rank. The rank of an m × n matrix A is the size of the largest subset of $\{a_1, \ldots, a_n\}$ that is linearly independent. In other words, if A has rank k, then $\mathrm{Span}(a_1, \ldots, a_n)$ is a k-dimensional subspace of $\mathbb{R}^m$. If k = n, then A is said to have full column rank, and the system Ax = b has at most one solution. If k = m, then A is said to have full row rank, and the system has at least one solution. If k = m = n, then A is invertible.
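A short sketch of these ideas using NumPy's `np.linalg.matrix_rank`. The helper `has_solution` is a hypothetical name. It relies on the fact that b lies in the span of the columns of A exactly when appending b as an extra column does not increase the rank.

```python
import numpy as np

A = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 0.0, 2.0]])   # m = 4, n = 3; third column = first + second

k = np.linalg.matrix_rank(A)
print(k)                          # 2: only two columns are linearly independent

def has_solution(A, b):
    """Ax = b is solvable exactly when b is in the span of A's columns."""
    augmented = np.column_stack([A, b])
    return np.linalg.matrix_rank(augmented) == np.linalg.matrix_rank(A)

b_in = A @ np.array([1.0, 2.0, 0.0])       # built as a combination of the columns
b_out = np.array([1.0, 0.0, 0.0, 0.0])     # not a combination of the columns
print(has_solution(A, b_in))               # True
print(has_solution(A, b_out))              # False
```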

Overdetermined system. Now suppose that the rank of A is k < m. Then there are some possible values of b that are not attainable by linear combinations of $a_1, \ldots, a_n$. Such systems are known as overdetermined because there are more constraints than can be fulfilled by adjusting the values of x. Overdetermined systems are usually not solved exactly, but are more often solved in a least squares sense, $\min_x \|Ax - b\|^2$.
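As an example of an overdetermined system, consider fitting a line to four data points: four equations, two unknowns. The sketch below uses NumPy's `np.linalg.lstsq`. The data values are made up for illustration.

```python
import numpy as np

t = np.array([0.0, 1.0, 2.0, 3.0])
b = np.array([1.0, 2.9, 5.1, 7.0])           # made-up measurements
A = np.column_stack([t, np.ones_like(t)])    # unknowns x = (slope, intercept)

x, residual, rank, sing_vals = np.linalg.lstsq(A, b, rcond=None)
print(x)       # least-squares slope and intercept
print(rank)    # 2 < m = 4, so most right-hand sides cannot be matched exactly

# No exact solution exists here, but the gradient of ||Ax - b||^2 vanishes at x.
grad = 2 * A.T @ (A @ x - b)
print(np.allclose(grad, 0))
```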

Underdetermined system. If the rank of A is k < n, then for any $x_0$ there are an infinite number of solutions x to the equation $Ax = Ax_0$. To see this, let some column of A be linearly dependent on the remaining columns. Suppose this column is $a_1$ without loss of generality. Then, $a_1 - \sum_{i=2}^{n} c_i a_i = 0$ for some coefficients $c_i$. So, any multiple c of the vector $v = (1, -c_2, \ldots, -c_n)$ can be added to $x_0$ without affecting the value of the product: $A(x_0 + cv) = Ax_0$. Such systems are known as underdetermined because they may be solved by multiple values of x.
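The construction can be checked on a small example. Here the first column is chosen as $a_1 = 2a_2 + a_3$, so $c_2 = 2$ and $c_3 = 1$. These values and the variable names are arbitrary illustrations.

```python
import numpy as np

a2 = np.array([1.0, 0.0])
a3 = np.array([0.0, 1.0])
a1 = 2 * a2 + 1 * a3                  # a1 depends on the other columns
A = np.column_stack([a1, a2, a3])     # 2 x 3 matrix with rank 2 < n = 3

v = np.array([1.0, -2.0, -1.0])       # v = (1, -c2, -c3)
x0 = np.array([1.0, 1.0, 1.0])

print(A @ v)                          # the zero vector
results = [A @ (x0 + c * v) for c in (0.0, 1.0, -3.5)]
for r in results:
    print(r)                          # the same product for every multiple c
```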

A system can be both underdetermined and overdetermined if k < m

and k < n. This means there are some values of b for which there is no

solution, but for those that do have a solution, there are an infinite number

of solutions.

2.2 Null space

For underdetermined systems with k < n, we ask: in how many independent directions d can we move while keeping Ad = 0? The space of such directions is known as the null space. These directions are significant because if we move a point x in any such direction, we leave the value of Ax unchanged: A(x + d) = Ax + Ad = Ax. It turns out that this space can be spanned by n − k linearly independent directions, and is therefore a space of dimension n − k. (Null spaces will feature prominently in constrained optimization problems.)

2.3 Positive/Negative Definiteness

A symmetric square matrix A is positive semi-definite if $x^T A x \geq 0$ for all vectors x. It is strictly positive definite if equality holds only for x = 0. It can be shown that positive definite matrices are invertible. The inverse of a positive definite matrix is positive definite as well.

Although it is not clear at the moment what this condition means, it will become important in later lectures. Many matrices that we encounter will be shown to be positive definite! For example, the matrix $A^T A$ for a matrix A of full column rank is positive definite. Also, at a local minimum of a twice-differentiable scalar field, the Hessian matrix is positive semi-definite, and a critical point at which the Hessian is positive definite is a strict local minimum.

Likewise, a symmetric matrix for which $x^T A x \leq 0$ for all x is called negative semi-definite, and is called strictly negative definite if equality holds only at x = 0. If none of these conditions holds, the matrix is called indefinite.
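One practical way to test definiteness, sketched below, is to look at the signs of the eigenvalues of the symmetric matrix: all positive means positive definite, all nonnegative means positive semi-definite, and so on. The function name `classify` and the tolerance `tol` are assumptions made for this illustration.

```python
import numpy as np

def classify(A, tol=1e-10):
    """Classify a symmetric matrix by the signs of its eigenvalues."""
    w = np.linalg.eigvalsh(A)   # eigenvalues of a symmetric matrix, ascending
    if np.all(w > tol):
        return "positive definite"
    if np.all(w >= -tol):
        return "positive semi-definite"
    if np.all(w < -tol):
        return "negative definite"
    if np.all(w <= tol):
        return "negative semi-definite"
    return "indefinite"

B = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])      # full column rank

print(classify(B.T @ B))                                  # positive definite
print(classify(np.array([[1.0, 1.0], [1.0, 1.0]])))       # positive semi-definite
print(classify(np.array([[-2.0, 0.0], [0.0, -1.0]])))     # negative definite
print(classify(np.array([[1.0, 0.0], [0.0, -1.0]])))      # indefinite
```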

3 Matrix Factorizations

Several matrix factorizations have proven useful in numerical analysis, computer science, and engineering. It is a good idea to familiarize yourself with

these factorizations so that you can apply them.

3.1 Eigenvalues and Eigenvectors

If there exist a number λ and a nonzero vector x such that Ax = λx for a square matrix A, then λ and x are known as an eigenvalue and eigenvector of A, respectively. Briefly, here are some facts about the eigenvalues of an n × n matrix.

1. Every matrix has at least one and at most n distinct eigenvalues, provided that complex eigenvalues are counted.

2. Symmetric matrices have real eigenvalues.

3. Positive definite matrices have a full set of real, positive eigenvalues.

4. Positive semi-definite matrices have real, nonnegative eigenvalues.

5. Nonsymmetric matrices may have complex eigenvalues and eigenvectors.


Eigendecomposition. A symmetric matrix A can be decomposed into the form $Q \Lambda Q^T$, where Λ is a diagonal matrix and Q is an orthogonal matrix. Λ is related to Q in that the i’th diagonal entry of Λ is an eigenvalue whose eigenvector is the i’th column of Q.

The significance of this decomposition is that multiplication by a symmetric matrix can be represented by a rotation transformation, then an axis-aligned scaling, then an inverse rotation. It also gives a convenient form for the inverse and a convenient test for whether an inverse exists. If every element of the diagonal of Λ is nonzero, then $A^{-1} = Q \Lambda^{-1} Q^T$. $\Lambda^{-1}$ is easy to compute because it simply requires taking the reciprocal of each element on the diagonal.
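A minimal sketch of the eigendecomposition using NumPy's `np.linalg.eigh`, which is intended for symmetric matrices. The example matrix is an arbitrary choice.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])              # symmetric

lam, Q = np.linalg.eigh(A)              # eigenvalues (ascending), eigenvectors as columns of Q
Lam = np.diag(lam)

print(lam)                                           # approximately [1, 3]
print(np.allclose(Q @ Lam @ Q.T, A))                 # A = Q Lam Q^T
print(np.allclose(Q.T @ Q, np.eye(2)))               # Q is orthogonal
print(np.allclose(A @ Q[:, 0], lam[0] * Q[:, 0]))    # column 0 of Q is an eigenvector

# All eigenvalues are nonzero, so the inverse is Q Lam^{-1} Q^T.
A_inv = Q @ np.diag(1.0 / lam) @ Q.T
print(np.allclose(A_inv, np.linalg.inv(A)))
```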

3.2 Decompositions into Triangular Forms

LU decomposition. It can be shown that using the Gaussian elimination procedure, any square matrix A can be decomposed into A = PLU, where P is a permutation matrix, L is a lower triangular matrix, and U is an upper triangular matrix. This decomposition is significant because permutation matrices are easily invertible, and triangular matrices are easily invertible if their diagonals are nonzero. (The solution to any invertible triangular matrix equation Lx = b can be found quickly through a substitution procedure: forward substitution for lower triangular matrices and back substitution for upper triangular ones.) So, if L and U are invertible, then A is invertible as well! This method is very frequently employed to solve an invertible system of equations.
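To illustrate why triangular factors make solving easy, here is a sketch of forward and back substitution. The factors L and U below are chosen by hand (with P equal to the identity) purely for illustration. The code does not compute the factorization itself, and the function names are assumptions.

```python
import numpy as np

def forward_substitution(L, b):
    """Solve L x = b for lower triangular L with nonzero diagonal."""
    n = len(b)
    x = np.zeros(n)
    for i in range(n):                      # top row first
        x[i] = (b[i] - L[i, :i] @ x[:i]) / L[i, i]
    return x

def back_substitution(U, b):
    """Solve U x = b for upper triangular U with nonzero diagonal."""
    n = len(b)
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):          # bottom row first
        x[i] = (b[i] - U[i, i + 1:] @ x[i + 1:]) / U[i, i]
    return x

# Hand-picked factors for illustration; P is the identity here.
L = np.array([[1.0, 0.0, 0.0],
              [2.0, 1.0, 0.0],
              [-1.0, 3.0, 1.0]])
U = np.array([[2.0, 1.0, 1.0],
              [0.0, 1.0, -2.0],
              [0.0, 0.0, 4.0]])
A = L @ U
b = np.array([1.0, 2.0, 3.0])

# A x = L (U x) = b: first solve L z = b, then U x = z.
z = forward_substitution(L, b)
x = back_substitution(U, z)
print(np.allclose(A @ x, b))
```

Each substitution costs $O(n^2)$ operations. With a nontrivial permutation P, one would first apply $P^T$ to b and then proceed in the same way.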

Cholesky decomposition. The special case of the LU decomposition for a symmetric positive-definite matrix is known as a Cholesky decomposition. Because A is symmetric, the factors can be chosen so that $U = L^T$, and hence $A = LL^T$. For symmetric indefinite matrices, there is a related decomposition into $LDL^T$, where D is a diagonal matrix. Cholesky decompositions can be computed in fewer steps than general LU decompositions (roughly half as many arithmetic operations).
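A brief sketch using NumPy's `np.linalg.cholesky`, which returns the lower triangular factor L. The test matrix is built as $B^T B$ for an arbitrary full-column-rank B so that it is symmetric positive definite.

```python
import numpy as np

B = np.array([[1.0, 2.0],
              [0.0, 1.0],
              [1.0, 0.0]])
A = B.T @ B                        # symmetric positive definite: [[2, 2], [2, 5]]

L = np.linalg.cholesky(A)          # lower triangular factor
print(np.allclose(L, np.tril(L)))  # L is lower triangular
print(np.allclose(L @ L.T, A))     # A = L L^T

# Solve A x = b with two triangular systems: L z = b, then L^T x = z.
# (np.linalg.solve is a general solver; a dedicated triangular solver
# would exploit the structure.)
b = np.array([1.0, 2.0])
z = np.linalg.solve(L, b)
x = np.linalg.solve(L.T, z)
print(np.allclose(A @ x, b))

# The factorization fails for a matrix that is not positive definite.
not_pd = np.array([[1.0, 2.0],
                   [2.0, 1.0]])    # eigenvalues 3 and -1
failed = False
try:
    np.linalg.cholesky(not_pd)
except np.linalg.LinAlgError:
    failed = True
print(failed)
```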

3.3 Singular Value Decomposition

The singular value decomposition (SVD) is one of the most useful tools in scientific computing. It gives a factorization similar to the eigendecomposition, but can be applied to non-square matrices. It also gives convenient ways to find a matrix’s rank and null space, and to compute pseudoinverses. It is the most common method used to perform principal components analysis (PCA) in statistics and machine learning, and is used in generalizing Newton’s method to higher dimensions. It can also be used to perform robust least-squares fitting in underdetermined systems.

The SVD of an m × n matrix A takes the form:

$$A = U \Sigma V^T \tag{11}$$

where U is an m × m orthogonal matrix, V is an n × n orthogonal matrix, and Σ is an m × n matrix whose only nonzero entries lie on the diagonal. These diagonal entries, the singular values, are nonnegative.

Computing the rank. The rank of A is equal to the number of nonzero

elements on the diagonal of Σ.

Computing the nullspace. If $\Sigma_{ii} = 0$ for some i, then the i’th column of V (that is, the i’th row of $V^T$) is in the null space of A. When n > m, the columns of V with index greater than m are in the null space as well. The set of all such columns of V is an orthonormal basis of the null space. If these vectors are assembled into an n × (n − k) matrix N, then all solutions to the equation Ax = b can be obtained by finding a single solution $x_0$ and letting $x = x_0 + Ny$ for an arbitrary choice of $y \in \mathbb{R}^{n-k}$.
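The rank and null-space computations can be sketched with NumPy's `np.linalg.svd`, which returns the singular values in descending order together with $V^T$. The rows of `Vt` are the columns of V. The tolerance used to decide which singular values count as zero is an assumption, as is the example matrix.

```python
import numpy as np

A = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0]])      # m = 2, n = 3

U, s, Vt = np.linalg.svd(A)          # U is 2x2, Vt is 3x3, s holds min(m, n) values
tol = max(A.shape) * np.finfo(float).eps * s.max()
k = int(np.sum(s > tol))             # numerical rank: singular values above tol

N = Vt[k:, :].T                      # last n - k columns of V, shape n x (n - k)
print(k, N.shape)                    # 2 (3, 1)
print(np.allclose(A @ N, 0))         # every column of N is in the null space

# All solutions of Ax = b: one particular solution plus N y.
b = np.array([1.0, 2.0])
x0 = np.linalg.lstsq(A, b, rcond=None)[0]   # one particular solution
y = np.array([2.5])                         # arbitrary choice of y
print(np.allclose(A @ (x0 + N @ y), b))
```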

Computing the pseudoinverse. A pseudoinverse is a generalization of the inverse of a matrix that is used when an inverse does not exist. It can also be used when a matrix is not square. The pseudoinverse $A^+$ is defined as an n × m matrix that has the following properties:

1. $AA^+A = A$

2. $A^+AA^+ = A^+$

3. $(AA^+)^T = AA^+$

4. $(A^+A)^T = A^+A$

This matrix can be computed using the SVD. Note that the pseudoinverse $\Sigma^+$ of Σ is the n × m matrix obtained by transposing Σ and taking the reciprocal of all nonzero diagonal entries, leaving the zero entries unchanged. Then the pseudoinverse of A is $A^+ = V \Sigma^+ U^T$ (convince yourself that this satisfies the properties of the pseudoinverse). Note that if A is invertible, then $A^+ = A^{-1}$.
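The construction $A^+ = V \Sigma^+ U^T$ can be sketched as follows. The function name `pinv_svd` and the threshold `tol` for treating a singular value as zero are assumptions. NumPy's own `np.linalg.pinv` is used only as a cross-check.

```python
import numpy as np

def pinv_svd(A, tol=1e-12):
    """Pseudoinverse A+ = V Sigma+ U^T built from the SVD A = U Sigma V^T."""
    U, s, Vt = np.linalg.svd(A)
    m, n = A.shape
    Sigma_plus = np.zeros((n, m))             # transposed shape: n x m
    for i, sigma in enumerate(s):
        if sigma > tol:
            Sigma_plus[i, i] = 1.0 / sigma    # reciprocal of each nonzero entry
    return Vt.T @ Sigma_plus @ U.T

A = np.array([[1.0, 2.0],
              [2.0, 4.0],
              [0.0, 0.0]])                    # 3 x 2 with rank 1: no inverse exists
Ap = pinv_svd(A)

print(Ap.shape)                               # (2, 3)
print(np.allclose(A @ Ap @ A, A))             # property 1
print(np.allclose(Ap @ A @ Ap, Ap))           # property 2
print(np.allclose((A @ Ap).T, A @ Ap))        # property 3
print(np.allclose((Ap @ A).T, Ap @ A))        # property 4
print(np.allclose(Ap, np.linalg.pinv(A)))     # agrees with NumPy

# For an invertible matrix the pseudoinverse is the ordinary inverse.
M = np.array([[2.0, 1.0],
              [1.0, 3.0]])
print(np.allclose(pinv_svd(M), np.linalg.inv(M)))
```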

Robust least squares. The SVD can be used to solve for all least-squares solutions to a system of linear equations, whether the system is full rank, underdetermined, overdetermined, or both! It can be shown that $x_0 = A^+ b$ is a least-squares solution to $\min_x \|Ax - b\|^2$. To see this, take the gradient of this quadratic function at $x_0$:

$$2A^T(Ax_0 - b) = 2A^T(AA^+b - b) = 2(A^TAA^+ - A^T)b \tag{12}$$

Now look at the transpose of the matrix in parentheses, apply the transpose rule, apply the third property of the pseudoinverse, and then apply the first property of the pseudoinverse:

$$(A^TAA^+ - A^T)^T = (AA^+)^TA - A = AA^+A - A = A - A = 0 \tag{13}$$

Hence, the gradient at $x_0$ is zero. Because the objective is a convex quadratic, a point with zero gradient is a global minimum.

Since we can also compute the null-space matrix N, we see that all vectors of the form $x = A^+b + Ny$, with y arbitrary, are least squares solutions as well.
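The following sketch checks this argument on a system that is both overdetermined and underdetermined. The matrix, right-hand side, and the sample values of y are arbitrary illustrations. `np.linalg.pinv` and `np.linalg.svd` are NumPy routines.

```python
import numpy as np

A = np.array([[1.0, 1.0, 0.0],
              [1.0, 1.0, 0.0],
              [0.0, 0.0, 1.0],
              [0.0, 0.0, 1.0]])     # 4 x 3 with rank 2: k < m and k < n
b = np.array([1.0, 3.0, 2.0, 2.0])  # not attainable exactly

x0 = np.linalg.pinv(A) @ b          # x0 = A+ b
grad = 2 * A.T @ (A @ x0 - b)       # gradient of ||Ax - b||^2 at x0
print(x0)
print(np.allclose(grad, 0))

# Null-space matrix N from the SVD: the last n - k columns of V.
U, s, Vt = np.linalg.svd(A)
k = np.linalg.matrix_rank(A)
N = Vt[k:, :].T

# Every x = A+ b + N y attains the same (minimal) squared residual.
base = np.sum((A @ x0 - b) ** 2)
others = [np.sum((A @ (x0 + N @ np.array([y])) - b) ** 2) for y in (-1.0, 0.5, 3.0)]
print(base, others)
```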

4 Software considerations

4.1 Software libraries

Software libraries for basic matrix operations are available in most languages. Examples include LAPACK, GSL, JAMA for Java, and NumPy for Python. Matlab is a special-purpose language devised explicitly to make matrix calculations convenient. Most packages will provide the Cholesky decomposition, LU decomposition, QR decomposition, and SVD. They typically also provide eigenvalue/eigenvector computations for symmetric positive definite matrices, and sometimes for nonsymmetric matrices as well.

4.2 Computational Complexity

For square matrices, matrix-vector multiplication is $O(n^2)$, while the naive approach to matrix multiplication is $O(n^3)$. There are algorithms that achieve a slightly lower exponent, but these are typically not competitive in practice because of large hidden constants. Matrix inversion is as complex as matrix multiplication, and is typically performed using the $O(n^3)$ LU decomposition, or the Cholesky decomposition if the matrix is symmetric positive definite (also $O(n^3)$ but with a smaller constant factor). Eigendecompositions and SVDs are also $O(n^3)$ but with a still larger constant factor.

4.3 Sparse Matrices

Sparse matrices (matrices in which most entries are zero) arise in many applications including physical simulation and problems on graphs. Sparse matrices can be stored in less than $O(n^2)$ space, and many operations (addition, multiplication) can be performed in time proportional to the number of nonzero entries rather than the size of the matrix.
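As a toy illustration of the idea, the sketch below stores only the nonzero entries of a tridiagonal matrix in a dictionary keyed by (row, column) and multiplies by a vector in time proportional to the number of stored entries. This storage scheme and the name `sparse_matvec` are assumptions made for illustration. Production libraries use more compact formats.

```python
def sparse_matvec(entries, x, m):
    """Compute y = A x, where `entries` maps (i, j) -> nonzero A_ij.

    The loop visits each stored entry once, so the cost is proportional
    to the number of nonzeros rather than to m * n.
    """
    y = [0.0] * m
    for (i, j), a_ij in entries.items():
        y[i] += a_ij * x[j]
    return y

# Tridiagonal matrix with 2 on the diagonal and -1 next to it.
n = 5
entries = {}
for i in range(n):
    entries[(i, i)] = 2.0
    if i + 1 < n:
        entries[(i, i + 1)] = -1.0
        entries[(i + 1, i)] = -1.0

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = sparse_matvec(entries, x, n)
print(len(entries), "stored entries instead of", n * n)
print(y)
```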

A sparse system of equations Ax = b with symmetric positive definite A can often be solved efficiently using the conjugate gradient method. See J. Shewchuk (1994) “An Introduction to the Conjugate Gradient Method Without the Agonizing Pain” for a good (and entertainingly written) reference on this method.
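For orientation, here is a compact sketch of the conjugate gradient iteration in its common textbook form, assuming A is symmetric positive definite. The function name, stopping tolerance, and iteration cap are assumptions. A dense NumPy array is used for brevity, although the method only needs matrix-vector products, which is what makes it attractive for sparse matrices.

```python
import numpy as np

def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Approximately solve A x = b for symmetric positive definite A."""
    n = len(b)
    if max_iter is None:
        max_iter = 10 * n
    x = np.zeros(n)
    r = b - A @ x              # residual
    d = r.copy()               # search direction
    rs_old = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs_old) < tol:
            break              # residual is small enough
        Ad = A @ d             # the only use of A: one matrix-vector product
        alpha = rs_old / (d @ Ad)
        x += alpha * d
        r -= alpha * Ad
        rs_new = r @ r
        d = r + (rs_new / rs_old) * d
        rs_old = rs_new
    return x

# Symmetric positive definite test matrix: 2 on the diagonal, -1 beside it.
n = 10
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.ones(n)

x = conjugate_gradient(A, b)
print(np.allclose(A @ x, b))
```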

5 Exercises

1.
