Do Items That Measure Self-Perceived Physical Appearance

advertisement

STRUCTURAL EQUATION MODELING, 72(1), 148-162

Copyright © 2005, Lawrence Eribaum Associates, Inc.

Do Items That Measure Self-Perceived

Physical Appearance Function

Differentially Across Gender Groups?

An Application of the MACS Model

Vicente Gonzalez-Roma, Ines Tomas, Doris Ferreres

and Ana Hernandez

Facultad de Psicologia

University of Valencia, Spain

The aims of this study were to investigate whether the 6 items ofthe Physical Appearance Scale (Marsh, Richards, Johnson, Roche, & Tremayne, 1994) show differential

item functioning (DIF) across gender groups of adolescents, and to show how this

can be done using the multigroup mean and covariance structure (MG-MACS) analysis model. Two samples composed of 402 boys and 445 girls were analyzed. Two

DIF items were detected. One of them showed uniform DIF in the unexpected direction, whereas the other showed nonuniform DIF in the expected direction. The practical significance of the DIF detected was trivial. Thus, we concluded that the differences between girls' and boys' average scores on the analyzed scale reflect valid

differences on the latent trait.

Psychologists providing assessment and testing services, as well as those who

carry out studies involving comparison of test scores across groups, are obligated

to select and use nonbiased test instruments (Knauss, 2001). For this to be possible,

researchers and test constructors have to provide empirical evidence about whether

test items function differently across groups (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 1999), In this article, we investigate whether the six items of

the Physical Appearance Scale (PAS), a scale included in the Physical-Self DeCorrespondencc concerning this study should be addressed to Vicente Gonzalez-Roma, University

of Valencia, Faculty of Psychology, Department of Methodology of Behavioral Sciences, Av. Blasco

Ibaiiez, 21, 46010-Valencia, Spain. E-mail: Vicente.Glez-roma@uv.es

DIF IN PHYSICAL APPEARANCE ITEMS

149

scription Questionnaire (PSDQ; Marsh, Richards, Johnson, Roche, & Tremayne,

1994), show differential item functioning (DIF) across adolescent gender groups.

Furthermore, we show how this can be done using multigroup confirmatory factor

analysis with mean and covariance structure (MG-CFA-MACS; Sorbom, 1974), a

method frequently used to investigate group differences on factor means (e.g.,

McArdle, Johnson, Hishinuma, Miyamoto, & Andrade, 2001). During the last two

decades, many techniques have been proposed to detect DIF. Some examples are

the Mantel-Haenszel procedure (Holland & Thayer, 1988), the SIBTEST procedure (Shealy & Stout, 1993a, 1993b), the logistic regression procedure

(Swaminathan & Rogers, 1990), and item response theory (IRT) procedures (see

Camilli & Shepard, 1994). However, some of these techniques may be too complex for psychologists lacking a highly technical or statistical background. If we

want DIF analysis to be systematically implemented when tests are assessed, we

have to foster the use and application of the most accessible methods. With this

idea in mind, the DIF detection procedure used in this article was selected because

it is an extension of a technique familiar to many psychologists: factor analysis.

DIF AND SELF-PERCEIVED PHYSICAL APPEARANCE

Recent research has shown that adolescent girls obtain lower scores than adolescent boys on self-perceived physical appearance measures. Marsh and colleagues

(Marsh, Hey, Roche, & Perry, 1997; Marsh et al., 1994) have found consistent statistically significant differences between the average observed scores obtained by

girls and boys that favor boys. There are some data that suggest that adolescent

girls really have a lower self-perceived physical appearance than boys have. For instance, the percentage of anorexia cases among girls is higher than among boys,

and anorexia is related to a dysfunction in self-perception of physical appearance.

However, this does not rule out the possibility that DIF of appearance items contributes to producing gender differences in observed scores on self-perceived

physical appearance measures. To conclude that observed differences reflect valid

differences in the latent trait, measurement equivalence (or the opposite, DIF) of

items across gender groups must be analyzed. This involves ascertaining whether

item parameters differ across gender groups or not.

An item is said to function differentially across groups when individuals at the

same latent trait level, but belonging to different groups, respond differently to that

item. If a substantive number of items on a questionnaire show DIF (i.e., are not

equivalent across groups), "then we cannot even assume that the same construct is

being assessed across groups by the same measure" (Chan, 2000, p. 170). If the

proportion of items showing DIF is small, then meaningful between-group comparison may still be possible at the latent trait level (Chan, 2000; Reise, Widaman,

& Pugh, 1993). On the other hand, impact is defined as differences in item (or test)

150

GONZALEZ-ROMA, TOMAS, FERRERES, HERNANDEZ

performance caused by real differences in the underlying latent variable measured

by the test (Camilli & Shepard, 1994).

Two types of DIF could be distinguished depending on the type of item parameter that differs across groups (i.e., the item difficulty and the item discrimination

parameters). Uniform DIF exists when only the item difficulty parameter differs

across groups. In the context ofthe factor analytic item response model that is used

later, the item difficulty parameter corresponds to the expected (mean) item response value for participants with a score on the latent trait that equals zero. Therefore, the higher the expected item response value for those participants, the easier

the item. When the items under study are personality or attitude items, the item difficulty parameter is referred to as the item attractiveness or evocativeness parameter (e.g., Lanning, 1991; Oort, 1998). Nonuniform DIF exists when the item discrimination parameter differs across groups, whether or not the item difficulty

parameter remains invariant. Item discrimination represents the relation between

an item and the latent trait that the item is supposed to measure. It also refers to the

extent to which an item is able to distinguish between individuals with close but

different scores on the latent trait. In the context of the factor analytic item response model that is used later, the item discrimination parameter corresponds to

the item factor loading.

A pervasive criticism of empirical DIF detection studies is that the detection of

DIF has been performed in a nontheoretical manner without a priori hypotheses regarding whether DIF would exist and in which direction (Chan, 2000). One exception to this general trend is Chan (2000). He based his hypothesis about nonuniform DIF on Roskam's (1985) idea that in personality inventories the item's

discrimination parameter expresses its psychological ambiguity. According to

Roskam (1985), the more the item is formulated in concrete terms and the less ambiguous it is, the larger the discrimination parameter. However, Zumbo, Pope,

Watson, and Hubley (1997) tested Roskam's idea and did not find any evidence

supporting it. Zumbo et al. (1997) concluded that "a general theory on the interpretation of item parameters in personality inventories in terms of psychological ambiguity is not viable and such interpretation should be tailored to individual scales

or subscales" (p. 968).

In this study, to guide DIF detection, we formulated two tentative hypotheses,

taking into account item content and some characteristics ofthe groups compared.

The first tentative hypothesis refers to the difficulty or evocativeness parameter.

Because ofthe predominance of female models in advertisements and mass media,

adolescent girls are exposed to same-gender beauty models more frequently than

adolescent boys are. Thus, it is possible that when they rate their own physical appearance, their (top) models of reference are more clear and present, and this may

help explain why they score lower than adolescent boys on physical appearance

items. A recent experiment showed that children who were exposed to images

judged to epitomize the media emphasis on physical beauty reported lower physi-

DIE IN PHYSICAL APPEARANCE ITEMS

151

cal appearance than children who viewed images judged to be devoid of such messages (Oliveira, 2000). Considering these arguments, we expect that physical appearance items will show uniform DIF (i.e., differences in the item intercepts)

across gender groups, so that the item intercepts will be lower for girls than for

boys.

The second tentative hypothesis refers to the discrimination parameter. Because

of socialization processes, adolescent girls talk and express their opinions about

their physical appearance with more naturalness than do adolescent boys. In

groups of girls, it is socially acceptable to talk about physical appearance, whereas

in groups of boys, talking about physical appearance may be regarded unfavorably.

Therefore, it is possible that other factors, such as group norms, lack of familiarity,

and feelings of shame, also play a role when boys express their opinions about their

physical appearance. Thus, we think that girls' responses to physical appearance

items will be more strongly related to the intended underlying latent trait than

boys' responses will. Therefore, we hypothesize that physical appearance items

will show nonuniform DIF (i.e., differences in the item factor loadings) across gender groups, so that the factor loading estimates will be larger for girls than for boys.

Because of the lack of more precise theoretical reasons, no specific hypotheses as

to which items would show DIF were formulated.

THE MG-CFA-MACS MODEL FOR ANALYZING DIF

Unlike the CFA with covariance structure, which assumes that all measured variable and latent variable means are equal to zero, in the MACS model the means of

latent and measured variables are not presumed to be zero. Thus, within this framework the linear relation between items and latent traits is expressed as follows

(Sorbom, 1974):

X = T^ + A,^ + 6

(1)

where Xx is a (q x 1) vector that contains the intercept parameters; X is a (q x 1) vector containing the scores on the q measured variables or items; A^r is a (q x r) matrix

of factor loadings that represents the relations between the q measured variables

and the r latent variables; ^ is a (r x 1) vector that contains the factor scores on the r

latent variables; 5 is a (q x 1) vector that represents the measurement errors or

uniquenesses for each measured variable. It is assumed that uniquenesses are normally distributed with a population mean that equals zero. Mean values of measured variables [E(X)] can be explained in terms of the latent variable means as

follows:

X;, + A;,K

(2)

152

GONZALEZ-ROMA, TOMAS, FERRERES, HERNANDEZ

where K is a (r x 1) column vector containing the latent variahle means [E(^) - K].

Hence, in an unidimensional questionnaire, when the latent trait equals zero, the

expected score for the items is the corresponding item intercept. As stated earlier,

within the MACS model, the item intercept (T) represents the item difficulty (or

evocativeness) parameter, whereas the item factor loading (X) corresponds to the

item discrimination parameter.

When two or more groups are considered. Equations 1 and 2 become:

X(g) = X x(8) + Ax(s) ^(8) + 5(8)

(3)

E(X) (s) = Xx(8' + Ax(g) K(g)

(4)

where g = 1, 2, ... G refers to the different groups considered. If item parameters

are invariant across groups (i.e., items do not show DIE), then

E(X)(g) =Tx +Ax K<g)

(5)

According to Equation 5, when item intercepts and factor loading are invariant

across groups, between-group differences in average item scores do reflect between-group differences in latent means. Under these conditions, average item and

scale scores are comparable across groups. According to Meredith (1993), the

invariance of item intercepts and factor loadings represents a type of factorial

invariance, the so-called strong factorial invariance. When only the invariance of

item intercepts cannot be maintained, then uniform DIE exists. In this case, the intercepts that a given item (j) shows in the different groups of participants considered are not equivalent [i.e., TJO ^ TJ*^) ^^ ... ^ TJ(G)]. When the invariance of item

factor loadings cannot be maintained, regardless of whether the intercepts are invariant or not, then nonuniform DIE exists [i.e., Xf^^ ^ A,j(2) :^ ... ^ A,j(<^>]. Thus,

within the MG-MACS model, testing for DIE involves testing for item intercepts

and factor loadings invariance.

In summary, the aim of this study is twofold. The first is to investigate whether

the six items of the PAS, a scale included in the PSDQ (Marsh et al., 1994), show

DIE across gender groups of adolescents. The second is to show how this can be

done using MG-CEA-MACS.

METHOD

Participants

The study sample was composed of 847 participants of between 12 and 16 years of

age. Eorty-eight percent were boys (n = 402), and 52% were girls (n = 445). The

average age for both groups was 13.3 years (SD - 1 for both groups).

DIF IN PHYSICAL APPEARANCE ITEMS

1 53

All of the participants responded to the PSDQ (see Marsh et al., 1994, for a full

presentation of the questionnaire). Participation in the study was voluntary. The

same researcher administered the questionnaire to classroom units of high school

students during a regular class period. Before the participants completed the questionnaire, the test administrator read the written test instructions, and procedural

questions were solicited and answered.

Measures

The items analyzed in this study are those included in the Appearance scale of the

PSDQ (Marsh et al,, 1994). The Appearance scale comprises six items that are responded to using a 6-point response scale ranging from 1 (completely false) to 6

(completely true; see Appendix). Items 4 and 6 are reversed. Responses to these

items were transformed so that a high score was indicative of high self-perceived

physical appearance. The Cronbach's alpha estimates computed for the Appearance scale in both samples were satisfactory: ,87 in the boy sample and .86 in the

girl sample.

Analysis

All the MG-MACS models were tested using LISREL 8.30 (Joreskog & Sorbom,

1993) and normal theory maximum likelihood (ML) estimation methods. As the

ML estimation procedure assumes a multivariate normal distribution for the observed variables, this assumption was tested. The tests of multivariate normality

provided by PRELIS 2,30 indicated that this assumption could not be maintained

in the analyzed groups of participants. The tests of univariate normality indicated

that the analyzed variables could not be considered as normally distributed. Simulation studies that have analyzed the robustness of ML estimators to violations of

distributional assumptions when the observed variables are discrete (e.g.,

Boomsma, 1983; Harlow, 1985; Muthen & Kaplan, 1985; Qlsson, 1979), pointed

out that not much distortion of the ML chi-square, and very little or nonexistent parameter estimate bias, is to be expected with nonnormal ordinal variables when

they show a moderate departure from normality; that is, when they have univariate

skewness and kurtosis in the range from -1 to -i-l, The skewness statistic computed

for the items analyzed showed ranges from -0.66 to 0.05 and from -0.45 to 0.37 in

the boy and girl samples, respectively. The kurtosis statistic showed a range from

-0,60 to -0.13 in the boy sample and a range from -0.86 to -0.34 in the girl sample.

Therefore, because skewness and kurtosis are minimal, the assumption of approximate normality for the observed variables is reasonable, and the use of normal theory ML estimation techniques can be justified (Bollen, 1989).

To assess the goodness of fit for the models, we considered the chi-square goodness-of-fit statistic (x^), the root mean square error of approximation (RMSEA),

154

GONZALEZ-ROMA, TOMAS, FERRERES, H E R N A N D E Z

and the Nonnormed Fit Index (NNFI). The chi-square statistic is a test of the difference between the observed covariance matrix and the one predicted by the specifted model. Nonsignificant values indicate that the hypothesized model fits the

data. However, this index has two important limitations: (a) it is sensitive to sample

size, so that the probability of rejecting a hypothesized model increases as sample

size increases (Joreskog & Sorbom, 1993; La Du & Tanaka, 1995; Tanaka, 1993);

and (b) it evaluates whether or not the analyzed model holds exactly in the population, which is a very demanding assessment. Thus, the use of other fit indexes has

been suggested as an alternative to tests of statistical significance (e.g.. Marsh,

1994; Marsh & Hocevar, 1985; Marsh et al,, 1997; Reise et aL, 1993). The

RMSEA evaluates whether the analyzed model holds approximately in the population. Guidelines for interpretation of the RMSEA suggest that values of about .05

or less would indicate a close fit of the model, values of about .08 or less would indicate a fair fit of the model or a reasonable error of approximation, and values

greater than .1 would indicate poor fit (Browne & Cudeck, 1993; Browne & Du

Toit, 1992). Finally, the NNFI (Bentler & Bonett, 1980; Tucker & Lewis, 1973) is a

relative measure of fit that also applies penalties for a lack of parsimony. NNFI values of .90 or above indicate good model ftt (Bentler & Bonett, 1980). Chi-square

difference tests were used to compare the fit of nested models.

Considering that the DIF analyses assume that the scale under study is

unidimensional, this assumption was tested before running the DIF analyses. First,

scale factorability was assessed by means of Bartlett's sphericity test. The results

obtained for boys, ^^(is, A^ = 402) = 1275.6, p < .01, and girls, 5^^(15, A^ = 445) =

1235.9, p < .01, supported the factorability of the scale in both samples. Then, a

multigroup one-factor CFA model with no invariance constraints across groups

was tested. The fit provided by the model was acceptable, x\lS,N- 847) = 63.9,p

< .01; RMSEA = .075; NNFI - .97. Thus the unidimensionality of the Appearance

scale could be maintained.

The MG-MACS model was fitted to the 6 x 6 item variance-covariance matrices and vectors of six means of both the boys and the girls samples. To detect uniform and nonuniform DIF, a series of nested multiple-group, single-factor models

were tested according to the iterative procedure recommended by Oort (1998) and

followed by Chan (2000). For all the models, a number of constraints were imposed for model identification and scale purposes. First, an item was chosen as the

reference indicator. To guide this selection, we conducted an exploratory factor

analysis. The item that showed the highest loading (Item 5) was selected as the reference indicator. This item's factor loading was set to 1 in both groups to scale the

latent variable and provide a common scale in both groups. Second, the factor

mean was fixed to zero in the boys group for identification purposes, whereas the

factor mean in the girls group was freely estimated. Finally the reference indicator

intercepts were constrained to be equal in both groups to identify and estimate the

factor mean in the girls group and the intercepts in both groups (Sorbom, 1982).

DIF IN PHYSICAL APPEARANCE ITEMS

155

The iterative procedure starts with a fully equivalent model in which all the item

factor loadings and the intercepts are set to be equal in both groups. Then nonuniform DIF is evaluated. Specifically the largest modification index (MI) associated

to the factor loading estimates is evaluated to determine its statistical significance.

An MI shows the reduction in the model chi-square value if the implied constrained parameter is freely estimated. Because this chi-square difference is distributed with 1 4^ it is easy to determine whether the reduction in chi-square is statistically significant. If the largest loading MI is statistically significant, it is

concluded that the corresponding item exhibits nonuniform DIF across the two

groups. Then a new model is fitted. In this model, the factor loading that showed a

statistically significant MI is freely estimated, and the remaining factor loading estimates are constrained to be equal in both groups. The largest MI associated to the

factor loading estimates is evaluated again to determine its statistical significance,

and this iterative procedure continues until the largest MI is not statistically significant. After evaluating nonuniform DIF, the procedure focuses on uniform DIF to

determine the statistical significance ofthe Mis associated to the intercepts. If the

largest MI associated to the intercepts is statistically significant, it is concluded

that the corresponding item exhibits uniform DIF across groups. As before, a new

model is fitted in which the corresponding intercept is freely estimated, and the remaining intercepts are constrained to be equal in both groups. The largest intercept

MI is evaluated again to determine its statistical significance. This iterative procedure continues until the largest intercept MI is not statistically significant. Taking

into account that each MI is evaluated multiple times, the Bonferroni correction

should be used to test the significance of the reduction in chi-square at a specified

alpha. In this study, because at each step a maximum of six Mis were considered,

the alpha value for determining the significance of each MI was .05/6 = .008.

RESULTS

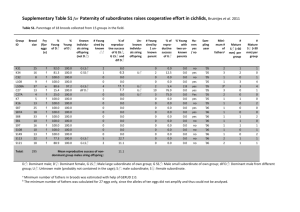

Descriptive statistics and correlations among items are displayed in Table 1. The

boy sample showed an average score on the Appearance scale (24.1) that is larger

than the average score obtained for the girl sample (21.1), t = 8.2, p < .01.

Nonuniform DIF

The initial model (Model 1) that we tested imposed invariance constraints across

groups on all the factor loadings and all the intercepts. The fit of this model was acceptable, x\2S,N^S47)

= 103.5,/?< .01; RMSEA = .079; NNFI = .97. To detect

nonuniform DIF, the largest MI associated with the factor loadings was tested: It

corresponded to the factor loading of Item 4 (MI =17,9 ,p< .008), and because it

was statistically significant, we concluded that Item 4 showed nonuniform DIF

156

GONZALEZ-ROMA, TOMAS, FERRERES, HERNANDEZ

TABLE 1

Descriptive Statistics and Correlations Among Items

Correlations^

Item

1

2

3

4

5

6

3,82/3,31

4,19/3,86

3,36/2,70

4,46/3,74

3,97/3,32

4,27/4,14

,35/1,34

,22/1,27

,37/1,29

,41/1,52

,39/1,41

,38/1,43

Skewness"

Kurtosis"

J

2

3

4

5

6

-0,22/-0,14

-0,46/-0,45

0,05 / 0,37

-0,66/-0,29

-0,46/-0,10

-O,58/-O,41

-0,50/-0,86

-0,13/-0,34

-0,60/-0,57

-0,32/-0,75

-0,34/-0,77

-0,21/-0,68

1

,63

,64

,48

,64

,44

,48

1

,59

,48

,59

,38

,59

,56

1

,48

,67

,43

,53

,56

,67

1

,63

,46

,61

,58

,72

,71

1

,42

,33

,39

,40

,45

,38

1

''The value on the left is for boys, the value on the right is for girls, ''The correlations below the diagonal are for boys, the correlations above the diagonal are for girls,

across gender groups. Then, a new model (Model 2) in which the aforementioned

factor loading was freely estimated in both groups was fitted. This model yielded

an acceptable fit to data, %2(27, N = 847) = 85,6,;? < .01; RMSEA = .070; NNFI =

.97. Now, the largest MI associated with the factor loadings was not statistically

significant (MI = 3.97, p > .008), so we concluded that none ofthe remaining items

showed nonuniform DIF.

Uniform DIF

Next, the MI values associated with the item intercepts were examined to detect

uniform DIR The largest MI was the one associated with the intercept of item 6

(MI - 8.7, p < .008). Because this MI was statistically significant, we concluded

that Item 6 showed uniform DIF across gender groups. Then, a new model (Model

3), in which the factor loading of Item 4 and the intercept of Item 6 were freely estimated in both groups, was fitted. This model showed an acceptable fit to data,

y}(21,N= 847) = 76.9,/? < .01; RMSEA = .067; NNFI = .98, and did not yield any

additional statistically significant ML Therefore, we concluded that Items 1,2, and

3 did not show DIF across gender groups.

To confirm that Items 4 and 6 showed nonuniform and uniform DIF, respectively, the fit of Model 3 was compared with the fit of Model 1. The difference between the chi-square statistics of each model is distributed as a chi-square with a

number of degrees of freedom equal to the difference between the degrees of freedom for both models. In this case, the difference in model fit was statistically significant, x'^(2,N= 847) = 26.5, p< .01, supporting the presence of DIF on the items

that were flagged by the MI values. To confirm that the remaining invariance constraints could be maintained, the fit of Model 3 was compared with the fit of the

model that imposed no invariance constraints across groups. The difference in

DIF IN PHYSICAL APPEARANCE ITEMS

1 57

model fit was not statistically significant, %\S,N- 847) = 13.0, p> .05, supporting

that Items 1, 2, and 3 did not show DIF.

To rule out the possibility that the item used as the reference indicator (Item 5)

might be a DIF item, the iterative procedure of DIF detection was repeated using a

randomly selected item (Item 3) as a reference indicator. Items 4 and 6 were

flagged again as showing nonuniform and uniform DIF, respectively, and Item 5

was not.

DIF Interpretation

The item parameter estimates yielded by Model 3 are displayed in Table 2. The difference observed in the factor loading estimates showed by Item 4 ("I am ugly") in

both samples was in the expected direction. The factor loading estimate for the

girls sample (.86) was higher than the factor loading estimate for the boys sample

(.64). However, the difference observed in the intercepts shown by Item 6 ("Nobody thinks that I'm good looking") in both samples was in the unexpected direction. The intercept esfimate for the girls sample (4.53) was higher than the intercept estimate for the boys sample (4.27).

To assess the practical significance of the DIF detected, an additional analysis

was carried out at the scale level. We ascertained the practical implications of retaining the DIF items in the Appearance scale. The mean score on the Appearance

scale, with and without removing Items 4 and 6, was computed for each gender

group and compared across groups using the standardized mean difference (d;

Chan, 2000). With Items 4 and 6 included, the means for the two groups differed

by .53 deviation units (d - .53). With Items 4 and 6 excluded from the scale, the

standardized mean difference equaled .54. The difference on d provides an index

of the practical significance of the DIF detected. In this study, the d difference

equaled .01. This value most likely points out that the practical implication of the

DIF detected at the scale level is trivial (Chan, 2000).

TABLE 2

Item Parameter Estimates Provided by Model 3

Item

1

2

3

4

5

6

Eactor Loading^

Intercept

0.74

0.70

0.81

0.64/0.86''

0.85

0.52

3.84

4.26

3.33

4.44

3.97

4.27/4.53''

"Common metric completely standardized solution. ''The value on the left is for

boys, the value on the right is for giris.

1 58

GONZALEZ-ROMA, T O M A S , FERRERES, HERNANDEZ

Differences in Latent Means

Finally, the estimates obtained for the latent means under Model 3 revealed statistically significant differences between groups. As stated earlier, for identification

purposes the latent mean for boys was fixed to zero. The estimated latent mean for

girls was -0.65, with a standard error of 0.09. Therefore, the latent means for the

two groups differed by practically two thirds of a standard deviation unit. Thus,

considering that there existed real differences in the underlying latent variable

measured by the Appearance scale, we concluded that the statistically significant

difference obtained between the observed average scores for the two groups reflected impact.

DISCUSSION

The aims of this study were to investigate whether the six items ofthe PAS (Marsh

et al., 1994) show DIF across gender groups of adolescents, and to show how this

can be done using MG-CFA-MACS. Recent research has consistently shown that

groups of adolescent girls obtain lower average scores than groups of adolescent

boys on self-perceived physical appearance measures (Marsh et al., 1997; Marsh et

al., 1994). DIF analysis must be carried out before researchers can conclude that

these observed gender differences reflect valid differences in the latent trait. Only

when items do not show DIF, or the amount of DIF detected is practically trivial,

does comparison of gender groups' observed average scores make sense.

According to our first tentative hypothesis, we expected that the analyzed physical appearance items would show uniform DIF (i.e., differences in the item intercepts) across gender groups, so that the item intercepts would be lower for girls

than for boys. This hypothesis was not supported. Only one ofthe six items showed

uniform DIF, but it was in the unexpected direction. According to our second tentative hypothesis, we expected that the items on the Marsh et al. (1994) PAS would

show nonuniform DIF (i.e., differences in the item factor loadings) across gender

groups, so that the factor loading estimates will be larger for girls than for boys.

This hypothesis was supported for only one of the six items analyzed. Interestingly, the two items that showed DIF were reversed items. However, the fact that

those items showed different types of DIF impedes, for the moment, easily interpreting this finding and formulating plausible post hoc explanations. Future research should address this issue.

From a practical point of view, the practical significance of the DIF detected

was trivial. The standardized mean difference (d) between the observed average

scores for boys and girls was practically the same regardless of whether it was

computed using the six appearance items or only the four items with no DIF {d values of .54 and .53, respectively). Thus, it can be concluded that the differences be-

DIF IN PHYSICAL APPEARANCE ITEMS

159

tween girls' and boys' average scores on the Marsh et al. (1994) PAS reflect valid

differences in the latent trait.

In relation to this, in this study we found gender differences that are congruent

with the findings reported in the literature (Marsh et al., 1997; Marsh et al., 1994):

Girls showed an average score on the Appearance scale that is significantly lower

than the average score obtained for boys. Besides, the latent means for the two

groups significantly differed by 0.65 SD, and the girls sample showed the lowest

latent mean. Because of socialization processes and the impact of mass media,

girls receive more pressure than boys to be good looking. Considering that the

standards of reference for both genders are high, the different pressure received

may help to explain why girls score lower than boys when they compare themselves to reference models and rate themselves on self-perceived physical appearance.

We also wanted to show how MG-CFA-MACS can be used for DIF detection.

In comparison with other methods, such as those based on item response theory

(IRT), the method used here has a number of advantages (Chan, 2000; Reise et al.,

1993). First, programs performing MG-CFA-MACS, such as LISREL, EQS,

Mplus and AMOS (i.e., structural equation modeling [SEM] programs), provide

different indexes of practical fit to data that are very useful when the sample size is

large and the models include a large number of indicators. IRT programs (e.g.,

MULTILOG, PARSCALE) only provide the likelihood ratio chi-square test as a

measure of model fit, and this test is very sensitive to sample size. Second, when a

model imposing invariance constraints on an item parameter cannot be maintained, modification indexes provided by SEM software are very useful for detecting which particular items have parameters that are not invariant. This facility allows researchers to specify models assuming partial invariance on the item

parameters involved. IRT programs do not provide modification indexes or analogues. Third, SEM programs allow researchers to work with multidimensional

questionnaires, whereas IRT programs, such as MULTILOG and PARSCALE, are

suitable for unidimensional questionnaires. Multidimensional models can be

operationalized using SEM programs following the strategy proposed by Little

(1997). This strategy allows researchers to test hypotheses that refer to the

invariance of factor parameters (correlations, variances, and means), hypotheses

that are relevant in cross-cultural research. Fourth, SEM methods offer researchers

different alternatives for testing the hypothesis that a grouping variable (e.g., gender) is producing DIF: a group comparison strategy (see Breithaupt & Zumbo,

2002) and a strategy based on including the grouping variable in the structural

model (see Oort, 1992).

However, this method also has some limitations. The MG-CFA-MACS model

is a model for continuous variables. In our study and in previous applications of

this model (e.g., Chan, 2000), ordinal Likert-type items were analyzed under the

assumption that responses to graded polytomous items approximate a continuous

160

GONZALEZ-ROMA, TOMAS, FERRERES, HERNANDEZ

scale. In relation to this, a recent simulation study has showed that

MG-CFA-MACS detects both uniform and nonuniform DIF in graded polytomous

items quite well when the amount of DIF is medium and large in a sample of 800

individuals, keeping the proportion of false positive detections (Type I error) close

to, or lower than, nominal alpha values (Hernandez & Gonzalez-Roma, 2003). Although these results are promising, more simulation research is needed regarding

the use of MG-CFA-MACS for testing item parameter invariance with graded

polytomous items.

REFERENCES

American Educational Research Association, American Psychological Association & National Council on Measurement in Education (1999). Standards for educational and psychological testing.

Washington DC: American Educational Research Association,

Bentler, P. M., & Bonett, D. G. (1980). Significance tests and goodness of fit in the analysis of

covariance structures. Psychotogieal Bulletin. 88, 588-606.

Bollen, K. A. (1989). Structural equatiotxs with latent variables. New York: Wiley.

Boomsma, A. (1983). On the robustness of LISREL (maxitnum likeiihood estimation) against small

sample size and non-normality. Unpublished doctoral dissertation. University of Groningen,

Groningen, The Netherlands.

Breithaupt, K., & Zumbo, B. D. (2002). Sample invariance ofthe structural equation model and the

item response model: A ease study. Structural Equation Modeling, 9, 390-412.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S.

Long (Eds.), Testing structural equation models (pp. 136-162), Newhury Park, CA: Sage.

Browne, M. W., & Du Toit, S. H. C. (1992). Automated fitting on nonstandard models. Multivariate Behavioral Research, 27, 269-300.

Camilli, G., & Shepard, L. A. (1994). Methods for identifying biased test items. Thousand Oaks, CA:

Sage.

Chan, D. (2000). Deteetion of differential item funetioning on the Kirton Adaptation-Innovation Inventory using multiple-group mean and covariance structure analyses, Multivariate Behavioral Research, 35, 169-199.

Harlow, L. L. (1985). Behavior of some elliptical estimators with nonnormal data in a covariance

structurefratnework: A Monte Carlo study. Unpublished doetoral dissertation. University of California, Los Angeles.

Herndndez, A., & Gonzalez-Roma, V. (2003). Evaluating the multiple-group mean and covariance

analysis model for the detection of differential item funetioning in polytomous ordered items.

Psieothema, 15, 322-327.

Holland, P. W., & Thayer, D. T. (1988). Differential item funetioning and the Mantel-Haenszel procedure. In H. Wainer & H. I. Braun (Eds.), Test validity (pp. 129-145), Hillsdale, NJ: Lawrence

Erlbaum Associates, Ine.

Joreskog, K. G., & Sorbom, D. (1993). LISREL 8: User's reference guide. Mooresville, IN: Seientific

Software.

Knauss, L. K. (2001). Ethical issues in psychological assessment in school settings. Journal of Personality Assessment, 77, 231-241.

La Du, T. J., & Tanaka, J. S. (1995). Incremental fit index changes for nested structural equation models. Multivariate Behavioral Research, 30, 289-316.

DIF IN PHYSICAL APPEARANCE ITEMS

1 61

Lanning, K. (1991). Consistency, scalability atid persortality measuretnent. New York:

Springer-Verlag.

Little, T. D. (1997). Mean and covariance structures (MACS) analyses of cross-cultural data: Practical

and theoretical issues. Multivariate Behavioral Research, 32, 53-76.

Marsh, H. W. (1994). Confirmatory factor analysis models of factorial invariance: A multifaceted approach. Structural Equation Modeling. I. 5-34.

Marsh, H. W., Hey, J., Roche, L., & Perry, C. (1997). Structure of physical self-concept: Elite athletes

and physical education students. Journal of Educational Psychology, 89, 369-380.

Marsh, H. W., & Hocevar, D. (1985). The application of confirmatory factor analysis to the study of

self-concept: First and higher order factor structures and their invariance across age groups. Psychoiogicai Bulletin, 97, 562-582.

Marsh, H. W., Richards, G. E., Johnson, S., Roche, L., & Tremayne, P (1994). Physical

Self-Description Questionnaire: Psychometric properties and a multitrait-multimethod analysis of

relations to existing instruments. Journal of Sport and Exercise Psychology, 16, 270-305.

McArdle, J. J., Johnson, R. C , Hishinuma, E. S., Miyamoto, R. H., & Andrade, N. N. (2001). Structural

equation modeling of group differences in CES-D ratings of native Hawaiian and non-Hawaiian

high school students. Journal of Adolescent Research, 16, 108-149.

Meredith, W. (1993). Measurement invariance, factor analysis and factorial invariance. Psychometrica,

58, 525-543.

Muthen, B., & Kaplan, D. (1985). A comparison of some methodologies for the factor analysis of

non-normal Likert variables. British Journal of Matketnatieat and Statistical Psychology, 38,

171-189.

Oliveira, M. A. (2000). The effect of media's objectification of beauty on children's body esteem. Dissertation Abstracts International: Section B: The Sciences and Engineering, 61, 221 A.

Olsson, U. (1979). On the robustness of factor analysis against crude classification ofthe observations.

Multivariate Behavioral Research, 14, 485-500.

Oort, F. J. (1992). Using restricted factor analysis to detect item bias. Methodika. 6, 150-166.

Oort, F. J. (1998). Simulation study of item bias detection with restricted factor analysis. Structural

Equation Modeling, 5, 107-124.

Reise, S. P., Widaman, K. F., & Pugh, R. H. (1993). Confirmatory factor analysis and item response

theory: Two approaches for exploring measurement invariance. Psychological Bulletin. 114,

552-566.

Roskam, E. E. (1985). Current issues in item response theory. In E. E. Roskam (Ed.), Measuretnent and

personality assessment (pp. 3-20). Amsterdam: North Holland.

Shealy, R., & Stout, W. F. (1993a). An item response theory model for test bias. In P. W. Holland & H.

Wainer (Eds.), Differential item functioning: Theory and practice (pp. 197-239). Hillsdale, NJ: Lawrence Eribaum Associates, Inc.

Shealy, R., & Stout, W. F. (1993b). A model-based standardization approach that separates true

bias/DIF from group differences and detects test bias/DTF as well as item bias/DIF. Psychometriica,

58, 159-194.

Sorbom, D. (1974). A general method for studying differences in factor means and factor structures between groups. British Journal of Mathematical and Statistical Psychology, 27, 229-239.

Sorbom, D. (1982). Structural equation models with structured means. In K. G. Joreskog & H. Wold

(Eds.), Systems under indirect observation (pp. 183-195). Amsterdam: North Holland.

Swaminathan, H., & Rogers, H. J. (1990). Detecting differential item functioning using logistic regression procedures. Journal of Educational Measurement. 27, 361-370.

Tanaka, J. S. (1993). Multifaceted conceptions of fit in structural equation models. In K. A. Bollen & J.

S. Long (Eds.), Testing structural equation models (pp. 10-39). Newbury Park, CA: Sage.

Tucker, L. R., & Lewis, C. (1973). A reliability coefficient for maximum likelihood factor analysis.

Psychometriica, 38, 1-10.

162

GONZALEZ-ROMA, TOMAS, FERRERES, HERNANDEZ

Zumbo, B. D., Pope, G. A., Watson, J. E., & Hubley, A. M. (1997). An empirical test of Roskam's conjecture about the interpretation of an ICC parameter in personality inventories. Edueational and Psychological Measurement, 57, 963-969.

APPENDIX

PSDQ APPEARANCE SCALE ITEMS

1.

2.

3.

4.

5.

6.

I am attractive for my age

I have a nice looking face

I'm better looking than most of my friends

I am ugly

I am good looking

Nobody thinks that I'm good looking

Note. From Physical Self-Description Questionnaire: Psychometric properties

and a multitrait-multimethod analysis of relations to existing instruments, by H,

W. Marsh, G, E. Richards, S. Johnson, L. Roche, & P. Tremayne, 1994, Journal of

Sport and Exercise Psychology, 16, pp, 270-305. Reprinted with permission ofthe

authors.