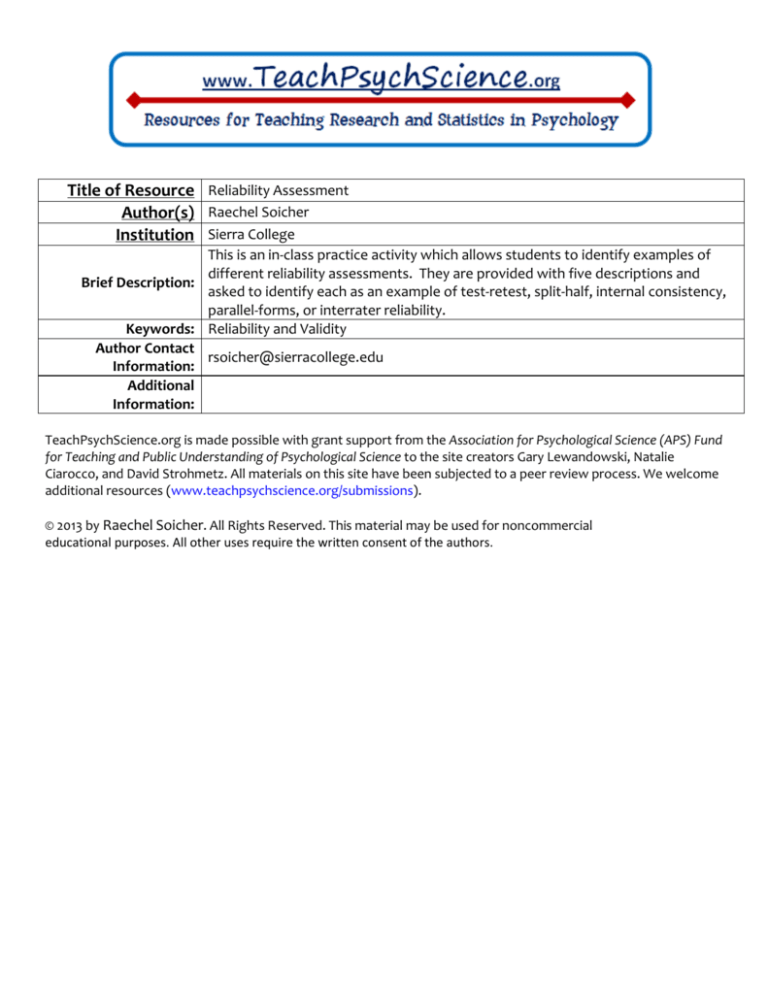

Title of Resource: Reliability Assessment

Author(s): Raechel Soicher

Institution: Sierra College

Brief Description: This is an in-class practice activity which allows students to identify examples of different reliability assessments. They are provided with five descriptions and asked to identify each as an example of test-retest, split-half, internal consistency, parallel-forms, or interrater reliability.

Keywords: Reliability and Validity

Author Contact Information: rsoicher@sierracollege.edu

Additional Information:

TeachPsychScience.org is made possible with grant support from the Association for Psychological Science (APS) Fund

for Teaching and Public Understanding of Psychological Science to the site creators Gary Lewandowski, Natalie

Ciarocco, and David Strohmetz. All materials on this site have been subjected to a peer review process. We welcome

additional resources (www.teachpsychscience.org/submissions).

© 2013 by Raechel Soicher. All Rights Reserved. This material may be used for noncommercial

educational purposes. All other uses require the written consent of the authors.

Instructors:

It is often useful to provide students with concrete examples that help to illustrate concepts in research methods that

the students may not have the opportunity to experience first-hand. In this brief exercise (5-10 minutes), students

review examples of reliability assessment which are real-life descriptions of the different assessment types. This can be

given as an in-class assignment following review of the test types, either individually or in pairs, or may be assigned as

homework.

Handout 1: Testing Reliability

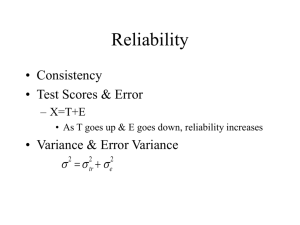

Test-Retest Reliability: In this type of reliability assessment, the measure of the construct is tested on two

different occasions (time-points) for consistency. Testing is typically completed a couple of weeks apart. If the

measure is reliable, then the two scores for each participant will be comparable across the two time points. The

scores do not need to be exactly the same, but should be similar.
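
To make "comparable" concrete, test-retest consistency is often summarized with a correlation between the two sets of scores. The sketch below is one way to compute a Pearson correlation; the function name `pearson_r` and the scores are hypothetical and chosen only for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around each mean.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for five participants, tested two weeks apart.
time_1 = [12, 18, 25, 30, 22]
time_2 = [14, 17, 27, 29, 21]

test_retest_r = pearson_r(time_1, time_2)
print(round(test_retest_r, 2))
```

A value close to 1 means participants kept roughly the same rank order across occasions, even though no individual score is exactly the same.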

Split-Half Method: This reliability assessment splits the measure of the construct into two halves and

compares performance on the two halves. In this method, the researcher randomly assigns half of the

questions (or items) to one group of participants and half of the questions (or items) to a different group of

participants. Then, a correlation is computed between scores on the two halves. If the measure is reliable, then the

correlation will be high. This method is preferable to test-retest because it requires only one time point for

data collection.

Internal Consistency: This method is similar to the split-half method. In the case of internal consistency,

however, the split-half procedure is repeated multiple times (Think! What do you think would be the

advantage of administering this multiple times?). Because of this, multiple correlation coefficients will be

computed. Lastly, the researcher finds the average of the correlations. In practice, “Cronbach’s Alpha” can be

used to compute a value for internal consistency.
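
As a rough illustration of how Cronbach's Alpha can be computed, the sketch below uses the standard formula based on the variance of each item and the variance of participants' total scores. The function name `cronbach_alpha` and the response data are hypothetical; statistical software would normally be used in practice.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per participant)."""
    k = len(responses[0])  # number of items on the measure
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    # Transpose rows so that each tuple holds every participant's score on one item.
    items = list(zip(*responses))
    item_variances = [variance(item) for item in items]
    total_scores = [sum(row) for row in responses]
    return (k / (k - 1)) * (1 - sum(item_variances) / variance(total_scores))


# Hypothetical data: five participants answering a four-item scale (1-5 ratings).
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 2))
```

Alpha approaches 1 when the items rise and fall together across participants, which is what we would expect if they all measure the same construct.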

Parallel-Forms Method: In this method, the researcher creates two measures of the same construct which

are administered to the same group of people at one time point. Then, the researcher calculates the

correlation between the two forms. The higher the correlation, the more reliable the measure. This method

is not very time consuming to administer, which is a benefit, but you need to have a relatively long measure in

order to divide it into two parts of sufficient length.

Inter-rater Reliability: The previous four tests of reliability are focused on creating a measure of a construct.

For this test of reliability, the emphasis is on using a particular measure consistently across different

researchers. When a research design requires observations of an event (e.g., acts of aggression), this

assessment is used to make sure the observations are consistent and unbiased when more than one person on

the research team is doing the observations. The results of the observations for multiple observers are

compared and their agreement is assessed. The higher the agreement between observers, the more reliable

they have been. For example, if the observers agree 92 times out of 100 acts of aggression, the reliability is a

strong 92%.
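
The percent agreement in this example can be worked out in a few lines. This is a minimal sketch: the function name `percent_agreement` and the coded observations are hypothetical, constructed only so that two observers agree on 92 of 100 events.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of observations on which two raters gave the same code."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty, equal-length lists of codes")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100 * matches / len(rater_a)


# Hypothetical codes for 100 observed events: the raters disagree on the last 8.
rater_a = ["aggressive"] * 100
rater_b = ["aggressive"] * 92 + ["not aggressive"] * 8

agreement = percent_agreement(rater_a, rater_b)
print(agreement)  # 92.0
```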

Handout 2: Identify the Reliability Assessment Being Used

In each of the following examples, determine which form of assessment is being used for the reliability of the

measure of the dependent variable. Possible forms of assessment:

Test-Retest, Split-Half, Internal Consistency, Parallel-Forms, Inter-Rater

______________________ 1. Julie is going to measure a person’s mood before and after a stressful situation.

However, she is worried that if a participant sees the same mood questionnaire before and after the

experimental situation, it will change their responses. Therefore, Julie decides to create two different mood

inventories composed of different questions. In order to assess reliability of the two, she brings in twenty

pilot participants, administers both forms to the group, and then correlates scores on the two forms.

______________________ 2. Ronnie, Janna, and Todd have been watching video recordings of children

playing in a room with different types of toys. They have been tasked with noting when a child displays

helping behaviors. To make sure they are consistent with their categorizations, the researcher compares all

three of their observations to see the extent to which they agree with one another.

______________________ 3. Dr. Perkins wants to know if his test of extraversion is reliable. On Monday, he

splits the test of extraversion such that twenty participants see the odd-numbered questions and twenty

participants see the even-numbered questions. On Wednesday, he repeats a similar procedure with a new

group, where twenty participants respond to questions 1-20 and the other twenty participants respond to

questions 21-40. After correlating scores on all four “parts”, he evaluates the average of the correlations.

______________________ 4. Rodger has developed a test of depressive symptoms. He gives the test to

twelve of his patients in October and then again to the same twelve in December. Next, he determines the

correlation in scores between the two testing occasions.

______________________ 5. In just one session, Dr. Woolley administers an inventory she developed to

measure college students’ level of motivation. To determine if her inventory is reliable, she compares the

scores on the first half of the inventory to the second half of the inventory.

______________________ 6. Professor X wants to assess the level of pre-existing knowledge of students in

his upper division psychology class. On the first day of class, the students complete the final exam from

Professor X’s introductory psych class. Then, a week later, before any content has been introduced, Professor

X administers the exam for a second time. A high level of consistency in the results between day one and the

end of week 1 would indicate that Professor X’s exam is reliable.

______________________ 7. Sierra College is interested in evaluating the reliability of a critical thinking

assessment. After developing a set of 100 critical thinking problems, half of them are randomly assigned to

students at the Rocklin campus and the other half are assigned to students at the Roseville campus. The

reliability of the critical thinking assessment will be high if the scores of students at both campuses are

comparable.