The Clicker Way to an “A”! New Evidence for Increased Student Learning

and Engagement: Understanding the Pedagogy behind the Technology

Lena Paulo Kushnir, Ph.D.

Teaching Technology Support Office,

Office of the Dean, Faculty of Arts and Science,

University of Toronto

Toronto, Ontario, Canada

lena.paulo.kushnir@utoronto.ca

Abstract: More and more universities look to educational media and technology to engage students and

enrich learning environments. Integrating interactive online tools with active teaching pedagogies can

effectively transform otherwise passive lecture-based classes into lively, engaging discussion-based courses.

Research on the use of student response systems (clickers) and a related teaching strategy, Peer Instruction

(PI), in undergraduate Psychology classes is presented. Over 350 students were surveyed on their opinions,

perceptions and use of clickers and PI. Empirical measures including EEG brain-wave patterns were used to

assess the impact that clickers and PI had on student activity and learning outcomes. Findings showed a

significant, positive impact on learning; preliminary analyses of EEG brain-wave data show significant

differences in brain activity during PI versus traditional lectures. Variables that explain how clickers and PI

influence brain activity, cognition and student learning outcomes are considered.

Keywords: Pedagogy, Educational Technology, Student Response System, Clickers, Peer Instruction,

Active learning, Student Engagement, Learning Outcomes

1. Introduction

1.1 Problem

In some disciplines, instruction does little to change undergraduates’ misconceptions about core subject areas.

For example, in psychology there are some concepts with which students almost always have difficulty, and this

observation is independent of instructor, text, or student level of performance. So there are some concepts with

which even the brightest students typically have problems. Active teaching and learning strategies can help correct

existing misconceptions that might otherwise go unchecked (Berry, 2009; Caldwell, 2007; Chen et al., 2010; Harlow

et al., 2009; Martyn, 2007; Reay et al., 2005). More and more university instructors look to educational media and

technologies to liven up traditional lectures (Johnson & McLeod, 2004; Moredich & Moore, 2007; Ribbens, 2007).

A lot of research shows that the use of student response systems (clickers: hand-held, web-based or mobile) in

undergraduate lectures is increasingly popular and often viewed as an effective way to engage students, create

enriched learning environments, and positively impact student learning (Addison et al., 2009; Beatty, 2004; Berry,

2009; Blood & Neel, 2008; Carnevale, 2005; Chen et al., 2010; Crews et al., 2011; Crouch & Mazur, 2001;

Duncan, 2005; Fitch, 2004; Harlow et al., 2009; Hall et al., 2005; Liu & Stengel, 2011; Martyn, 2007; Mula &

Kavanagh, 2009). The use of clickers together with interactive learning (either in the classroom or online) is an

effective teaching strategy for engaging students and avoiding passive, non-participatory learning that is often

characteristic of lecture-based classes (Bachman & Bachman, 2011; Beatty, 2004; Brueckner & MacPherson, 2004;

Burnstein & Lederman, 2003; Caldwell, 2007; Carnevale, 2005; Crouch & Mazur, 2001; Dufresne et al., 1996;

Harlow et al., 2009; Mazur, 1997; Rao & DiCarlo, 2000).

There is a growing body of empirical literature investigating the impact of educational media and technologies

on student learning outcomes; the same is true of research on the use of clickers and related teaching strategies that

go well with clicker use. With the exception of a small number of studies scattered through the literature, it has been

only since about 2007 that more and more empirically based studies investigating the impact of clickers have been

published (e.g., Caldwell, 2007; Crossgrove & Curran, 2008; Cummings & Hsu, 2007; Freeman et al., 2007; and

Martyn, 2007), with about two thirds of that literature published in just the last few years; for example, see Addison

et al. (2009), Bachman and Bachman (2011), Berry (2009), Chen et al. (2010), Freeman et al. (2011), Hull and

Hull (2011), Liu and Stengel (2011), Mula and Kavanagh (2009), Mayer et al. (2009), Smith et al. (2011),

Sprague and Dahl (2010), and Yaoyuneyong and Thornton (2011).

Generally the literature is very positive about the use of clickers, especially with more vendors offering web and

mobile solutions (including devices and software). Current research shows that students report overwhelmingly

positive attitudes towards the use of clickers in their courses, and that they believe that the use of clickers and the

resulting class and online discussions, plus student interactions, help them to learn (Bachman & Bachman, 2011;

Berry, 2009; Caldwell, 2007; Chen et al., 2010; Crossgrove & Curran, 2008; Fitch, 2004; Harlow et al., 2009; Hall

et al., 2005; Martyn, 2007; Moredich & Moore, 2007; Ribbens, 2007). Of the studies that measure differences in

student learning between courses that use clickers and active learning strategies and courses that do not, about half find

statistically significant differences in student grades, suggesting that this particular educational technology

can sometimes have a significant and positive impact on student learning outcomes.

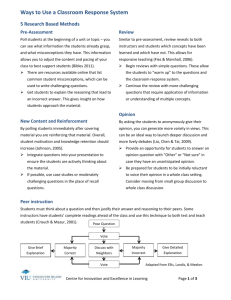

1.2 What is Peer Instruction?

Peer Instruction (PI) is a teaching strategy used during lecture that actively involves students in the teaching

process while assessing comprehension on the fly (for both instructors and students). For students, it allows them to

discover and immediately correct misconceptions; for instructors, it lets them know whether to move on to another

topic or spend more time to review a topic that seems to be problematic (Baderin, 2005; Bachman & Bachman,

2011; Bean & Peterson, 1998; Blood & Neel, 2008; Fitzpatrick et al., 2011; Hall et al., 2002; Kellum et al., 2001;

King, 1991; Kuh, 2004). Instructors and students do not have to wait until scheduled tutorials, or worse, a test, to

demonstrate mastery in particularly difficult subject areas. PI helps students think about difficult conceptual

material and trains students to process material deeply, to apply concepts they learn, and to justify their reasoning to

classmates in either online or face-to-face discussions (Bachman & Bachman, 2011; Bean & Peterson, 1998;

Fitzpatrick et al., 2011; Hall et al., 2002; King, 1991; Smith et al., 2011).

1.3 How does Peer Instruction work?

Specific PI procedures vary from instructor to instructor, but a common procedure described in the literature is a

vote–discuss–vote again procedure where instructors first ask students a question about what was taught, and (using

classroom, web or mobile clickers) students answer the question first on their own. Students then are asked to

discuss the same question in small groups, and then asked to answer again using clickers. During this second vote,

students have the opportunity to revise their answers (e.g., Beatty, 2004; Crouch & Mazur, 2001; Harlow et al., 2009;

Mazur, 1997; Rao & DiCarlo, 2000).
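
As a rough illustration of how one vote–discuss–vote round might be tallied, the Python sketch below compares paired first and second votes from the same students. The function names, the answer labels and the eight sample votes are hypothetical and are not data from this study.

```python
def proportion_correct(votes, correct):
    """Fraction of votes that match the correct option."""
    if not votes:
        raise ValueError("no votes recorded")
    return sum(v == correct for v in votes) / len(votes)


def vote_shift(first, second, correct):
    """Summarize how paired votes changed between the two rounds.

    first[i] and second[i] must belong to the same student.
    """
    if len(first) != len(second):
        raise ValueError("each student needs a first and a second vote")
    pairs = list(zip(first, second))
    return {
        "first_correct": proportion_correct(first, correct),
        "second_correct": proportion_correct(second, correct),
        "wrong_to_right": sum(a != correct and b == correct for a, b in pairs),
        "right_to_wrong": sum(a == correct and b != correct for a, b in pairs),
    }


# Hypothetical votes from eight students on one question whose answer is "B".
first_vote = ["A", "B", "C", "B", "D", "B", "A", "C"]
second_vote = ["B", "B", "B", "B", "D", "B", "B", "C"]
summary = vote_shift(first_vote, second_vote, correct="B")
print(summary)
```

Keeping the votes paired by student, rather than comparing only the two class-wide percentages, makes it possible to see how many students moved from a wrong answer to the right one and how many moved the other way.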

1.4 Why does Peer Instruction work?

PI distinguishes between memorization and understanding; it forces students to think for themselves and to put

their thoughts into words in engaged and interactive settings. Unlike traditional class discussions, PI includes all

students in the active learning, rather than just a small number of students who often tend to be the same students

leading traditional class discussions. PI also encourages students to think carefully

about their answers and opinions regarding concepts they discuss with peers, rather than, for example, reinforcing

students in face-to-face classes who quickly put their hands up to answer first (without careful thought). Even shy

or quiet students can respond and click along with the entire class. It is much more meaningful and effortful to write

effectively in online discussions or to speak coherently in small groups, than it is to blurt out the first thing that

comes to mind in large class discussions.

1.5 Issues considered in this study

In this study, the use of clickers and PI together were considered active teaching strategies that could positively

impact engagement, learning and cognition. But what are the long-term benefits of this? The literature is clear on

how clickers and PI together help comprehension during online discussions or lecture, but are there any savings in

students’ learning, such that students’ understanding and memory for the learned material are preserved over time and

beyond the immediate online discussion or outside the classroom (measured in this study, for example, in

subsequent term tests and a final exam)? Even if there are savings in learning, what other learning might one

observe? Is there any transfer of valuable learning skills to other course work and tasks? Instructors cannot cover

all of the material in a course through the use of clickers and PI. Just as instructors cannot teach everything, they

cannot “PI” everything. In many disciplines (at least in higher education), students are for the most part self-directed

learners, left to learn the majority of the curriculum on their own while the instructor covers only a small portion of

the material in lecture. So, can the use of clickers and PI in only certain segments of a course help students acquire

skills that they can apply more broadly (e.g., through modeling) to the rest of the course curriculum, that is, more

than just to the specific concepts covered in PI (as measured by overall course grades which, in this study, reflect

much more than just student performance on PI, term tests and final exam)? In this particular course, students were

given over a dozen course components that contributed to their final grade. The instructor believed that if PI had

any significant impact on behaviours that contribute overall to successful learning skills, then this might be detected

in students’ levels of performance in all areas of the course and not just those directly linked to clickers and PI.

What do cognition and learning look like in a course with educational media, technologies and collaborative

activities versus a traditional lecture-based course (as measured in this study by electroencephalography (EEG) –

brain-wave patterns measuring the electrical activity of the brain from the scalp)? What are students’ experiences

with the use of clicker technology and PI in their courses (as measured in this study by an end-of-course student

survey and evaluation of the use of student response technologies)?

2. Research Questions

This study investigated the following three questions: (1) Does the use of clickers and PI increase student

learning (online or face-to-face) as measured by (a) student performance on PI and (b) student performance on term

tests and a final exam? (2) Do student cognition and learning “look” different in clicker and PI segments of

courses versus lecture-based classes, as measured by student EEG brain-wave patterns? (3) What are students’

experiences with the use of clickers and PI, as measured by a student perception survey?

3. Design of study

3.1 Description of study

This study observed and tracked the behaviours of students enrolled in one of six sessions of an Introductory

Psychology course taught by the same Professor. The Professor consistently used clicker technology and PI together

to engage students in collaborative discussions in each of the sessions. Clickers were a required component of the

course (much like a required textbook, laboratory component, tutorial, etc.). This requirement satisfied the PI and

participation component of the course which collectively was worth 10% of students’ final grade in the course. A

small number of students chose not to get the required technology and/or participate, but this was not considered to

be different than cases where some students choose not to purchase a text, not to participate in specific course

components, or not to complete an assignment.

The instructor used the PI vote–discuss–vote again procedure described above, that is, where an instructor first

asks students a question about what was taught, and (using clickers) students answer the question on their own,

followed by small group discussions and then another opportunity to vote. The instructor also asked students to rate

their level of confidence that their answers were correct after each question vote (i.e., after the questions they

answered on their own, and then again after the questions they answered following small group discussions).

Wireless EEG headsets were used to compare brain-wave patterns representing the brain activity of students actively

participating in PI versus the brain activity of students listening to a traditional lecture.

Students were surveyed at the end of each section of the course about their opinions, perceptions and use of

clickers and PI. Students were asked whether they acquired the technology, whether they used the clicker to participate in the course, whether

they liked using it, whether they thought the use of clickers with PI helped them learn course material, and what other

comments or experiences they wanted to share about the clicker technology and PI. Students’ participation in

completing the surveys was voluntary and they were encouraged to complete the surveys to help with the continued

development of the course and the development of clicker and PI use in future courses.

3.2 Participants

Participants were three hundred and fifty-eight undergraduate students enrolled in an Introductory Psychology

course at a small urban center university. Data were collected from six different sessions of the same course offered

by the same Professor.

3.3 Analyses

Analyses of the data included response frequencies of the quantitative survey questions across all six sections of

the course. Independent samples t-tests were used to compare mean scores on test and exam questions that tested

comprehension of course material that was covered in PI (i.e., questions identified as PI Questions) versus test and

exam questions that tested comprehension of course material that was not covered in PI but that students could have

learned about either from lectures or from the assigned course readings (i.e., questions identified as non-PI

Questions). Qualitative analyses of the open-ended survey questions included weighted word lists that were

calculated from the students’ text answers and laid out as word clouds. The word clouds

represented a summary of the text that students wrote in their open-ended answers. A user-generated word cloud

visualizes information that is related to specific survey questions and visually depicts the frequency of specific

topics that students write about in their survey answers. The importance (or frequency) of specific words is often

displayed through font size (as in the examples in this study), font colour, or some other attribute (see Bateman et

al., 2008 for an overview of word clouds). EEG brain-wave patterns were analyzed and qualitative differences

between wave types and differences across learning contexts (i.e., PI versus traditional lecture) were considered.
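
To make the idea of a weighted word list concrete, the following Python sketch counts how often each word appears in a set of open-ended answers and maps the counts linearly onto font sizes, as a word cloud would. It is only an illustration under stated assumptions: the stop-word list, the point-size range, the function names and the three sample answers are hypothetical, and the tool actually used to generate the word clouds in this study is not specified.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real analysis would likely use a longer one.
STOPWORDS = {"a", "and", "i", "in", "it", "me", "of", "the", "to", "were", "with"}


def weighted_word_list(answers, stopwords=STOPWORDS):
    """Count how often each non-stop-word occurs across all answers."""
    counts = Counter()
    for text in answers:
        for word in re.findall(r"[a-z']+", text.lower()):
            if word not in stopwords:
                counts[word] += 1
    return counts


def font_sizes(counts, min_pt=10, max_pt=48):
    """Scale word counts linearly between min_pt and max_pt."""
    if not counts:
        return {}
    lo, hi = min(counts.values()), max(counts.values())
    span = hi - lo
    return {
        word: max_pt if span == 0 else min_pt + (n - lo) * (max_pt - min_pt) / span
        for word, n in counts.items()
    }


# Hypothetical survey answers, not taken from the study.
answers = [
    "The discussions helped me learn",
    "I liked the discussions with peers",
    "Clickers were fun",
]
counts = weighted_word_list(answers)
sizes = font_sizes(counts)
print(counts.most_common(3))
print(sizes["discussions"], sizes["fun"])
```

In this toy example "discussions" appears twice and every other retained word once, so it receives the largest font size and the rest receive the smallest.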

4. Results and Discussion

This study investigated whether the use of clickers and PI had any impact on students’ learning outcomes,

engagement and experience. PI data, test scores and exam scores were analyzed to measure learning outcomes.

EEG brain-wave patterns were used as a measure of student activity and engagement during PI versus traditional

lectures, and an end-of-term student survey provided measures of students’ experiences with the use of clickers and

PI.

PI data revealed significant shifts from wrong answers (for questions that the instructor first asked students to

answer on their own) to right answers (for questions that the instructor asked students to answer following small

group discussions). Similar shifts in students’ increased levels of confidence (that their answers were correct after

each question) were also detected after small group discussions. Figures 1, 2 and 3 illustrate just a few of

over 100 examples of data from PI questions that worked well and served to assess comprehension on the

fly for both the instructor and students. Figure 1 demonstrates a tremendous increase in student learning and

confidence after small group discussions.

Figure 1: Example of PI question that worked very well and increased student learning and confidence.

This finding supports the idea that students should not study alone and that study groups (or discussion groups) can

support learning by fostering deep processing of course material, application of concepts, and justification of

thought to peers. Such active and interactive learning positively impacts students’ confidence and understanding of

the course material. Figure 2 demonstrates how PI data can signal to the instructor the need for more review of lecture

material since the data revealed that almost none of the students understood the concept.

Figure 2: Example of PI question that worked well to signal both the instructor and students that more instruction was necessary

since students did not understand the concept.

This finding supports the idea that active teaching and learning strategies can correct existing misconceptions

immediately, which otherwise might go unchecked until a tutorial, or worse, a test or exam (when it might be too

late to correct the misconception). Figure 3 demonstrates how PI data can signal the instructor to move along since

the data revealed that almost all of the students understood the concept and that no further instruction was necessary.

Figure 3: Example of PI question that worked well to signal both the instructor and students that no further instruction was

necessary since almost all students understood the concept but very few were confident that their answer was correct until after PI.

Interestingly, Figure 3 indicates that no further instruction is necessary but that PI is still worthwhile since many

students are not confident in their answers until after small group discussions, again, suggesting that students who

work collaboratively in groups can be more confident in their understanding of course material.

Two term tests (held in weeks 5 and 9 of the 12-week course, respectively) and one final exam (held in week 14,

during a scheduled exam period) were analyzed to compare mean scores on questions identified as PI Questions (as

described above) and questions identified as non-PI Questions. Independent samples t-tests revealed significant

differences between mean grades on PI Questions and non-PI Questions across both term tests and the final exam,

t(69) = 13.02, p ≤ .0001. Students consistently performed better on PI Questions than on non-PI Questions,

providing evidence that PI had a positive impact on student learning and, more importantly, that there were savings

in students’ learning up to 12 weeks later (i.e., that students understood and remembered PI concepts better than

non-PI concepts 2 to 12 weeks later, from when they first started using PI to when they were tested on their

knowledge and memory of those concepts on the term tests and the final exam). This is a particularly important

finding, since it provides empirical evidence that active, deep processing has a lasting effect on memory for

learning. It would be very interesting to re-test students (who participated in this study) a year later to see if there are

any further savings in learning, but as with most other undergraduate courses, once a course is completed, such

analyses would be difficult to track.
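
For readers who want to see how an independent samples t statistic of this kind is computed, the Python sketch below implements the pooled-variance (Student's) form using only the standard library. This is a sketch under assumptions: the paper does not state whether equal variances were assumed or what the unit of analysis was, and the two score lists are hypothetical. Under this form the degrees of freedom are the two group sizes added together minus two, so a reported df of 69 would correspond to 71 observations in total.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, df), where df = len(group_a) + len(group_b) - 2.
    Assumes the pooled variance is non-zero.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    df = n_a + n_b - 2
    # Weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, df


# Hypothetical percentage scores, not data from the study.
pi_scores = [80, 85, 90, 75, 70]
non_pi_scores = [60, 65, 70, 55, 50]
t_stat, df = pooled_t(pi_scores, non_pi_scores)
print(f"t({df}) = {t_stat:.2f}")
```

Converting the statistic to a p-value requires the t distribution, which the standard library does not provide; a statistics package would normally be used for that step.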

EEG brain-wave data revealed interesting differences in brain activity during PI activities versus traditional

lectures. Overall, the EEG data showed a variety of different patterns for each individual participant, and individual

patterns depended on what any one individual paid attention to, listened for, or did (e.g., whether students took

lecture notes, worked on a computer, etc.), but some characteristic patterns emerged during PI and others during

traditional lecture. Figures 4 to 9 depict snippets or still images of brain activity (i) at the start of an introductory

Psychology lecture on Memory and Cognition, (ii) several minutes into the lecture, (iii) at the beginning of PI and

clicker use, (iv) during small-group PI discussions, and (v) at the end of PI discussions.

Figure 4: Brain waves measured at the start of a lecture.

Figure 5: Brain waves measured several minutes into a memory and cognition lecture.

The still EEG images are representative of the characteristic patterns identified from hours of EEGs collected

over the different sessions. These serve to illustrate characteristic patterns of brain activity that helped the

researchers infer participants’ mental states (e.g., anxious, relaxed, attentive, engaged), provide empirical

evidence for such states, and provide another measure of what engagement “looks” like, so to speak. For the

discussion here, the most relevant waves have been labeled in Figures 4 to 9 so that the reader can better understand

what the EEG image illustrates. The grey line, labeled “overall level of attention”, is a general measure of

attentiveness resulting from sensory input and processing from the various sensory modalities. The black line,

labeled “arousal/anxiety” is an aggregate measure of the different neural frequencies and provides a measure of

arousal or anxiety. In this case, the higher the line the more relaxed (or less anxious or aroused) the person was.

The other lines represent different types of brain waves corresponding to different brain activity. For example, the

light and dark purple lines, labeled “focused attention/higher executive function”, are Beta waves which are

characteristic of deep focused attention; the sort of focused attention required to work on a difficult problem,

coordinate and manage a meaningful discussion, and other activities that facilitate higher executive function in

human cognition. Figures 4 and 5 demonstrate the differences in neural activity at the beginning of a class

compared to several minutes into a traditional lecture. There is a great deal of activity at the beginning of the

lecture (in this particular case, both in the class as students get settled, and in their brains!). Within 10 to 15

minutes, brain activity drops dramatically; students still pay attention and are relaxed, but focused attention and deep

thought are low.

Figure 6: Brain waves measured several minutes into a memory and cognition lecture.

Figure 7: Brain waves measured at the beginning of PI and clicker use.

Figures 6 and 7 demonstrate characteristic differences in neural activity during a traditional lecture versus neural

activity at the beginning of PI and small-group discussions. What is apparent immediately are the spikes in

“focused attention” (purple lines) in Figure 7, demonstrating that all of a sudden, students are focused and attending

carefully. What is not so obvious, but very interesting, is the sudden drop in the “arousal/anxiety” line in Figure 7,

indicating that students are, all of a sudden, anxious or nervous. There is an optimal level of arousal for working on

difficult tasks, so being a bit nervous or excited when working on a difficult task facilitates performance. This

phenomenon is known as the Yerkes-Dodson Law and it describes the empirical relationship between performance,

levels of arousal and task difficulty (Yerkes & Dodson, 1908). It is fascinating to “see” this evidenced here.

Figure 8: Brain waves measured during small-group PI discussions.

Figure 8 illustrates brain activity during small-group discussion and corroborates what we see in Figure 7. Delta

brain waves are characteristic of deep sleep and unconsciousness, but spikes are common after an individual spends

some time focused on one particular task.

Figure 9: Brain waves measured at the end of the small-group PI discussions.

Figure 9 illustrates this phenomenon after students finish PI and wrap up the small-group discussions (as delta waves

are detected and illustrated as light yellow lines).

Consistent with other studies discussed above, an end-of-term student survey revealed that students reported

overwhelmingly positive attitudes towards the use of clickers. About 85% of students reported that they enjoyed

using their clickers in the course and hoped that other courses would adopt them also, and about 78% of students

believed that clickers (and the resulting discussions and student interactions) helped them to learn the material better

than if they had not used them and had not had the group discussions. Figures 10 and 11 corroborate these findings

and show that students perceived value in using the clickers and PI, enjoyed their experience, and felt most

engaged when in discussions with their peers. As described earlier, the larger the words in these word clouds, the

more often students used the terms in their responses. About 10% of students reported that they did not satisfy the

clicker and PI component of the course (i.e., they did not obtain a clicker for PI participation) or that they did but did not

enjoy using the clickers and did not believe that clickers helped with learning the course material.

Figure 10: Word cloud of students’ open-ended answers about when they felt most engaged.

Figure 11: Word cloud of students’ open-ended answers about the use of Clickers and PI in class.

5. Conclusions and Recommendations

The findings in this study suggested that integrating student response systems and PI had a positive impact on

students’ learning, engagement with their peers and the course material, their experience of being exposed to such

teaching strategies and educational technologies, and their perception of the efficacy of such strategies and

technologies. The findings also demonstrated the need for better, more engaging ways to teach difficult material and

to dispel misconceptions, since traditional lectures might not always help in this regard. The EEG data showed that

drawn out, passive lectures risked what almost looked like flatlined brain activity (had these measures been

electrocardiograms (ECGs), the instructor would have had to call 911!). At first glance, these EEGs look like a mess

of peaks, valleys and lines, but upon careful inspection, this mess of data can be parceled out to reveal fascinating

and meaningful outcomes, linking brain activity to course activity. Future research might focus on this sort of

empirical connection between brain and behaviour. The PI data demonstrated that the outcomes are clearly not

about the technology itself (i.e., the clickers) but rather about the process of interacting with peers, feeling the

pressure to process material deeply, apply the concepts and then justify their reasoning in small group discussions

(i.e., the peer instruction). The real benefit of the clickers is that they facilitate the quick, immediate feedback that

benefits both students and the instructor. It is encouraging that the use of clicker technology is not simply some

frivolous distraction and fun gadget. Results in this study supported the idea that students can learn better, at a

deeper level, and retain that knowledge longer with the use of this particular technology and associated teaching

strategies. Also, as reported in this study (and in most other studies), students report overwhelmingly that they

really like learning with these tools (and yes, it is also fun; they really like the clicking!).

The results in this study support the literature that suggests that active and interactive teaching and learning

strategies are effective ways of enriching learning environments and transforming otherwise passive lecture-based

courses into lively, engaging, discussion-based courses. It is in these sorts of environments that deeper and better

learning occurs. Perhaps lectures are better taken out of the classroom to make more room (more time) for such

teaching strategies and classroom activities; not necessarily to do more or cover more material, rather to do it

differently and to get students to work differently, and to think differently about their learning. Online educational

resources and tools are certainly a good way to facilitate flipping or inverting traditional lecture courses so that

passive lectures are moved out of the classroom and more time can be dedicated to active teaching and learning

strategies in the classroom. In a subsequent study, data have already been collected and analyses have begun to see whether the

findings here in this study are replicated in hybrid or blended courses and completely online courses. At the time of

this publication, those analyses were not yet completed but preliminary findings were promising. As more and more

university instructors look to educational media and technologies to help engage students and enrich learning

environments, to create hybrid or blended learning environments, or completely online learning environments, it will

be helpful if future research focuses on the use of different learning contexts and online innovations to facilitate

different teaching and learning methods, both in and out of the classroom. Advancements in technology and

innovations in education allow universities to entertain new ways of teaching and learning. It is understanding the

pedagogy behind the technology that will get us further along in understanding how to best implement educational

media and technologies to enrich learning environments.

References

Addison, S., Wright, A., & Milner, R. (2009). Using clickers to improve student engagement and performance in an

introductory biochemistry class. Biochemistry and Molecular Biology Education, 37(2), 84-91.

Bachman, L., & Bachman, C. (2011). A study of classroom response system clickers: Increasing student engagement and

performance in a large undergraduate lecture class on architectural research. Journal of Interactive Learning Research,

22(1), 5-21.

Baderin, M.A. (2005). Towards improving student attendance and quality of undergraduate tutorials: A case study on law.

Teaching in Higher Education, 10(1), 99-116.

Bateman, S., Gutwin, C., Nacenta, M. (2008). Seeing Things in the Clouds: The Effect of Visual Features on Tag Cloud

Selections. In: Proc. of the 19th ACM conference on Hypertext and Hypermedia, pp. 193–202. ACM Press, New York.

Beatty, I. 2004. Transforming Student Learning with Classroom Communication Systems. Educause Centre for Applied Research,

Research Bulletin, V2004(3): 2-13.

Berry, J. (2009). Technology support in nursing education: Clickers in the classroom. Nursing Education

Perspectives, 30(5), 295-298.

Blood, E., & Neel, R. (2008). Using student response systems in lecture-based instruction: Does it change student engagement

and learning? Journal of Technology and Teacher Education (JTATE), 16(3), 375-383.

Brueckner, J.K., and MacPherson, B.R. 2004. Benefits from peer teaching in the dental gross anatomy laboratory. European

Journal of Dental Education, 8: 72-77.

Burnstein, R.A., and Lederman, L.M. 2003. Comparison of Different Commercial Wireless Keypad Systems. The Physics

Teacher, 41(5): 272-275.

Caldwell, J. E. (2007). Clickers in the large classroom: Current research and best-practice tips. CBE - Life Sciences

Education, 6(1), 9-20.

Chen, J. C., Whittinghill, D. C., & Kadlowec, J. A. (2010). Classes that click: Fast, rich feedback to enhance student

learning and satisfaction. Journal of Engineering Education, 99(2), 159-168.

Carnevale, D. 2005. Run a Class Like a Game Show: “Clickers” keep students involved. Chronicle of Higher Education, 51(42): B3.

Crews, T.B., Ducate, L., Rathel, J.M., Heid, K., and Bishoff, S.T., 2011. Clicker in the Classroom: Transforming Students into

Active Learners. Educause Centre for Applied Research, Research Bulletin, 9: 2-12.

Crossgrove, K., & Curran, K. L. (2008). Using clickers in nonmajors- and majors-level biology courses: Student opinion,

learning, and long-term retention of course material. CBE - Life Sciences Education, 7(1), 146-154.

Crouch, C.H., and Mazur, E. 2001. Peer Instruction: Ten years of experience and results. American Journal of Physics, 69(9): 970-977.

Cummings, R. (2007). The effects of student response systems on performance and satisfaction: An investigation in a tax

accounting class. Journal of College Teaching and Learning, 4(12), 21-26.

Dufresne, R.J., Wenk, L., Mestre, J.P., Gerace, W.J., and Leonard, W.J., 1996. Classtalk: A Classroom Communication System

for Active Learning. Journal of Computing in Higher Education, 7(2): 3-47.

Duncan, D. 2005. Clickers in the Classroom: How to Enhance Science Teaching Using Classroom Response Systems. Toronto.

Pearson Prentice-Hall and Pearson Benjamin Cummings.

FitzPatrick, K. A., Finn, K. E., & Campisi, J. (2011). Effect of personal response systems on student perception and academic

performance in courses in a health sciences curriculum. Advances in Physiology Education, 35(3), 280-289.

Freeman, M., Blayney, P., & Ginns, P. (2006). Anonymity and in class learning: The case for electronic response systems.

Australasian Journal of Educational Technology, 22(4), 568-580.

Freeman, S., O'Connor, E., Parks, J. W., Cunningham, M., Hurley, D., Haak, D., et al. (2007). Prescribed active learning

increases performance in introductory biology. CBE Life Sciences Education, 6(2), 132-139.

Freeman, S., Haak, D., & Wenderoth, M. P. (2011). Increased course structure improves performance in introductory

biology. CBE - Life Sciences Education, 10(2), 175-186.

Hall, R. H., Collier, H. L., Thomas, M. L., & Hilgers, M. G. A student response system for increasing engagement, motivation,

and learning in high enrollment lectures.

Harlow, J., Paulo Kushnir, L., Bank, C., Browning, S., Clarke, J., Cordon, A., Harrison, D., Ing, K., Kutas, C., & Serbanescu, R.

(2009). What’s all the clicking about? A study of Classroom Response System use at the University of Toronto.

Collection of Essays on Learning and Teaching (CELT), Vol. II, 114-121.

Hull, D. B., & Hull, J. H. (2011). Impact of wireless technology in a human sexuality course. North American Journal of

Psychology, 13(2), 245-254.

Liu, W. C., & Stengel, D. N. (2011). Improving student retention and performance in quantitative courses using clickers.

International Journal for Technology in Mathematics Education, 18(1), 51-58.

Martyn, M. (2007). Clickers in the classroom: An active learning approach. EDUCAUSE Quarterly, 30(2), 71-74.

Mayer, R. E., Stull, A., DeLeeuw, K., Almeroth, K., Bimber, B., Chun, D., et al. (2009). Clickers in college classrooms:

Fostering learning with questioning methods in large lecture classes. Contemporary Educational Psychology, 34(1), 51-57.

Mazur, E. 1997. Peer Instruction: A User’s Manual. Toronto: Prentice-Hall.

Mula, J. M., & Kavanagh, M. (2009). Click go the students, click-click-click: The efficacy of a student response system for

engaging students to improve feedback and performance. E-Journal of Business Education and Scholarship Teaching,

3(1), 1-17.

Radosevich, D. J., Salomon, R., Radosevich, D. M., & Kahn, P. (2008). Using student response systems to increase motivation,

learning, and knowledge retention. Innovate: Journal of Online Education, 5(1), 7.

Rao, S.P. and DiCarlo, S.E. 2000. Peer Instruction Improves Performance on Quizzes. Advances in Physiology Education, 24:

51-55.

Reay, N.W., Bao, L., Pengfei, L., Warnakulasooriya, R., & Bough, G. (2005). Toward an effective use of voting machines in

physics lectures. American Journal of Physics, 73(6), 554-558.

Smith, M. K., Trujillo, C., & Su, T. T. (2011). The benefits of using clickers in small-enrollment seminar-style biology courses.

CBE-Life Sciences Education, 10(1), 14-17.

Williamson Sprague, E., & Dahl, D. (2010). Learning to click. Journal of Marketing Education, 32(1), 93-103.

Yaoyuneyong, G., & Thornton, A. (2011). Combining peer instruction and audience response systems to enhance academic

performance, facilitate active learning and promote peer-assisted learning communities. International Journal of

Fashion Design, Technology and Education, 4(2), 127-139.

Yerkes R.M., Dodson J.D. (1908). The relation of strength of stimulus to rapidity of habit-formation. Journal of Comparative

Neurology and Psychology 18: 459–482.