FORMULAS

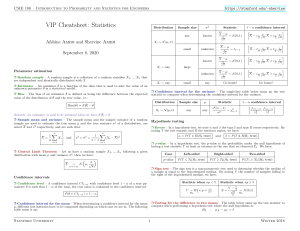

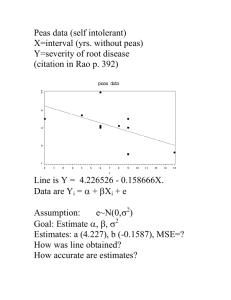

ELEMENTARY STATISTICS, 5/E Neil A. Weiss FORMULAS

NOTATION

The following notation is used on this card:

• $n$ = sample size
• $\bar{x}$ = sample mean
• $s$ = sample stdev
• $Q_j$ = $j$th quartile
• $N$ = population size
• $\mu$ = population mean
• $\sigma$ = population stdev
• $d$ = paired difference
• $\hat{p}$ = sample proportion
• $p$ = population proportion
• $O$ = observed frequency
• $E$ = expected frequency

CHAPTER 3 Descriptive Measures

• Sample mean: $\bar{x} = \dfrac{\sum x}{n}$
• Range: $\text{Range} = \text{Max} - \text{Min}$
• Sample standard deviation: $s = \sqrt{\dfrac{\sum (x - \bar{x})^2}{n - 1}}$ or $s = \sqrt{\dfrac{\sum x^2 - (\sum x)^2/n}{n - 1}}$
• Interquartile range: $\text{IQR} = Q_3 - Q_1$
• $\text{Lower limit} = Q_1 - 1.5 \cdot \text{IQR}$, $\text{Upper limit} = Q_3 + 1.5 \cdot \text{IQR}$
• Population mean (mean of a variable): $\mu = \dfrac{\sum x}{N}$
• Population standard deviation (standard deviation of a variable): $\sigma = \sqrt{\dfrac{\sum (x - \mu)^2}{N}}$ or $\sigma = \sqrt{\dfrac{\sum x^2}{N} - \mu^2}$
• Standardized variable: $z = \dfrac{x - \mu}{\sigma}$

CHAPTER 4 Descriptive Methods in Regression and Correlation

• $S_{xx}$, $S_{xy}$, and $S_{yy}$:
  $S_{xx} = \sum (x - \bar{x})^2 = \sum x^2 - (\sum x)^2/n$
  $S_{xy} = \sum (x - \bar{x})(y - \bar{y}) = \sum xy - (\sum x)(\sum y)/n$
  $S_{yy} = \sum (y - \bar{y})^2 = \sum y^2 - (\sum y)^2/n$
• Regression equation: $\hat{y} = b_0 + b_1 x$, where $b_1 = \dfrac{S_{xy}}{S_{xx}}$ and $b_0 = \dfrac{1}{n}\left(\sum y - b_1 \sum x\right) = \bar{y} - b_1 \bar{x}$
• Total sum of squares: $SST = \sum (y - \bar{y})^2 = S_{yy}$
• Regression sum of squares: $SSR = \sum (\hat{y} - \bar{y})^2 = S_{xy}^2 / S_{xx}$
• Error sum of squares: $SSE = \sum (y - \hat{y})^2 = S_{yy} - S_{xy}^2 / S_{xx}$
• Regression identity: $SST = SSR + SSE$
• Coefficient of determination: $r^2 = \dfrac{SSR}{SST}$
• Linear correlation coefficient: $r = \dfrac{\frac{1}{n-1} \sum (x - \bar{x})(y - \bar{y})}{s_x s_y}$ or $r = \dfrac{S_{xy}}{\sqrt{S_{xx} S_{yy}}}$

CHAPTER 5 Probability and Random Variables

• Probability for equally likely outcomes: $P(E) = \dfrac{f}{N}$, where $f$ denotes the number of ways event $E$ can occur and $N$ denotes the total number of outcomes possible.
• Special addition rule: $P(A \text{ or } B \text{ or } C \text{ or } \cdots) = P(A) + P(B) + P(C) + \cdots$ ($A, B, C, \ldots$ mutually exclusive)
• Complementation rule: $P(E) = 1 - P(\text{not } E)$
• General addition rule: $P(A \text{ or } B) = P(A) + P(B) - P(A \,\&\, B)$
• Mean of a discrete random variable $X$: $\mu = \sum x P(X = x)$
• Standard deviation of a discrete random variable $X$: $\sigma = \sqrt{\sum (x - \mu)^2 P(X = x)}$ or $\sigma = \sqrt{\sum x^2 P(X = x) - \mu^2}$
• Factorial: $k! = k(k - 1) \cdots 2 \cdot 1$
• Binomial coefficient: $\dbinom{n}{x} = \dfrac{n!}{x!\,(n - x)!}$
• Binomial probability formula: $P(X = x) = \dbinom{n}{x} p^x (1 - p)^{n - x}$, where $n$ denotes the number of trials and $p$ denotes the success probability.
• Mean of a binomial random variable: $\mu = np$
• Standard deviation of a binomial random variable: $\sigma = \sqrt{np(1 - p)}$

CHAPTER 7 The Sampling Distribution of the Sample Mean

• Mean of the variable $\bar{x}$: $\mu_{\bar{x}} = \mu$
• Standard deviation of the variable $\bar{x}$: $\sigma_{\bar{x}} = \sigma / \sqrt{n}$

CHAPTER 8 Confidence Intervals for One Population Mean

• Standardized version of the variable $\bar{x}$: $z = \dfrac{\bar{x} - \mu}{\sigma / \sqrt{n}}$
• $z$-interval for $\mu$ ($\sigma$ known, normal population or large sample): $\bar{x} \pm z_{\alpha/2} \cdot \dfrac{\sigma}{\sqrt{n}}$
• Margin of error for the estimate of $\mu$: $E = z_{\alpha/2} \cdot \dfrac{\sigma}{\sqrt{n}}$
• Sample size for estimating $\mu$: $n = \left(\dfrac{z_{\alpha/2} \cdot \sigma}{E}\right)^2$, rounded up to the nearest whole number.
• Studentized version of the variable $\bar{x}$: $t = \dfrac{\bar{x} - \mu}{s / \sqrt{n}}$
• $t$-interval for $\mu$ ($\sigma$ unknown, normal population or large sample): $\bar{x} \pm t_{\alpha/2} \cdot \dfrac{s}{\sqrt{n}}$ with $df = n - 1$.

CHAPTER 9 Hypothesis Tests for One Population Mean

• $z$-test statistic for $H_0\colon \mu = \mu_0$ ($\sigma$ known, normal population or large sample): $z = \dfrac{\bar{x} - \mu_0}{\sigma / \sqrt{n}}$
• $t$-test statistic for $H_0\colon \mu = \mu_0$ ($\sigma$ unknown, normal population or large sample): $t = \dfrac{\bar{x} - \mu_0}{s / \sqrt{n}}$ with $df = n - 1$.

CHAPTER 10 Inferences for Two Population Means

• Pooled sample standard deviation: $s_p = \sqrt{\dfrac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$
• Pooled $t$-test statistic for $H_0\colon \mu_1 = \mu_2$ (independent samples, normal populations or large samples, and equal population standard deviations): $t = \dfrac{\bar{x}_1 - \bar{x}_2}{s_p \sqrt{(1/n_1) + (1/n_2)}}$ with $df = n_1 + n_2 - 2$.
• Pooled $t$-interval for $\mu_1 - \mu_2$ (independent samples, normal populations or large samples, and equal population standard deviations): $(\bar{x}_1 - \bar{x}_2) \pm t_{\alpha/2} \cdot s_p \sqrt{(1/n_1) + (1/n_2)}$ with $df = n_1 + n_2 - 2$.
• Degrees of freedom for nonpooled-$t$ procedures: $\Delta = \dfrac{\left[(s_1^2/n_1) + (s_2^2/n_2)\right]^2}{\dfrac{(s_1^2/n_1)^2}{n_1 - 1} + \dfrac{(s_2^2/n_2)^2}{n_2 - 1}}$, rounded down to the nearest integer.
• Nonpooled $t$-test statistic for $H_0\colon \mu_1 = \mu_2$ (independent samples, and normal populations or large samples): $t = \dfrac{\bar{x}_1 - \bar{x}_2}{\sqrt{(s_1^2/n_1) + (s_2^2/n_2)}}$ with $df = \Delta$.
• Nonpooled $t$-interval for $\mu_1 - \mu_2$ (independent samples, and normal populations or large samples): $(\bar{x}_1 - \bar{x}_2) \pm t_{\alpha/2} \cdot \sqrt{(s_1^2/n_1) + (s_2^2/n_2)}$ with $df = \Delta$.
• Paired $t$-test statistic for $H_0\colon \mu_1 = \mu_2$ (paired sample, and normal differences or large sample): $t = \dfrac{\bar{d}}{s_d / \sqrt{n}}$ with $df = n - 1$.
• Paired $t$-interval for $\mu_1 - \mu_2$ (paired sample, and normal differences or large sample): $\bar{d} \pm t_{\alpha/2} \cdot \dfrac{s_d}{\sqrt{n}}$ with $df = n - 1$.

CHAPTER 11 Inferences for Population Proportions

• Sample proportion: $\hat{p} = \dfrac{x}{n}$, where $x$ denotes the number of members in the sample that have the specified attribute.
• One-sample $z$-interval for $p$: $\hat{p} \pm z_{\alpha/2} \cdot \sqrt{\hat{p}(1 - \hat{p})/n}$ (Assumption: both $x$ and $n - x$ are 5 or greater)
• Margin of error for the estimate of $p$: $E = z_{\alpha/2} \cdot \sqrt{\hat{p}(1 - \hat{p})/n}$
• Sample size for estimating $p$: $n = 0.25 \left(\dfrac{z_{\alpha/2}}{E}\right)^2$ or $n = \hat{p}_g (1 - \hat{p}_g) \left(\dfrac{z_{\alpha/2}}{E}\right)^2$, rounded up to the nearest whole number ($g$ = "educated guess")
• One-sample $z$-test statistic for $H_0\colon p = p_0$: $z = \dfrac{\hat{p} - p_0}{\sqrt{p_0 (1 - p_0)/n}}$ (Assumption: both $np_0$ and $n(1 - p_0)$ are 5 or greater)
• Pooled sample proportion: $\hat{p}_p = \dfrac{x_1 + x_2}{n_1 + n_2}$
• Two-sample $z$-test statistic for $H_0\colon p_1 = p_2$: $z = \dfrac{\hat{p}_1 - \hat{p}_2}{\sqrt{\hat{p}_p (1 - \hat{p}_p)} \sqrt{(1/n_1) + (1/n_2)}}$ (Assumptions: independent samples; $x_1$, $n_1 - x_1$, $x_2$, $n_2 - x_2$ are all 5 or greater)
• Two-sample $z$-interval for $p_1 - p_2$: $(\hat{p}_1 - \hat{p}_2) \pm z_{\alpha/2} \cdot \sqrt{\hat{p}_1 (1 - \hat{p}_1)/n_1 + \hat{p}_2 (1 - \hat{p}_2)/n_2}$ (Assumptions: independent samples; $x_1$, $n_1 - x_1$, $x_2$, $n_2 - x_2$ are all 5 or greater)
• Margin of error for the estimate of $p_1 - p_2$: $E = z_{\alpha/2} \cdot \sqrt{\hat{p}_1 (1 - \hat{p}_1)/n_1 + \hat{p}_2 (1 - \hat{p}_2)/n_2}$
• Sample size for estimating $p_1 - p_2$: $n_1 = n_2 = 0.5 \left(\dfrac{z_{\alpha/2}}{E}\right)^2$ or $n_1 = n_2 = \left(\hat{p}_{1g} (1 - \hat{p}_{1g}) + \hat{p}_{2g} (1 - \hat{p}_{2g})\right) \left(\dfrac{z_{\alpha/2}}{E}\right)^2$, rounded up to the nearest whole number ($g$ = "educated guess")

CHAPTER 12 Chi-Square Procedures

• Expected frequencies for a chi-square goodness-of-fit test: $E = np$
• Test statistic for a chi-square goodness-of-fit test: $\chi^2 = \sum (O - E)^2 / E$ with $df = k - 1$, where $k$ is the number of possible values for the variable under consideration.
• Expected frequencies for a chi-square independence test: $E = \dfrac{R \cdot C}{n}$, where $R$ = row total and $C$ = column total.
• Test statistic for a chi-square independence test: $\chi^2 = \sum (O - E)^2 / E$ with $df = (r - 1)(c - 1)$, where $r$ and $c$ are the number of possible values for the two variables under consideration.

CHAPTER 13 Analysis of Variance (ANOVA)

• Notation in one-way ANOVA:
  $k$ = number of populations
  $n$ = total number of observations
  $\bar{x}$ = mean of all $n$ observations
  $n_j$ = size of sample from Population $j$
  $\bar{x}_j$ = mean of sample from Population $j$
  $s_j^2$ = variance of sample from Population $j$
  $T_j$ = sum of sample data from Population $j$
• Defining formulas for sums of squares in one-way ANOVA:
  $SST = \sum (x - \bar{x})^2$
  $SSTR = \sum n_j (\bar{x}_j - \bar{x})^2$
  $SSE = \sum (n_j - 1) s_j^2$
• One-way ANOVA identity: $SST = SSTR + SSE$
• Computing formulas for sums of squares in one-way ANOVA:
  $SST = \sum x^2 - (\sum x)^2/n$
  $SSTR = \sum (T_j^2 / n_j) - (\sum x)^2/n$
  $SSE = SST - SSTR$
• Mean squares in one-way ANOVA: $MSTR = \dfrac{SSTR}{k - 1}$, $MSE = \dfrac{SSE}{n - k}$
• Test statistic for one-way ANOVA (independent samples, normal populations, and equal population standard deviations): $F = \dfrac{MSTR}{MSE}$ with $df = (k - 1, n - k)$.

CHAPTER 14 Inferential Methods in Regression and Correlation

• Population regression equation: $y = \beta_0 + \beta_1 x$
• Standard error of the estimate: $s_e = \sqrt{\dfrac{SSE}{n - 2}}$
• Test statistic for $H_0\colon \beta_1 = 0$: $t = \dfrac{b_1}{s_e / \sqrt{S_{xx}}}$ with $df = n - 2$.
• Confidence interval for $\beta_1$: $b_1 \pm t_{\alpha/2} \cdot \dfrac{s_e}{\sqrt{S_{xx}}}$ with $df = n - 2$.
• Confidence interval for the conditional mean of the response variable corresponding to $x_p$: $\hat{y}_p \pm t_{\alpha/2} \cdot s_e \sqrt{\dfrac{1}{n} + \dfrac{(x_p - \sum x / n)^2}{S_{xx}}}$ with $df = n - 2$.
• Prediction interval for an observed value of the response variable corresponding to $x_p$: $\hat{y}_p \pm t_{\alpha/2} \cdot s_e \sqrt{1 + \dfrac{1}{n} + \dfrac{(x_p - \sum x / n)^2}{S_{xx}}}$ with $df = n - 2$.
• Test statistic for $H_0\colon \rho = 0$: $t = \dfrac{r}{\sqrt{\dfrac{1 - r^2}{n - 2}}}$ with $df = n - 2$.
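
The regression formulas from Chapters 4 and 14 chain together, so a short worked computation may help show how they connect. The sketch below is only an illustration: the function name `regression_summary` and the five data points are made up for this example and do not come from the card. It uses the computing formulas for Sxx, Sxy, and Syy, then derives the slope, intercept, sums of squares, correlation coefficient, standard error of the estimate, and the two t statistics.

```python
import math


def regression_summary(x, y):
    """Apply the card's regression formulas to paired data (illustrative helper)."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)

    # Computing formulas for Sxx, Sxy, and Syy (Chapter 4).
    sxx = sum(v * v for v in x) - sum_x ** 2 / n
    sxy = sum(a * b for a, b in zip(x, y)) - sum_x * sum_y / n
    syy = sum(v * v for v in y) - sum_y ** 2 / n

    # Regression equation y-hat = b0 + b1 * x.
    b1 = sxy / sxx
    b0 = (sum_y - b1 * sum_x) / n

    # Sums of squares; the regression identity SST = SSR + SSE holds by construction.
    sst = syy
    ssr = sxy ** 2 / sxx
    sse = syy - ssr

    # Linear correlation coefficient and coefficient of determination.
    r = sxy / math.sqrt(sxx * syy)
    r_squared = ssr / sst

    # Standard error of the estimate and the t statistics (Chapter 14), df = n - 2.
    se = math.sqrt(sse / (n - 2))
    t_slope = b1 / (se / math.sqrt(sxx))
    t_rho = r / math.sqrt((1 - r ** 2) / (n - 2))

    return {
        "Sxx": sxx, "Sxy": sxy, "Syy": syy,
        "b0": b0, "b1": b1,
        "SST": sst, "SSR": ssr, "SSE": sse,
        "r": r, "r_squared": r_squared,
        "se": se, "t_slope": t_slope, "t_rho": t_rho,
    }


# Made-up sample data, used only to exercise the formulas.
result = regression_summary([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

For simple linear regression, the statistic for testing the slope and the statistic for testing the correlation coefficient come out equal, which gives a quick consistency check on a hand calculation.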